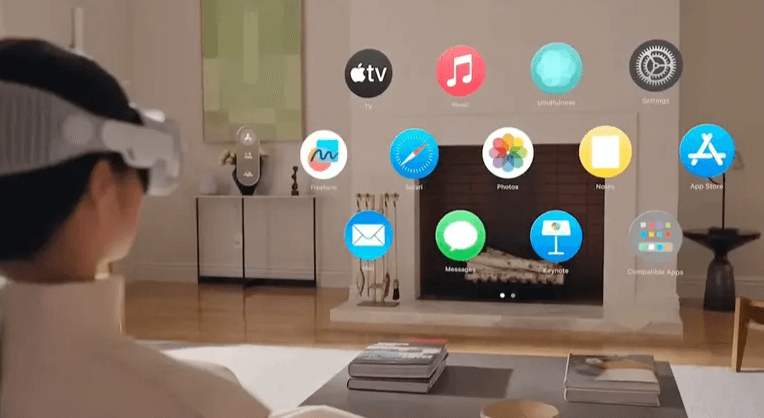

A few years ago, the VR field was developing slowly. Since the release of Apple Vision Pro, however, the industry has changed significantly: the ideas and designs Vision Pro introduced have raised the bar for interaction design across the field.

1. Innovative Breakthroughs in Vision Pro’s Interaction Design

Apple Vision Pro showcases remarkable innovations in interaction design, bringing users an unprecedented experience.

1.1 The Unique Charm of Multi-Interaction Fusion

Apple Vision Pro integrates three interaction methods: eye movements, gestures, and voice commands. Users target an element by looking at it, confirm the selection with a gesture, and carry out more complex actions with voice commands. This integration steepens the learning curve, but once mastered it offers an exceptional operating experience. For example, when browsing applications, users only need to gaze at an app, which responds to the gaze, and then select it with a simple gesture. Voice control adds further convenience: users can issue voice commands or use speech-to-text through a microphone button.
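The division of labor described above (gaze targets, gesture confirms, voice acts) can be sketched as a small event handler. This is only an illustrative model, not Apple's implementation: the `InteractionSession` class, its method names, and the `"Photos"` element are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InteractionSession:
    """Toy model of gaze-to-target, pinch-to-select, voice-to-act (hypothetical)."""
    focused: Optional[str] = None   # element currently under the user's gaze
    selected: Optional[str] = None  # element confirmed by a pinch
    log: list = field(default_factory=list)

    def on_gaze(self, element: Optional[str]) -> None:
        # Gaze only moves focus (the highlight); it never activates anything.
        self.focused = element
        if element is not None:
            self.log.append(f"highlight {element}")

    def on_pinch(self) -> None:
        # A pinch confirms whatever is focused; with no focus it is ignored.
        if self.focused is not None:
            self.selected = self.focused
            self.log.append(f"select {self.selected}")

    def on_voice(self, command: str) -> None:
        # A voice command acts on the current selection, if there is one.
        if self.selected is not None:
            self.log.append(f"{command} {self.selected}")


session = InteractionSession()
session.on_pinch()           # ignored: nothing is focused yet
session.on_gaze("Photos")
session.on_pinch()
session.on_voice("open")
print(session.log)  # ['highlight Photos', 'select Photos', 'open Photos']
```

Keeping gaze passive in this sketch reflects the point made above: looking at something should never trigger it by accident, so activation is left to the gesture.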

1.2 Beyond Traditional Interaction Experiences

Compared with mainstream headsets, Vision Pro has a significant advantage in interaction design. First, it needs no handheld controllers and relies entirely on eyes, hands, and voice, which makes it more ergonomic. Mainstream headsets usually use handheld controllers to flatten the learning curve, whereas Vision Pro requires users to learn gesture controls. The initial adaptation cost is higher, but the result is a more efficient and smoother operating experience.

Additionally, Vision Pro is equipped with six cameras that serve SLAM and gesture tracking. Two of them face downward, specifically to capture hands resting on the user's legs, and another two are angled downward to handle both SLAM and gestures. Two infrared LEDs improve gesture recognition in low-light environments. With this design, users do not have to keep raising their hands in front of the body, so interaction feels more natural and causes less fatigue.

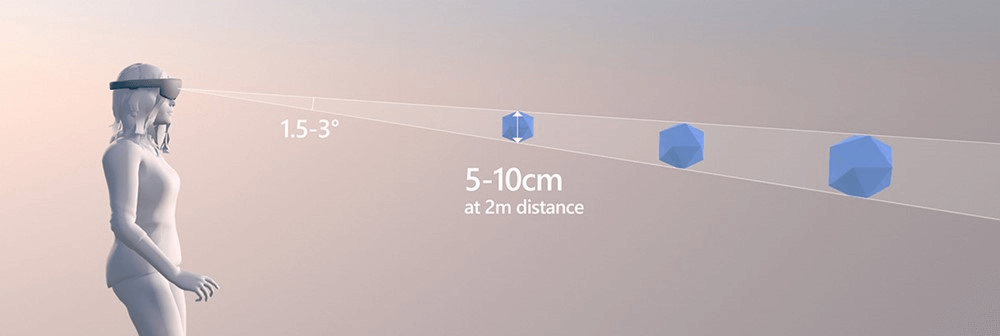

Moreover, eye tracking on Vision Pro works very well. Users only need to gaze at a menu item or button, and the system immediately highlights it. Combined with gesture control, this speeds up operations. For example, in AR menu activation and selection tests, gaze ray + pinch took 2.5 seconds while gesture ray took 4.6 seconds, and testers preferred gaze ray + pinch. In short, the interaction design of Apple Vision Pro goes beyond traditional systems and offers a genuinely new user experience.

2. Advantages of Specific Interaction Methods

2.1 Precision and Convenience of Gesture Interaction

Gesture interaction on Apple Vision Pro is both precise and convenient. Of the headset's six SLAM and gesture cameras, two face downward to capture hands resting naturally on the legs, and two are angled downward to handle both SLAM and gestures. This camera configuration significantly expands the range of gesture control. In traditional VR systems, gesture tracking is only supplementary and requires users to hold their hands within the cameras' field of view, which often leads to arm fatigue. Vision Pro, by contrast, can track most gestures with the hands resting naturally on the legs or a desk, which is more ergonomic.

Typing is an exception. To type with direct gestures, users typically have to raise their hands into the headset's field of view. Although the cameras have a wide capture range, frequent use of direct gestures can cause fatigue. Vision Pro therefore offers alternatives for users who want more choices: connecting an iPhone or a Bluetooth keyboard, using the virtual keyboard, or dictating with speech-to-text.

2.2 Efficiency and Naturalness of Eye Tracking

Apple Vision Pro puts eye tracking to efficient use. Much of visionOS's underlying interaction logic is built around 2D windows, so tasks such as text selection and menu navigation can be completed with eye movements combined with gestures. Whereas mainstream VR devices focus more on VR application scenarios, Vision Pro emphasizes integrating eye tracking with gesture control, which makes everyday operations more efficient and natural.

In AR menu activation and selection tests, gaze ray + pinch took 2.5 seconds while gesture ray took 4.6 seconds, and users preferred gaze ray + pinch. This suggests that combining eye tracking with gesture interaction gives Vision Pro users a faster, more efficient experience. Although the gaze ray itself is hidden, Apple uses highlighting and other UI cues to show where the user is looking, so users still perceive the effect of eye tracking.
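To put the two timings quoted above in perspective, the short calculation below derives the relative speedup and the share of time saved. Only the 2.5 s and 4.6 s figures come from the text; the variable names are illustrative.

```python
gaze_pinch_s = 2.5   # gaze ray + pinch, from the test cited above
gesture_ray_s = 4.6  # gesture ray, from the same test

# How many times faster gaze ray + pinch is than gesture ray.
speedup = gesture_ray_s / gaze_pinch_s

# Share of the gesture-ray time that gaze ray + pinch saves.
time_saved_pct = (gesture_ray_s - gaze_pinch_s) / gesture_ray_s * 100

print(f"speedup: {speedup:.2f}x")            # speedup: 1.84x
print(f"time saved: {time_saved_pct:.1f}%")  # time saved: 45.7%
```

In other words, the gaze-based method completed the task in a little over half the time of the gesture ray.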

2.3 The Supporting Role of Voice Interaction

Voice interaction plays an important supporting role in Apple Vision Pro. With voice commands, users can perform gestures, interact with interface elements, and dictate or edit text.

Setting up Voice Control is also easy. Before using “Voice Control” for the first time, make sure Vision Pro is connected to the internet via Wi-Fi. Once a one-time file download completes, “Voice Control” can be used without an internet connection. In text input areas, users can switch between dictation, spelling, and command modes as needed. Command mode is especially useful when users need to issue a series of commands without unintentionally entering text into the input field.
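The difference between the three modes can be illustrated with a small routing sketch. It is a simplified model written for this article, not Apple's API: the `VoiceInput` class, the command vocabulary, and the mode-switch phrases are all assumptions made for illustration.

```python
from enum import Enum


class Mode(Enum):
    DICTATION = "dictation"
    SPELLING = "spelling"
    COMMAND = "command"


# Hypothetical command vocabulary and switch phrases, for illustration only.
COMMANDS = {"delete that", "select all", "go home"}
MODE_SWITCHES = {
    "dictation mode": Mode.DICTATION,
    "spelling mode": Mode.SPELLING,
    "command mode": Mode.COMMAND,
}


class VoiceInput:
    def __init__(self) -> None:
        self.mode = Mode.DICTATION
        self.text = ""      # contents of the text field
        self.executed = []  # commands that were carried out

    def hear(self, utterance: str) -> None:
        phrase = utterance.strip().lower()
        if phrase in MODE_SWITCHES:
            self.mode = MODE_SWITCHES[phrase]
        elif phrase in COMMANDS:
            self.executed.append(phrase)
        elif self.mode is Mode.DICTATION:
            # Dictation mode: non-command speech is entered as text.
            self.text += utterance.strip() + " "
        elif self.mode is Mode.SPELLING:
            # Spelling mode: keep the first character of each spoken token.
            self.text += "".join(tok[0] for tok in phrase.split())
        # Command mode: anything that is not a command is ignored.


voice = VoiceInput()
voice.hear("Hello team")
voice.hear("command mode")
voice.hear("select all")
voice.hear("this is not typed")
print(repr(voice.text), voice.executed)  # 'Hello team ' ['select all']
```

The last utterance shows why command mode is useful: stray speech between commands never reaches the text field.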

Moreover, voice interaction can be combined with gesture controls and eye tracking, providing users with a more convenient operation experience. For example, users can open an app via voice command and then use eye tracking and gesture controls for specific operations. In short, voice interaction in Vision Pro is a powerful complement to the overall interaction experience.

3. Challenges and Future Prospects of Interaction Design

3.1 Current Shortcomings in Interaction Design

Although the interaction design of Apple Vision Pro brings many innovations, it still has some shortcomings. First, the "void tapping" input method, in which users tap keys in mid-air, makes text input difficult. For a computing device aimed at office environments, relying on finger tapping and voice input for text entry is relatively inefficient and becomes cumbersome for long-form content. This is a significant inconvenience for users who enter text frequently.

Battery life is another noticeable issue. Vision Pro’s battery life is relatively short, so the device needs frequent recharging, which limits usage time. For instance, WSJ reporters noted that they had to recharge the device after just 2 to 3 hours of use, roughly the length of a single movie. Frequent recharging interrupts the experience and may inconvenience users who wish to use Vision Pro for extended periods.

3.2 Future Development Trends in Interaction Design

Looking ahead, the interaction design of Vision Pro has considerable room to develop. One promising direction is the use of smart bands or rings for haptic feedback. The lack of tactile feedback in virtual interactions remains a major issue, and smart bands or rings could provide real physical sensations, such as vibration, that increase user satisfaction, improve input efficiency, and reduce cognitive load.

For example, Meta Reality Labs has explored providing tactile feedback via gloves or wristbands during virtual keyboard input, demonstrating that vibration feedback based on finger movement can enhance user satisfaction and efficiency. While most users may not be willing to wear gloves for XR devices, smart wristbands (or straps integrated into smartwatches) are likely to be more acceptable to ordinary consumers.

With ongoing advancements in technology, we can expect the interaction design of Vision Pro to become more intelligent and personalized. Using AI, Vision Pro could better understand users’ intentions and habits, offering more precise interaction experiences. Moreover, with the continued development of wearable devices, more devices may seamlessly connect with Vision Pro, providing users with a richer interaction experience.

In conclusion, while Apple Vision Pro's interaction design faces some challenges, it has a promising future. We can expect it to continuously innovate and improve, delivering even better experiences for users.

4. How to Design Products or Interactions

4.1 User-Centered Design

When designing Apple Vision Pro or similar interactive products, user needs should always be the focus. It is important to understand what users need in different contexts, such as office work, entertainment, and learning, and to tailor the interaction design accordingly. In office scenarios, improving text input efficiency should be a priority, possibly by optimizing the virtual keyboard or improving connections with external devices such as physical keyboards. For entertainment, the focus should be on making interaction fun and smooth, so that users can easily enjoy different types of content.

4.2 Integration of Multiple Technologies

Interaction design should integrate multiple technologies to provide richer experiences. For instance, the combination of gesture control, eye tracking, and voice interaction can be enhanced with additional sensors, such as accelerometers and gyroscopes, for more precise motion tracking and smoother operation. Some high-end wearable devices already apply these technologies successfully to improve user experience.
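One common way to combine an accelerometer with a gyroscope is a complementary filter, sketched below for a single pitch angle. The text does not say which fusion method any particular device uses, so this is only an example of the general idea; the function names, the 0.98 blend factor, and the simulated sensor values are assumptions.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (radians) implied by the gravity vector in an accelerometer reading."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """Blend the integrated gyro rate (smooth but drifting) with the
    accelerometer pitch (noisy but drift-free)."""
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)


# Simulated stationary, level device whose gyro has a small constant bias.
dt = 0.01                 # 100 Hz sample period (assumed)
gyro_bias = 0.01          # rad/s of spurious rotation (assumed)
accel = (0.0, 0.0, 9.81)  # gravity only, so the true pitch is 0

drifted = 0.0  # gyro integration alone
fused = 0.0    # complementary filter
for _ in range(1000):  # 10 seconds of samples
    drifted += gyro_bias * dt
    fused = complementary_filter(fused, gyro_bias, accel, dt)

print(f"gyro only: {drifted:.4f} rad, fused: {fused:.4f} rad")
```

In this simulation the gyro-only estimate drifts to about 0.1 rad after ten seconds, while the fused estimate stays below 0.01 rad because the accelerometer keeps pulling it back toward the true angle.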

4.3 Attention to User Experience Details

Details determine success, and interaction design should attend to the details of user experience. For example, it is crucial to optimize feedback mechanisms so that users receive clear and timely responses during operations. Vision Pro’s use of highlighting as a UI cue is worth emulating, and visual, auditory, and haptic feedback deserve further exploration. It is also essential to consider user experience in different environments, for example by adding infrared LEDs so that gesture recognition and eye tracking stay accurate in low-light conditions.

4.4 Continuous Innovation and Improvement

Interaction design is an evolving field that requires ongoing innovation and refinement. Staying current with industry trends and changes in user demand is essential, and products should be upgraded and optimized regularly based on user feedback and market research. Embracing new technologies and design concepts can offer users surprising and innovative experiences. For example, as smart materials develop, we may see interfaces that automatically transform according to users' gestures and eye movements, making interaction even more natural and convenient.