For the first time in history, a company held a continuous 12-day product release event. When OpenAI announced this decision, the global tech community was filled with high expectations. But as the event neared its end, an AI practitioner expressed their thoughts: "Is this it? Is this really it?"

This seemed to represent a mainstream view: the OpenAI launch event had little to highlight and fell below expectations.

The first eleven days covered important updates on technology, product forms, business models, and the industry ecosystem, including the full version of the o1 reasoning model, reinforcement fine-tuning, the text-to-video model Sora, Canvas (a stronger writing and programming tool), deep integration with the Apple ecosystem, voice and vision capabilities, the Projects feature, ChatGPT search, calling ChatGPT by phone, chatting with it on WhatsApp, and more.

Still, the AI practitioner quoted above put the disappointment plainly: “I thought GPT-5 would be released.” The day after the event, it was reported that OpenAI’s development of GPT-5 was facing obstacles.

But o3, released on the final day, was an exception. It is the successor to o1, a next-generation reasoning model that showed remarkable performance on math, coding, physics, and other tests. A technology expert from a domestic (Chinese) large-model company, describing the shock o3 delivered, said, “AGI is here.” Other technical professionals were similarly full of praise.

Looking back on these 12 days, OpenAI showed off its technical "muscle" while continuously optimizing product forms and expanding the scope of real-world applications. Some joked that it felt like a "live-stream sales pitch," as OpenAI aimed to attract more users and developers to use ChatGPT. In the new year, OpenAI may see a leap in daily active users, revenue, and other data.

However, this process may not be smooth. Despite the stronger model capabilities, there remains a significant gap between powerful models and real-world deployment, due to constraints such as limited data, the difficulty of packaging models into usable products, and high model costs.

The OpenAI event seemed to reveal a trend: the competition in the large-model industry is no longer just about model parameters and technological limits, but also about user experience and market scale. Both must progress together to maintain a lead.

After reviewing the main announcements from OpenAI's 12-day event and speaking with professionals in the domestic large-model sector, GeekPark identified the following key takeaways.

1. o3’s Intelligence Depth is Sufficient, But Whether It Can Be Called AGI Depends on Intelligence Breadth

"Crazy, too crazy." This was the first reaction from a leader of a domestic model company upon seeing o3.

In areas such as math, coding, and PhD-level scientific Q&A, o3 outperformed some human experts. For example, on GPQA Diamond, a benchmark of biology, physics, and chemistry questions, o3 achieved an accuracy of 87.7%, while PhD experts in these fields reach only about 70%. On the American AIME math competition, o3 scored 96.7%, missing only one question, a level comparable to that of top human competitors.

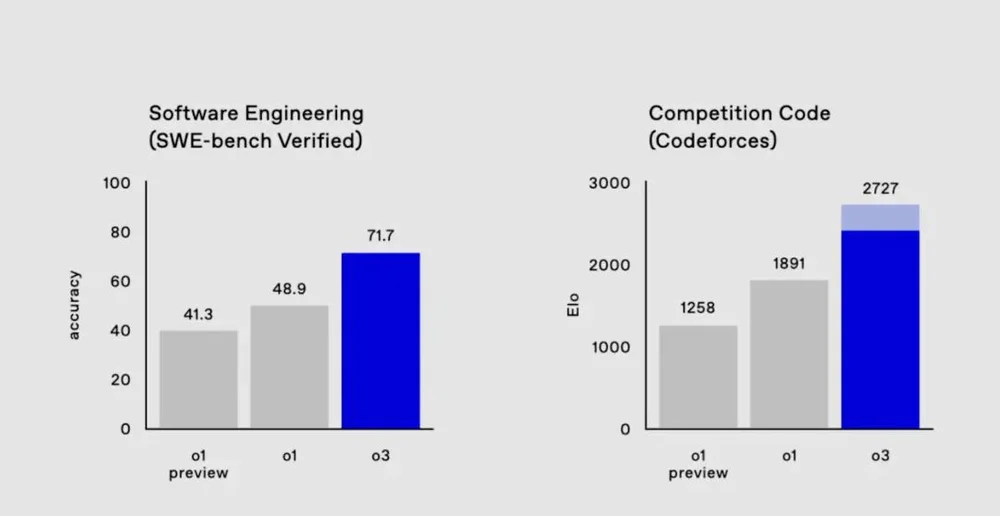

Its coding ability has also sparked widespread discussion. On Codeforces, the world’s largest algorithm practice and competition platform, o3 reached a rating of 2727, an improvement of over 800 points from o1 and equivalent to roughly 175th place among human contestants. It even surpassed OpenAI's Senior Vice President of Research, Mark Chen, whose rating is 2500.

Comparison of coding capabilities among o1-preview, o1, and o3

Since the release of o1-preview in September, the o1 series has made remarkable advances in reasoning. The full version of o1, launched on the first day of the event, thinks about 50% faster than o1-preview, makes 34% fewer major errors on difficult real-world problems, and supports multimodal input (image recognition). o3 now surpasses some human experts on complex tasks.

"From o1 to o3, the improvement in model capabilities was achieved by increasing the inference computing capacity. With the release of Deepseek-R1, Gemini 2.0 Flash Thinking, and others, it shows that large models are shifting from pre-training Scaling Law to inference Scaling Law," said Liu Zhiyuan, a tenured associate professor at Tsinghua University and founder of Mianbi Intelligence.

The technical paradigm for large models has shifted from the original pre-training Scaling Law, which raised the ceiling of intelligence by expanding model parameters, to the inference Scaling Law, which uses reinforcement learning and additional compute at inference time to improve complex reasoning.

In the previous paradigm, models primarily produced answers through next-token prediction, a form of "fast thinking." In the new paradigm, models engage in "slow thinking" by using Chain-of-Thought (CoT), breaking a complex problem into simpler steps before producing a result. When one method doesn’t work, the model tries another, and reinforcement learning strengthens this complex reasoning ability. The longer the model is allowed to "slow think" and refine its reasoning, the better its results become; this is the inference Scaling Law.
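The "try one method, check it, then try another" loop can be pictured with a toy sketch. This is only an illustration of search with verification on a trivial problem (finding an integer square root); it is not how o1 or o3 is implemented, and every name in it (`guess_half`, `linear_search`, `verify`, `solve`) is hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# A strategy takes the problem and returns (reasoning steps, proposed answer).
Strategy = Callable[[int], Tuple[List[str], Optional[int]]]


def guess_half(n: int) -> Tuple[List[str], Optional[int]]:
    """A deliberately weak "fast" strategy: guess n // 2 in a single step."""
    return [f"guess {n} // 2 = {n // 2}"], n // 2


def linear_search(n: int) -> Tuple[List[str], Optional[int]]:
    """A slower strategy: test candidates one by one, recording each step."""
    steps: List[str] = []
    for r in range(n + 1):
        steps.append(f"try {r}: {r} * {r} = {r * r}")
        if r * r == n:
            return steps, r
        if r * r > n:
            break  # overshot, so no integer root exists
    return steps, None


def verify(n: int, answer: Optional[int]) -> bool:
    """Check a proposed answer independently of how it was produced."""
    return answer is not None and answer * answer == n


def solve(n: int, strategies: List[Strategy]) -> Tuple[Optional[int], List[str]]:
    """Run strategies in order; fall back to the next one when a check fails."""
    trace: List[str] = []
    for strategy in strategies:
        steps, answer = strategy(n)
        trace.extend(steps)
        if verify(n, answer):
            return answer, trace
        trace.append(f"{strategy.__name__} failed, trying another method")
    return None, trace


if __name__ == "__main__":
    answer, trace = solve(49, [guess_half, linear_search])
    print("answer:", answer)
    print("steps taken:", len(trace))
```

The point of the sketch is the trade-off: the fallback strategy spends many more steps than the one-shot guess, but it is the one that passes verification, which is the intuition behind spending more compute at inference time.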

For Liu Zhiyuan, the impressive research reasoning ability of o3 indicates that it is moving toward becoming a “super-intelligent supercomputer.”

Many industry professionals believe this will have a profound impact on cutting-edge scientific fields. On the positive side, o3’s strong research reasoning ability could accelerate human progress in fundamental scientific research in fields such as mathematics, physics, biology, and chemistry. However, some worry that it could disrupt the work of researchers.

The astonishing depth of intelligence shown by o3 seems to signal the dawn of AGI. However, Liu Zhiyuan believes that, just as the hallmark of the information revolution was not the arrival of mainframes but the spread of personal computers (PCs), true AGI will only be realized when it becomes democratized and accessible, with everyone having their own large model to solve everyday problems.

"After all, we don’t need Terence Tao or Hinton (both top scientists) to solve our daily problems," he said.

The critical question is whether o3's depth of intelligence generalizes across fields to provide sufficient breadth. According to a technology expert from a domestic large-model company, a model can only be called AGI once it breaks through in both depth and breadth. He is optimistic, saying, "It's like a new transfer student who hasn’t interacted with the class yet but scored the highest in math and programming. Do you think they would be bad at Chinese and English?"

For domestic large-model companies, the key issue is how to catch up with o3. From aspects such as training architecture, data, training methods, and evaluation datasets, this seems to be an engineering problem that can be solved.

“How far do you think we are from having an open-source model at the o3 level?”

“One year,” responded the model leader.

2. Models Are Just Engines; the Key Is Helping Developers Use Them

Although o3’s model capabilities are strong, some application-level professionals believe that there is still a significant gap between models and real-world applications. “Today OpenAI trained Einstein, but if it wants to become the chief scientist of a listed company, there's still a distance,” said Zhou Jian, founder and CEO of Lanma Technology.

As an intermediary layer for large models, Lanma Technology is one of the early companies in China to explore the application of large models and build AI agents. Zhou Jian believes that large models are infrastructure that needs to be adapted to specific scenarios to be usable, and the biggest bottleneck currently is data.

In many scenarios, complete data is hard to obtain, and much of it has not even been digitized. Headhunters, for example, need resume data, but many resumes have never been digitized.

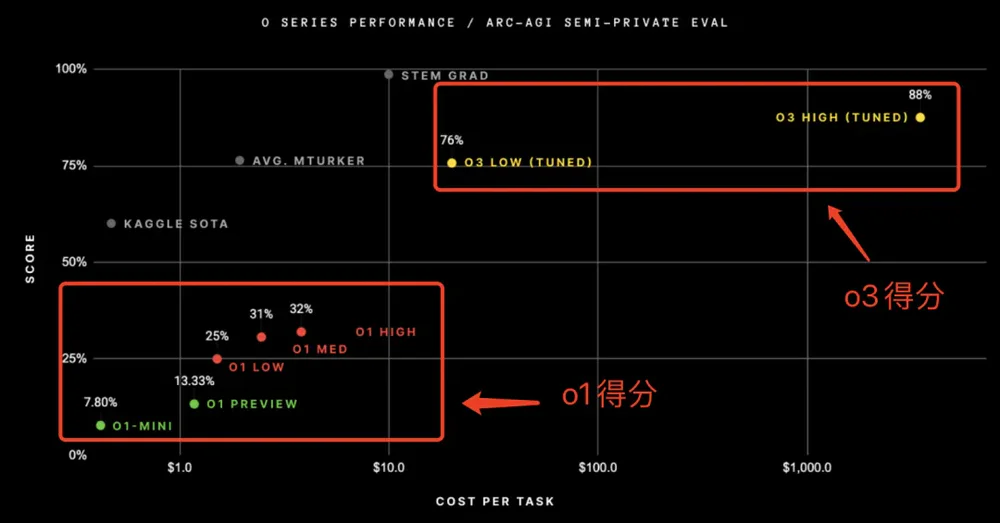

Cost is the most critical factor affecting the deployment of the o-series models. According to figures from the ARC-AGI benchmark, o3-low (low-compute mode) costs about $20 per task, while o3-high (high-compute mode) can cost thousands of dollars per task; in the extreme, even asking a simple question can cost nearly $20,000. At these prices the benefits do not offset the costs, so broad deployment of o3 may take a long time.
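To make the cost argument concrete, here is a rough back-of-the-envelope sketch in Python. Only the $20-per-task figure for o3-low comes from the text above; the function names, the workload size, and the per-task business value are hypothetical and chosen purely for illustration.

```python
# Back-of-the-envelope cost check for running a reasoning model on a workload.

O3_LOW_COST_PER_TASK_USD = 20.0  # per-task figure quoted in the article for o3-low


def batch_cost(cost_per_task_usd: float, num_tasks: int) -> float:
    """Total cost in USD of running num_tasks tasks at a flat per-task price."""
    if cost_per_task_usd < 0 or num_tasks < 0:
        raise ValueError("cost and task count must be non-negative")
    return cost_per_task_usd * num_tasks


def worth_deploying(value_per_task_usd: float, cost_per_task_usd: float) -> bool:
    """True only if the value created per task exceeds what the task costs."""
    return value_per_task_usd > cost_per_task_usd


if __name__ == "__main__":
    # Hypothetical workload: 1,000 tasks per day, each worth $5 to the business.
    daily = batch_cost(O3_LOW_COST_PER_TASK_USD, 1_000)
    print(f"daily cost at o3-low pricing: ${daily:,.0f}")
    print("worth deploying:", worth_deploying(5.0, O3_LOW_COST_PER_TASK_USD))
```

Under these assumed numbers the per-task cost is several times the per-task value, which is the sense in which the benefits fail to offset the costs.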

OpenAI also announced measures to help with model deployment. For example, on the second day it released Reinforcement Fine-Tuning for developers, a feature of particular interest to Zhou Jian. It lets developers improve a model's reasoning and performance on specific tasks using small amounts of data.

It is particularly useful for applications in specialized fields such as law, finance, engineering, and insurance. For example, Thomson Reuters recently used reinforcement fine-tuning to improve o1-mini, resulting in a useful AI legal assistant to help their legal professionals with "the most analytical workflows."

For developers with limited budgets, OpenAI brought GPT-4o mini to its Realtime API, offering audio at a quarter of the cost of GPT-4o.

Zhou Jian is confident about the new year’s application business, saying that once OpenAI releases some leading technologies, domestic companies typically catch up within 6-12 months.

3. Sora’s Video Generation Was Below Expectations, but Product Openness Will Improve Its Physical Simulation Capabilities

When OpenAI released the demo of Sora earlier this year, it stunned the global tech community. However, throughout the year, domestic AI companies actively competed in the text-to-video field. By the time OpenAI officially launched Sora on the third day of its conference, many Chinese text-to-video companies felt relieved.

“There’s nothing significantly beyond expectations in terms of realism or physical characteristics compared to the February release,” said Tang Jiayu, co-founder and CEO of LifeData Technology (Shengshu Technology), to GeekPark. “From the perspective of foundational model capabilities, it falls below expectations.”

Currently, companies like ByteDance, Kuaishou, MiniMax, Zhipu, LifeData, and AiShi have launched their own text-to-video products. “Sora’s effects and overall capabilities don’t show any clear leading advantage. It’s evident that we’re keeping pace with OpenAI,” Tang added.

In Tang’s view, Sora’s most notable features lie in its editing tools that enhance the video creation experience, beyond basic text-to-video or image-to-video capabilities. These tools suggest OpenAI is placing greater emphasis on product experience.

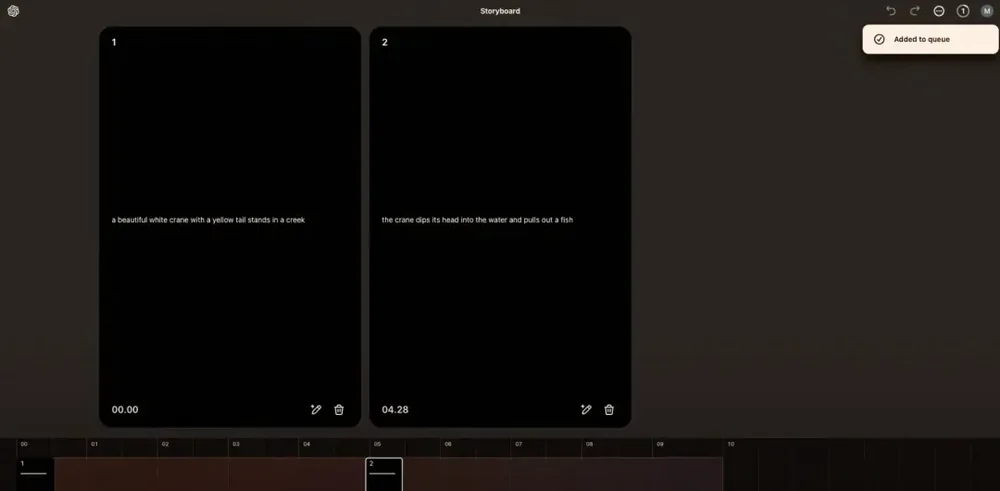

For instance, Sora includes a storyboard function. This tool organizes a story (video) into multiple distinct story cards (video frames) along a timeline. Users can design and adjust each card, and Sora automatically transforms them into a cohesive video—similar to film storyboards or animation drafts. Directors sketching storyboards or animators drafting their ideas can now turn them into finished productions with ease. This empowers creators to better express their vision.

Additionally, Sora offers features like text-based video editing, seamlessly merging different videos, and altering video styles—essentially adding “special effects” to videos. Conventional text-to-video products can’t directly modify the original video; they require users to continuously adjust prompts to generate new outputs.

Sora's Storyboard Function | Image Source: OpenAI

Tang believes these feature designs aim to give creators greater creative freedom. Similar features are already in the development pipeline for Vidu, LifeData Technology’s text-to-video product. “Implementing Sora’s functionalities isn’t challenging for us, and the paths to achieve them are already clear,” he noted.

During the conference, Sam Altman elaborated on the rationale behind developing Sora: (1) its utility as a creative tool, (2) expanding AI interactions beyond text to multimodal engagement, and (3) its alignment with OpenAI’s vision for AGI. Sora aims to learn more about the world’s laws and could eventually help build a “world model” that understands physical principles.

However, Tang pointed out that Sora’s videos still display many obvious violations of physical laws, with little improvement since February’s demo. He believes the release of Sora will invite more users to test and explore its physical simulation capabilities, providing valuable data for its enhancement.

4. Adding Features and Building Ecosystems: Can ChatGPT Become a Super App?

Beyond the o-series models, Sora, and developer services, OpenAI’s primary focus during the conference was on continuously adding new features to optimize user experience and deepening collaborations with companies like Apple. These efforts explore the integration of AI into terminal devices and operating systems.

From its feature updates, ChatGPT seems to be evolving into an “all-knowing, omnipresent, universally accessible” AI assistant. GeekPark has learned that OpenAI’s initial vision was to create an “omnipotent” agent capable of understanding human instructions, autonomously utilizing tools, and fulfilling user needs. In a way, the goal remains unchanged.

For instance, on Day 6, ChatGPT introduced video calling with screen sharing and a Santa Claus voice mode. With video calling, users can share their screens or surroundings in real time, a form of multimodal interaction reminiscent of the movie Her.

On Day 8, ChatGPT made its search functionality available to all users. Beyond basic search, it introduced voice search and integrated with mapping services like Apple Maps and Google Maps. Users can view search result lists and check information like stock prices, sports scores, and weather forecasts, supported by partnerships with top news and data providers.

On Day 11, ChatGPT expanded its integration with desktop software, connecting to more coding applications such as BBEdit, MATLAB, and Nova. It also works with apps like Warp (a terminal app) and Xcode, and supports voice-mode integration with tools like Notion and Apple Notes.

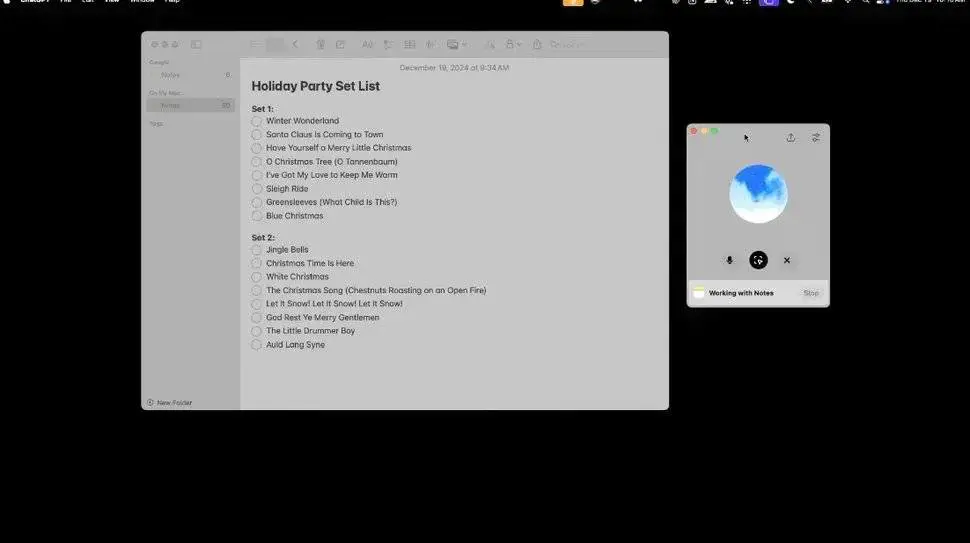

In one live demo, a user set up a “holiday party playlist” in Apple Notes and asked ChatGPT for suggestions via voice. ChatGPT corrected a typo where the user mistakenly listed the Christmas song “Frosty the Snowman” as “Freezy the Snowman.”

“ChatGPT is transforming from a mere conversational assistant into a more powerful agent tool,” said Kevin Weil, OpenAI’s Chief Product Officer.

Simultaneously, OpenAI is expanding its ecosystem by embedding AI into widely used terminal devices, operating systems, and software platforms to reach more people.

For instance, on Day 5, OpenAI announced ChatGPT’s integration with Apple Intelligence, bringing AI capabilities to iOS, macOS, and iPadOS. Users can now invoke AI features across platforms and applications, including Siri interaction, writing tools, and visual intelligence for scene recognition. This partnership allows ChatGPT to reach billions of Apple users globally, setting a precedent for collaboration between large models and terminal devices or operating systems.

On Day 10, ChatGPT launched its telephone contact service (1-800-242-8478), allowing U.S. users 15 minutes of free calls per month. It also launched a WhatsApp contact, enabling global users to send text messages to the same number.

In regions where smartphone and mobile internet penetration remains low, the telephone service lets ChatGPT reach people it otherwise could not. Through WhatsApp, it taps into that platform’s nearly 3 billion users worldwide.

Whether by adding internal features or expanding its ecosystem, ChatGPT’s goal is to become a true Super App. However, some critics are skeptical about this approach of continuously adding features and extending business lines, describing it as “spreading the dough too thin.” They argue that many features require depth to create value, which may pose challenges for OpenAI.

While the o3 model showcases OpenAI’s astonishing technical capabilities, questions remain about the upper limits of its reasoning scaling law and the development delays of GPT-5. By focusing on product forms, ecosystem collaborations, and real-world applications during this conference, OpenAI seems to be exploring a pragmatic path forward. This combination of technical innovation and practical application could shape the industry’s next trajectory.
