This article introduces 14 commonly used machine learning algorithms, with a code example for each to help readers understand and apply them. Machine learning, a major branch of artificial intelligence, is now applied in a wide range of fields, and each algorithm, from simple linear regression to complex ensemble methods, suits particular kinds of problems. The examples are written in Python with scikit-learn, NumPy, and Matplotlib; the XGBoost, LightGBM, and CatBoost examples also need the xgboost, lightgbm, and catboost packages.

1. Linear Regression

Linear regression is a method for predicting continuous values, such as predicting house prices based on the area.

Code Example:

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt

# Create a toy dataset: X is the area, y is the price (small illustrative values)
X = np.array([[1], [2], [3], [4], [5], [6]])
y = np.array([2, 4, 5, 4, 5, 7])

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model
model = LinearRegression()
model.fit(X_train, y_train)

# Predict on the held-out test points
predictions = model.predict(X_test)
print("Test predictions:", predictions)

# Visualize the data and the fitted line
plt.scatter(X, y, color='blue', label='Actual')
plt.plot(X, model.predict(X), color='red', label='Predicted')
plt.xlabel('Area (sq ft)')
plt.ylabel('Price ($)')
plt.legend()
plt.show()
```

This code demonstrates how to use the LinearRegression class to create a model. It fits the straight line that minimizes the sum of squared differences between predicted and actual values (ordinary least squares), which is the line that best approximates the relationship between the variables.
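To see what LinearRegression computes, the sketch below solves the same least-squares problem directly with NumPy. It is a minimal illustration, not scikit-learn's implementation: the helper name `fit_ols` is hypothetical, and it fits all six points rather than the training split used above, so its coefficients differ from the model's.

```python
import numpy as np


def fit_ols(x, y):
    """Return (slope, intercept) that minimize the sum of squared residuals."""
    # Design matrix: one column for x and a column of ones for the intercept
    A = np.column_stack([x, np.ones_like(x)])
    # lstsq solves min ||A @ [slope, intercept] - y||^2
    (slope, intercept), *_ = np.linalg.lstsq(A, y, rcond=None)
    return slope, intercept


x = np.array([1, 2, 3, 4, 5, 6], dtype=float)
y = np.array([2, 4, 5, 4, 5, 7], dtype=float)
slope, intercept = fit_ols(x, y)
print(f"slope={slope:.4f}, intercept={intercept:.4f}")  # slope=0.7714, intercept=1.8000
```

For a single feature this agrees with the textbook formula: slope = cov(x, y) / var(x), and the intercept makes the line pass through the point of means.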

2. Logistic Regression

Logistic regression is used for classification tasks, such as determining whether an email is spam. It also handles problems with more than two classes, such as the three iris species in the example below.

Code Example:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model; a higher iteration limit helps the default
# lbfgs solver converge on these unscaled features
model = LogisticRegression(max_iter=200)
model.fit(X_train, y_train)

# Predict and evaluate accuracy
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

This shows how to use the LogisticRegression class to classify data, with accuracy_score measuring the fraction of test samples the model labels correctly.

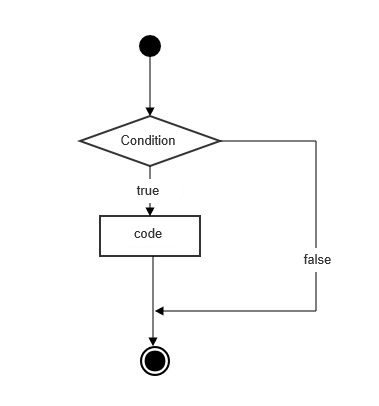
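Under the hood, logistic regression passes a linear score through the sigmoid function to get a probability and adjusts the weights to minimize log-loss. The sketch below shows the binary case with plain batch gradient descent on a small, hypothetical one-feature dataset centered at zero; the function names are illustrative. It is not how scikit-learn fits the model: LogisticRegression uses other solvers (lbfgs by default) and applies L2 regularization, which this sketch omits.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights w and bias b by batch gradient descent on the mean log-loss."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)            # predicted probability of class 1
        grad_w = X.T @ (p - y) / len(y)   # gradient of the mean log-loss w.r.t. w
        grad_b = np.mean(p - y)           # gradient of the mean log-loss w.r.t. b
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical 1-D data: negative values are class 0, positive values are class 1
X = np.array([[-2.0], [-1.5], [-1.0], [1.0], [1.5], [2.0]])
y = np.array([0, 0, 0, 1, 1, 1])
w, b = train_logistic(X, y)
probs = sigmoid(X @ w + b)
preds = (probs >= 0.5).astype(int)
print("w =", w, "b =", b, "predictions:", preds)
```

Thresholding the probability at 0.5 turns it into a class label, which is what `predict` does for the binary case.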

3. Decision Trees

Decision trees can solve both classification and regression problems, such as deciding whether to approve a loan.

Code Example:

```python
from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model (random_state makes the result reproducible)
model = DecisionTreeClassifier(random_state=42)
model.fit(X_train, y_train)

# Predict and evaluate accuracy
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

This code uses DecisionTreeClassifier to learn a tree of if/else rules. At each node it splits the data on the feature threshold that best separates the classes (by default, the split that most reduces Gini impurity), and it classifies a new sample by following those rules down to a leaf.
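A decision tree is grown by repeatedly choosing the split that makes the child nodes as pure as possible. The sketch below shows that core step for a single feature, scoring candidate thresholds by weighted Gini impurity. The helper names, feature values, and labels are hypothetical, and unlike scikit-learn, which places thresholds midway between adjacent sorted values, this sketch tests the observed values themselves.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted Gini) of the best split `value <= threshold`."""
    n = len(labels)
    best = (None, float("inf"))
    # Every observed value except the largest is a candidate threshold
    for t in sorted(set(values))[:-1]:
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if score < best[1]:
            best = (t, score)
    return best


# Hypothetical feature values (e.g. petal length in cm) and class labels
values = [1.4, 1.3, 1.5, 4.5, 4.7, 4.1]
labels = ["setosa", "setosa", "setosa", "versicolor", "versicolor", "versicolor"]
print(best_threshold(values, labels))  # (1.5, 0.0)
```

A full tree applies this search to every feature at every node and recurses on the two children until a stopping rule (such as pure leaves or a depth limit) is met.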

4. Support Vector Machines (SVM)

SVM is used for both classification and regression, for example, recognizing handwritten digits.

Code Example:

```python
from sklearn.svm import SVC
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_digits

# Load dataset
data = load_digits()
X = data.data
y = data.target

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model
model = SVC()
model.fit(X_train, y_train)

# Predict and evaluate accuracy
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

The SVC class creates an SVM classifier that finds the decision boundary with the widest margin between classes. With its default RBF kernel, this boundary can be non-linear in the original feature space.
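The idea of a maximum-margin boundary can be sketched with a linear SVM trained by subgradient descent on the regularized hinge loss. This is an illustration under simplifying assumptions, not how SVC works internally: SVC solves the dual problem with libsvm and uses an RBF kernel by default. The function name and the toy points are hypothetical; the points are chosen so that the widest-margin line is x1 + x2 = 0.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=1000):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b))).

    Labels y must be -1 or +1.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    n = len(y)
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # points inside the margin or misclassified
        # Subgradient of the objective with respect to w and b
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


X = np.array([[-2.0, -1.0], [-1.0, -2.0], [-1.5, -1.5],
              [2.0, 1.0], [1.0, 2.0], [1.5, 1.5]])
y = np.array([-1, -1, -1, 1, 1, 1])
w, b = train_linear_svm(X, y)
preds = np.sign(X @ w + b)
print("w =", w, "b =", b, "predictions:", preds)
```

Only points with a margin below 1 contribute to the hinge-loss gradient; these play the role of support vectors, while points far from the boundary do not move it.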

5. K-Nearest Neighbors (KNN)

KNN is used for classification and regression, such as predicting the popularity of a game.

Code Example:

```python
from sklearn.neighbors import KNeighborsClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model
model = KNeighborsClassifier(n_neighbors=3)
model.fit(X_train, y_train)

# Predict and evaluate accuracy
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

The KNeighborsClassifier class labels each test point with the most common class among its n_neighbors=3 nearest training points, measured by Euclidean distance by default.
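The prediction rule is simple enough to write by hand. The sketch below, with a hypothetical `knn_predict` helper and made-up points, computes the Euclidean distance to every training point, keeps the k closest, and returns the majority label. Scikit-learn implements the same rule but can use tree-based indexes (KD-tree or ball tree) to avoid comparing against every training point.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest neighbors."""
    # Euclidean distance from the query to every training point
    distances = [(math.dist(x, query), label) for x, label in zip(train_X, train_y)]
    # Keep the k closest points and let them vote
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


train_X = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (6.0, 6.0), (6.0, 7.0), (7.0, 6.0)]
train_y = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(train_X, train_y, (1.5, 1.5)))  # A
print(knn_predict(train_X, train_y, (6.5, 6.5)))  # B
```

Note that "training" KNN only stores the data; all the work happens at prediction time.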

6. Random Forest

Random forest is an ensemble learning method for classification and regression, such as predicting stock prices.

Code Example:

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model (random_state makes the result reproducible)
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Predict and evaluate accuracy
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

RandomForestClassifier trains 100 decision trees, each on a bootstrap sample of the training data and with a random subset of features considered at each split, and combines them by averaging their predicted class probabilities. Averaging many decorrelated trees reduces variance and usually gives better accuracy than a single tree.
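The two sources of randomness in a random forest, bootstrap sampling and random feature selection, can be illustrated with a toy forest of one-split trees (stumps). This is a simplified sketch with hypothetical helper names and data: it picks one random feature per stump and combines the stumps by majority vote, whereas RandomForestClassifier grows full trees, samples features at every split, and averages class probabilities.

```python
import random
from collections import Counter


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y, feature):
    """Fit a one-split tree on one feature, minimizing misclassifications.

    Returns (feature, threshold, left_label, right_label).
    """
    # Fallback: no split, always predict the majority label
    base = majority(y)
    best = (sum(label != base for label in y), float("inf"), base, base)
    for t in sorted({row[feature] for row in X}):
        left = [lab for row, lab in zip(X, y) if row[feature] <= t]
        right = [lab for row, lab in zip(X, y) if row[feature] > t]
        if not right:
            continue  # this threshold puts every row on the left
        l_lab, r_lab = majority(left), majority(right)
        errors = sum(lab != l_lab for lab in left) + sum(lab != r_lab for lab in right)
        if errors < best[0]:
            best = (errors, t, l_lab, r_lab)
    _, t, l_lab, r_lab = best
    return feature, t, l_lab, r_lab


def predict_stump(stump, row):
    feature, t, l_lab, r_lab = stump
    return l_lab if row[feature] <= t else r_lab


def fit_forest(X, y, n_trees=25, seed=0):
    rng = random.Random(seed)
    n, n_features = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: rows sampled with replacement
        feature = rng.randrange(n_features)         # random feature for this stump
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feature))
    return forest


def predict_forest(forest, row):
    # Each stump votes; the most common label wins
    return majority(predict_stump(s, row) for s in forest)


X = [(1, 1), (1, 2), (2, 1), (2, 2), (6, 6), (6, 7), (7, 6), (7, 7)]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y)
print(predict_forest(forest, (1, 1)), predict_forest(forest, (7, 7)))
```

Because each stump sees a different resample of the rows and a different feature, their individual mistakes tend not to line up, so the vote is more stable than any single stump.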

7. Principal Component Analysis (PCA)

PCA is used for dimensionality reduction, such as simplifying a high-dimensional dataset.

Code Example:

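```python
from sklearn.decomposition import PCA
from sklearn.datasets import load_iris
import matplotlib.pyplot as plt

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Create and apply PCA: project the 4 features onto 2 principal components
pca = PCA(n_components=2)
X_pca = pca.fit_transform(X)
print("Explained variance ratio:", pca.explained_variance_ratio_)

# Visualize the results, colored by species
plt.scatter(X_pca[:, 0], X_pca[:, 1], c=y, cmap='viridis')
plt.xlabel('First Principal Component')
plt.ylabel('Second Principal Component')
plt.title('PCA of Iris Dataset')
plt.show()
```

The PCA class projects the four iris features onto the two orthogonal directions (principal components) along which the data varies most. This lets the dataset be plotted in two dimensions while keeping as much of its variance as possible; explained_variance_ratio_ reports the fraction of the total variance each component retains.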
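PCA can be computed from the eigendecomposition of the data's covariance matrix: center the data, find the eigenvectors with the largest eigenvalues, and project onto them. The sketch below does this with NumPy; the `pca` helper and the points (which lie exactly on a line, so the first component captures all of the variance) are hypothetical. Scikit-learn's PCA obtains the same subspace via a singular value decomposition, and individual components may come out with the opposite sign.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components.

    Returns (projected data, fraction of variance explained by each component).
    """
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)
    # eigh handles symmetric matrices and returns eigenvalues in ascending order
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]
    explained_ratio = eigvals[order] / eigvals.sum()
    return X_centered @ components, explained_ratio


X = np.array([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]])
X_proj, ratio = pca(X, n_components=1)
print(X_proj.ravel(), ratio)
```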

8. Clustering Algorithm (K-Means)

Clustering is an unsupervised learning task that groups unlabeled data points, for example segmenting customers into groups with similar behavior.

Code Example:

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
import matplotlib.pyplot as plt

# Create dataset
X, _ = make_blobs(n_samples=300, centers=4, random_state=42)

# Create KMeans model; n_init=10 runs 10 initializations and keeps the one
# with the lowest inertia, and random_state makes the result reproducible
kmeans = KMeans(n_clusters=4, n_init=10, random_state=42)

# Train the model
kmeans.fit(X)

# Predict clusters
labels = kmeans.predict(X)

# Visualize the results, marking the cluster centers with red crosses
plt.scatter(X[:, 0], X[:, 1], c=labels, cmap='viridis')
centers = kmeans.cluster_centers_
plt.scatter(centers[:, 0], centers[:, 1], c='red', marker='x')
plt.xlabel('Feature 1')
plt.ylabel('Feature 2')
plt.title('K-Means Clustering')
plt.show()
```

This code demonstrates how to use the KMeans class for clustering. K-Means alternates between assigning each data point to its nearest centroid and moving each centroid to the mean of the points assigned to it, until the assignments stop changing.
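The two alternating steps behind K-Means (Lloyd's algorithm) fit in a few lines of NumPy. In this sketch the `kmeans` helper and the six sample points are hypothetical, and the initial centroids are picked at random from the data, whereas scikit-learn's KMeans defaults to the k-means++ initialization and can run several initializations (n_init) to keep the best result.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = np.random.default_rng(seed)
    # Start from k distinct data points chosen at random
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assignment step: index of the nearest centroid for every point
        distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Update step: move each centroid to the mean of its points
        # (an empty cluster keeps its previous centroid)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # centroids have stopped moving
        centroids = new_centroids
    return labels, centroids


X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]])
labels, centroids = kmeans(X, k=2)
print(labels, centroids)
```

Each step can only lower the total squared distance from points to their centroids, which is why the loop converges, although possibly to a local optimum that depends on the starting centroids.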

9. Gradient Boosting

Gradient Boosting is used for classification and regression tasks, such as predicting customer churn.

Code Example:

```python
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split the dataset
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the model (random_state makes the result reproducible)
model = GradientBoostingClassifier(random_state=42)

# Train the model
model.fit(X_train, y_train)

# Predict
predictions = model.predict(X_test)

# Evaluate the model
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

This code demonstrates how to use the GradientBoostingClassifier class. Gradient Boosting builds shallow decision trees one after another, fitting each new tree to the negative gradient of the loss (the remaining errors) of the ensemble built so far, and thereby combines many weak learners into a strong one.
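The core loop of gradient boosting is easiest to see for regression with squared-error loss, where the negative gradient of the loss is simply the residual: target minus current prediction. The sketch below, with hypothetical helper names and toy data, starts from the mean of y and repeatedly fits a one-split tree (stump) to the current residuals, adding a shrunken copy of each tree to the model. GradientBoostingClassifier follows the same scheme but fits its trees to the gradient of the log-loss instead.

```python
import numpy as np


def fit_stump(x, r):
    """Fit a one-split regression tree on 1-D x that minimizes squared error to r."""
    best = None
    for t in np.unique(x)[:-1]:
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return lambda z: np.where(z <= t, left_value, right_value)


def gradient_boost(x, y, n_rounds=50, learning_rate=0.1):
    base = y.mean()                # initial model: a constant
    pred = np.full(len(y), base)
    stumps = []
    for _ in range(n_rounds):
        residuals = y - pred       # negative gradient of 0.5 * (y - pred)^2
        stump = fit_stump(x, residuals)
        pred = pred + learning_rate * stump(x)
        stumps.append(stump)
    return lambda z: base + learning_rate * sum(s(z) for s in stumps)


x = np.arange(10, dtype=float)
y = np.where(x < 5, 1.0, 3.0)
model = gradient_boost(x, y)
mse_constant = np.mean((y - y.mean()) ** 2)
mse_boosted = np.mean((y - model(x)) ** 2)
print(f"constant model MSE: {mse_constant:.4f}, boosted MSE: {mse_boosted:.6f}")
```

The learning rate shrinks each tree's contribution, so the ensemble approaches the target gradually; smaller values typically need more rounds but generalize better.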

10. AdaBoost

AdaBoost is another ensemble learning method used for classification and regression tasks, such as detecting malware.

Code Example:

```python
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split the dataset
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the model (random_state makes the result reproducible)
model = AdaBoostClassifier(random_state=42)

# Train the model
model.fit(X_train, y_train)

# Predict
predictions = model.predict(X_test)

# Evaluate the model
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

This code shows how to use the AdaBoostClassifier class. AdaBoost trains weak learners (by default, one-split decision trees) in sequence, increasing the weight of the training points the previous learners misclassified, and then combines all learners in a weighted vote.
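The reweighting idea can be sketched as classic binary (discrete) AdaBoost with one-split trees on a single feature. The helper names and the toy data, which no single threshold can classify correctly, are hypothetical; AdaBoostClassifier handles multiclass problems with a generalization of this scheme.

```python
import numpy as np


def fit_weighted_stump(x, y, w):
    """Find the threshold/polarity on 1-D x with the lowest weighted error.

    The stump predicts `polarity` when x <= t and `-polarity` otherwise.
    """
    best = None
    for t in np.unique(x):
        for polarity in (1, -1):
            pred = np.where(x <= t, polarity, -polarity)
            err = w[pred != y].sum()
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def adaboost(x, y, n_rounds=10):
    """Discrete AdaBoost for labels in {-1, +1}; returns a predict function."""
    w = np.full(len(x), 1.0 / len(x))  # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        err, t, polarity = fit_weighted_stump(x, y, w)
        err = max(err, 1e-10)  # guard against division by zero for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)  # weight of this learner in the vote
        pred = np.where(x <= t, polarity, -polarity)
        # Misclassified points (y * pred = -1) gain weight; correct ones lose weight
        w = w * np.exp(-alpha * y * pred)
        w /= w.sum()
        ensemble.append((alpha, t, polarity))

    def predict(z):
        score = sum(a * np.where(z <= t, p, -p) for a, t, p in ensemble)
        return np.sign(score)

    return predict


# Toy data that no single threshold can separate: +1, +1, -1, -1, +1, +1
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([1, 1, -1, -1, 1, 1])
predict = adaboost(x, y)
print(predict(x))
```

Learners with lower weighted error get a larger alpha, so they count more in the final vote.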

11. XGBoost

XGBoost is a widely used gradient boosting framework for classification and regression tasks, such as predicting stock market trends.

Code Example:

```python
# Requires the xgboost package (pip install xgboost)
import xgboost as xgb
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split the dataset
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the model
model = xgb.XGBClassifier()

# Train the model
model.fit(X_train, y_train)

# Predict
predictions = model.predict(X_test)

# Evaluate the model
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

This code demonstrates how to use the XGBClassifier class. XGBoost extends standard gradient boosting by adding regularization terms on the tree leaf weights to its objective function and by using second-order (gradient and Hessian) information about the loss when building each tree.
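XGBoost's use of the first derivative (gradient g) and second derivative (Hessian h) of the loss can be made concrete with its split formulas. For a leaf whose samples have gradient sum G and Hessian sum H, the optimal leaf weight is -G / (H + λ), and a split into left and right children has gain ½[G_L²/(H_L+λ) + G_R²/(H_R+λ) − (G_L+G_R)²/(H_L+H_R+λ)] − γ, where λ is the L2 penalty (reg_lambda) and γ the minimum loss reduction (gamma). The sketch below evaluates these formulas for squared-error loss, where g is prediction minus target and h = 1; the helper names and numbers are made up for illustration.

```python
def leaf_weight(G, H, reg_lambda):
    """Optimal leaf value for gradient sum G and Hessian sum H."""
    return -G / (H + reg_lambda)


def split_gain(GL, HL, GR, HR, reg_lambda, gamma):
    """Loss reduction from splitting a node into left (GL, HL) and right (GR, HR)."""
    def score(G, H):
        return G * G / (H + reg_lambda)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma


# Squared-error loss with current predictions of 0 and targets [1, 1, 3, 3]:
# g_i = prediction - target = [-1, -1, -3, -3], h_i = 1
targets = [1.0, 1.0, 3.0, 3.0]
g = [0.0 - t for t in targets]
h = [1.0] * len(targets)
GL, HL = sum(g[:2]), sum(h[:2])  # left child: first two samples
GR, HR = sum(g[2:]), sum(h[2:])  # right child: last two samples
gain = split_gain(GL, HL, GR, HR, reg_lambda=1.0, gamma=0.0)
print(f"gain={gain:.4f}")  # gain=0.2667
print(f"left weight={leaf_weight(GL, HL, 1.0):.4f}, right weight={leaf_weight(GR, HR, 1.0):.4f}")
```

With λ = 1 the leaf weights are 0.67 and 2.0 rather than the unregularized values 1 and 3; this shrinkage toward zero is one way the regularization curbs overfitting.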

12. LightGBM

LightGBM is another efficient gradient boosting framework, suited to large-scale datasets such as those in recommendation systems.

Code Example:

```python
# Requires the lightgbm package (pip install lightgbm)
import lightgbm as lgb
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split the dataset
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the model (verbose=-1 silences LightGBM's training log messages)
model = lgb.LGBMClassifier(verbose=-1)

# Train the model
model.fit(X_train, y_train)

# Predict
predictions = model.predict(X_test)

# Evaluate the model
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

This code shows how to use the LGBMClassifier class. LightGBM speeds up training on large datasets by bucketing feature values into histograms when searching for splits and by growing trees leaf-wise, always splitting the leaf that gives the largest loss reduction.
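Histogram-based split finding can be sketched for a single feature: bucket the values into a small number of bins once, accumulate per-bin gradient and Hessian sums in one pass, and evaluate only the bin boundaries as split points. The helper name and data below are hypothetical, and the gain uses the usual second-order boosting score. For simplicity the sketch uses equal-width bins, while LightGBM builds its bins from the data distribution (up to max_bin, 255 by default); leaf-wise tree growth is not shown.

```python
import numpy as np


def histogram_best_split(x, grad, hess, n_bins=16, reg_lambda=1.0):
    """Find the best split of one feature by scanning bin boundaries.

    Returns (threshold, gain); the left child is x < threshold.
    """
    # 1. Bucket feature values into equal-width bins (done once per feature)
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    bins = np.digitize(x, edges[1:-1])  # bin index in 0 .. n_bins - 1
    # 2. One pass over the data: gradient and Hessian sums per bin
    G = np.bincount(bins, weights=grad, minlength=n_bins)
    H = np.bincount(bins, weights=hess, minlength=n_bins)
    # 3. Evaluate only the n_bins - 1 boundaries, using cumulative sums
    G_left, H_left = np.cumsum(G)[:-1], np.cumsum(H)[:-1]
    G_total, H_total = G.sum(), H.sum()
    G_right, H_right = G_total - G_left, H_total - H_left
    gain = 0.5 * (G_left ** 2 / (H_left + reg_lambda)
                  + G_right ** 2 / (H_right + reg_lambda)
                  - G_total ** 2 / (H_total + reg_lambda))
    best = int(np.argmax(gain))
    return edges[best + 1], gain[best]


# Toy regression target that jumps at x = 5; with squared-error loss and
# current predictions of 0, grad = prediction - target and hess = 1
x = np.linspace(0.0, 10.0, 101)
target = np.where(x > 5.0, 2.0, 0.0)
grad = 0.0 - target
hess = np.ones_like(x)
threshold, gain = histogram_best_split(x, grad, hess)
print(f"split at x < {threshold:.3f}, gain {gain:.3f}")
```

The cost of step 3 depends on the number of bins rather than the number of rows, which is where the speed-up on large datasets comes from.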

13. CatBoost

CatBoost is another powerful gradient boosting framework that excels at handling categorical features, which makes it a good fit for tasks such as predicting user behavior.

Code Example:

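```python
# Requires the catboost package (pip install catboost)
import catboost as cb
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.datasets import load_iris

# Load dataset
data = load_iris()
X = data.data
y = data.target

# Split the dataset
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the model (verbose=0 turns off the per-iteration training log)
model = cb.CatBoostClassifier(verbose=0, random_seed=42)

# Train the model
model.fit(X_train, y_train)

# Predict; flatten in case predict returns a column vector
predictions = model.predict(X_test).ravel()

# Evaluate the model
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

This code demonstrates how to use the CatBoostClassifier class. CatBoost converts categorical features into numbers internally using ordered target statistics. The iris features are all numeric, so this example does not exercise that strength; with real categorical data, pass the categorical column indices through the cat_features parameter.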
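CatBoost's handling of categorical features builds on ordered target statistics: each row's category is replaced by the average target of earlier rows with the same category (in a random permutation of the rows), blended with a prior, so a row's own label never leaks into its encoding. The sketch below is a simplified version of that idea with a hypothetical function name, prior, and data; CatBoost itself uses several permutations and further refinements, so its exact values differ.

```python
import random


def ordered_target_stats(categories, targets, prior=0.5, prior_weight=1.0, seed=None):
    """Encode each category using only the targets of rows that precede it.

    If seed is given, rows are visited in a random permutation; otherwise in input order.
    """
    order = list(range(len(categories)))
    if seed is not None:
        random.Random(seed).shuffle(order)
    sums, counts = {}, {}
    encoded = [0.0] * len(categories)
    for i in order:
        c = categories[i]
        # Smoothed mean of the targets seen so far for this category
        encoded[i] = (sums.get(c, 0.0) + prior_weight * prior) / (counts.get(c, 0) + prior_weight)
        # Only now add this row's target, so it never affects its own encoding
        sums[c] = sums.get(c, 0.0) + targets[i]
        counts[c] = counts.get(c, 0) + 1
    return encoded


colors = ["red", "red", "blue", "red"]
clicked = [1, 0, 1, 1]
print(ordered_target_stats(colors, clicked))  # [0.5, 0.75, 0.5, 0.5]
```

Plain target averaging over the whole column would let each row "see" its own label, which inflates training accuracy; processing rows in order avoids that leakage.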

14. DBSCAN

DBSCAN is a density-based clustering algorithm used to discover clusters of arbitrary shape. Because it labels points in sparse regions as noise, it is also useful for anomaly detection.

Code Example:

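```python
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_moons
import matplotlib.pyplot as plt

# Create dataset: two interleaving crescents
X, _ = make_moons(n_samples=300, noise=0.1, random_state=42)

# Create DBSCAN model
dbscan = DBSCAN(eps=0.2, min_samples=5)

# Fit the model and get cluster labels (-1 marks noise points)
labels = dbscan.fit_predict(X)

# Visualize the results
plt.scatter(X[:, 0], X[:, 1], c=labels, cmap='viridis')
plt.xlabel('Feature 1')
plt.ylabel('Feature 2')
plt.title('DBSCAN Clustering')
plt.show()
```

This code demonstrates how to use the DBSCAN class for clustering. A point with at least min_samples neighbors within distance eps is a core point; clusters grow outward from core points through their neighborhoods, and points not reachable from any core point are labeled -1 (noise). Unlike K-Means, DBSCAN does not need the number of clusters in advance and is designed to find non-convex shapes such as these crescents.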
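The density-based procedure can be sketched directly: find each point's neighbors within eps, mark points with at least min_samples neighbors (counting the point itself, as scikit-learn's min_samples does) as core points, and grow clusters outward from core points. The `dbscan` helper and the toy data below are hypothetical, and the sketch computes all pairwise distances, which is fine for small inputs but is not how scikit-learn scales to large datasets.

```python
import numpy as np


def dbscan(X, eps, min_samples):
    """Return a cluster label per point; -1 marks noise."""
    n = len(X)
    # Pairwise Euclidean distances (O(n^2) memory, fine for small inputs)
    distances = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    # Neighborhoods include the point itself
    neighbors = [np.flatnonzero(distances[i] <= eps) for i in range(n)]
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])
    labels = np.full(n, -1)
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        # Start a new cluster at this unassigned core point and expand it
        labels[i] = cluster
        stack = [i]
        while stack:
            p = stack.pop()
            if not is_core[p]:
                continue  # border points join the cluster but do not expand it
            for q in neighbors[p]:
                if labels[q] == -1:
                    labels[q] = cluster
                    stack.append(q)
        cluster += 1
    return labels


X = np.array([[0.0, 0.0], [0.0, 0.1], [0.1, 0.0],
              [5.0, 5.0], [5.0, 5.1], [5.1, 5.0],
              [10.0, -10.0]])
labels = dbscan(X, eps=0.5, min_samples=3)
print(labels.tolist())  # [0, 0, 0, 1, 1, 1, -1]
```

The isolated point at (10, -10) has no neighbors besides itself, so it is never reached from a core point and stays labeled as noise.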

Conclusion

This article introduced 14 commonly used machine learning algorithms, each with a practical code example showing its basic usage. From simple linear regression to complex ensemble methods, each algorithm suits particular kinds of problems, and understanding how they work helps readers choose the right tool for a real-world problem. We hope this article gives readers deeper insight into core machine learning techniques.