Master These 12 SQL Optimization Methods and You'll Be Practically Unstoppable

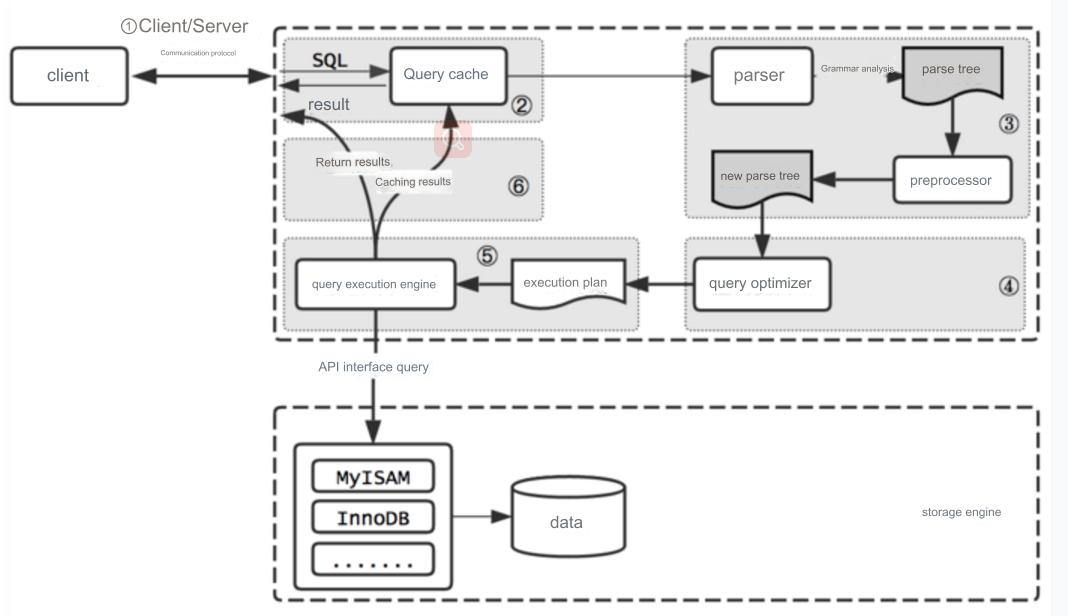

Before diving into SQL optimization techniques, let's first provide an overview of MySQL's internal architecture for better understanding:

Connector: Handles the connection with the client, retrieves permissions, and manages the connection.

Query Cache: First attempts to retrieve data from the cache. If the data is found, it is returned directly; if not, it queries the database.

Note that MySQL query caching is disabled by default, and it is not recommended to use caching. In MySQL 8.0, the entire query cache feature has been removed due to its limited use cases.

Data in the cache is stored in a key-value format, where the key is the SQL statement, and the value is the result set. A slight difference in the SQL query can cause a new database query. Since data in the table frequently changes, any updates require clearing related cache data.

Parser/Analyzer: The parser analyzes the SQL statement to generate an abstract syntax tree. The preprocessor performs semantic checks, such as verifying the existence of the tables and checking if the selected columns exist in those tables.

Optimizer: The optimizer takes the parsed syntax tree and, using data dictionary and statistical information, produces an execution plan, including which index to use.

During index selection, dynamic data sampling is used for statistical analysis. Since this is a statistical process, there's a possibility of errors. If an index is not used during SQL execution, this factor should be considered.

Executor: Executes the SQL query based on the execution plan by calling the storage engine’s API to operate on the data.

SQL Query Optimization

1. Avoid Using Subqueries

Example:

SELECT * FROM t1 WHERE id IN (SELECT id FROM t2 WHERE name = 'chackca');

In MySQL 5.5, the execution plan first queries the outer table and then matches the inner table, making the query slow when the outer table has large amounts of data.

In MariaDB 10 and MySQL 5.6, the query is optimized by converting it to a join:

SELECT t1.* FROM t1 JOIN t2 ON t1.id = t2.id;

However, this optimization only applies to SELECT statements, not UPDATE or DELETE. It's best to avoid subqueries in production environments. Since MySQL's optimizer is weak in handling subqueries, consider rewriting them as INNER JOIN queries. Joins are faster because MySQL doesn’t need to create temporary tables in memory.

2. Use IN Instead of OR

Inefficient query:

SELECT * FROM t WHERE id = 10 OR id = 20 OR id = 30;

Efficient query:

SELECT * FROM t WHERE id IN (10, 20, 30);

MySQL optimizes IN by storing the constants in a sorted array. However, if the list is too long, performance might degrade.

3. Use LIMIT M,N Wisely

SELECT id, name FROM t LIMIT 866613, 20;

As data grows, queries using LIMIT for pagination can become slower. This happens because MySQL retrieves offset + N rows and discards the first offset rows. As offset increases, performance decreases.

A better way to paginate is to use the last record’s ID from the previous page and query the next page like this:

SELECT id, name FROM table_name WHERE id > 866612 LIMIT 20;

4. Avoid Unnecessary ORDER BY Clauses

Avoid sorting results if it's not necessary, especially if the sorting column is not indexed. For GROUP BY queries, MySQL sorts the results by default. Disable sorting with ORDER BY NULL:

SELECT goods_id, count(*) FROM t GROUP BY goods_id ORDER BY NULL;

5. Use UNION ALL Instead of UNION

UNION performs a duplicate elimination process, involving sorting and high CPU usage. Use UNION ALL if you know the result sets don’t have duplicates:

SELECT ... UNION ALL SELECT ...;

6. Avoid Random Record Selection

Avoid using ORDER BY RAND() for random record selection as it doesn’t utilize indexes. Use an alternative like:

SELECT * FROM t1 WHERE id >= CEIL(RAND() * 1000) LIMIT 4;

7. Use Batch Insert Instead of Multiple Inserts

Inefficient:

INSERT INTO t(id, name) VALUES(1, 'aaa'); INSERT INTO t(id, name) VALUES(2, 'bbb'); INSERT INTO t(id, name) VALUES(3, 'ccc');

Efficient:

INSERT INTO t(id, name) VALUES(1, 'aaa'), (2, 'bbb'), (3, 'ccc');

8. Return Only Necessary Columns

Avoid SELECT * as it incurs unnecessary I/O and memory usage. Explicitly list the columns needed:

SELECT column1, column2 FROM table_name;

This increases the likelihood of using a covering index, reducing I/O operations.

9. Understand IN vs EXISTS

IN is more efficient when the outer table is large and the inner table is small, whereas EXISTS works better when the outer table is small and the inner table is large.

Example of IN:

SELECT * FROM A WHERE id IN (SELECT id FROM B);

Example of EXISTS:

SELECT * FROM A WHERE EXISTS (SELECT * FROM B WHERE B.id = A.id);

10. Optimize GROUP BY Statements

If GROUP BY results don’t need sorting, disable it with ORDER BY NULL. Ensure indexes are used to avoid temporary tables and file sorting. Increase tmp_table_size to avoid disk-based temporary tables.

Use WHERE instead of HAVING whenever possible to filter records before grouping.

11. Prefer Numeric Fields

Use numeric fields instead of character fields for purely numeric data. This reduces the processing time as numbers are compared faster than strings.

By applying these SQL optimization techniques, you can significantly improve query performance, reducing execution time and resource consumption.

12. Optimizing Join Statements

When we execute a Join between two tables, there is a comparison process involved. Comparing the statements of the two tables row by row can be slow. Therefore, we can read the data from both tables into a memory block sequentially. In MySQL, you can execute:

SHOW VARIABLES LIKE 'join_buffer_size';

This shows the size of the join buffer in memory, which will affect the performance of join statements. When executing a join, the database chooses one table to place the data it needs to return and the data that needs to be compared with other tables into the join buffer.

What are the driver table and the driven table? These two concepts can sometimes be confusing in queries. Here are some situations you need to understand:

When the join query has no WHERE conditions:

In a left join, the table on the left is the driver table, and the table on the right is the driven table.

In a right join, the table on the right is the driver table, and the table on the left is the driven table.

An inner join / join will automatically select the table with fewer data as the driver table.

Straight_join (≈ join) directly selects the left table as the driver table (semantically similar to join, but removes the feature of join automatically selecting the smaller table as the driver table).

When the join query has WHERE conditions: The table with the WHERE conditions is the driver table; otherwise, it is the driven table.

Assuming we have tables like this: t1 and t2 are identical, with an index on column 'a' and no index on column 'b'. t1 has 100 rows, and t2 has 1000 rows.

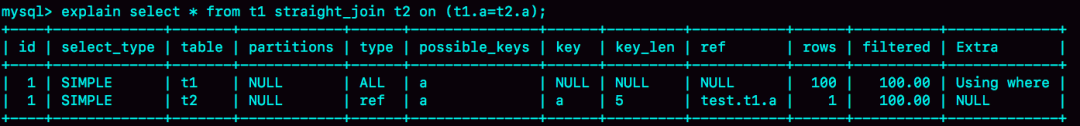

If the driven table has an index, then the execution algorithm will be: Index Nested-Loop Join (NLJ). Here’s an example:

Execution statement:

SELECT * FROM t1 STRAIGHT_JOIN t2 ON (t1.a = t2.a);

Since the driven table t2.a has an index, its execution logic is as follows:

Read a row of data R from table t1.

Extract the 'a' field from row R to search in table t2.

Retrieve the rows from table t2 that satisfy the condition and combine them with R as part of the result set.

Repeat steps 1 to 3 until reaching the end of table t1.

If the Extra field of a join statement is empty, it indicates that the NLJ algorithm is being used.

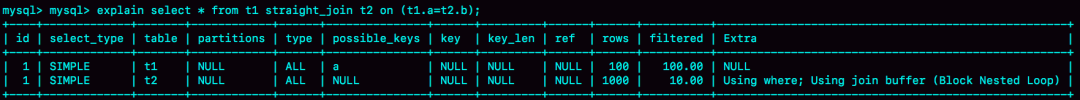

If the driven table has no index, then the execution algorithm will be: Block Nested-Loop Join (BLJ). (Block: each time, a chunk of data is read into memory to reduce I/O overhead.) Here's an example:

Execution statement:

SELECT * FROM t1 STRAIGHT_JOIN t2 ON (t1.a = t2.b);

Since the driven table t2.b has no index, its execution logic is as follows:

Read the data from the driver table t1 into the thread's memory join_buffer (unordered array). Since the statement uses

SELECT *, the entire table t1 is loaded into memory.Sequentially traverse table t2, extracting each row and comparing it with the data in join_buffer. Rows that satisfy the join condition are returned as part of the result set.

Another algorithm is Simple Nested-Loop Join (SLJ), which works as follows: sequentially extract each row of data from the driver table and perform a full table scan match on the driven table. Successful matches are returned as part of the result set.

Additionally, InnoDB allocates a disk storage file named table_name.ibd for each data table. If too many associated tables are involved, it can lead to excessive disk head movements during queries, thus affecting performance.

In practice, minimize the number of Nested Loop iterations in Join statements: "Always use a small result set to drive a large result set."

By using a small result set to drive a large one, filter the smaller table first (when deciding which table to use as the driver, both tables should be filtered according to their respective conditions, and after filtering, the total data volume of each field involved in the join is calculated; the table with the smaller data volume is the "small table"). Then connect it to the larger result set table to minimize the total number of Nested Loop iterations in the join statement.

Prioritize optimizing the inner loop of the Nested Loop (which is the outermost Join connection) because the inner loop executes the most times in the iteration. Improving performance even slightly in each loop can greatly enhance overall performance.

Create indexes on the join fields of the driven table.

If it is not possible to create an index on the join fields of the driven table, set an adequate Join Buffer Size.

Try to use inner joins (as they will automatically select the smaller table to drive the larger table). Avoid LEFT JOINs (generally, we use Left Join when a large table drives a small table) and NULL. How can we optimize Left Joins?

Try to filter some rows in the conditions to make the driver table smaller, using a small table to drive a large table.

Ensure that the condition columns in the right table have indexes (primary key, unique index, prefix index, etc.), preferably ensuring the type reaches at least range (ref, eq_ref, const, system).

Appropriately add redundant information to the tables to reduce the number of joins.

Use faster solid-state drives (SSDs).

For performance optimization, the left join is determined by the left side, which always has values, so the right side is crucial. Indexes should be created on the right side. Of course, if the index is on the left side, we can consider using a right join as follows:

SELECT * FROM atable LEFT JOIN btable ON atable.aid = btable.bid; -- It's best to create an index on btable.bid.

Tips: Create indexes for left joins on the right side; in composite indexes, try to place larger data volumes on the left and create indexes there.

Index Optimization / How to Avoid Index Failure

Best Left Prefix Rule

If an index includes multiple columns, you need to follow the "Best Left Prefix" rule, meaning that queries should start from the leftmost column of the index and not skip any indexed columns. MySQL's query optimizer improves the query by determining which combination of columns makes the query faster. For instance, if an index is created on (a, b) and the query is (b, a), the query optimizer will adjust it to (a, b) to use the index.

Avoid Performing Operations on Indexed Columns

SELECT * FROM t_user WHERE LENGTH(name) = 6;

Using a function on a column in this query leads to index failure.

Starting with MySQL 8.0, function-based indexes are supported, meaning you can create an index on the result of a function, and the index value is the result of that function. This allows you to scan the index while querying the data.

ALTER TABLE t_user ADD KEY idx_name_length ((LENGTH(name)));

(Automatic/Manual) Type Conversion

(String types must be enclosed in quotes to make the index work.) If a field is

VARCHARand you query using an integer, the index will not be used. For example:SELECT * FROM user WHERE phone = 13030303030;

MySQL will convert the column to a number for comparison. This query is equivalent to:

SELECT * FROM user WHERE CAST(phone AS signed int) = 13030303030;

The

CASTfunction is applied to thephonefield, and since the field is indexed, this function causes index failure.When the field is

INTand queried with a string, MySQL will automatically convert the value and still use the index. For example:SELECT * FROM user WHERE id = '1';

MySQL automatically converts the string to a number, allowing the query to use the index scan since the

CASTfunction is applied to the input parameter, not the index field.Calculations: Performing calculations on an index can cause index failure. For example, the condition

WHERE id + 1 = 10can be rewritten asWHERE id = 10 - 1to make use of the index.Functions:

Storage Engine Cannot Use Index on Columns to the Right of Range Conditions

For example, in the query:

SELECT * FROM user WHERE username = '123' AND age > 20 AND phone = '1390012345';

If

username,age, andphoneall have indexes, only the indexes onusernameandagewill be used. Thephoneindex will not be utilized.Use Covering Indexes Whenever Possible (queries that only access the index, meaning the indexed columns and the queried columns are the same).

For example:

SELECT age FROM user;

Reduces the need for

SELECT *.Negative Query Conditions (

!=,<>,NOT IN,NOT EXISTS,NOT LIKE) prevent the use of indexes and lead to a full table scan.Consider a B+ tree with 40 as the root node. If your condition is

= 20, it searches to the left; if= 50, it searches to the right. But if the condition is!= 66, how should the index work? It has to traverse the entire tree to find all results.IS NULLandIS NOT NULLconditions also prevent index use. It is best to avoid usingNULLin WHERE clauses, although higher versions of MySQL have optimized this to allow index usage.Checking for

NULLforces the engine to forego using the index and perform a full table scan.Using a wildcard at the start of a

LIKEpattern (%abc…) causes MySQL to disable the index and perform a full table scan.It’s better to use:

LIKE 'abc%'

If wildcards must be on both sides, use:

SELECT username FROM user WHERE username LIKE '%abc%';

In this case,

usernamemust be an indexed column for the index to be effective.For an index

(a, b, c)with a query like:WHERE a = 3 AND b LIKE 'abc%' AND c = 4;

Indexes on

aandbare used, but not onc, similar to the case where columns after a range condition are not used.In a B+ tree index, if the root node is the string "def", a query like

abc%will search the left subtree, andefg%will search the right subtree. But if the query is%abc, the database doesn’t know which side to search and must scan the entire tree.Avoid Using

OR: If a condition before theORuses an index and a condition after theORdoes not, the index will not be used.For example:

SELECT * FROM t_user WHERE id = 1 OR age = 18;

If

idhas an index butagedoes not, the query will not use the index.Both conditions must have indexes for the query to utilize them (using separate indexes and merging the results with

type = index_merge).Place High Selectivity Indexes First in a Composite/Combined Index

If the index has low selectivity, such as indexing gender, it only divides the table into two parts, and a half-table scan is still required, rendering the index ineffective.

Use Prefix Indexes

Shorter indexes can improve query performance, save disk space, and reduce I/O operations. However, prefix indexes cannot be used for

ORDER BYorGROUP BYoperations and cannot be used as covering indexes.For example, if a column is

VARCHAR(255)and the first 10 or 20 characters have high selectivity close to a full-column index, you don’t need to index the entire column. To reducekey_len, consider creating a prefix index by specifying a prefix length. You can calculate the selectivity of a prefix index with:COUNT(DISTINCT LEFT(column_name, index_length)) / COUNT(*)

SQL Performance Optimization –

EXPLAIN'stype: At Leastrange, Ideallyref, andconstis BestWhen

type = index, it means the index file is fully scanned, which is very slow.const: There is at most one matching row in the table (primary key or unique index). Data can be read during the optimization phase.

ref: A regular index is used.

range: A range scan on the index is performed.