In our daily work, we often use MySQL databases because they are open-source, free, and offer decent performance.

However, it’s also easy to fall into some common pitfalls when using MySQL. If you don’t believe it, keep reading.

This article will focus on 15 common pitfalls when using MySQL, hoping it will be helpful to you.

1. Query Without a WHERE Clause

Some developers might want to retrieve all data from a table in one go and then process the business logic in memory, thinking this approach will improve performance.

Example of a bad practice:

SELECT * FROM users;

This query retrieves all the data without any filtering condition.

This approach works fine when the data volume is small.

However, if the dataset is large, and every business operation requires fetching all the data from the table, it can lead to Out of Memory (OOM) issues.

As the data size grows, the query processing time also increases.

Correct approach:

SELECT * FROM users WHERE code = '1001';

Use specific WHERE conditions, such as the code field, to filter the data before processing it.

2. Not Using Indexes

Sometimes, when our application is newly launched, the dataset is small, and performance is fine without indexes.

But as the user base grows and the table data increases exponentially, you may suddenly notice that queries become slower.

For example:

SELECT * FROM orders WHERE customer_id = 123;

You can add an index on the customer_id field:

CREATE INDEX idx_customer ON orders(customer_id);

This can greatly improve query performance!

3. Not Handling NULL Values

Problem: Forgetting to account for the impact of NULL values during statistics calculation, leading to inaccurate results.

Example of a bad practice:

SELECT COUNT(name) FROM users;

This only counts the non-NULL values in the name field, not the total number of rows.

To count all rows, use:

SELECT COUNT(*) FROM users;

This will count all rows, including those with NULL values.

4. Choosing the Wrong Data Types

Some developers may casually choose VARCHAR(255) when creating a table, which can lead to poor performance and wasted storage.

Example of a bad practice:

CREATE TABLE products ( id INT, status VARCHAR(255) );

This can lead to performance issues.

A better practice would be to change the status field to a tinyint type:

CREATE TABLE products ( id INT, status tinyint(1) DEFAULT '0' COMMENT 'Status 1: Active, 0: Inactive');

This is more space-efficient.

5. Deep Pagination Issues

In our daily work, we often encounter scenarios where we need to paginate query results.

We typically use the LIMIT keyword.

For example:

SELECT * FROM users LIMIT 0,10;

When the data volume is small, the first, second, or third pages of results might perform okay.

But when querying the 100,000th page, query performance can become very poor. This is a deep pagination issue.

How to solve deep pagination?

5.1 Record the Last ID

The main problem with pagination queries is that when querying, for example, the 100,000th page, you need to scan through the data from the previous 99,999 pages.

But if we record the ID from the last query, we can start the next query from that point, rather than scanning previous pages.

For example:

SELECT id, name FROM orders WHERE id > 1000000 LIMIT 100000, 10;

If the largest ID from the previous query was 1,000,000, then the current query starts from the next position (ID 1,000,001).

This way, you don’t have to query the previous data, significantly improving query performance.

Note:

You need to record the ID from the last query. This approach works for previous and next page navigation but is not suitable for random page access.

The ID field should be auto-incremented.

5.2 Use Subqueries

You can first use a subquery to get the primary keys that meet the conditions, and then use those primary keys to fetch the actual data.

SELECT * FROM orders WHERE id IN ( SELECT id FROM ( SELECT id FROM orders WHERE time > '2024-08-11' LIMIT 100000, 10 ) t );

In this case, the subquery can utilize covering indexes.

In the original query, 10 rows of data are retrieved, but it might involve 100,010 lookups.

By using a subquery, we reduce the number of table lookups. This can optimize deep pagination.

5.3 Use Inner Join for Query Optimization

Similarly to subqueries:

SELECT * FROM orders o1 INNER JOIN ( SELECT id FROM orders WHERE create_time > '2024-08-11' LIMIT 100000,10 ) AS o2 ON o1.id = o2.id;

In the inner join subquery, we first filter the data based on the conditions and pagination, returning the IDs.

Then we use the IDs to perform the actual query.

This reduces the number of lookups and speeds up the query.

6. Not Using EXPLAIN to Analyze Queries

Some SQL queries are slow, but developers don’t analyze the execution plan, leading to blind optimization.

Correct approach:

EXPLAIN SELECT * FROM users WHERE email = 'test@example.com';

EXPLAIN will show you how the query is executed and help you identify bottlenecks.

If you want to learn more about the EXPLAIN keyword, you can check out my other article, "SQL Performance Optimization Tools," which provides detailed insights.

7. Incorrect Character Set Configuration

Some developers prefer to set the MySQL character set to utf8.

I used to like doing this a few years ago too.

But then problems started to arise. For example, if a user inputs emojis in a feedback form, it might cause issues when the program saves the data.

Incorrect character set configuration can also lead to garbled characters for Chinese text, severely affecting user experience.

Correct approach:

CREATE TABLE messages ( id INT, content TEXT ) CHARACTER SET utf8mb4;

It's recommended to use utf8mb4 as the character set when creating tables. It supports a wider range of characters, including common Chinese characters and emojis.

8. SQL Injection Risks

Using SQL string concatenation can make your code vulnerable to SQL injection attacks, posing a major security risk.

For example, when dynamically constructing a SELECT query with an ORDER BY clause or sorting order (e.g., ascending or descending), if not handled carefully, it could lead to an SQL injection vulnerability.

Bad example:

String query = "SELECT * FROM users WHERE email = '" + userInput + "';";

It’s best to avoid direct string concatenation in SQL queries and use prepared statements instead.

Good example:

PreparedStatement stmt = connection.prepareStatement("SELECT * FROM users WHERE email = ?");

stmt.setString(1, userInput);In MyBatis, when using the $ symbol for value substitution, it's better to use the # symbol for parameterized values.

If you're interested in SQL injection issues, check out my other article "Oh No, SQL Injection Took Down Our System!" which provides a detailed explanation.

9. Transaction Issues

Sometimes, developers forget to use transactions when writing code.

Particularly when updating multiple tables, not using transactions can result in data inconsistency.

Bad example:

UPDATE accounts SET balance = balance - 100 WHERE id = 1; UPDATE accounts SET balance = balance + 100 WHERE id = 2;

In this case, if user 1 transfers 100 to user 2, without a transaction, user 1 may see the deduction, but user 2 may not receive the money.

We should use the START TRANSACTION command to begin a transaction and COMMIT to commit the changes.

Good example:

START TRANSACTION; UPDATE accounts SET balance = balance - 100 WHERE id = 1; UPDATE accounts SET balance = balance + 100 WHERE id = 2; COMMIT;

This way, if user 1's transfer succeeds but user 2's transfer fails, the data for user 1 will be rolled back.

In Spring, you can use the @Transactional annotation for declarative transactions or TransactionTemplate for programmatic transactions.

It’s recommended to use TransactionTemplate for programmatic transactions.

10. Collation Issues

MySQL tables and fields have a COLLATE parameter to configure collation rules.

There are three main types:

Those ending with

_ci(case-insensitive)Those ending with

_bin(binary)Those ending with

_cs(case-sensitive)_cistands for "case insensitive," meaning it ignores case._csstands for "case sensitive," meaning it distinguishes between uppercase and lowercase._binstores characters as binary data, distinguishing case as well.

The most common collations are utf8mb4_general_ci (default) and utf8mb4_bin.

For example, the brand table might be created with the COLLATE=utf8mb4_general_ci, which is case-insensitive:

CREATE TABLE `brand` ( `id` bigint NOT NULL AUTO_INCREMENT COMMENT 'ID', `name` varchar(30) NOT NULL COMMENT 'Brand Name', `create_user_id` bigint NOT NULL COMMENT 'Created by User ID', `create_user_name` varchar(30) NOT NULL COMMENT 'Created by User Name', `create_time` datetime(3) DEFAULT NULL COMMENT 'Creation Date', `update_user_id` bigint DEFAULT NULL COMMENT 'Updated by User ID', `update_user_name` varchar(30) DEFAULT NULL COMMENT 'Updated by User Name', `update_time` datetime(3) DEFAULT NULL COMMENT 'Update Time', `is_del` tinyint(1) DEFAULT '0' COMMENT 'Deleted 1: Deleted 0: Not Deleted', PRIMARY KEY (`id`) USING BTREE ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci COMMENT='Brand Table';

With this collation, if you run the following query:

SELECT * FROM brand WHERE `name`='yoyo';

It will return the result even if the name is stored as YOYO.

However, if the table is set to case-insensitive collation but the business code differentiates between case, there could be issues. In such cases, you should use equalsIgnoreCase instead of equals to compare strings.

11. Overusing SELECT *

Some developers have the habit of using SELECT * to retrieve all columns in a table.

Bad example:

SELECT * FROM orders;

This will retrieve many unnecessary columns, wasting bandwidth and adding overhead to the query.

The best practice is to select only the columns you actually need.

Good example:

SELECT id, total FROM orders;

In our case, if we only need id and total columns, there is no need to fetch other columns.

12. Index Inefficiency

Have you ever encountered a situation where, even though indexes are created in the production environment, the indexes fail to be used during SQL execution?

Index inefficiency can result in previously fast operations becoming much slower, affecting the performance of the system.

You can use the EXPLAIN keyword to check the execution plan of a query and confirm whether an index is being used.

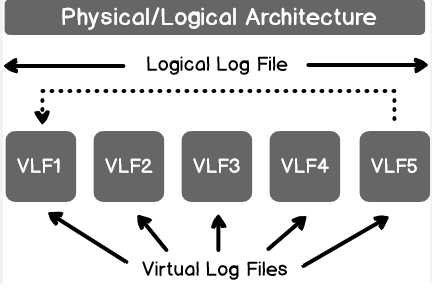

If the index is not being used, there could be several reasons for this, as shown in the diagram below:

For a more in-depth understanding of index inefficiency, check out my article "10 Scenarios of Index Inefficiency, It's a Trap!".

13. Frequently Modifying Tables or Data

In high-concurrency environments, frequent additions or modifications to table fields, or bulk updates to data, can degrade system performance.

When performing operations like ALTER to add or modify table fields, or bulk updates or deletions, the table may get locked.

If a large number of user requests arrive at the same time, this can slow down system responses.

In high-concurrency environments, avoid large UPDATE or DELETE operations. Try to batch them and execute them multiple times.

For operations like ALTER or DROP that modify table structure, avoid performing them during peak hours. It’s better to schedule them during off-peak times, like early in the morning.

Also, tools like Percona Toolkit or gh-ost can be used for online schema changes without locking the table.

14. Not Backing Up Regularly

One of the worst situations in work is when a careless teammate deletes data.

I’ve encountered this multiple times.

They accidentally deleted all the data in the test environment.

Once the data is lost, it’s too late to regret.

It’s recommended to back up data regularly using mysqldump:

mysqldump -u root -p database_name > backup.sql

You can set up a scheduled task to back up data periodically, for example, once a day.

If data is deleted by mistake in the future, you can easily restore it using MySQL commands.

15. Forgetting to Archive Historical Data

Some developers often complain that the historical data in tables is too large, making queries very slow.

In such cases, historical data should be archived.

Users generally care about data from the past month, three months, six months, or one year. They rarely query data older than that.

Therefore, it’s recommended to archive historical data.

In MySQL, only keep the latest data in the main database, while migrating older data to an archive database.