Why Use ETCD

Let’s start by discussing why we use ETCD, particularly in the context of our API gateway, Easegress (source code).

Easegress is an API gateway product developed and open-sourced by us. Unlike Nginx, which primarily serves as a reverse proxy, this gateway can handle many tasks, such as API orchestration, service discovery, resilience design (circuit breaking, rate limiting, retries, etc.), authentication and authorization (JWT, OAuth2, HMAC, etc.), and supports various Cloud Native architectures like microservices, service mesh, and serverless/FaaS integration. It can also be employed for high concurrency, gray releases, full-link stress testing, IoT, and other advanced enterprise solutions. To achieve these goals, in 2017 we realized that existing gateways like Nginx couldn’t evolve into such software, prompting us to rewrite it (others, like Lyft, shared this view, leading them to create Envoy, which is written in C++, while we opted for the more accessible Go language).

The core design of Easegress centers around three main aspects:

The ability to form leaderless clusters without third-party dependencies.

A pipeline-style plugin system for stream processing, similar to Linux command-line piping (supporting Go/WebAssembly).

An embedded data store for cluster control and data sharing.

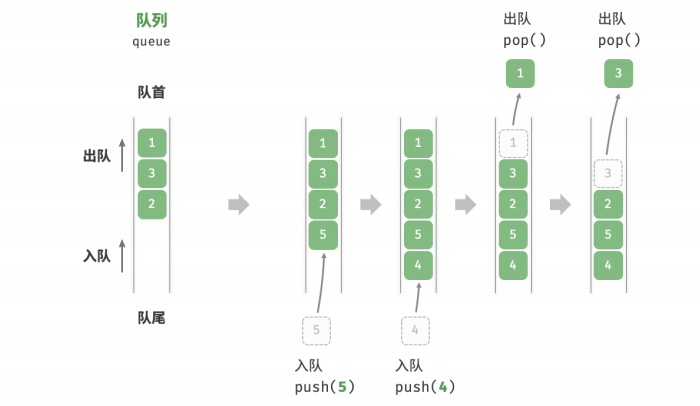

For any distributed system, a robust leader election mechanism based on Paxos/Raft is essential, along with the synchronization of key control/configuration data and shared information across the cluster to ensure consistent behavior. Without this, distributed systems cannot function effectively. This is why components like Zookeeper and ETCD have emerged and gained popularity; it's important to note that Zookeeper is primarily for cluster management rather than data storage.

Zookeeper is a well-known open-source tool used in production by many companies, including for some open-source software like Kafka. However, this introduces dependencies and adds operational complexity. As a result, Kafka's latest versions have integrated leader election algorithms, moving away from external Zookeeper dependencies. ETCD, a key component of the Go community, is also crucial for Kubernetes cluster management. Initially, Easegress used a gossip protocol for state synchronization (which was ambitious, aiming for wide-area network clusters), but we found it too complex and challenging to debug, especially since there were no corresponding use cases for a wide-area API Gateway. Therefore, three years ago, for stability reasons, we switched to an embedded version of ETCD, a design choice that has been maintained to this day.

Easegress stores all configuration information in ETCD, along with monitoring statistics and user-defined data (allowing user plugins to share data not just within a pipeline but across the entire cluster), making it very convenient for user extensions. Extensibility has always been our primary goal, especially for open-source software, which aims to lower the technical barrier for easier expansion. This is one reason why many of Google's open-source projects choose Go, and why Go is increasingly replacing C/C++ for PaaS foundational components.

Background Issue

Now that we've covered why we use ETCD, let’s delve into a specific issue. One user configured over a thousand pipelines in Easegress, causing significant memory consumption—over 10 GB, which persisted for an extended period.

The user reported the following issue:

In Easegress 1.4.1, creating an HTTP object with 1000 pipelines resulted in memory usage of around 400 MB at initialization. After running for 80 minutes, it reached 2 GB, and after 200 minutes, it peaked at 4 GB, despite no requests being made to Easegress during this time.

Typically, even with numerous APIs, it's not common to configure such a large number of processing pipelines. It’s more reasonable to group related APIs into a single pipeline, akin to Nginx's location configuration, which usually doesn’t require excessive entries. However, in this case, the user configured over a thousand pipelines, which was unprecedented, likely aiming for more granular control.

Upon investigation, we found that the memory usage predominantly stemmed from ETCD. This was unexpected, as the data we were storing in ETCD was minimal, not exceeding 10 MB. We began to suspect a memory leak in ETCD and discovered that versions 3.2 and 3.3 had known memory leak issues, which had since been resolved. However, Easegress was using the latest version 3.5, and generally, memory leaks shouldn't result in such high usage. This led us to consider that we might have misused ETCD. To ascertain this, we committed to thoroughly reviewing ETCD’s design.

After spending about two days studying ETCD's design, I identified several memory-consuming aspects, which are notably expensive. I share these insights to help others avoid similar pitfalls.

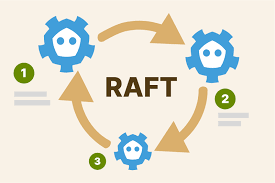

First and foremost is the RaftLog. ETCD uses Raft Logs primarily to assist followers in synchronizing data. This log’s underlying implementation relies on memory rather than files, and it retains at least 5000 of the most recent requests. If a key is large, this results in substantial memory overhead. For instance, continually updating a 1 MB key, even if it’s the same key, would lead to 5000 logs equating to 5 GB of memory consumption. This issue has been raised in ETCD’s issue list (#12548) but seems unresolved. The 5000 entry limit is hardcoded and cannot be modified (refer to the DefaultSnapshotCatchUpEntries related source code).

// DefaultSnapshotCatchUpEntries is the number of entries for a slow follower // to catch-up after compacting the raft storage entries. // We expect the follower has a millisecond level latency with the leader. // The max throughput is around 10K. Keep a 5K entries is enough for helping // follower to catch up. DefaultSnapshotCatchUpEntries uint64 = 5000

Moreover, we noticed that the official ETCD team had previously reduced this default value from 10000 to 5000, likely recognizing that 10000 consumed excessive memory while still wanting to ensure followers could keep up, resulting in the current 5000 limit. Personally, I believe there are better approaches than storing everything in memory.

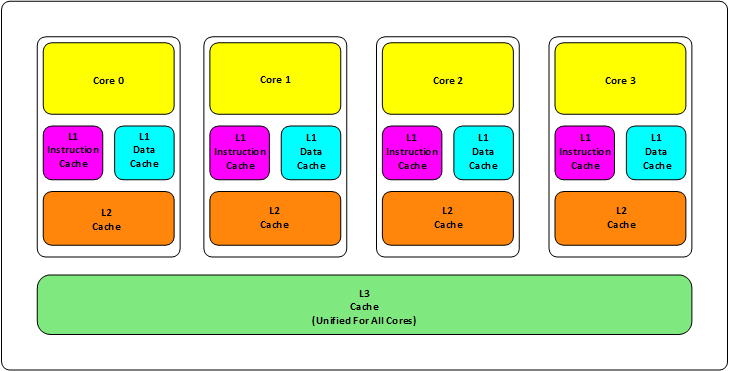

Additionally, several other factors can lead to increased memory consumption in ETCD:

Indexes: Each key-value pair in ETCD has a B-tree index in memory. The memory cost depends on the length of the key and the historical versioning, increasing the B-tree's memory requirements.

Mmap: ETCD employs mmap, an ancient Unix technique for file mapping, which maps its boltdb memory into virtual memory. Therefore, a larger database size results in increased memory consumption.

Watchers: Having numerous watchers and connections can also significantly impact memory usage.

(Evidently, ETCD adopts these strategies for performance considerations.)

In Easegress, the issue primarily stemmed from the Raft Log. We didn’t believe the other three factors were relevant to the user's issue. For indexes and mmap, using ETCD's compact and defrag (compression and defragmentation) should mitigate memory usage, but these weren’t likely the core reasons in this case.

The user had about 1000 pipelines, and Easegress performs statistical analysis on each (e.g., M1, M5, M15, P99, P90, P50). Each statistic may consume 1-2 KB, but Easegress combines the statistics from these 1000 pipelines into a single key. This merging led to an average key size of 2 MB, resulting in the 5000 in-memory Raft Logs consuming 10 GB of memory. Previously, there hadn't been a scenario with so many pipelines, so this memory issue hadn’t surfaced.

Ultimately, our solution was straightforward: we revised our strategy to avoid writing excessively large values. Although we previously stored everything in a single key, we split this large key into multiple smaller keys. Consequently, the actual data stored remained unchanged, but the size of each Raft Log entry decreased. Instead of 5000 entries of 2 MB (10 GB), we now had 5000 entries of 1 KB (500 MB), effectively resolving the problem. The related PR can be found here: PR#542.

Conclusion

To effectively use ETCD, consider the following practices:

Avoid large keys and values, as they consume significant memory via Raft Logs and multi-version B-tree indexing.

Prevent excessive database sizes and use compacting and defragmentation to reduce memory usage.

Limit the number of Watch Clients and Watches, as this can also incur considerable overhead.

Finally, strive to use the latest versions of both Go and ETCD to minimize memory-related issues. For instance, Go 1.12 introduced the MADV_FREE memory reclamation mechanism, which changed to MADV_DONTNEED in 1.16. The latter immediately reclaims memory, resulting in observable RSS changes, while the former retains memory until more is needed. ETCD 3.4 was compiled with Go 1.12, which can lead to issues.