To provide some context, EaseProbe is a lightweight, standalone tool for health-checking services. It supports HTTP, TCP, shell, SSH, TLS, hosts, and various middleware checks. It can send notifications directly to mainstream messaging platforms like Slack, Telegram, Discord, Email, and Teams. It's very user-friendly, and users generally have positive feedback. 😏

This health-check tool must establish a new network connection from scratch each time it performs a check. This means conducting a fresh DNS query, building a TCP connection, communicating, and then closing the connection. We don’t set TCP’s KeepAlive to reuse connections because the tool needs to check not only the remote service but also the entire network status. Therefore, each check needs to start anew to capture the whole link status.

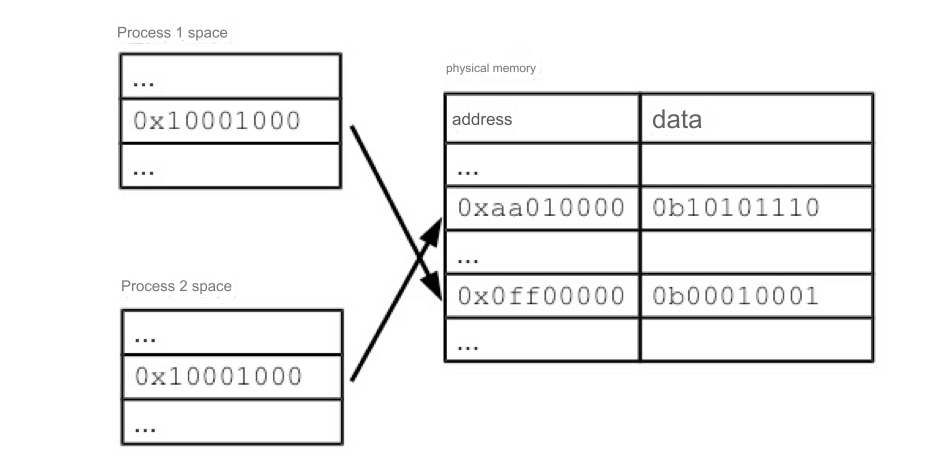

However, this constant opening and closing of connections leaves TCP connections in the TIME_WAIT state on the probing end. According to TCP's state machine, a connection in TIME_WAIT must wait for twice the Maximum Segment Lifetime (2MSL) before it is released. Until the system recycles these connections, they occupy system resources, primarily two: file descriptors (which can be adjusted) and port numbers (which cannot be adjusted). As a client initiating requests, you theoretically only have 64K port numbers available for the same IP (in practice, the system defaults to about 28K, ranging from 32,768 to 60,999). If too many connections accumulate in TIME_WAIT, new TCP connections can no longer be established, and the resulting resource exhaustion may cause the entire application or system to malfunction.

For instance, if we probe 10,000 nodes every 10 seconds, and if the TIME_WAIT timeout is 120 seconds, then after the 60th second, we might run out of ports for a specific IP, potentially leading to system issues.
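The arithmetic behind this estimate can be sketched in a few lines of Go. This is only a back-of-the-envelope model: it assumes, as the paragraph above does, that every probe draws from one shared pool of ports on the source IP, and the function name `secondsUntilExhaustion` is made up for illustration.

```go
package main

import "fmt"

// secondsUntilExhaustion estimates how long a prober can run before every
// port in the pool is parked in TIME_WAIT. It reports exhausted=false when
// ports are released at least as fast as they are consumed.
// Simplifying assumption: one shared port pool per source IP.
func secondsUntilExhaustion(ports, connsPerSecond, timeWaitSeconds float64) (seconds float64, exhausted bool) {
	// In steady state, connsPerSecond*timeWaitSeconds sockets sit in TIME_WAIT.
	if connsPerSecond*timeWaitSeconds <= ports {
		return 0, false
	}
	return ports / connsPerSecond, true
}

func main() {
	rate := 10000.0 / 10.0 // 10,000 probes every 10 seconds

	// Theoretical pool of roughly 64K ports.
	s, _ := secondsUntilExhaustion(65535, rate, 120)
	fmt.Printf("64K pool: about %.0f seconds\n", s)

	// Linux default ephemeral range 32768-60999.
	s, _ = secondsUntilExhaustion(60999-32768+1, rate, 120)
	fmt.Printf("default range: about %.0f seconds\n", s)
}
```

With the theoretical 64K pool the estimate is a little over a minute, which matches the figure above; with the default Linux range the pool would drain in roughly half that time.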

Why TIME_WAIT?

Why does TCP need to wait for 2MSL in TIME_WAIT?

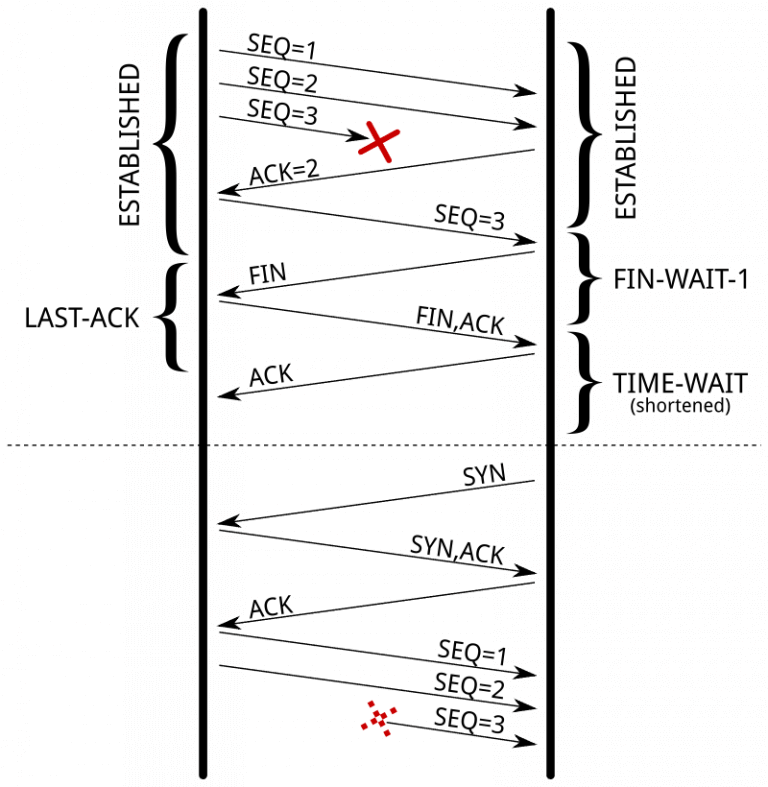

In a previous article, I discussed TCP’s connection termination process, which involves a back-and-forth communication. After entering the TIME_WAIT state, there’s no need to wait for the other end to acknowledge, as it enters a timeout state. This is primarily because, in a network, to confirm that our sent data has been received, we require an acknowledgment (Ack) from the other party. But how does the other party know that its Ack has been received? It would need to send another Ack back, leading to an endless cycle of acknowledgments—a classic dilemma known as the "Two Generals Problem."

To resolve this, we must wait for a maximum timeout to address two issues:

First, to prevent delayed segments from an earlier connection on the same four-tuple (source address, source port, destination address, destination port) from being accepted by a later connection, which would then be terminated immediately and leave the TCP state machine confused. Requiring the sequence number to fall within a certain range mitigates this, but it only reduces the probability of the problem. High-throughput applications are still prone to it, especially with large receive windows. RFC 1337 explains in detail what happens when the TIME-WAIT state is insufficient.

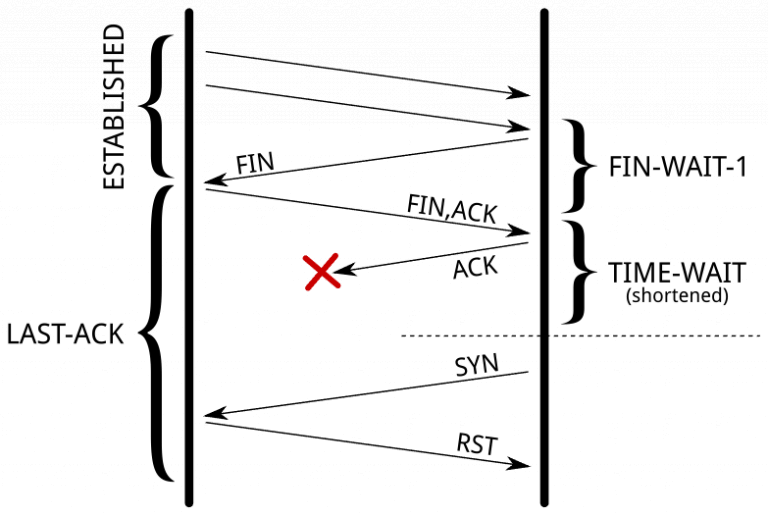

The second purpose is to ensure the remote end has closed the connection. If the last ACK is lost, the remote end remains in the LAST-ACK state. Without the TIME-WAIT state, a new connection could be reopened, but the remote end would still consider the previous connection valid. Upon receiving a SYN segment (with a matching sequence number), it would respond with a RST, leading to an error and aborting the new connection.

The timeout values for TIME_WAIT are as follows:

On macOS: 15 seconds

On Linux: 60 seconds

Solutions

To address the TIME_WAIT issue, common solutions include:

Reducing the TIME_WAIT timeout slightly to allow for quicker recovery of TCP port numbers. However, this cannot be too short, or it may still run out under high traffic conditions.

Setting tcp_tw_reuse. RFC 1323 proposes a set of TCP extensions to enhance performance over high-bandwidth paths. It defines a new TCP option with two timestamp fields. If the new timestamp is greater than the previous one recorded, Linux will reuse existing TIME_WAIT connections for outgoing connections. This setting does not benefit inbound connections.

Setting tcp_tw_recycle, which also relies on timestamps but affects both inbound and outbound connections. This setting causes problems in NAT environments, such as when multiple employees share a single public IP. In this scenario, the timestamp condition may prevent devices behind that public IP from connecting for up to a minute, because they don't share the same timestamp clock. It's advisable to disable this option, as it complicates problem detection and diagnosis.

For servers, the above adjustments do not effectively resolve excessive TIME_WAIT states. The real fix is to avoid unnecessary disconnections: enable KeepAlive so that connections are reused, and let the client initiate the close, so that TIME_WAIT ends up on the client while the server only passes through CLOSE_WAIT.

For EaseProbe, which establishes outbound connections, setting tcp_tw_reuse allows for the reuse of TIME_WAIT states, but it still doesn't completely eliminate the TIME_WAIT issue.

A few days later, I recalled a socket parameter I had seen in "UNIX Network Programming" called SO_LINGER. I had never used this setting, which is mainly for delaying closure. When you call the close() function, if there’s still data to send, it waits for a delay to allow for data transmission. However, if you set the delay to 0, the socket discards data and sends a RST to terminate the connection, thus avoiding TIME_WAIT altogether.

This option should never be set on servers, as clients would frequently see TCP connection errors like "connection reset by peer." However, for EaseProbe users, this setting is perfect. After a probe, EaseProbe can reset the connection, avoiding functional issues, server impact, and the troublesome TIME_WAIT problem.

Practical Operations in Go

In the standard Go library, the net.TCPConn has a method SetLinger() that accomplishes this easily:

    conn, _ := net.DialTimeout("tcp", t.Host, t.Timeout())
    if tcpCon, ok := conn.(*net.TCPConn); ok {
        // Linger 0: Close() sends a RST instead of a FIN, so no TIME_WAIT.
        tcpCon.SetLinger(0)
    }

You need to type-assert the net.Conn to *net.TCPConn to call the method.
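To see the effect of SetLinger(0) end to end, here is a minimal, self-contained sketch that runs entirely on loopback. It is not EaseProbe's code: the helper names `closeWithReset` and `peerSawReset` are made up for illustration, and the check for `syscall.ECONNRESET` assumes a Unix-like system.

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"syscall"
	"time"
)

// closeWithReset sets SO_LINGER to 0 and closes the connection, so the
// kernel sends a RST instead of a FIN and the socket skips TIME_WAIT.
func closeWithReset(conn net.Conn) error {
	if tcpConn, ok := conn.(*net.TCPConn); ok {
		if err := tcpConn.SetLinger(0); err != nil {
			conn.Close()
			return err
		}
	}
	return conn.Close()
}

// peerSawReset starts a throwaway listener on loopback, connects to it,
// closes the client side with linger 0, and reports whether the server
// side's Read failed with "connection reset by peer".
func peerSawReset() (bool, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return false, err
	}
	defer ln.Close()

	accepted := make(chan struct{})
	readErr := make(chan error, 1)
	go func() {
		c, err := ln.Accept()
		if err != nil {
			readErr <- err
			close(accepted)
			return
		}
		defer c.Close()
		close(accepted) // the client may now reset the connection
		_, err = c.Read(make([]byte, 1))
		readErr <- err
	}()

	conn, err := net.DialTimeout("tcp", ln.Addr().String(), time.Second)
	if err != nil {
		return false, err
	}
	<-accepted
	if err := closeWithReset(conn); err != nil {
		return false, err
	}

	select {
	case err := <-readErr:
		return errors.Is(err, syscall.ECONNRESET), nil
	case <-time.After(2 * time.Second):
		return false, errors.New("timed out waiting for the server side")
	}
}

func main() {
	saw, err := peerSawReset()
	if err != nil {
		fmt.Println("demo failed:", err)
		return
	}
	fmt.Println("server observed connection reset:", saw)
}
```

The server-side error is exactly the "connection reset by peer" mentioned earlier, which is why this trick suits a prober but not a server.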

However, it's a bit tricky for the HTTP object in Go's standard library because the underlying connection objects are wrapped as private variables, making them inaccessible from outside. The article "How to Set Go net/http Socket Options – setsockopt() example" provides the following method:

    dialer := &net.Dialer{
        Control: func(network, address string, conn syscall.RawConn) error {
            var operr error
            if err := conn.Control(func(fd uintptr) {
                // TCP_QUICKACK is a TCP-level option, so the level is IPPROTO_TCP.
                operr = syscall.SetsockoptInt(int(fd), unix.IPPROTO_TCP, unix.TCP_QUICKACK, 1)
            }); err != nil {
                return err
            }
            return operr
        },
    }

    client := &http.Client{
        Transport: &http.Transport{
            DialContext: dialer.DialContext,
        },
    }

This method is quite low-level and requires using system calls like setsockopt. I prefer using TCPConn.SetLinger(0) to avoid breaking encapsulation.

After reading the source code of the Go HTTP package, I used the following method:

    client := &http.Client{
        Timeout: h.Timeout(),
        Transport: &http.Transport{
            TLSClientConfig: tls,
            // Every probe must open a fresh connection.
            DisableKeepAlives: true,
            DialContext: func(ctx context.Context, network, addr string) (net.Conn, error) {
                d := net.Dialer{Timeout: h.Timeout()}
                conn, err := d.DialContext(ctx, network, addr)
                if err != nil {
                    return nil, err
                }
                // Linger 0: closing the connection sends a RST, so no TIME_WAIT.
                if tcpConn, ok := conn.(*net.TCPConn); ok {
                    tcpConn.SetLinger(0)
                }
                return conn, nil
            },
        },
    }

I then gathered a list of the top 1 million domains globally and launched a server on AWS, using a script to extract the top 10K and 20K websites and probe them at intervals of 5, 10, 30, and 60 seconds. Cloudflare's 1.1.1.1 DNS occasionally blocked me, but the final test results were excellent: there were no TIME_WAIT connections. Related testing methods, data, and reports can be found in the Benchmark Report.
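One way to check for leftover TIME_WAIT sockets yourself is to count them in Linux's /proc/net/tcp, where the fourth column holds the socket state in hex and 06 means TIME_WAIT. The sketch below is not the method used in the Benchmark Report; the function name `countTimeWait` is made up for illustration, and it only covers IPv4 sockets on Linux (IPv6 sockets are listed in /proc/net/tcp6).

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// countTimeWait counts TIME_WAIT sockets in text formatted like
// /proc/net/tcp. The fourth whitespace-separated column ("st") is the
// TCP state in hex; 06 is TIME_WAIT. The header row never matches "06".
func countTimeWait(procNetTCP string) int {
	count := 0
	for _, line := range strings.Split(procNetTCP, "\n") {
		fields := strings.Fields(line)
		if len(fields) > 3 && fields[3] == "06" {
			count++
		}
	}
	return count
}

func main() {
	data, err := os.ReadFile("/proc/net/tcp")
	if err != nil {
		fmt.Println("cannot read /proc/net/tcp (Linux only):", err)
		return
	}
	fmt.Println("TIME_WAIT sockets (IPv4):", countTimeWait(string(data)))
}
```

Running this while the prober is active, with and without SetLinger(0), gives a quick view of whether TIME_WAIT sockets are piling up.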

Summary

Here are a few key takeaways:

TIME_WAIT is a mechanism for TCP protocol integrity; despite its drawbacks, this design is crucial and should not be compromised.

Never use tcp_tw_recycle; it is highly destructive.

Servers should never use SO_LINGER(0), and tcp_tw_reuse has limited significance for servers since it only benefits outbound traffic.

On the server side, avoid initiating disconnections; configure KeepAlive to reuse connections and let clients handle disconnections.

On the client side, using tcp_tw_reuse and SO_LINGER(0) can be beneficial.

Finally, I strongly recommend reading the article "Coping with the TCP TIME-WAIT state on busy Linux servers."