
Song Ge has previously shared several articles on Nginx configuration, most of which looked at it from a global perspective. Today, we’ll focus solely on configuring Nginx for load balancing.

1. What is Load Balancing?

Load balancing is a networking technique that distributes network traffic or requests across multiple servers in order to optimize resource use, maximize throughput, minimize response times, and prevent any single server from being overloaded. Its goal is to keep web applications highly available and reliable while improving the user experience.

Common scenarios for using load balancing include:

Server load balancing: Distributing network traffic across multiple servers to prevent any one server from being overwhelmed by too many requests.

Data center load balancing: Distributing traffic between different locations within a data center or across multiple data centers to optimize resource utilization and improve reliability.

Cloud service load balancing: In cloud environments, load balancing distributes traffic across multiple virtual machines or container services.

Nginx is a common choice for load balancing, but for completeness it is worth mentioning other approaches, which differ both in how they are deployed and in the network layer at which they operate:

DNS load balancing: Distributing traffic by resolving a domain name into multiple IP addresses and directing traffic to different servers.

Hardware load balancing: Using specialized hardware devices (e.g., F5 BIG-IP) to distribute traffic.

Software load balancing: Using software solutions (e.g., Nginx, HAProxy) to perform load balancing.

Application-layer load balancing: Distributing requests to different servers at the application layer (e.g., HTTP/HTTPS).

Transport-layer load balancing: Distributing connections at the transport layer (e.g., TCP/UDP).

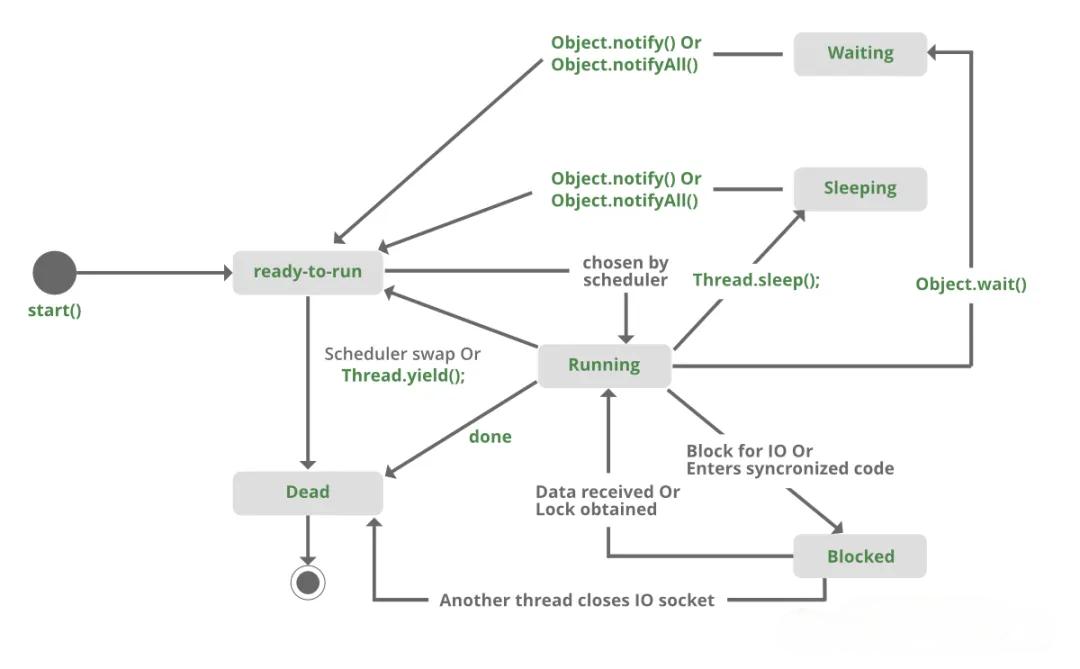

2. Common Load Balancing Algorithms

Whichever tool you use and wherever you implement load balancing, the most common algorithms are:

Round Robin: Distributes requests in a rotating sequence to each server.

Least Connections: Distributes requests to the server with the fewest active connections.

Weighted Round Robin: Distributes requests based on server performance weights.

Weighted Least Connections: Distributes requests based on server performance weights and the current number of connections.

IP Hash: Distributes requests based on a hash of the client’s IP address, so requests from the same IP go to the same server as long as the set of available servers does not change.
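The difference between plain and weighted least connections is easiest to see in code. Below is a minimal Python sketch of both selection steps, assuming the balancer already knows each server’s weight and its current number of active connections. The `Server` class, the function names, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    weight: int = 1  # relative capacity (hypothetical values)
    active: int = 0  # current number of active connections


def pick_least_conn(servers: list[Server]) -> Server:
    """Plain least connections: fewest active connections, first one on a tie."""
    return min(servers, key=lambda s: s.active)


def pick_weighted_least_conn(servers: list[Server]) -> Server:
    """Weighted least connections: lowest active/weight ratio.

    Ratios are compared by cross-multiplying
    (a.active * b.weight < b.active * a.weight) to avoid floating-point
    division. On a tie, the server listed first wins.
    """
    best = servers[0]
    for s in servers[1:]:
        if s.active * best.weight < best.active * s.weight:
            best = s
    return best


pool = [
    Server("backend1", weight=4, active=4),  # 4 / 4 = 1.0
    Server("backend2", weight=1, active=2),  # 2 / 1 = 2.0
    Server("backend3", weight=2, active=3),  # 3 / 2 = 1.5
]
print(pick_least_conn(pool).name)           # backend2
print(pick_weighted_least_conn(pool).name)  # backend1
```

Here backend1 has the most connections but also four times the capacity of backend2, so the weighted variant still prefers it, while the plain variant picks backend2.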

3. Nginx Configuration

3.1 Round Robin

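Round Robin is the default load balancing strategy in Nginx. It hands client requests to the backend servers one after another, in turn. If a backend server fails, Nginx temporarily marks it as unavailable (by default after one failed attempt, for 10 seconds, controlled by the max_fails and fail_timeout parameters covered in section 3.5) and then tries it again.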

Example configuration:

```nginx
upstream backend {
    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com;
}

server {
    ...
    location / {
        proxy_pass http://backend;
    }
    ...
}
```

In the configuration above, Nginx will assign requests sequentially to backend1, backend2, and backend3 in a rotating manner.
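The rotation itself is simple. The following minimal Python sketch mimics it and, as a simplifying assumption, skips any server placed in an `unavailable` set, standing in for a backend that Nginx has temporarily taken out of rotation. The class and server names are hypothetical; Nginx’s real implementation also handles weights and failure counters.

```python
class RoundRobin:
    """Plain round robin that skips servers currently marked unavailable."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = servers
        self.index = 0                      # position of the next candidate
        self.unavailable: set[str] = set()  # servers to skip for now

    def next_server(self) -> str:
        # Look at each server at most once per call.
        for _ in range(len(self.servers)):
            server = self.servers[self.index]
            self.index = (self.index + 1) % len(self.servers)
            if server not in self.unavailable:
                return server
        raise RuntimeError("no upstream servers available")


rr = RoundRobin(["backend1", "backend2", "backend3"])
print([rr.next_server() for _ in range(4)])
# ['backend1', 'backend2', 'backend3', 'backend1']

rr.unavailable.add("backend2")  # pretend backend2 just failed
print([rr.next_server() for _ in range(3)])
# ['backend3', 'backend1', 'backend3']
```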

3.2 Weighted Round Robin

The Weighted Round Robin strategy lets you assign different weights to backend servers, and servers with higher weights receive proportionally more requests. Weights are typically chosen according to each server’s hardware and processing capacity.

Example configuration:

```nginx
http {
    upstream myapp1 {
        server backend1.example.com weight=5;
        server backend2.example.com;          # weight defaults to 1
        server backend3.example.com down;     # never receives requests
        server backup1.example.com backup;    # used only if the others are unavailable
    }

    server {
        listen 80;

        location / {
            proxy_pass http://myapp1;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
        }
    }
}
```

In the above configuration, backend1 has weight=5 and backend2 uses the default weight of 1, so backend1 receives five out of every six requests. The down parameter marks backend3 as permanently unavailable, and the backup parameter marks backup1 as a backup server that receives requests only when the primary servers are unavailable.
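Nginx implements weighted round robin with a "smooth" variant that interleaves servers instead of sending each one its whole share in a single burst. The Python sketch below is modeled on that idea and on the down/backup behavior described above, under simplifying assumptions: it ignores failure counters and the runtime weight adjustments Nginx makes after errors, and the `Peer` class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Peer:
    name: str
    weight: int = 1
    down: bool = False  # like the `down` parameter: never selected
    current: int = 0    # running "current weight" kept between selections


def smooth_wrr(peers: list[Peer]) -> Optional[Peer]:
    """One selection step of smooth weighted round robin.

    Each eligible peer's current weight grows by its configured weight; the
    peer with the highest current weight is chosen and then lowered by the
    total weight, so heavy peers win often without long bursts.
    """
    best: Optional[Peer] = None
    total = 0
    for p in peers:
        if p.down:
            continue
        p.current += p.weight
        total += p.weight
        if best is None or p.current > best.current:
            best = p
    if best is not None:
        best.current -= total
    return best


def choose(primary: list[Peer], backup: list[Peer]) -> str:
    # Backup peers are used only when no primary peer is eligible.
    peer = smooth_wrr(primary)
    if peer is None:
        peer = smooth_wrr(backup)
    if peer is None:
        raise RuntimeError("no upstream servers available")
    return peer.name


primary = [Peer("backend1", weight=5), Peer("backend2"), Peer("backend3", down=True)]
backup = [Peer("backup1")]
print([choose(primary, backup) for _ in range(6)])
# ['backend1', 'backend1', 'backend1', 'backend2', 'backend1', 'backend1']
```

With backend3 marked down, only backend1 (weight 5) and backend2 (weight 1) take part, so backend1 gets five of every six picks, and backend2’s single pick lands in the middle of the cycle rather than at the end.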

3.3 IP Hash

The IP Hash strategy hashes the client’s IP address to decide which backend server handles the request. It is useful when session persistence is required: because requests from the same client keep going to the same backend server, session data stored on that server is not lost between requests.

Example configuration:

```nginx
upstream backend {
    ip_hash;
    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com;
}

server {
    ...
    location / {
        proxy_pass http://backend;
    }
    ...
}
```

In this configuration, Nginx hashes each client’s IP address and sends that client’s requests to the same backend server for as long as the server is available.
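A minimal Python sketch of the idea, for IPv4 only. One detail comes from the Nginx documentation: for IPv4 clients, ip_hash uses only the first three octets of the address as the hash key. Everything else is an assumption: CRC32 stands in for Nginx’s own hash function, and the fallback for a server marked down is simplified to trying the next server in the list. Keeping a down server in the list (instead of deleting it) leaves every other client’s mapping unchanged, which is why the Nginx documentation recommends marking a server `down` rather than removing it from an ip_hash upstream.

```python
import zlib


def ip_hash_key(client_ip: str) -> bytes:
    """Key on the first three octets of an IPv4 address, so every
    client in the same /24 network maps to the same server."""
    return ".".join(client_ip.split(".")[:3]).encode()


def pick_by_ip(client_ip: str, servers: list[str],
               down: frozenset[str] = frozenset()) -> str:
    """Map a client to a server; if that server is down, probe the next one.

    zlib.crc32 is a stand-in hash, and linear probing is a simplified
    fallback; neither is what Nginx does internally.
    """
    start = zlib.crc32(ip_hash_key(client_ip)) % len(servers)
    for offset in range(len(servers)):
        candidate = servers[(start + offset) % len(servers)]
        if candidate not in down:
            return candidate
    raise RuntimeError("no upstream servers available")


servers = ["backend1", "backend2", "backend3"]
print(pick_by_ip("203.0.113.10", servers) == pick_by_ip("203.0.113.250", servers))
# True: both addresses share the key "203.0.113"
```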

3.4 Least Connections

The Least Connections strategy assigns each new request to the backend server with the fewest active connections. This spreads load more evenly when requests take very different amounts of time to process, and it avoids piling new work onto a server that is already busy.

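Note: Least Connections is built into Nginx through the least_conn directive, which works in both the http and stream upstream modules and also takes server weights into account. In the http context it is configured the same way as the other methods above; for TCP/UDP proxying, the upstream block must be defined inside the stream block.

Example configuration for the Stream module:

```nginx
stream {
    upstream backend {
        least_conn;
        # Stream upstream servers need an explicit port (12345 is only an example).
        server backend1.example.com:12345;
        server backend2.example.com:12345;
        server backend3.example.com:12345;
    }

    server {
        listen 12345;
        proxy_pass backend;  # no http:// scheme in the stream module
    }
}
```

In the above configuration, Nginx sends each new connection to the backend server with the fewest active connections; if several servers are tied, it chooses among them using weighted round robin. The stream block belongs at the top level of nginx.conf, not inside the http block.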
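The bookkeeping behind least connections can be sketched in a few lines of Python. This is a simplified illustration, not Nginx’s code: the class name is hypothetical, weights are ignored, and ties are broken by list order, whereas Nginx breaks ties between equally loaded servers with weighted round robin.

```python
class LeastConnections:
    """Send each new connection to the server with the fewest active ones."""

    def __init__(self, servers: list[str]) -> None:
        # Dicts keep insertion order, so ties go to the earliest server.
        self.active = {s: 0 for s in servers}

    def acquire(self) -> str:
        """Pick a server for a new connection and count it as active."""
        server = min(self.active, key=self.active.__getitem__)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self.active[server] -= 1


lb = LeastConnections(["backend1", "backend2", "backend3"])
conns = [lb.acquire() for _ in range(3)]  # one connection per server
lb.release("backend2")                    # backend2's connection closes
print(lb.acquire())                       # backend2
```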

3.5 Health Checks

In Nginx, you can configure load balancing health checks using either active health checks or passive health checks.

Active Health Checks: These periodically send requests to upstream servers to check their health. If a server fails to respond correctly, Nginx will consider it unhealthy and stop sending traffic to it until it recovers.

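Example configuration for active health checks. Note that the check, check_http_send, and check_http_expect_alive directives are not part of open-source Nginx: they come from the third-party nginx_upstream_check_module (also included in Tengine), so Nginx must be built with that module. NGINX Plus provides its own health_check directive instead.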

```nginx
http {
    upstream backend {
        server backend1.example.com;
        server backend2.example.com;

        # Directives from the third-party nginx_upstream_check_module.
        check interval=3000 rise=2 fall=5 timeout=1000 type=http;
        check_http_send "HEAD /health HTTP/1.1\r\nHost: localhost\r\nConnection: close\r\n\r\n";
        check_http_expect_alive http_2xx http_3xx;
    }

    server {
        location / {
            proxy_pass http://backend;
        }
    }
}
```

In this configuration, Nginx sends a HEAD request to the /health endpoint every 3 seconds (interval=3000, in milliseconds) and waits up to 1 second for a reply (timeout=1000). A server that returns a 2xx or 3xx status code twice in a row (rise=2) is considered healthy; a server that fails five checks in a row (fall=5) is considered unhealthy and receives no traffic until it recovers.
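The rise/fall logic is a small state machine. The Python sketch below models it for a single server, assuming probe results are fed in one at a time; it does not send real requests or schedule them every interval milliseconds, and the class name and the starting state (healthy) are assumptions of this sketch.

```python
class ActiveCheckState:
    """Rise/fall bookkeeping for one upstream server (hypothetical helper)."""

    def __init__(self, rise: int = 2, fall: int = 5, healthy: bool = True) -> None:
        self.rise = rise
        self.fall = fall
        self.healthy = healthy  # starting state is an assumption
        self.successes = 0      # consecutive successful probes
        self.failures = 0       # consecutive failed probes (incl. timeouts)

    def record(self, ok: bool) -> bool:
        """Record one probe result and return the resulting health state."""
        if ok:
            self.successes += 1
            self.failures = 0
            if not self.healthy and self.successes >= self.rise:
                self.healthy = True
        else:
            self.failures += 1
            self.successes = 0
            if self.healthy and self.failures >= self.fall:
                self.healthy = False
        return self.healthy


def is_alive(status: int) -> bool:
    # Mirrors `check_http_expect_alive http_2xx http_3xx`.
    return 200 <= status < 400


state = ActiveCheckState(rise=2, fall=5)
for status in [502, 502, 502, 502, 502]:  # five failed probes in a row
    state.record(is_alive(status))
print(state.healthy)                       # False
print(state.record(is_alive(204)))         # False: one success is not enough
print(state.record(is_alive(301)))         # True: second success in a row
```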

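Passive Health Checks: These rely on real client traffic rather than separate probes, and they are built into open-source Nginx. If requests to a backend server fail too often (for example, connection errors, timeouts, or error responses configured as failures), Nginx considers the server unhealthy and stops routing traffic to it for a specified period.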

Example configuration for passive health checks:

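```nginx
http {
    upstream backend {
        server backend1.example.com;  # defaults: max_fails=1 fail_timeout=10s
        server backend2.example.com max_fails=2 fail_timeout=30s;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
            proxy_next_upstream error timeout http_500 http_502 http_503 http_504;
        }
    }
}
```

In this configuration, if two attempts to reach backend2 fail within 30 seconds (max_fails=2, fail_timeout=30s), Nginx marks it as unavailable for the next 30 seconds and then tries it again; backend1 keeps the defaults (max_fails=1, fail_timeout=10s). The proxy_next_upstream directive specifies which conditions cause a request to be retried on a different server. It also determines what counts as a failed attempt: errors and timeouts always count, while the http_500, http_502, http_503, and http_504 responses count only because they are listed here.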
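The max_fails/fail_timeout bookkeeping can be approximated in Python as follows. This is a simplified sketch of the documented behavior, not Nginx’s actual code: the class name is hypothetical, time is passed in explicitly so the example is deterministic, and clearing the failure history on a successful response is a simplifying assumption. The defaults (max_fails=1, fail_timeout=10) and the rule that max_fails=0 disables the accounting follow the Nginx documentation.

```python
class PassiveCheck:
    """Approximate max_fails / fail_timeout accounting for one server."""

    def __init__(self, max_fails: int = 1, fail_timeout: float = 10.0) -> None:
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.failure_times: list[float] = []  # recent failed attempts
        self.disabled_until = 0.0

    def available(self, now: float) -> bool:
        return now >= self.disabled_until

    def record_failure(self, now: float) -> None:
        if self.max_fails == 0:  # zero disables failure accounting
            return
        # Only failures within the last fail_timeout seconds count.
        self.failure_times = [t for t in self.failure_times
                              if now - t < self.fail_timeout]
        self.failure_times.append(now)
        if len(self.failure_times) >= self.max_fails:
            # Take the server out of rotation for fail_timeout seconds.
            self.disabled_until = now + self.fail_timeout
            self.failure_times.clear()

    def record_success(self) -> None:
        self.failure_times.clear()  # simplification: success resets history


backend2 = PassiveCheck(max_fails=2, fail_timeout=30)
backend2.record_failure(now=0.0)
print(backend2.available(now=1.0))   # True: one failure is below max_fails
backend2.record_failure(now=5.0)
print(backend2.available(now=6.0))   # False: two failures within 30 s
print(backend2.available(now=35.0))  # True: 30 s later it is tried again
```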