BG2 Tech Podcast hosts Bill Gurley and Brad Gerstner recently conducted an 85-minute video interview with Microsoft CEO Satya Nadella, covering a wide range of topics:

00:00 Introduction

01:31 Nadella’s Journey to Becoming Microsoft CEO

06:42 Nadella’s Memo to the CEO Council

10:42 Nadella’s Strengths as a CEO

11:34 Advice for CEOs

15:01 Microsoft’s Investment in OpenAI

19:42 The AI Arms Race

23:55 Traditional Search and Consumer AI

28:07 The Future of AI Agents

38:32 Virtually Unlimited Memory Capacity

39:47 The Copilot Approach to AI Applications

50:26 Internal AI Utilization at Microsoft

56:03 CapEx (Capital Expenditure)

01:00:20 Scaling Models and Inference Costs

01:15:15 OpenAI’s Evolving Profit Model

01:18:05 OpenAI’s Next Steps

01:19:43 Open vs. Closed Systems and Safe AI

Interview Video:

https://www.youtube.com/watch?v=9NtsnzRFJ_o&t=2677s

Both interviewers are experienced venture capitalists, and their questions were highly insightful. Nadella responded with candor and wisdom. Below are 12 key takeaways from the interview:

1. Foundry: The AI Application Server

Azure is designed differently from other cloud services. It is built for enterprise workloads, supports extensive data residency requirements, and spans over 60 regions, more than any other provider. Our cloud isn’t built for a single large application but for diverse enterprise workloads. This design will sit at the core of inference demand, which revolves around data and application servers.

Every modern application, including Copilot, is essentially a multi-model application. This shift brings a new type of application server, much like the evolution of mobile and web application servers. Now, we have AI application servers. For us, this is Foundry.

2. Stateful Tools

“‘Conversational answers’ are at the heart of ChatGPT. From branding to product, it’s transforming into a stateful tool. Traditional search, despite having search histories, lacks deeper state management. These AI agents are becoming increasingly stateful.”

(Note: The term “stateful” here emphasizes the evolution of AI tools from stateless systems to those capable of retaining context dynamically, providing smarter and more human-like interactions.)
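
The stateless/stateful distinction in the note above can be illustrated with a minimal sketch. Everything here is hypothetical and only for illustration: `stateless_answer` and `StatefulAgent` are made-up names, not part of ChatGPT, Copilot, or any real API, and the "answers" are placeholder strings rather than model output.

```python
from dataclasses import dataclass, field


def stateless_answer(query: str) -> str:
    """Stateless: each call sees only the current query; nothing carries over."""
    return f"answer to {query!r} (no prior context)"


@dataclass
class StatefulAgent:
    """Hypothetical agent that keeps the conversation history between turns."""

    history: list[str] = field(default_factory=list)

    def ask(self, query: str) -> str:
        earlier_turns = len(self.history)  # context available before this turn
        self.history.append(query)         # remember this turn for the next one
        return f"answer to {query!r} (using {earlier_turns} earlier turn(s))"


agent = StatefulAgent()
first = agent.ask("best laptops for travel")
second = agent.ask("which of those is lightest?")
```

The second question only makes sense if the first one is remembered, which is the property the stateful version retains and the stateless function lacks.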

3. The Collapse of Traditional Search

“The moment queries with commercial intent begin to shift, that’s when the ‘dam breaks’ for traditional search. For now, traditional search survives because commercial intent queries haven’t migrated en masse. When that migration happens, the change will be swift.”

4. The Battle for Browser Dominance

“We have a chance to reclaim browser dominance. We once defeated Netscape but lost to Google’s Chrome, which was a huge regret. Now, with Edge and Copilot, we’re reclaiming market share in an exciting way.”

5. Winning Back Market Share from Google

“I often say Google makes more money on Windows than all of Microsoft’s businesses combined. From a shareholder’s perspective, this is a wake-up call. We’ve lost too much market share, and now we can fight to win some of it back.”

6. Agents Superseding Applications

“If one agent needs to operate in another agent’s space or access its schema, there may be a need for a licensed interface. For instance, when I use Microsoft Copilot, I can access Adobe, SAP, and Dynamics CRM instances through connectors. This eliminates the need to interact directly with SaaS applications, as AI integrates and operates on their data.”

7. Word and Excel as Agents

“With Excel, you might ask, ‘Do we still need Excel?’ But the exciting part is its integration with Python, just like GitHub Copilot. Excel is no longer just a spreadsheet tool; it’s a visualization tool for data analysts. Copilot generates and executes plans within Excel, turning it into a scratchpad for analysis.

Our approach positions Copilot as the UI layer for AI, integrating all agents, including specialized tools like Excel and Word. Excel is an agent. Word is an agent. They are task-specific ‘canvases’ designed for particular functions. Whether it’s legal documents or data analysis, Copilot collaborates seamlessly. It’s a new paradigm for work and workflows.”

8. AI as the Lean Tool for Knowledge Work

“I’ve been studying how industrial companies use lean practices to drive growth. We’re learning to redesign business processes, improve efficiency, and automate workflows. This reminds me of the ‘business process re-engineering’ trend in the 1990s, which is now making a comeback in a modern AI-driven context.”

9. Leveraging AI for Operational Efficiency

“Our goal is to use AI to achieve operational leverage. I foresee our total headcount costs declining while per capita costs rise. Simultaneously, researchers will have more GPUs at their disposal. That’s how I envision the future.”

10. The Power of Scaling Laws

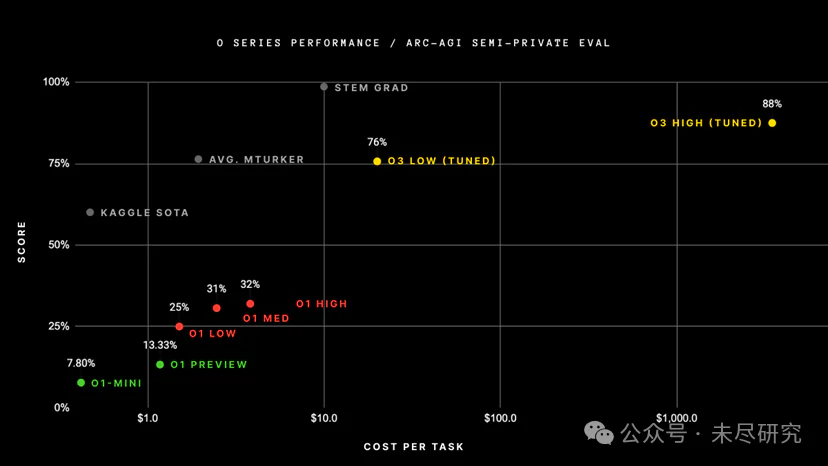

“As cluster sizes grow, distributed computing becomes increasingly complex. That’s one challenge. But I still believe training models is far from over. OpenAI’s advancements in o1, such as chain-of-thought reasoning and auto-grading, are exciting. These approaches enhance model capabilities by using test-time compute during the inference phase and feeding the results back into pre-training.”

11. Microsoft Won’t Compete in the Largest Model Training

“Our partnership with OpenAI has centralized our compute resources. There’s no need to replicate the same set of models because we already own the intellectual property. Our strategy is to focus on fine-tuning and validating models while developing specific weights and categories for different use cases.”

12. Chip Supply Is No Longer an Issue

“In 2024, we faced chip supply constraints. However, as we stated externally, we are optimistic about the first half of FY2025 and anticipate even better conditions in 2026 and beyond.”
