Improving the emotional intelligence (EQ) of AI models remains a significant challenge with many open problems. Consumer-facing AI interaction is clearly where the industry is heading, but striking a balance between empathy and responsibility in large language models (LLMs) is even more critical.

Six years have passed since GPT was first launched.

The Progress of LLMs So Far

LLMs have developed steadily, becoming more realistic, agile, and resourceful, and their information retrieval capabilities are now nearly flawless. But how well do they perform as tools for "information delivery"?

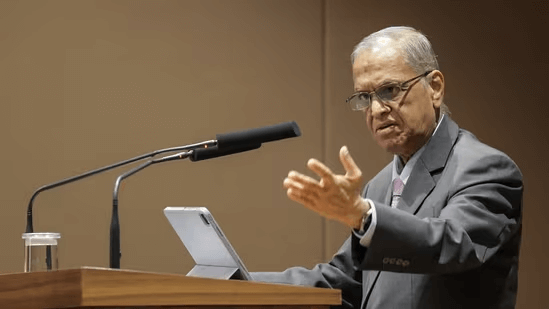

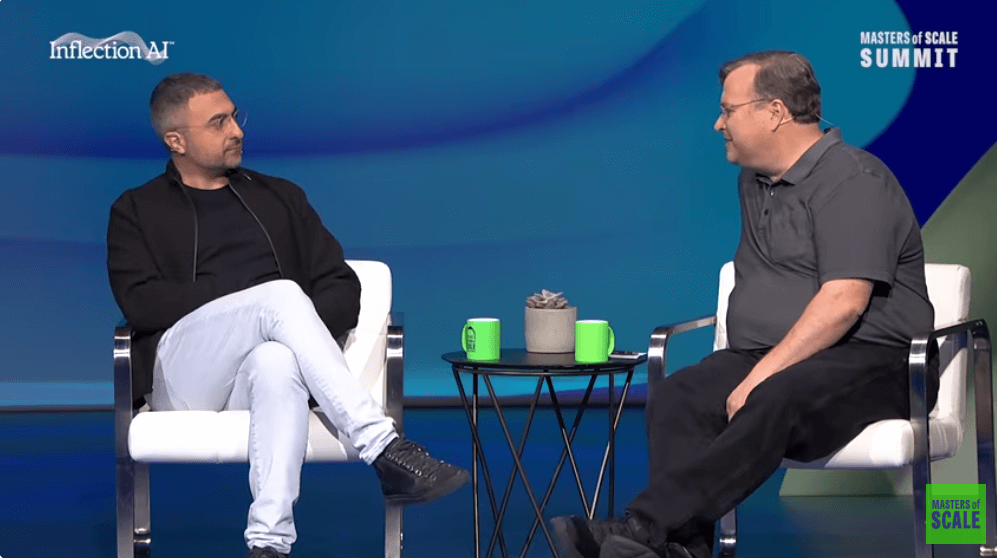

In a recent interview with Reid Hoffman, Mustafa Suleyman, CEO of Microsoft AI, pointed out:

"AI researchers often tend to overlook the importance of information delivery tools."

Suleyman predicted that, as the ability to understand and respond to human emotions becomes a key differentiator, AI companies will increasingly compete on the emotional intelligence of their advanced models.

1. Consumer-Focused AI: Emotional Intelligence Is Key

Suleyman emphasized that consumers value more than a model's ability to deliver objective, encyclopedic information: they also care about its tone, its emotional intelligence, and its ability to reflect a user's unique language style.

For instance, OpenAI's focus this year has been on human-like voice interaction, as seen in GPT-4o's Advanced Voice Mode. Similarly, when Google's NotebookLM introduced "Deep Dive," its polished text-to-podcast feature, the AI community quickly embraced it as a genuinely useful tool rather than a gimmick.

Computer scientist Andrej Karpathy praised this tool and even created a 10-episode podcast series using NotebookLM. He commented:

“NotebookLM's podcast generation feature might have touched on an entirely new and captivating product format for LLMs. It feels reminiscent of ChatGPT. Perhaps I'm overreacting.”

2. New Direction: Exploring the "EQ Dynamics" of Large Models

It's not only the industry giants that are making strides. Earlier this year, Hume AI, known for its focus on "emotional intelligence AI," raised $50 million in a Series B round led by EQT Ventures. In September, the company launched EVI 2, its latest model, which adapts to user preferences through specialized EQ training.

Earlier research has also examined LLMs' emotional intelligence. EmoBench, a popular benchmark, evaluates models on "emotion understanding and application," and among the models it tested, OpenAI's GPT-4 came closest to human performance. However, the models evaluated have since been superseded.

A recent study used a Python library to measure the "expressiveness" of LLMs. In one experiment, researchers asked models to write poems expressing emotions such as regret, joy, and remorse. The LLMs performed reasonably well overall, but they often confused emotions with similar meanings.

“For instance, GPT models often express approval when prompted to express disapproval. This highlights a significant issue where conflicting emotions are frequently misunderstood,” the researchers noted.

When asked to write poetry in the styles of 34 different poets, GPT-4 showed the highest expressiveness. However, the models had trouble recognizing female poets, which may point to a degree of gender bias.

In routine conversations, expressiveness declined over time; even so, Llama 3 performed best in this setting despite its limitations. The researchers also found that providing additional context about the subject, expertise, or persona improved the LLMs' performance significantly.

“For professional cues, LLMs exhibit consistent and steadily growing expressiveness. In contrast, for emotional cues, expressiveness varies more, with accuracy fluctuating as models adjust their responses to shifting emotional contexts,” the researchers added.

3. Anthropic's Current Goal: Enhancing Model EQ

Anthropic regards EQ as a crucial factor in improving its model, Claude. In an interview with Lex Fridman, Amanda Askell, a philosopher and technical lead at Anthropic, explained:

“My main idea has always been to try to make Claude behave the way you would ideally want anyone to behave if they were in Claude's position.”

She elaborated:

“Imagine taking a person who knows they will interact with potentially millions of people, so that their words can have a significant impact. You would want them to behave well in this deeply meaningful sense.”

With its updated models, Anthropic aims to enable Claude to respond with nuanced emotion and expression. This includes training the model to recognize when to be empathetic, humorous, respectful of differing opinions, or assertive.

Askell also addressed the tendency of LLMs to agree with users, even when the users are wrong, out of a desire to comply:

“If Claude is genuinely convinced something isn't true, Claude should say, ‘I don't think that's accurate. Perhaps you have more up-to-date information.’”

She also expressed a desire to enhance Claude’s ability to ask relevant follow-up questions during conversations. Overall, Anthropic aims to imbue Claude with an authentic personality that neither defers excessively nor dominates interactions with humans.

While debate continues over whether LLMs are approaching the limits of scaling, improving their emotional intelligence remains a viable path forward.

Potential Risks and Ethical Considerations

Earlier this year, OpenAI published the GPT-4o “system card,” which warned that users could become excessively attached to emotionally intelligent AI.

“Using AI models for human-like social interaction could have externalities impacting human-to-human interactions. For instance, users might form social relationships with AI, reducing their need for interpersonal interactions. While this might benefit lonely individuals, it could negatively affect healthy human relationships,” OpenAI stated in the report.

Tragically, an earlier report described the case of a 14-year-old boy who developed a deep emotional attachment to a chatbot character on Character.AI, an attachment reported to have contributed to his suicide.

This case underscores how much work remains in improving the emotional intelligence of AI models. Consumer-facing AI interaction technology is clearly the direction the industry is taking, but achieving a balance between empathy and responsibility in large models is even more critical.