
Why Are Containerized Microservice Operations a New Problem?

Before containerization, services were usually deployed on physical or virtual machines, with dedicated platforms for operations. For instance, in Weibo's operations platform, JPool, when a service needed to be deployed, JPool would determine which physical or virtual machine to use based on the service's cluster (usually corresponding to a business line) and service pool (a business line may have multiple service pools). Then, tools like Puppet would gradually release the latest application code to these machines, completing the deployment.

However, things have changed with containerization. Instead of physical or virtual machines, operations now deal with Docker containers, which may not have fixed IPs. How does one deploy services in such an environment? This is where a new type of operations platform, specifically for containers, is needed—one that can create and manage the lifecycle of containers on existing physical or virtual machines. This is often referred to as a "container operations platform."

In my experience, a container operations platform typically comprises the following key components: image repositories, resource scheduling, container orchestration, and service orchestration.

Image Repositories

Docker containers are created from Docker images, so before a service can be deployed, its image must be available on the target machines. But where are these images stored, and how are they distributed? This is where an image repository comes in. It works much like a Git repository, providing centralized storage for images. When deploying a service, each server pulls the image from this central repository and then starts the container.

For testing or small-scale businesses, Docker Hub (https://hub.docker.com/) may suffice. However, most business teams prefer to set up their own private image repository for security and performance reasons.

Setting Up a Private Image Repository

Let’s take a look at how to set up a private image repository, based on Weibo's practice.

Permission Control

The first challenge in managing image repositories is permission control—who can pull or modify images. Typically, image repositories have two levels of access control.

Login-based Access: Only logged-in users can access the repository.

Project-based Access: Each project has its own repository directory, with three roles: admin, developer, and guest. Admins and developers can modify images, while guests can only pull (read) them.

Think of it like entering an office building. First you need permission to enter the building itself, which corresponds to logging in to the repository. Then you need access to a specific office, which corresponds to project-level access. A new employee is first granted building access, and the office administrator then grants access to the office. Seen this way, the two levels of permission control are easier to keep straight.
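The two levels can be sketched as a pair of checks. This is only an illustration under assumptions: the function `can_access`, the user names, and the project name `feed-service` are hypothetical, and the sketch is not the API of any particular image repository.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    DEVELOPER = "developer"
    GUEST = "guest"


# Roles that may push or otherwise modify images in a project.
WRITE_ROLES = {Role.ADMIN, Role.DEVELOPER}


def can_access(user, logged_in_users, project_roles, project, action):
    """Return True if `user` may perform `action` ("pull" or "push") on `project`."""
    # Level 1: login-based access (getting into the building).
    if user not in logged_in_users:
        return False
    # Level 2: project-based access (getting into the office).
    role = project_roles.get(project, {}).get(user)
    if role is None:
        return False
    if action == "pull":
        return True  # every project role, including guest, may pull
    if action == "push":
        return role in WRITE_ROLES
    raise ValueError(f"unknown action: {action}")


# Hypothetical users and project, for illustration only.
logged_in = {"alice", "bob", "carol"}
roles = {"feed-service": {"alice": Role.ADMIN, "bob": Role.GUEST}}

print(can_access("alice", logged_in, roles, "feed-service", "push"))  # True
print(can_access("bob", logged_in, roles, "feed-service", "push"))    # False
print(can_access("bob", logged_in, roles, "feed-service", "pull"))    # True
print(can_access("carol", logged_in, roles, "feed-service", "pull"))  # False
```

Here `carol` is logged in but has no role in the project, so she is inside the building without a key to the office.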

Image Synchronization

In production environments, images often need to be deployed across dozens or even hundreds of cluster nodes. A single image repository instance might not have the bandwidth to handle multiple nodes downloading images simultaneously. This requires setting up multiple instances to balance the load and manage image synchronization across instances. There are two common approaches:

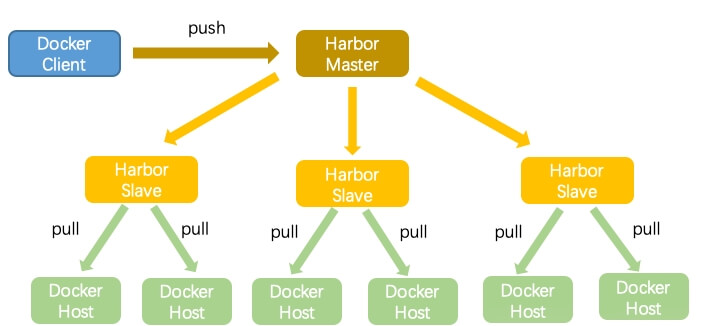

Master-Slave Replication: An example is the open-source repository Harbor, which supports master-slave replication.

P2P Distribution: Nodes share image data with one another instead of all downloading from a central instance. Alibaba's Dragonfly takes this approach.

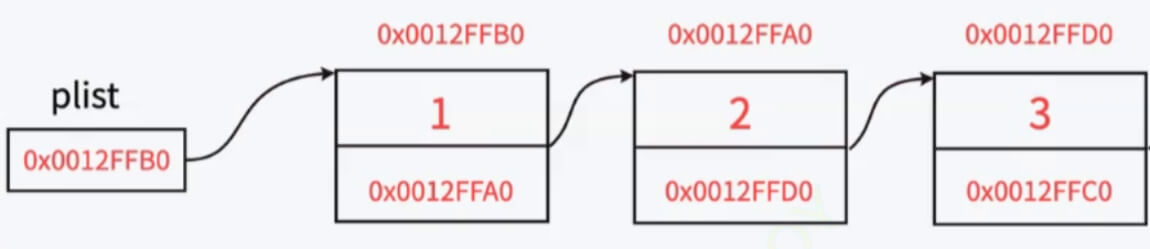

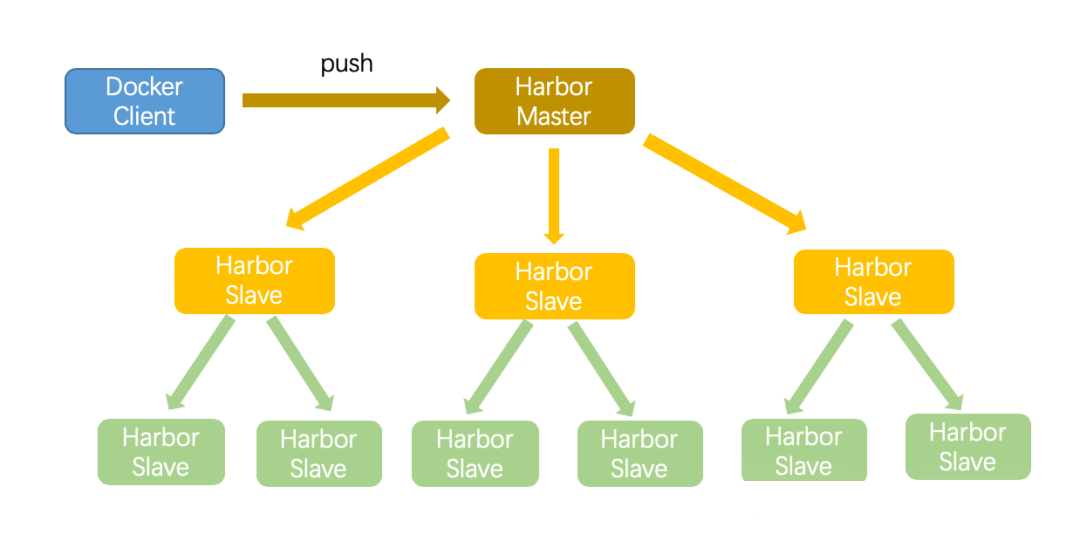

Weibo's image repository is built on Harbor. Here's how the synchronization mechanism works: images are uploaded to a master instance, and other instances replicate the images from this master. The architecture looks like this:
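As a rough in-memory model of the master-to-replica flow, the sketch below pushes an image to a master and copies it to every replica, then spreads pulls across the replicas in round-robin order. The class names, the balancer, and the digest value are hypothetical placeholders. Real Harbor replication is configured through its own replication rules rather than code like this.

```python
from itertools import cycle


class RegistryInstance:
    """In-memory stand-in for one image repository instance (hypothetical)."""

    def __init__(self, name):
        self.name = name
        self.images = {}  # "repo:tag" -> digest


class MasterRegistry(RegistryInstance):
    """The instance that receives uploads and feeds the replicas."""

    def __init__(self, name, replicas):
        super().__init__(name)
        self.replicas = list(replicas)

    def push(self, ref, digest):
        self.images[ref] = digest
        self.replicate()

    def replicate(self):
        """Copy every image that is missing or outdated on each replica."""
        for replica in self.replicas:
            for ref, digest in self.images.items():
                if replica.images.get(ref) != digest:
                    replica.images[ref] = digest


def make_pull_balancer(replicas):
    """Return a function that hands out replicas in round-robin order."""
    ring = cycle(replicas)
    return lambda: next(ring)


replicas = [RegistryInstance("replica-1"), RegistryInstance("replica-2")]
master = MasterRegistry("master", replicas)
master.push("feed-service:1.0", "sha256:abc")  # placeholder digest

next_replica = make_pull_balancer(replicas)
for node in ["node-1", "node-2", "node-3"]:
    replica = next_replica()
    print(node, "pulls from", replica.name, "->", replica.images["feed-service:1.0"])
```

Because cluster nodes pull from the replicas rather than from the master, download bandwidth is spread across instances, which is the point of running more than one.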

Additionally, Harbor supports hierarchical publishing. If clusters are deployed in multiple IDCs, you can synchronize images from a master IDC to other IDCs, and further distribute them to sub-IDCs.

High Availability

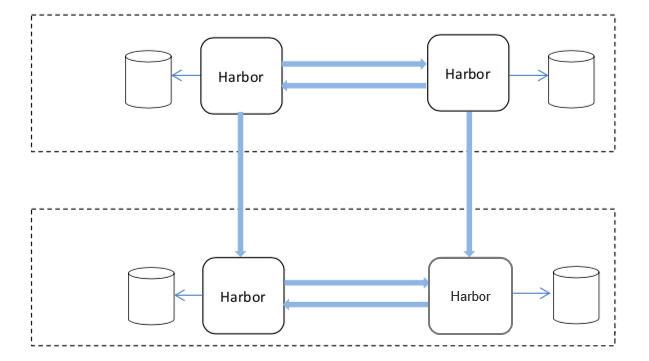

Since Docker containers depend on Docker images, high availability of the image repository is crucial. High availability generally involves deploying services across multiple IDCs, so even if one IDC fails, services can continue from another. In Weibo’s case, the image repository is deployed across two IDCs, with bi-directional replication between them. If one IDC encounters an issue, the other IDC can continue to serve without data loss.
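A minimal sketch of this two-IDC arrangement follows, assuming a simple in-memory model. The names `IdcRegistry`, `link`, and `pull_with_failover` are hypothetical, and the sketch does not queue pushes for a peer that is down, which a production setup would need to handle when the peer recovers.

```python
class IdcRegistry:
    """In-memory stand-in for the image repository in one IDC (hypothetical)."""

    def __init__(self, name):
        self.name = name
        self.images = {}  # "repo:tag" -> digest
        self.healthy = True
        self.peer = None

    def push(self, ref, digest):
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unavailable")
        self.images[ref] = digest
        # Replicate to the peer IDC; the same code runs on both sides,
        # which is what makes the replication bi-directional.
        if self.peer is not None and self.peer.healthy:
            self.peer.images[ref] = digest

    def pull(self, ref):
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unavailable")
        return self.images[ref]


def link(a, b):
    """Set up bi-directional replication between two registries."""
    a.peer, b.peer = b, a


def pull_with_failover(ref, registries):
    """Try each registry in order and return (registry name, digest)."""
    for registry in registries:
        try:
            return registry.name, registry.pull(ref)
        except ConnectionError:
            continue
    raise RuntimeError("no healthy image repository available")


idc_a, idc_b = IdcRegistry("idc-a"), IdcRegistry("idc-b")
link(idc_a, idc_b)

idc_a.push("feed-service:1.0", "sha256:abc")  # placeholder digest
idc_a.healthy = False                         # simulate an IDC failure

result = pull_with_failover("feed-service:1.0", [idc_a, idc_b])
print(result)  # ('idc-b', 'sha256:abc')
```

The image was pushed only to `idc-a`, yet it can still be pulled after `idc-a` fails because it had already been replicated to `idc-b`.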

Resource Scheduling

Once you've solved image storage and access, the next challenge is resource scheduling—deciding which machines to distribute Docker images to and where these machines come from.

Physical Machine Clusters

Most small to mid-sized teams maintain their own physical machine clusters, typically organized in a three-level hierarchy of cluster, service pool, and server. However, physical machine clusters often have heterogeneous hardware, especially among compute nodes. Older machines may have fewer cores and less memory, while newer ones are more powerful. A common arrangement is to use older machines for non-critical services and to assign newer ones to critical, resource-intensive services.
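One way to express this split in code is to classify machines by capacity and offer each service only the matching group. The thresholds, host names, and service names below are assumptions chosen for illustration, not values from any real cluster.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    host: str
    cores: int
    memory_gb: int


@dataclass
class Service:
    name: str
    critical: bool


def candidate_machines(service, machines, min_cores=32, min_memory_gb=128):
    """Return the machines a service may be placed on.

    Machines meeting both thresholds count as "newer" and are reserved for
    critical services; the rest serve non-critical services. The default
    thresholds are hypothetical.
    """
    newer, older = [], []
    for machine in machines:
        if machine.cores >= min_cores and machine.memory_gb >= min_memory_gb:
            newer.append(machine)
        else:
            older.append(machine)
    return newer if service.critical else older


cluster = [
    Machine("old-01", cores=12, memory_gb=32),
    Machine("old-02", cores=16, memory_gb=64),
    Machine("new-01", cores=40, memory_gb=256),
]

feed = Service("feed", critical=True)
report = Service("nightly-report", critical=False)

print([m.host for m in candidate_machines(feed, cluster)])    # ['new-01']
print([m.host for m in candidate_machines(report, cluster)])  # ['old-01', 'old-02']
```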

Virtual Machine Clusters

Some teams, after dealing with the low utilization and inflexibility of physical clusters, transition to virtualized clusters or private clouds using technologies like OpenStack. The main benefit is the ability to consolidate resources and allocate them as needed, improving utilization and saving costs.

Public Cloud Clusters

Many teams, especially startups, turn to public cloud for its speed and flexibility. Public cloud allows for quick machine creation and offers standardized configurations, simplifying management.

To handle resource scheduling, Docker offers Docker Machine, which allows you to create machines and deploy containers on internal physical clusters, virtual clusters, or public clouds.

However, for most established teams, Docker Machine doesn’t fully meet their needs. The real challenge lies in integrating different clusters and unifying permission management, cost accounting, and environment initialization across them.

For example, Weibo developed a container operations platform called DCP to manage multiple clusters. DCP’s complexity stems from the need to integrate external clouds while also managing machines in private clouds, including configuration initialization and lifecycle management. After creating a machine, for instance, DCP might need to install NTP, modify sysctl settings, and install Docker. Weibo continues to use Puppet for internal machines, while using Ansible to distribute configuration to Alibaba Cloud machines.
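The initialization flow can be pictured as a small planning function that picks the configuration tool from the machine's origin and lists the steps to run. This is a sketch under assumptions and not DCP's actual code: the function `init_plan`, the source labels, and the host addresses are hypothetical, and the steps are only described as strings rather than executed.

```python
# Initialization steps named in the text above.
INIT_STEPS = ["install NTP", "modify sysctl", "install Docker"]

# Which configuration tool handles which kind of machine.
# The source labels ("internal", "alibaba_cloud") are hypothetical.
CONFIG_TOOL_BY_SOURCE = {
    "internal": "Puppet",
    "alibaba_cloud": "Ansible",
}


def init_plan(host, source):
    """Return the ordered initialization steps for a newly created machine."""
    tool = CONFIG_TOOL_BY_SOURCE.get(source)
    if tool is None:
        raise ValueError(f"unknown machine source: {source}")
    return [f"[{tool}] {host}: {step}" for step in INIT_STEPS]


for line in init_plan("10.0.0.1", "internal"):
    print(line)
for line in init_plan("172.16.0.1", "alibaba_cloud"):
    print(line)
```

Keeping the step list separate from the tool mapping means a new cluster type only needs one new mapping entry, while every machine still goes through the same initialization steps.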

Containerized microservice operations present unique challenges, but with the right platform for image repositories, resource scheduling, and container orchestration, teams can manage these complexities effectively.