Google DeepMind has launched Genie 2, a cutting-edge AI model that generates interactive 3D environments from images or text descriptions. With advanced capabilities like object interaction, physical simulations, and immersive world-building, Genie 2 is set to transform the gaming industry and AI research, offering unprecedented flexibility for game developers and researchers.

On December 5th (local time), shortly after Fei-Fei Li's team released its AI system for "image-generated 3D worlds," Google DeepMind unveiled its latest foundational world model, Genie 2. Like Li's system, Genie 2 takes an image or text description and generates an interactive 3D scene that humans or AI agents can explore. Compared to Fei-Fei Li's release, Genie 2 adds more complex interactive capabilities.

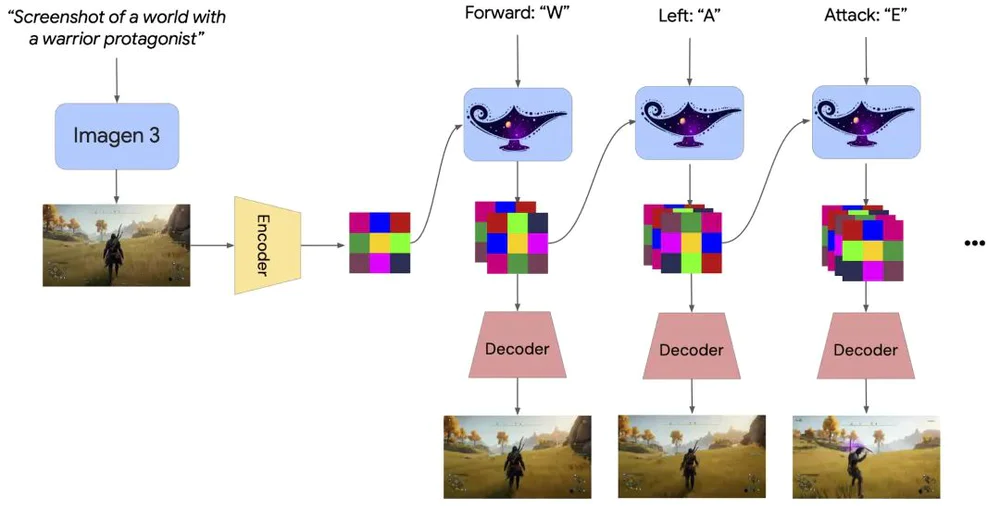

Google explained that users only need to provide an image generated by Imagen 3 and a corresponding text description, and Genie 2 can generate an interactive 3D environment. Users can explore the environment freely using a mouse and keyboard for up to one minute. The model can also "expand scenes": it keeps the generated environment consistent and remembers parts of the scene that move out of view, rendering them accurately when the user returns to them.

Google DeepMind showcased a series of GIFs on their official website, demonstrating how Genie 2 can simulate object interactions, animations, realistic lighting, physical reflections, and NPC behavior. Many of the generated scenes are close to the quality of AAA games, even excelling in object perspective consistency and spatial memory, with the ability to simulate physical laws.

These capabilities are astonishing because currently, such effects would require significant time and collaboration between game developers and artists to achieve. Users have marveled at how this release further blurs the boundary between the physical and digital worlds, offering a glimpse into future world models similar to "Ready Player One."

Creating Infinite Interactive Worlds Through Games

For decades, games have been a cornerstone of artificial intelligence research. The immersion and controllability of games, along with the measurable challenges they provide, offer an ideal environment to test and advance AI. From mastering Atari games in the early stages of AI development to AlphaGo's world-changing victory in Go, and AlphaStar’s dominance in StarCraft II, DeepMind has continually demonstrated the potential of games as a testing ground for AI.

However, a major obstacle in training general embodied agents (AI systems capable of learning how to interact with both physical and virtual worlds in various ways) has always been the lack of diverse training environments.

Traditional training tools have not provided enough diversity and depth to allow AI agents to fully grasp the complexity of the real world. Genie 2 aims to address this problem by generating infinite interactive worlds through games.

What sets Genie 2 apart is its ability to create highly customizable games on demand. By simply inputting an image as a prompt, the system creates a playable world tailored to specific training or gaming needs. This flexibility enables AI researchers to use agents to face endless challenges, helping them develop skills that can be transferred to real-world scenarios. This could completely revolutionize the way developers test and improve AI systems, allowing people to use AI to unleash their creativity.

By quickly generating diverse environments using Genie 2, researchers can create evaluation tasks that agents have not seen during training. For instance, Google demonstrated an example involving an AI agent called SIMA, developed in collaboration with game developers. SIMA was able to follow instructions in previously unseen environments that Genie 2 generated from a single image prompt.

Features of Genie 2:

Smartly responds to actions taken on the keyboard.

Generates different trajectories from the same initial frame.

Retains previously generated content and maintains spatial context.

Keeps the world consistent for up to one minute.

Creates worlds in various styles, such as first-person or cartoon-like.

Supports creating complex 3D visual scenes.

Simulates physical interactions, such as bursting balloons or shooting explosive barrels.

Learns to animate various types of characters performing different activities.

Models interactions with other agents and their complex behaviors.

Simulates physical effects such as fluids, smoke, gravity, lighting, and reflections.

Supports generation from real-world images.

One of the most exciting aspects of Genie 2 is its potential to facilitate training for general agents. Unlike specialized agents that excel at single tasks (like playing chess or answering trivia), general agents can adapt to various challenges, much like humans solving problems in the real world. By exposing these agents to new environments, Genie 2 allows them to tackle complex real-world scenarios where adaptability and versatility are essential.

Though this research is still in its early stages and there is much room for improvement in both agent and environment generation capabilities, Genie 2 points toward a solution to the structural problem of training embodied agents while offering the breadth and generality needed to progress toward AGI.

Beyond AI Research: Revolutionizing Game Development and Interactive Prototyping

In addition to advancing AI research, Genie 2 offers new possibilities for game development and interactive prototype design. For game developers, especially independent ones, Genie 2 enables the rapid creation of unique, playable experiences, reducing the time and cost of traditional design processes. The value of Genie 2 for game development is so evident that, after its release, DeepMind’s CEO enthusiastically invited Elon Musk to collaborate on creating AI-driven games, to which Musk replied, "Cool."

For gamers, the technology behind Genie 2 suggests that future game environments will be more dynamic, personalized, and immersive than ever. Imagine video games that can adapt in real time to a player’s skill level or preferences, offering truly customized experiences. The future world of “Ready Player One” may be closer than we think.

The Impact of Genie 2 Extends Beyond Gaming

Genie 2’s impact goes far beyond the gaming industry. It can serve as a platform for innovations in virtual reality, simulation, and robotics. For example, robots can be trained in the game environments generated by Genie 2, learning how to navigate unfamiliar terrains or interact with objects in new ways. Similarly, virtual assistants can improve their understanding of real-world tasks, and their responsiveness to them, by practicing in these environments. This is likely why Google DeepMind positioned Genie 2 as a "foundational world model" rather than just a "game generation model."

Unlocking 3D Storytelling: A New Era of Technological Revolution

When Fei-Fei Li unveiled the AI system for "image-generated 3D worlds" on X, there was no detailed explanation of the underlying technology. As a result, while netizens marveled at the technical prowess, they were left curious about the principles behind it.

On the Google DeepMind website, the company briefly described the underlying technology of Genie 2 as “an autoregressive latent diffusion model, trained on large video datasets,” with relevant papers linked for further reading. Here’s a simplified breakdown of the principle:

Genie 2 is an autoregressive latent diffusion model that learns to generate video content by training on large video datasets. Specifically, it combines an autoencoder with a large transformer dynamics model: the autoencoder extracts key information from raw video, and the dynamics model uses that information to predict how the scene should evolve.

First, Genie 2 uses a tool called an autoencoder to extract important features from the video. The key features of each video frame are compressed into a simplified form called "latent frames." This process is akin to compressing each video frame into a smaller data package. The "latent frames" are not the complete video content but highly abstracted versions that retain its most significant elements.
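To make the idea of a "latent frame" concrete, here is a minimal sketch. It does not reproduce Genie 2's autoencoder, which is a learned neural network. The hypothetical `encode_frame` function simply averages pixel blocks, as a stand-in that shows how a frame shrinks into a smaller representation.

```python
from typing import List

Frame = List[List[float]]  # one grayscale frame as rows of pixel values


def encode_frame(frame: Frame, block: int = 2) -> Frame:
    """Toy 'encoder': average each block x block patch into one latent value.

    Assumption: a real autoencoder learns its compression from data; block
    averaging is only a stand-in that shows the reduction in size.
    """
    height, width = len(frame), len(frame[0])
    latent = []
    for top in range(0, height - block + 1, block):
        row = []
        for left in range(0, width - block + 1, block):
            patch = [frame[top + dy][left + dx]
                     for dy in range(block) for dx in range(block)]
            row.append(sum(patch) / len(patch))
        latent.append(row)
    return latent


# Hypothetical 4x4 frame made of four flat 2x2 regions.
frame = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.5, 0.5, 0.25, 0.25],
    [0.5, 0.5, 0.25, 0.25],
]
latent_frame = encode_frame(frame)  # 16 pixel values become 4 latent values
```

The dynamics model then works on these smaller latent frames rather than on raw pixels, which is what makes long sequences tractable.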

Next, these latent frames are fed into a large transformer dynamics model. The model is trained with a causal mask, similar to the one used by large language models, so that each frame can draw only on the frames that come before it. This teaches the system how a sequence of frames unfolds and helps it produce smooth transitions from one frame to the next.
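The causal masking idea can be illustrated with a small attention example. This is a generic sketch of causal masking as used in transformers, not DeepMind's code. The function names and the uniform scores are assumptions chosen for illustration.

```python
import math
from typing import List


def causal_mask(num_frames: int) -> List[List[bool]]:
    """mask[i][j] is True when frame i may attend to frame j (j <= i)."""
    return [[j <= i for j in range(num_frames)] for i in range(num_frames)]


def masked_softmax(scores: List[List[float]],
                   mask: List[List[bool]]) -> List[List[float]]:
    """Row-wise softmax that gives zero weight to masked (future) frames."""
    weights = []
    for score_row, mask_row in zip(scores, mask):
        visible = [s for s, keep in zip(score_row, mask_row) if keep]
        peak = max(visible)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) if keep else 0.0
                for s, keep in zip(score_row, mask_row)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Hypothetical uniform attention scores over 4 latent frames.
scores = [[0.0] * 4 for _ in range(4)]
attention = masked_softmax(scores, causal_mask(4))
# Frame 0 attends only to itself; frame 3 attends to frames 0 through 3.
```

Because every weight on a future frame is zero, the model cannot "peek ahead" during training, which is what lets it generate frames one after another at inference time.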

During the video generation process, Genie 2 uses an autoregressive sampling method. This means it generates frames one at a time, conditioning each new frame on the user's action and on the frames generated before it. This ensures continuity and smooth transitions between frames, improving the realism and fluidity of the video.
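The frame-by-frame loop can be sketched as follows. The `toy_dynamics` function and the action names are hypothetical placeholders for the learned model and the keyboard inputs. Only the loop structure reflects the autoregressive sampling described above.

```python
from typing import Callable, List, Sequence

Latent = List[float]


def toy_dynamics(history: Sequence[Latent], action: str) -> Latent:
    """Hypothetical stand-in for the learned dynamics model.

    It shifts the most recent latent frame left or right; a real model
    would predict the next latent frame with a neural network.
    """
    step = {"left": -1.0, "right": 1.0}.get(action, 0.0)
    return [value + step for value in history[-1]]


def rollout(first_frame: Latent,
            actions: Sequence[str],
            predict_next: Callable[[Sequence[Latent], str], Latent]
            ) -> List[Latent]:
    """Generate frames one at a time from past frames and the current action."""
    frames = [first_frame]
    for action in actions:
        frames.append(predict_next(frames, action))
    return frames


start = [0.0, 0.0]
trajectory_a = rollout(start, ["right", "right", "left"], toy_dynamics)
trajectory_b = rollout(start, ["left", "left"], toy_dynamics)
```

Starting from the same initial frame, different action sequences lead to different trajectories, which matches the feature list's point about generating different trajectories from one starting frame.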

Additionally, Genie 2 uses a technique called "classifier-free guidance" to improve action controllability, giving more precise control over actions and scenes in the video and reducing uncertainty and inconsistencies in the generation process.
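The guidance technique mentioned above, which DeepMind's description calls classifier-free guidance, is usually written as a blend of two predictions: one made with the conditioning signal and one made without it. The sketch below shows that standard formula. How Genie 2 applies it internally is not described in the article, so the function name, the inputs, and the guidance scale are assumptions.

```python
from typing import List


def classifier_free_guidance(unconditional: List[float],
                             conditional: List[float],
                             scale: float) -> List[float]:
    """Standard classifier-free guidance blend.

    guided = unconditional + scale * (conditional - unconditional)
    scale = 0 ignores the condition, scale = 1 returns the conditional
    prediction, and scale > 1 pushes further toward the condition.
    """
    return [u + scale * (c - u) for u, c in zip(unconditional, conditional)]


# Hypothetical model outputs without and with the action as input.
without_action = [0.0, 1.0]
with_action = [1.0, 1.0]
guided = classifier_free_guidance(without_action, with_action, scale=2.0)
```

With a scale above 1, the part of the prediction that depends on the action is amplified, which is why the technique tends to make the output follow the requested action more closely.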

As global tech giants increasingly focus on the fusion of AI with the physical world, we stand at the threshold of a new technological revolution. While 3D AI may seem to be advancing more slowly than conversational AI like ChatGPT, it points to broader application prospects.

Just as Fei-Fei Li's ImageNet project sparked a wave of AI-driven entrepreneurship in computer vision, 3D AI technology may now be on the verge of triggering a larger revolution. It will not only advance technology but fundamentally change the way we interact with the world—impacting everything from robotics and autonomous vehicles to virtual reality and urban planning. The potential applications of 3D AI are limitless.

We can foresee that 3D AI will usher in a new era filled with innovation and opportunity. It will not just be an iteration of technology but a profound reshaping of human lifestyles, propelling us into a smarter, more connected world.