OpenAI has kicked off its 12-day live stream event with the official release of the o1 model, which brings improved intelligence, faster responses, and image recognition. The event also introduced ChatGPT Pro, a $200-per-month subscription with unlimited access to OpenAI's advanced models. The new tier is aimed at power users and offers more reliable results along with unlimited use of advanced features such as Advanced Voice Mode.

At 2 AM Beijing time on December 6, OpenAI launched a new "Easter egg" series: a 12-day live stream event featuring 12 new features or surprises.

This is a new release format for OpenAI. No major tech company had previously used a run of live streams to release features continuously. Many people speculated that new models, or the previously announced Sora video model, would be part of the 12-day release.

Today, the first surprise of the event was revealed: on day one of the live stream, OpenAI launched the new o1 model.

Three months ago, at a release event, OpenAI announced the o1 model in the form of o1-preview, a preview version. Today, OpenAI officially released the full version of o1.

Compared with o1-preview, o1 is more intelligent and can adjust how quickly it responds based on the difficulty of the question. It also adds image recognition. However, web browsing and file uploads are not yet supported.

The event also confirmed a rumor from months ago: OpenAI has indeed launched a $200-per-month subscription, ChatGPT Pro.

On ChatGPT Plus, use of the advanced models is still capped by time and number of queries. On the $200-per-month Pro plan, all advanced models and features, including Advanced Voice Mode, are available without usage limits.

ChatGPT Pro also includes o1 pro mode, which slightly improves the reliability of results. For most users this small gain may not matter much. For those willing to pay $200 a month for ChatGPT, however, even a small boost in reliability could be valuable.

Event Details:

o1 Full Version: Faster, Supports Image Input

Starting today, o1 officially replaces o1-preview and is rolling out to ChatGPT Plus users and to subscribers of the newly introduced ChatGPT Pro.

Compared with o1-preview, o1 brings several main changes:


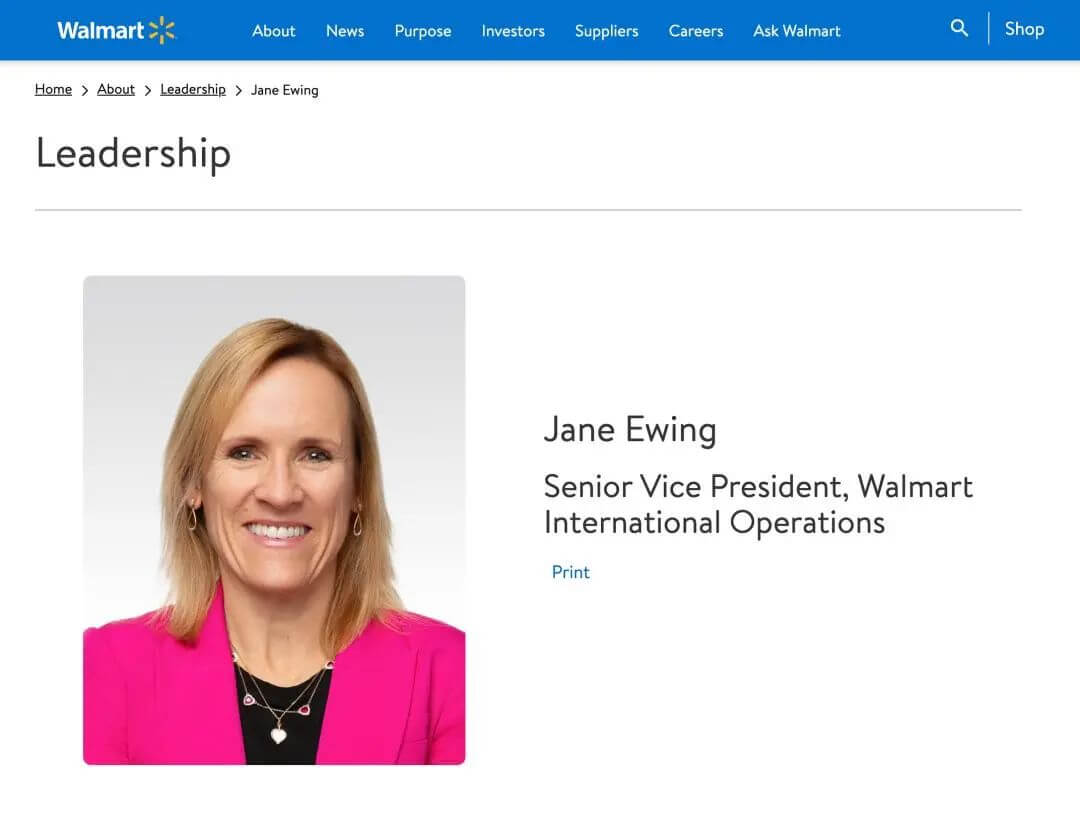

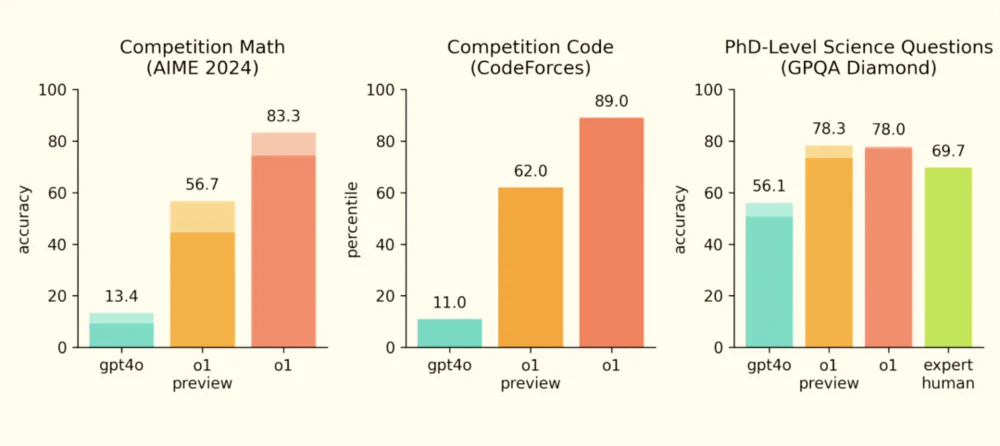

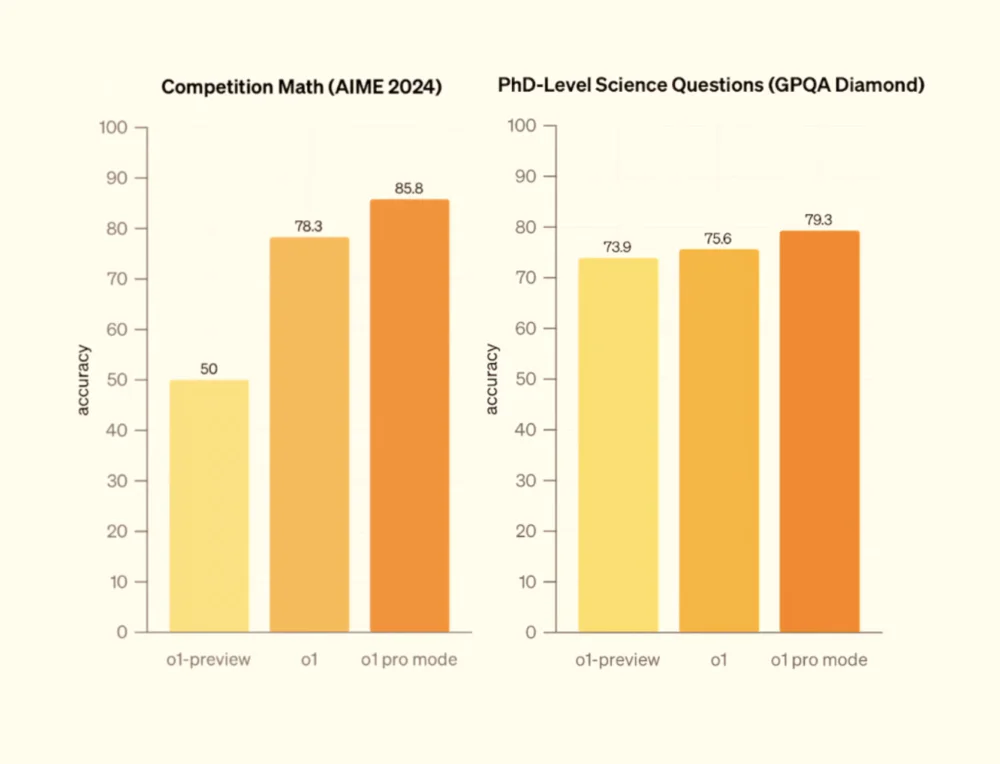

Improved Basic Intelligence, Especially in Programming

During the event, Sam Altman demonstrated the new model's ability to solve competition math problems, competitive programming problems, and PhD-level science questions.

The o1 model has made significant progress compared to o1-preview.

OpenAI emphasized that these gains in basic intelligence will improve the model's everyday performance, not just its ability to solve very difficult math and programming problems.

OpenAI's testing shows that the o1 model has reduced the likelihood of making major errors by about 34% compared to o1-preview.


Smarter Response Speed, Faster Answers to Simple Questions

After o1-preview was released, many users complained that its "slow thinking" was simply too slow. Even simple questions took a long time to answer.

The author, for one, often ended up using the faster 4o model instead of o1-preview.

With o1, OpenAI has tried to fix this. Simple questions now get faster answers, and the model spends longer thinking only on genuinely difficult ones.

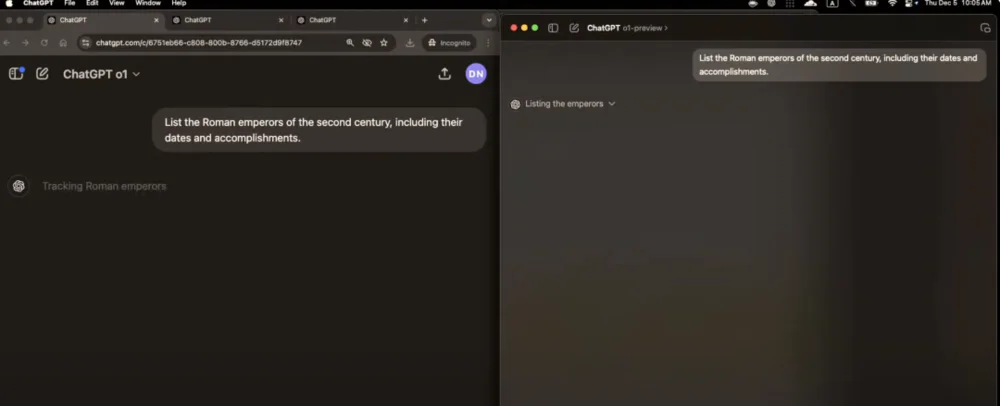

In the demonstration, OpenAI researchers asked the model to list the rulers of the Roman Empire in the 2nd century AD, including their reign periods and achievements.

The 4o model typically answers such a question almost immediately. o1 took about 18 seconds, which was still about 60% faster than o1-preview.

According to OpenAI's data, o1 thinks about 50% faster than o1-preview overall.


Multimodal Image Recognition

One major limitation of o1-preview was that it could only work with text.

This issue has been partially addressed in o1, which can now recognize images.

In the demonstration, OpenAI researchers uploaded a hand-drawn thermodynamics diagram, and o1 was able to recognize specific data from the sketch and perform multimodal reasoning.

However, some features that matter to advanced users, such as web browsing, file uploads, structured outputs, and function calling, are still in development.


o1 Model API Not Yet Released

The API for the o1 model has not been released yet. An API with image recognition support is expected to follow.

What Does the $200 Pro Mode Offer?

Earlier reports said that OpenAI would launch a $200-per-month Pro tier. At the time, the price seemed outlandish.

It is now clear, however, that the Pro tier is not meant for regular users. It targets power users who rely heavily on the models.

The $200 Pro plan offers unlimited access to OpenAI's advanced models and features, including o1 and Advanced Voice Mode. On the standard ChatGPT Plus plan, Advanced Voice Mode is currently limited to about one hour a day, so round-the-clock access would require multiple accounts. By comparison, Pro looks more cost-effective.

Pro subscribers also get o1 pro mode, which slightly improves the reliability of the model's results.

OpenAI's benchmark results are shown above. The improvement is modest, but it does give subscribers a sense of VIP treatment.

How many users will end up paying $200 a month for an AI tool is worth watching. The answer will show how much people are willing to pay for the world's most intelligent large models. At $200 a month, is the smartest model now worth as much as a low-paid intern?

Looking ahead, it will be interesting to see what OpenAI releases over the rest of the 12-day event. After day one, though, the author is not holding out much hope for Sora.

The cost of compute for these models is still a massive problem. Even with OpenAI's substantial financial resources, a $200 Pro tier is needed to cover the high compute cost of its advanced models. If Sora is released, how much would a subscription have to cost to support its usage?