Google's new quantum chip, Willow, has made a historic achievement by completing a standard computation in under 5 minutes, a task that would take traditional supercomputers 10^25 years. This breakthrough in quantum error correction could revolutionize fields like drug discovery, battery design, and fusion energy.

In the early hours of today, Google announced a major breakthrough: its latest quantum chip, Willow, achieved stunning results in benchmark tests, completing a standard computation in less than 5 minutes.

By Google's estimate, one of today's fastest supercomputers would need 10^25 years to complete the same task, vastly longer than the age of the universe.

Willow reduces errors exponentially as more quantum bits are used, cracking a key challenge in quantum error correction that the field has pursued for nearly 30 years.

After Alphabet and Google CEO Sundar Pichai officially announced this milestone, Elon Musk immediately commented “Wow.” The two continued their discussion in the comments, with Pichai suggesting that they build a quantum cluster in space using SpaceX's Starship, and Musk responding, "This is very likely to happen."

Even Sam Altman, co-founder and CEO of OpenAI, who had just released the Sora large video model, took time to send congratulations: "Huge congratulations!!" Pichai responded, "The quantum + AI of the multiverse future is coming soon, and congratulations on the o1 launch!"

Pichai said that Willow is an important step toward building useful quantum computers with practical applications in areas like drug discovery, fusion energy, and battery design.

"I don't think most people fully understand the significance of this breakthrough," emphasized David Marcus. "This breakthrough means that post-quantum cryptography and encryption technologies need further development."

For Charina Chou, Chief Operating Officer of Google Quantum AI, this achievement means that by the end of the 21st century, quantum computers will enable scientific discoveries that even the most powerful supercomputers cannot achieve.

Hartmut Neven, head of Google Quantum AI, noted that quantum algorithms have fundamental scaling laws on their side, and that many foundational computational tasks essential to AI enjoy similar scaling advantages. Quantum computing will therefore be indispensable for collecting training data inaccessible to traditional machines, training and optimizing certain learning architectures, and modeling systems in which quantum effects are important. Such applications include discovering new drugs, designing more efficient batteries for electric vehicles, and accelerating progress in nuclear fusion and renewable energy alternatives.

Many future game-changing applications are impossible on traditional computers and are waiting for quantum computing to unlock them.

The related paper has been published in the prestigious journal Nature.

Willow Chip: 105 Qubits, Solving a 30-Year-Old Challenge

Errors are one of the biggest challenges in quantum computing, as quantum bits often exchange information with their surrounding environment rapidly, making it difficult to protect the information needed for computation. Typically, the more quantum bits used, the more errors occur, making the system behave more like a classical one.

Current quantum computers are too small and prone to errors for most commercial or scientific applications. However, Google's experiments show that with the right error correction techniques, quantum computers can perform calculations with increasing accuracy as they scale, and this improvement surpasses a critical threshold.

"This has been our goal for 30 years," said Michael Newman, a Google research scientist, during the announcement.

According to Julian Kelly, head of Google's quantum hardware division, quantum computers encode information into states that can represent 0 or 1, but also exist as a superposition of many possible combinations of 0s and 1s.

However, these quantum states are very fragile. To perform useful computations, quantum information needs to be protected from environmental noise and operational errors.

To achieve this protection (without which quantum computing is impossible), theorists have developed clever schemes since 1995 that spread the information of one quantum bit across multiple "physical" quantum bits. The resulting "logical qubits" can, at least in theory, resist noise. To make this quantum error correction technique practical, it was necessary to demonstrate that spreading information across multiple qubits could reduce error rates effectively.
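The idea of spreading one bit of information across several physical carriers can be illustrated with a classical analogy. The sketch below is not the quantum error correction scheme used on Willow; it is a plain repetition code with majority voting, and the flip probability `p` is an assumed value chosen only for illustration. It computes how often the majority vote over `n` noisy copies comes out wrong.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n copies of a bit is wrong,
    when each copy flips independently with probability p (n must be odd)."""
    if n % 2 == 0:
        raise ValueError("n must be odd so the majority vote cannot tie")
    # The vote fails when more than half of the copies have flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


p = 0.01  # assumed per-copy flip probability, for illustration only
for n in (1, 3, 5, 7):
    print(f"n={n}: logical error rate = {logical_error_rate(p, n):.3e}")
```

With a small `p`, each extra pair of copies pushes the error rate down sharply. If `p` is raised above 0.5, the same function shows the opposite trend: adding copies makes the vote worse, which is why redundancy alone is not enough and the underlying hardware must be good enough first.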

In recent years, companies like IBM and AWS, along with academic groups, have shown that error correction can slightly improve accuracy. In early 2023, Google announced a breakthrough using 49 qubits from its Sycamore quantum processor, encoding each physical qubit within superconducting circuits.

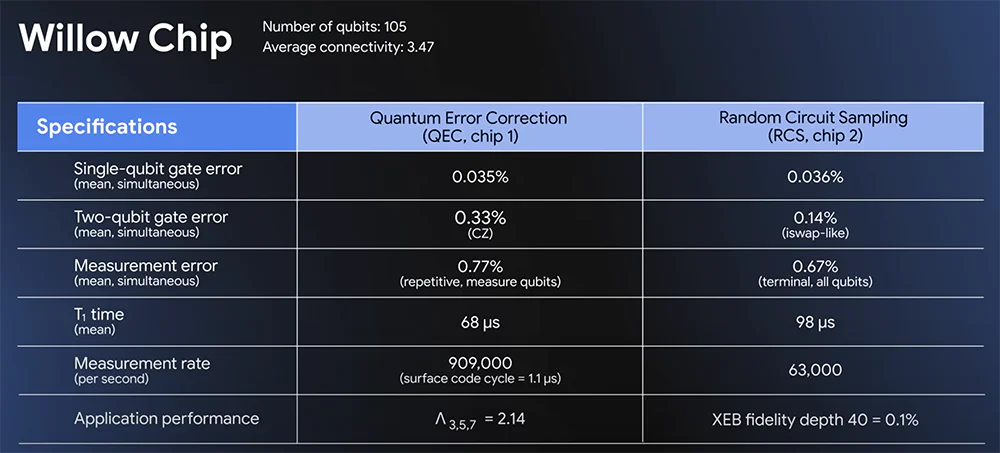

The new chip, Willow, is a larger and improved version with 105 physical qubits, developed in Google's quantum computing lab in Santa Barbara, California, which was built in 2021.

System engineering is crucial for designing and manufacturing quantum chips: all components must be carefully designed and integrated simultaneously. If any component lags or two components don't work well together, it will drag down system performance. Therefore, maximizing system performance is essential across the entire process, from chip architecture and manufacturing to gate development and calibration. The reported achievement evaluates the quantum computing system as a whole, rather than assessing one factor at a time.

Google prioritizes quality over quantity. Willow has top-tier performance in two system benchmark tests: quantum error correction and random circuit sampling. These algorithmic benchmarks are the best way to measure the chip's overall performance.

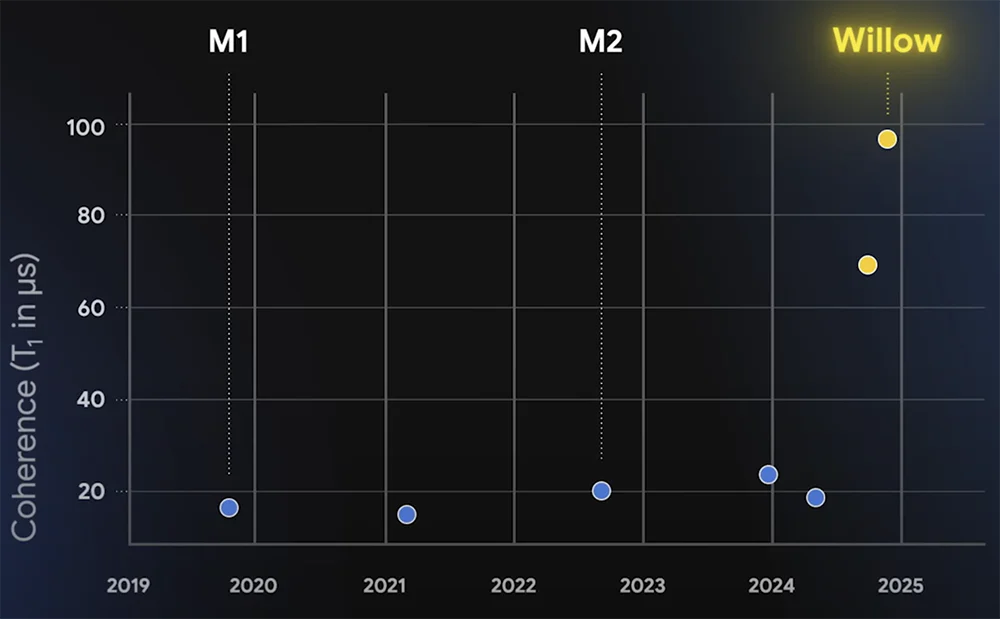

Other specific performance metrics are also important, such as T1 time, which measures how long a quantum bit can retain its excitation (a crucial quantum computing resource). Willow's T1 now approaches 100 µs, roughly a 5x improvement over Google's previous generation of chips.
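To give a feel for what a longer T1 buys, the sketch below uses the textbook exponential-decay model, in which the chance that an excited qubit is still excited after time t is exp(-t / T1). The 100 µs figure is the rounded value quoted above, the 20 µs figure is simply what a 5x improvement implies, and the elapsed times are arbitrary illustrative choices, not measurements from the paper.

```python
import math


def survival_probability(t_us: float, t1_us: float) -> float:
    """Chance that an excited qubit is still excited after t_us microseconds,
    under a simple exponential-decay model with time constant t1_us."""
    return math.exp(-t_us / t1_us)


willow_t1_us = 100.0                # "nearly 100 µs" from the text, rounded
previous_t1_us = willow_t1_us / 5   # implied by the stated 5x improvement

for t_us in (1.0, 10.0, 50.0):      # illustrative elapsed times only
    new = survival_probability(t_us, willow_t1_us)
    old = survival_probability(t_us, previous_t1_us)
    print(f"t = {t_us:5.1f} µs: T1=100 µs -> {new:.3f}, T1=20 µs -> {old:.3f}")
```

Real devices have additional error channels, so this is a rough intuition for why a longer T1 leaves more time for gates and error correction cycles, not a performance model of the chip.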

Hartmut Neven, head of Google Quantum AI, said this is the latest example of quantum computers outperforming traditional computers.

Exponentially Reducing Error Rates, Surpassing Traditional Supercomputers

Google’s research, published in Nature, shows that the more quantum bits used in Willow, the more errors are reduced, and the system becomes more quantum-like.

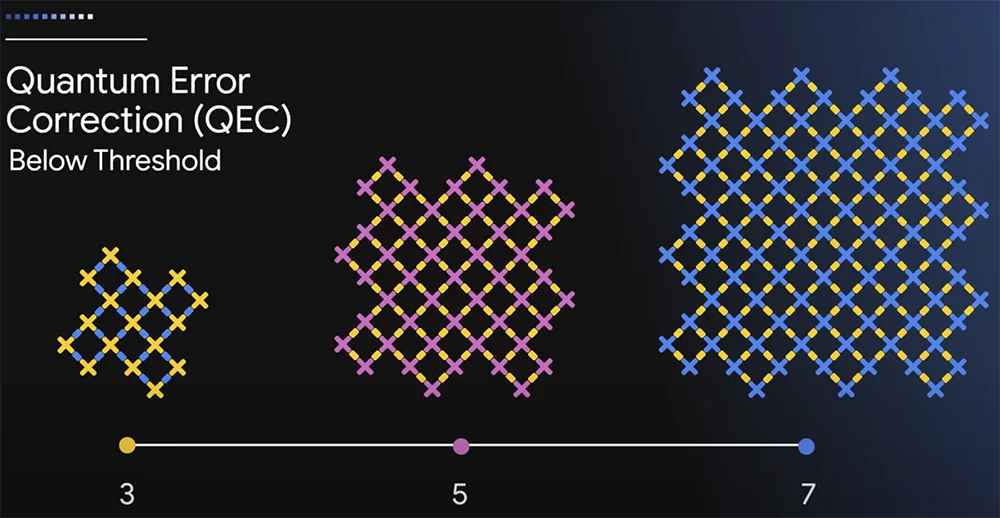

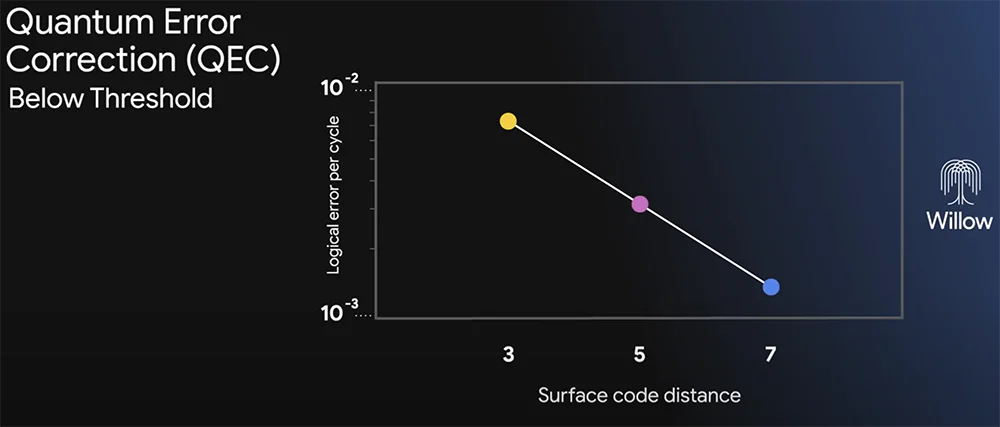

The research team tested increasingly large arrays of physical qubits, scaling up from a 3x3 grid to 5x5 and then to 7x7. Using the latest advances in quantum error correction, they cut the error rate in half with each step up in size.

In other words, they achieved an exponential reduction in error rates.
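The halving pattern can be written as a small model: if each step from one odd grid size to the next divides the logical error rate by a fixed factor, the rate falls exponentially with grid size. In the sketch below, the factor of 2 comes from the "by half" figure above, while `base_error` is a hypothetical placeholder for the 3x3 error rate and not a value from the paper.

```python
def projected_logical_error(
    base_error: float, distance: int, suppression: float = 2.0
) -> float:
    """Error rate for a distance x distance grid, assuming the rate is divided
    by `suppression` for every step of 2 in grid size, starting from 3x3."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    steps = (distance - 3) // 2  # 3x3 -> 0 steps, 5x5 -> 1, 7x7 -> 2
    return base_error / suppression**steps


base_error = 1e-2  # hypothetical 3x3 error rate, for illustration only
for d in (3, 5, 7):
    print(f"{d}x{d} grid: {projected_logical_error(base_error, d):.4f}")
```

Calling the function with larger grid sizes would be an extrapolation beyond the three sizes reported here, and it would hold only if the same suppression factor persists.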

This historic achievement is known in the field as operating "below threshold": the error rate goes down, rather than up, as the number of qubits increases.

Demonstrating "below threshold" operation is a prerequisite for showing real progress in error correction, and it has been an outstanding challenge ever since Peter Shor introduced quantum error correction in 1995.

This result also includes other scientific "firsts."

For example, it is one of the first notable examples of real-time error correction in superconducting quantum systems. This is essential for any useful computation, as errors that aren't corrected quickly can destroy the computation before it’s completed.

The qubit arrays also have longer lifetimes than the individual physical qubits they are built from, a clear sign that error correction is improving the system as a whole.

As the first "below threshold" system, Willow is the most convincing prototype of scalable logical qubits built to date. This strongly suggests that practical, large-scale quantum computers can indeed be constructed. Willow brings us closer to running practical, commercially relevant algorithms that traditional computers cannot replicate.

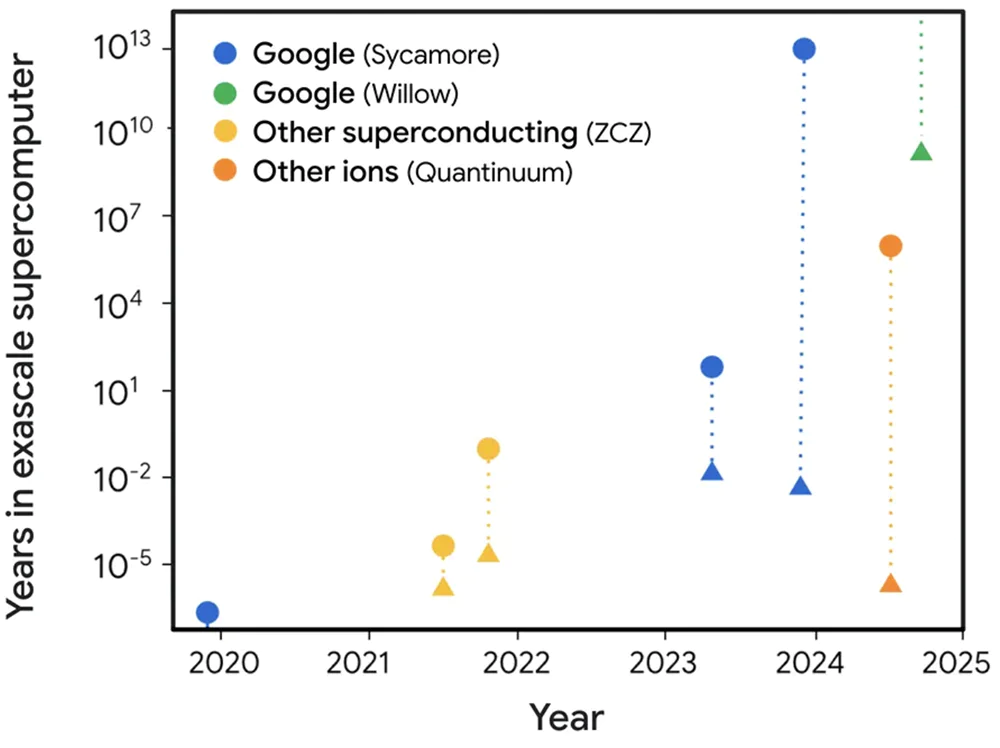

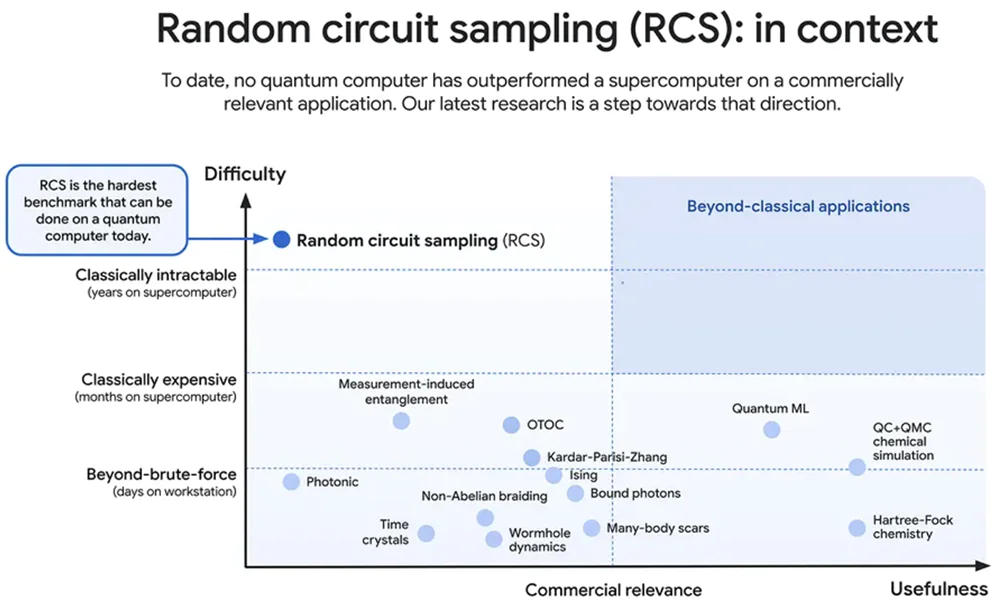

To measure Willow's performance, the research team used the random circuit sampling (RCS) benchmark, which the team itself pioneered. RCS is the hardest benchmark for classical computers that can be run on a quantum computer today. It can be seen as the "entry point" for quantum computing: it checks whether a quantum computer is doing something a traditional computer cannot.

Any team building a quantum computer should first test whether it can beat traditional computers on RCS; otherwise, there is good reason to doubt its ability to handle more complex quantum tasks.

Google has consistently used this benchmark to evaluate progress from one generation of chips to the next. It reported Sycamore results in October 2019 and again in October 2024.

Willow’s performance in this benchmark is astonishing: it completed a computation in less than 5 minutes, while one of the fastest supercomputers today would take 10^25 years to complete the same task.

If written out, that’s: 10,000,000,000,000,000,000,000,000 years.

This incredible number exceeds the known time scales in physics, far surpassing the age of the universe.
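A quick back-of-the-envelope calculation puts these figures side by side. The 10^25 years and 5 minutes come from the announcement; the age of the universe (about 13.8 billion years) and the 365.25-day year are standard approximations introduced here for comparison, not values from the article.

```python
classical_years = 10**25          # classical estimate quoted in the article
age_of_universe_years = 13.8e9    # approximate value, assumed for comparison
willow_minutes = 5                # upper bound quoted in the article

minutes_per_year = 365.25 * 24 * 60  # Julian year, an approximation

universe_ratio = classical_years / age_of_universe_years
runtime_ratio = classical_years * minutes_per_year / willow_minutes

print(f"{classical_years:,} years")
print(f"about {universe_ratio:.1e} times the age of the universe")
print(f"about {runtime_ratio:.1e} times longer than the 5-minute run")
```

The ratios are only as good as the classical estimate itself, which depends on assumptions about the best available classical algorithm and hardware.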

In Neven's view, the result lends credence to the notion that quantum computation occurs in many parallel universes, in line with David Deutsch's prediction that we live in a multiverse.

As shown, Willow's latest results represent Google's best so far.

Conclusion: Moving Toward Commercial Applications

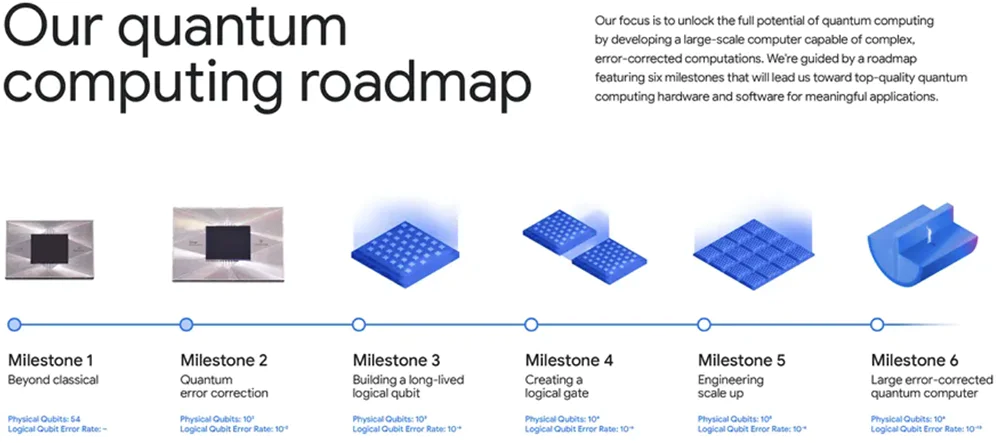

When Google Quantum AI was founded in 2012, its vision was to build a practical, large-scale quantum computer that could harness quantum mechanics to drive scientific discovery, develop useful applications, and tackle some of society's greatest challenges. As part of Google Research, the team has laid out a long-term roadmap, and Willow moves it significantly closer to commercially relevant applications.

The next challenge for the field is to demonstrate the first "useful, beyond-classical" computation on today's quantum chips, one that is relevant to a real-world application. Google's team is optimistic that the Willow generation of chips can help them reach this goal. So far, they have run two separate types of experiments. One is the RCS benchmark, which measures performance against traditional computers but has no direct commercial application. The other is the simulation of scientifically interesting quantum systems, which has led to new scientific discoveries but remains within the reach of classical computers.

Their goal is to accomplish both at once: moving into the realm of algorithms that traditional computers cannot reach, algorithms that are useful for real-world, commercially relevant problems.