Nvidia's dominance in the AI chip market is under pressure as competitors like AMD, Amazon, and innovative startups introduce cost-effective and powerful alternatives. With key players such as Meta and Anthropic adopting new solutions, the AI chip industry is entering a new phase of diverse and intensified competition.

Nvidia's dominant position in the AI chip market is under unprecedented pressure.

New Players and Custom Chips Make Waves

Tech giants like AMD and Amazon, alongside startups such as SambaNova Systems and Groq, are focusing on large-scale model inference with custom chips that consistently improve reliability and cost-efficiency.

These new entrants not only provide more cost-effective alternatives but are also gaining recognition from key clients. For instance, Meta has started using AMD's MI300 chip to support inference for its new AI model Llama 3.1 405B, while Amazon’s Trainium 2 chip has received positive feedback from potential users, including Apple.

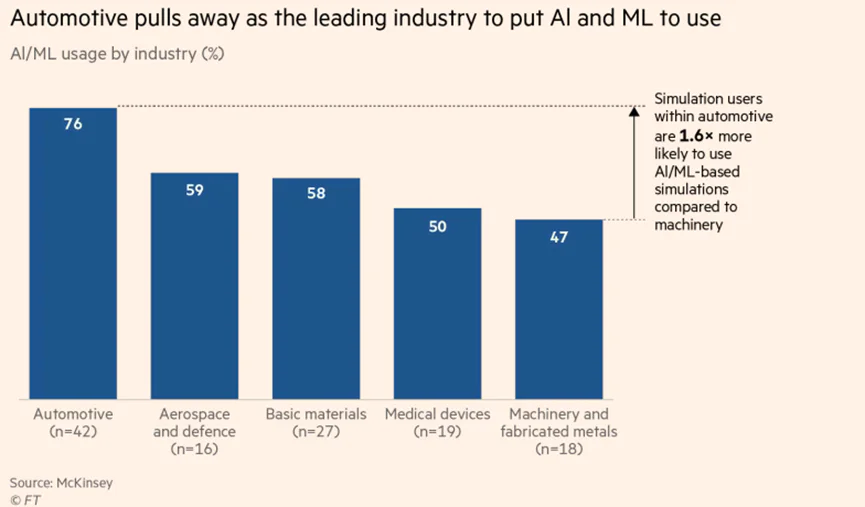

Market research firm Omdia forecasts that data center spending on non-Nvidia chips will grow by 49% in 2024, reaching $126 billion. Market dynamics appear to be shifting, and the AI chip sector is entering a new phase of diversified competition.
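As a rough sanity check on the Omdia figures, the growth rate and the 2024 total together imply a 2023 baseline. The sketch below assumes that the 49% is year-over-year growth on the full-year total and that $126 billion is the 2024 figure; the variable names are illustrative and the derived numbers are not from Omdia.

```python
# Back out the implied 2023 baseline from the forecast quoted above.
# Assumption: 49% is year-over-year growth and $126B is the 2024 total.
growth_rate = 0.49
spend_2024_bn = 126.0  # USD billions, as quoted

implied_2023_bn = spend_2024_bn / (1 + growth_rate)
increase_bn = spend_2024_bn - implied_2023_bn

print(f"Implied 2023 spending: ${implied_2023_bn:.1f}B")
print(f"Implied increase:      ${increase_bn:.1f}B")
```

Under those assumptions, the forecast implies roughly $85 billion of non-Nvidia data center chip spending in 2023.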

1. Tech Giants and Startups Target Inference as the Entry Point

AMD’s MI300 chip is expected to generate over $5 billion in sales during its first year of release. Meanwhile, Amazon has developed its next-generation AI chip, Trainium, in North Austin.

Startups like SambaNova Systems, Groq, and Cerebras Systems are also making strides. These companies claim to offer faster speeds, lower operating costs, and more affordable pricing for inference tasks compared to Nvidia.

Inference has become the front on which competitors are challenging Nvidia's dominance.

"True commercial value comes from inference, and inference is starting to scale," said Qualcomm CEO Cristiano Amon. He noted that Qualcomm plans to utilize Amazon’s new chips for AI tasks, stating, "We are beginning to see the start of transformation."

In terms of cost, Nvidia’s current chips sell for up to $15,000, with its new Blackwell chips projected to cost tens of thousands of dollars.

By contrast, competitors offer more economical solutions. Dan Stanzione, executive director of the Texas Advanced Computing Center, said that because of their lower power consumption and pricing, the center plans to use SambaNova chips for inference tasks, even as it deploys a supercomputer built on Nvidia's Blackwell chips.

2. Amazon's Ambitions in the AI Chip Space

Amazon has demonstrated significant ambition in the AI chip market, investing $75 billion this year in computing hardware, including AI chips. Its Trainium 2 chip delivers four times the performance of its predecessor, with plans for an even more powerful Trainium 3 already announced.

According to Eiso Kant, CTO of San Francisco-based AI startup Poolside, Trainium 2 offers 40% better compute performance per dollar compared to Nvidia’s hardware. More importantly, Amazon plans to provide Trainium-powered services globally, which is especially valuable for inference tasks.
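To make the "40% better compute performance per dollar" claim concrete, the sketch below works out what it would mean for a fixed budget and for a fixed workload. It assumes the claim translates to a flat 1.4x performance-per-dollar ratio, which is a simplification; real pricing and utilization will vary, and the variable names are illustrative.

```python
# Assumption: "40% better performance per dollar" means a flat 1.4x ratio.
perf_per_dollar_ratio = 1.40

# Same budget: compute obtained relative to the baseline hardware.
compute_multiple = perf_per_dollar_ratio

# Same workload: cost as a fraction of the baseline, and the implied savings.
cost_fraction = 1 / perf_per_dollar_ratio
savings_pct = (1 - cost_fraction) * 100

print(f"Same budget buys {compute_multiple:.2f}x the compute")
print(f"Same workload costs {cost_fraction:.1%} of baseline ({savings_pct:.1f}% less)")
```

Note the asymmetry: a 40% gain in performance per dollar corresponds to a cost reduction of about 29% for the same workload, not 40%.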

Notably, Amazon has partnered with AI startup Anthropic to build a massive AI facility equipped with hundreds of thousands of Trainium chips, boasting five times the computing power of any system Anthropic has used before.

Nvidia Still Holding Strong

Despite intensified competition, Nvidia’s leadership is not expected to waver in the short term. CEO Jensen Huang points to the company’s significant advantages in AI software and inference capabilities, and to strong demand for its new Blackwell chips.

Speaking at Stanford University, Huang remarked:

"Our products' total cost of ownership is very economical. Even if competitors' chips were free, they wouldn't be cheap enough."