Last night, Anthropic, OpenAI's strongest competitor, open-sourced a new protocol, MCP (Model Context Protocol), which aims to solve the pain point of connecting LLM applications to external data! Since the start of this year, developers everywhere have been racing to get into LLM application development.

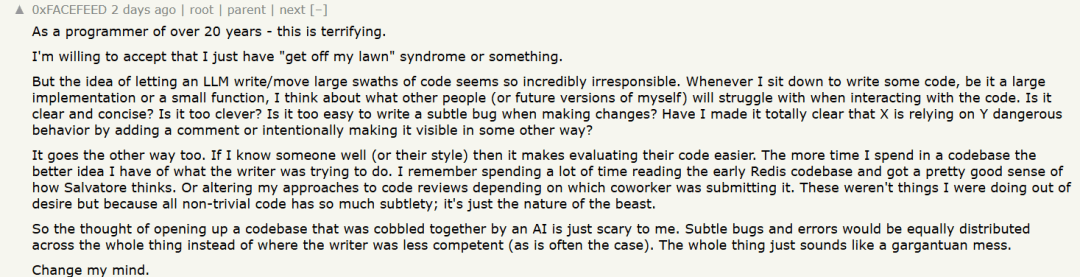

However, anyone who has actually built LLM applications knows that developing with large models is far from as simple as just "calling an API," as people outside the field often claim. It is a pain that few outsiders appreciate.

For example, the integration code alone can drive you up the wall. Many may not know this, but for an LLM application to access external data, developers have to write a pile of custom code. It's troublesome, repetitive, and honestly, a nightmare! Every new data source requires its own custom implementation, which makes it hard to build truly interconnected AI systems that scale.

Fortunately, the leading model companies care a lot about the developer ecosystem, and this problem now has a proposed solution!

MCP's goal is to help frontier models produce better, more relevant responses by giving them a standard way to reach external data. Developers will no longer need to write custom integration code for every data source; they can build against one protocol instead!

1. One Configuration, One Prompt, Everything Done

One developer demonstrated how to configure MCP for Claude, and the setup looked very easy. The demo was impressive!

Now, by simply configuring MCP on Claude Desktop, you can directly connect Claude to GitHub, create a repository, submit a PR, and finish everything in no time!
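To make "configuring MCP on Claude Desktop" more concrete, here is a minimal sketch of what such a configuration might look like. It is not taken from the demo: the file name `claude_desktop_config.json`, the `mcpServers` key, the `@modelcontextprotocol/server-github` package, and the `GITHUB_PERSONAL_ACCESS_TOKEN` variable are assumptions based on the publicly documented setup, and `<YOUR_TOKEN>` is a placeholder. The Python script only builds and prints the JSON you would paste into that file.

```python
import json


def build_config(github_token: str) -> dict:
    """Build a Claude Desktop config that registers one local MCP server.

    Assumptions (not stated in this article): the GitHub server is launched
    through npx from the reference npm package, and it reads its token from
    the GITHUB_PERSONAL_ACCESS_TOKEN environment variable.
    """
    return {
        "mcpServers": {
            "github": {
                "command": "npx",
                "args": ["-y", "@modelcontextprotocol/server-github"],
                "env": {"GITHUB_PERSONAL_ACCESS_TOKEN": github_token},
            }
        }
    }


# Serialize the config; the output would go into claude_desktop_config.json.
config_text = json.dumps(build_config("<YOUR_TOKEN>"), indent=2)
print(config_text)
```

Note that in this sketch the token lives in the local config and is handed to the locally running server process, which fits the article's point that keys do not have to be given to the LLM provider.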

Example prompt:

Please do the following:

Make a simple HTML page

Create a repository called simple-page

Push the HTML page to the simple-page repo

Add a little CSS to the HTML page and then push it up

Make an issue suggesting we add some more content on the HTML page

Now make a branch called "feature" and make that fix, then push the change

Make a pull request against main with these changes

Video Source: AI Cambrian

According to the official website, MCP can access not only local resources (databases, files, services) but also remote resources (such as the Slack and GitHub APIs), all through the same protocol! In addition to data (files, documents, databases), MCP servers can also provide:

Tools: API integrations, operations, etc.

Prompts: Template-based interactions

Security mechanisms: MCP includes built-in security mechanisms, where the server controls its own resources and developers do not need to provide API keys to the LLM provider, making the security boundaries clear!
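The split between data, tools, and prompts can be illustrated with a toy dispatcher. This is only a sketch under stated assumptions, not the official SDK: it assumes MCP messages are JSON-RPC 2.0 requests with method names such as `resources/read`, `tools/call`, and `prompts/get`, and it simplifies the result shapes. The resource URI, the `add` tool, and the `summarize` prompt are all hypothetical.

```python
import json

# Toy in-memory server state; every name below is hypothetical.
RESOURCES = {"file:///notes/todo.txt": "buy milk"}
PROMPTS = {"summarize": "Summarize the following text:\n{text}"}


def add(a: int, b: int) -> int:
    """A trivial tool the model could ask the server to run."""
    return a + b


TOOLS = {"add": add}


def handle(request_text: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the JSON response text."""
    req = json.loads(request_text)
    method, params = req["method"], req.get("params", {})
    if method == "resources/read":  # data: files, documents, databases
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "text": RESOURCES[uri]}]}
    elif method == "tools/call":  # tools: API integrations, operations
        value = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    elif method == "prompts/get":  # prompts: template-based interactions
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        result = {"messages": [{"role": "user",
                                "content": {"type": "text", "text": text}}]}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


# Example: the client asks the server to run the hypothetical "add" tool.
reply = handle(json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
}))
print(reply)
```

The design point is that the server owns its resources and credentials and only answers structured requests, so the client never needs direct access to the underlying system.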

Currently, Anthropic provides developers with three main components: the Model Context Protocol specification and software development kits (SDKs), local MCP server support in the Claude desktop app, and an open-source MCP server repository.

Claude 3.5 Sonnet is adept at quickly building MCP server implementations, making it easy for organizations and individuals to connect their most important datasets to various AI tools.

Anthropic has also shared pre-built MCP servers for common enterprise systems, such as Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer.

Early adopters like Block and Apollo have already integrated MCP into their systems, and developer tool companies like Zed, Replit, Codeium, and Sourcegraph are working with Anthropic to use MCP to enhance their platforms.

This allows AI agents to better retrieve relevant information, further understand the context of coding tasks, and generate more detailed and powerful code with fewer attempts.

2. Open Standard: Anthropic Invites You to Contribute Code!

Anthropic has high hopes for this open-source protocol and wants MCP to become the open standard for LLM integration!

Currently, MCP only supports local servers, but Anthropic is working on remote server support with enterprise-level authentication. In the future, teams will be able to securely share contextual resources across organizations!

Important Note: The MCP support in Claude Desktop is currently in developer preview and only supports connections to locally running MCP servers. Remote connections are not yet supported.

If you're interested, you might want to try it out and contribute your own code to the protocol: GitHub link

By the way, some believe that MCP can be seen as Anthropic's version of Function Calling. What do you think?
