Elon Musk and Mark Zuckerberg are leading a fierce competition for GPUs, with Musk's xAI aiming to build a supercomputer with 1 million GPUs, and Meta investing $10 billion in a new AI data center. Learn about the growing demand for GPUs and the major players vying for market share.

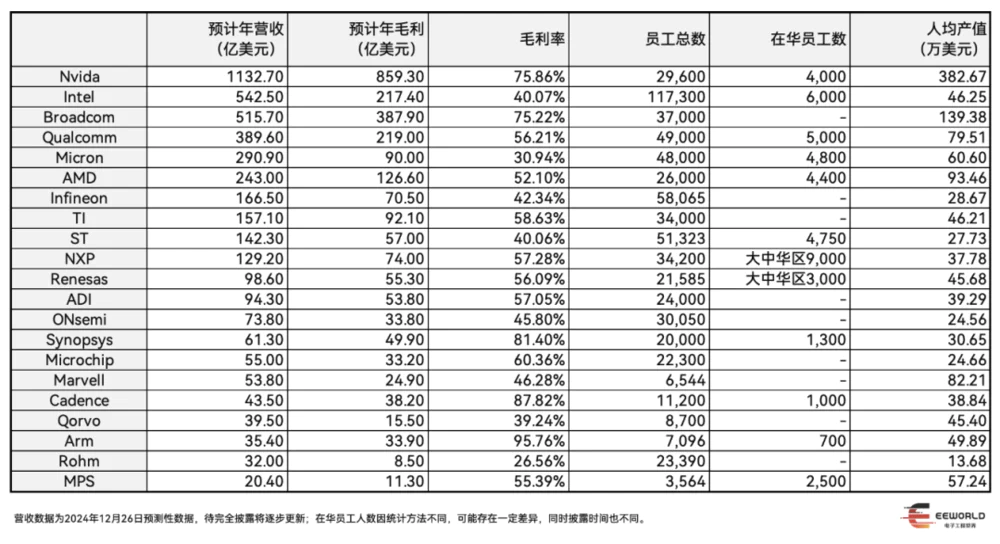

According to NVIDIA, revenue from its data center division, which is driven by AI workloads, reached $18.4 billion in 2023, a 409% increase from the previous year. NVIDIA held about 98% of the data center GPU market in 2023, as its flagship H100 chip had virtually no competitors.

Entering 2024, NVIDIA's GPU sales continue to surge, and CEO Jensen Huang has remarked that the company's newly released Blackwell GPU has garnered significant attention in the market, with many customers already making purchases. According to Jon Peddie Research, the global GPU market is expected to exceed $98.5 billion this year. Huang also believes that data center operators will spend $1 trillion over the next four years upgrading their infrastructure to meet the needs of AI developers, presenting an opportunity large enough to support multiple GPU vendors.

In recent days, reports have surfaced that Elon Musk and Mark Zuckerberg are leading a new round of the GPU competition.

Elon Musk’s Plan for a 1 Million GPU Cluster

According to the Financial Times, Elon Musk’s AI startup, xAI, has pledged to expand its Colossus supercomputer tenfold, to more than one million GPUs, in order to outpace competitors like Google, OpenAI, and Anthropic.

Colossus, which was built earlier this year in just three months, is considered the world’s largest supercomputer, operating a cluster of over 100,000 interconnected NVIDIA GPUs. Musk’s Memphis-based supercomputer is notable for how quickly his startup managed to assemble GPUs into an AI processing cluster. "From start to finish, it only took 122 days," Musk said. Supercomputers typically take years to build.

Musk’s company likely spent at least $3 billion to assemble this supercomputer, as it currently consists of 100,000 NVIDIA H100 GPUs, each priced around $30,000. Musk now wants to upgrade the supercomputer with H200 GPUs, which have larger memory, though each one costs nearly $40,000.
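The $3 billion figure follows from multiplying the GPU count by the unit price. A minimal Python sketch of that arithmetic, using the approximate prices quoted above; the function name and the rounded $40,000 H200 price are illustrative assumptions, and networking, power, and cooling are left out:

```python
# Approximate unit prices quoted in the article; actual contract prices are not public.
H100_UNIT_PRICE_USD = 30_000
H200_UNIT_PRICE_USD = 40_000  # assumption: "nearly $40,000" rounded up

COLOSSUS_GPU_COUNT = 100_000


def gpu_hardware_cost(gpu_count: int, unit_price_usd: int) -> int:
    """Return GPU-only spend in dollars (no networking, power, or cooling)."""
    return gpu_count * unit_price_usd


h100_cost = gpu_hardware_cost(COLOSSUS_GPU_COUNT, H100_UNIT_PRICE_USD)
# Hypothetical comparison: the same cluster size built entirely from H200s.
h200_cost = gpu_hardware_cost(COLOSSUS_GPU_COUNT, H200_UNIT_PRICE_USD)

print(f"100,000 H100s: ${h100_cost / 1e9:.1f} billion")
print(f"100,000 H200s: ${h200_cost / 1e9:.1f} billion")
```

The H200 line is only a what-if for scale; the article does not say how many H200s xAI intends to buy.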

NVIDIA also revealed that the scale of xAI’s "Colossus" supercomputer is being doubled. Musk mentioned on Twitter that the supercomputer will soon integrate 200,000 H100 and H200 NVIDIA GPUs into a 785,000-square-foot building.

Dell's Chief Operating Officer, Jeff Clarke, said in an interview on Thursday, "We started from scratch and deployed tens of thousands of GPUs at scale in just a few months," adding, "The cluster is still under construction, and we’re standing out."

xAI is developing this large facility to strengthen its computing power in the race to build AI tools. The Greater Memphis Chamber announced on Wednesday that work has already begun to expand the facility in Memphis, Tennessee. The Chamber stated that NVIDIA, Dell, and Supermicro will establish operations in Memphis to support the expansion, and that a "special xAI operations team" will be set up to "provide concierge services to the company."

Reports have pointed out that it’s unclear whether xAI plans to use the current-generation Hopper GPUs or the next-generation Blackwell GPUs for the expansion. The Blackwell platform is expected to scale better than Hopper, so using the upcoming technology rather than the existing one makes more sense. However, reaching one million GPUs from the current 100,000 to 200,000 means obtaining another 800,000 to 900,000 AI GPUs, which is challenging given the high demand for NVIDIA products. Another challenge will be coordinating one million GPUs to work together at maximum efficiency, where Blackwell again makes more sense.

As reported earlier by the Wall Street Journal, a sales executive at NVIDIA told colleagues that Musk's demand for chips is putting pressure on the company’s supply chain. An NVIDIA spokesperson stated that the company is working hard to meet all customer needs.

Naturally, the financial requirements for this expansion are enormous. Purchasing GPUs (each costing tens of thousands of dollars), along with the power and cooling infrastructure, could drive the investment into the tens of billions of dollars. xAI has raised $11 billion this year and recently secured another $5 billion. The company is currently valued at $45 billion.

Meta Spending Billions to Build AI Data Centers

While Elon Musk is racing to acquire GPUs, Mark Zuckerberg is not standing still.

Meta Platforms Inc. announced on Wednesday that the company plans to build a $10 billion AI data center campus in northeastern Louisiana, making it the largest data center the company has ever constructed. The campus will span 4 million square feet and will be located in Richland Parish, a rural area primarily consisting of farmland, near existing utility infrastructure. Groundbreaking is expected this month, with construction continuing until 2030.

The data center will house the networks and servers required to process massive amounts of data as the use of digital technologies grows, and it will be optimized for AI workloads, which are especially data- and compute-intensive. Once operational, it will support all of Meta's services, including Facebook, Messenger, Instagram, WhatsApp, and Threads.

Before the announcement of this data center investment, other companies were also working to expand their data and computing capacities to meet the growing demand for AI and machine learning applications.

Kevin Janda, Meta’s Director of Data Center Strategy, said, "Meta is building the future of human connection and the technology to make it happen. This data center will be a key component of that mission."

Louisiana Governor Jeff Landry stated that the new data center will bring new technological opportunities to the region. The Louisiana Economic Development Agency, a government body dedicated to improving the state's business environment, estimates that the campus will create about 1,500 jobs.

"Meta's investment will make the region a pillar of Louisiana’s fast-growing tech industry, revitalize one of the state's beautiful rural areas, and create high-paying job opportunities for Louisiana workers," Landry said.

Meta has not disclosed how many GPUs the new facility will support, nor which company’s chips it plans to use. According to Entergy, the center will be powered by three natural gas plants with a total generating capacity of 2.2 gigawatts. A significant portion of the cost is expected to go toward accelerators and supporting infrastructure such as hosts, storage, and networking. If you assume that 90% of the cost of an AI facility goes toward IT equipment, construction would cost $1 billion and equipment $9 billion. Assuming that a little more than half of the IT equipment cost goes toward accelerators, the accelerator budget comes to about $5 billion. At an average price of $25,000 each, this translates to 200,000 AI accelerators. If the facility is instead filled with Meta's future self-developed MTIA accelerators at half that price, the count would reach 400,000.
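The back-of-the-envelope estimate above can be reproduced in a few lines of Python. This is only a sketch under the stated assumptions: the 90% IT share, the rounded $5 billion accelerator budget, and the $25,000 average price come from the estimate itself, while the variable and function names are illustrative.

```python
TOTAL_BUDGET_USD = 10_000_000_000        # Meta's announced campus investment
IT_SHARE_PERCENT = 90                    # assumption: share spent on IT equipment
ACCELERATOR_BUDGET_USD = 5_000_000_000   # assumption: "more than half" of $9B, rounded
GPU_UNIT_PRICE_USD = 25_000              # assumed average accelerator price
MTIA_UNIT_PRICE_USD = GPU_UNIT_PRICE_USD // 2  # assumption: MTIA at half the price

# Split the total budget into IT equipment and building construction.
it_budget = TOTAL_BUDGET_USD * IT_SHARE_PERCENT // 100
construction_budget = TOTAL_BUDGET_USD - it_budget


def accelerator_count(budget_usd: int, unit_price_usd: int) -> int:
    """Return how many accelerators a budget buys at a given unit price."""
    return budget_usd // unit_price_usd


gpu_count = accelerator_count(ACCELERATOR_BUDGET_USD, GPU_UNIT_PRICE_USD)
mtia_count = accelerator_count(ACCELERATOR_BUDGET_USD, MTIA_UNIT_PRICE_USD)

print(f"Construction: ${construction_budget / 1e9:.0f}B, IT equipment: ${it_budget / 1e9:.0f}B")
print(f"Accelerators at ${GPU_UNIT_PRICE_USD:,} each: {gpu_count:,}")
print(f"Accelerators at ${MTIA_UNIT_PRICE_USD:,} each: {mtia_count:,}")
```

Halving the unit price doubles the number of accelerators the same budget buys, which is why the MTIA scenario yields 400,000 units rather than 200,000.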

Meta CEO Mark Zuckerberg previously stated that by the end of 2024, the company will be running 350,000 NVIDIA H100 chips in its data centers, though the company is also developing its own AI hardware.

Who Has the Most GPUs?

In addition to the companies above, major players like Microsoft, Google, AWS, CoreWeave, and various domestic cloud providers are also chasing NVIDIA GPUs. A recent report from Fortune highlighted three particularly well-funded customers that each bought $10 billion to $11 billion worth of goods and services from NVIDIA in the nine months through October. (For more details, refer to the article "Three Customers, NVIDIA’s Biggest Supporters.")

According to estimates from the blog LessWrong, if all GPUs are converted to equivalent H100 computing power, the five leading tech companies will hold the following numbers of GPUs in 2024 and 2025:

Microsoft: 750,000 to 900,000 H100, expected to reach 2.5 to 3.1 million by next year.

Google: 1 million to 1.5 million H100, expected to reach 3.5 to 4.2 million by next year.

Meta: 550,000 to 650,000 H100, expected to reach 1.9 to 2.5 million by next year.

Amazon: 250,000 to 400,000 H100, expected to reach 1.3 to 1.6 million by next year.

xAI: 100,000 H100, expected to reach 550,000 to 1 million by next year.
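To get a sense of the combined scale, the ranges above can be summed. The following Python sketch copies the listed figures into a dictionary (the structure and names are illustrative, and "next year" is taken to mean 2025) and adds up the low and high ends separately:

```python
# (low, high) H100-equivalent estimates, copied from the list above.
ESTIMATES = {
    "Microsoft": {"2024": (750_000, 900_000), "2025": (2_500_000, 3_100_000)},
    "Google": {"2024": (1_000_000, 1_500_000), "2025": (3_500_000, 4_200_000)},
    "Meta": {"2024": (550_000, 650_000), "2025": (1_900_000, 2_500_000)},
    "Amazon": {"2024": (250_000, 400_000), "2025": (1_300_000, 1_600_000)},
    "xAI": {"2024": (100_000, 100_000), "2025": (550_000, 1_000_000)},
}


def total_range(year: str) -> tuple[int, int]:
    """Sum the low ends and the high ends of every company's estimate for a year."""
    low = sum(company[year][0] for company in ESTIMATES.values())
    high = sum(company[year][1] for company in ESTIMATES.values())
    return low, high


for year in ("2024", "2025"):
    low, high = total_range(year)
    print(f"{year}: {low:,} to {high:,} H100-equivalents across the five companies")
```

Summing the extremes gives outer bounds rather than a likely range, since it is improbable that every company lands at the same end of its estimate.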

The blog also cites estimates of how many Blackwell chips the large cloud companies are buying: Microsoft 700,000 to 1.4 million, Google 400,000, and AWS 360,000. It’s rumored that OpenAI owns at least 400,000 GB200 chips.

Clearly, the new generation Blackwell is in high demand. Data centers under construction are likely to adopt NVIDIA’s Blackwell chips, with the company expected to ship large quantities next year. However, the market is already anticipating the release of the next-generation Rubin chip, which will follow Blackwell.

NVIDIA CEO Jensen Huang said in an interview with CNBC's Closing Bell Overtime in early October that demand for Blackwell AI chips is "insane." He said, "Everyone wants to have the most. Everyone wants to be first."

In the same interview, Huang stated, "In such a fast-paced technological era, we have the opportunity to triple our investment, truly driving the innovation cycle, which will boost capacity, increase output, lower costs, and reduce energy consumption. We are working towards this direction, and everything is going according to plan."

Colette Kress, NVIDIA’s CFO, said in August that the company expects Blackwell revenue in Q4 to reach billions of dollars.

Huang stated that NVIDIA plans to update its AI platform every year, improving performance by two to three times.

Melius Research analyst Ben Reitzes wrote in a report, "There’s increasing speculation that NVIDIA’s next-generation GPU (after Blackwell)—named Rubin—may be ready six months earlier than most investors expect in 2026."

Reitzes cautioned that chip releases rarely come ahead of schedule, and he maintained the stock’s $195 target price, assuming Rubin will be deployed in the second half of 2026.

Conclusion

In the current GPU gold rush, NVIDIA is undoubtedly the biggest winner. However, AMD is not far behind and is striving to become another significant player in this market. Additionally, giants like Microsoft, Meta, Google, and AWS are working on developing their own AI chips, hoping to strike a balance between NVIDIA GPUs and their self-developed accelerators.

For example, AWS CEO Matt Garman recently said at a conference, "At the moment, there is really only one option for GPUs, and that’s NVIDIA. We think customers will appreciate having more choices."
