A deep dive into Google's journey through the AI landscape, from early struggles with Bard to its recent triumphs with Gemini 2.0. Explore how Google's advancements in large models, multimodal capabilities, and AI-powered applications are redefining its future amidst antitrust challenges and fierce competition.

"I believe 2025 will be crucial. We must recognize the urgency of this moment and accelerate as a company. The risks are high. These are disruptive times. In 2025, we need to persistently focus on unlocking the advantages of this technology and solving real user problems," said Sundar Pichai, CEO of Google, at the 2025 Strategy Meeting held on December 18.

It sounds like a moment of life or death for the company, but in reality, it’s far from that. Google just had a triumphant December, though it came after a period of gloom.

In 2023 and 2024, what sent Google's fortunes through such twists and turns was the new, highly publicized large model race, an arena where Google long faced cold stares and mockery.

First, note that Google's work on AI and large models began very early, almost the earliest among the Mag-7. Yet it was OpenAI's ChatGPT, built on GPT-3.5 and released in November 2022, that captured the world's attention; Google only answered with its first mature large-model product, Bard, in early 2023. Instead of admiration, Bard met with near-mocking reactions, and Google's stock price suffered. Even now, Google still has the lowest P/E ratio among the Mag-7.

As the absolute victor of the last mobile internet era, Google, which began researching machine learning in 2001, could not tolerate this.

Rocky Road for Large Models

1. Early Effort, Late Arrival

As the undisputed victor of the last mobile internet era, Google has always been a leader in technological reserves and innovation. Especially in artificial intelligence fields like deep learning and neural networks, where computing power and algorithms are crucial, Google has remained at the forefront.

In 2001, Google began using machine learning to help people correct spelling errors in keyword inputs.

In 2006, it launched Google Translate, powered by machine learning.

In 2015, Google open-sourced its machine learning framework TensorFlow, making AI more accessible, scalable, and efficient and helping recommendation algorithms spread into mainstream mobile apps.

In 2016, DeepMind’s AlphaGo defeated the world Go champion, turning AI—a term once found only in sci-fi—into reality.

That same year, Google announced the Tensor Processing Unit (TPU), a custom chip optimized for TensorFlow that, over successive generations, let AI models be trained and run faster and more efficiently. Google's next-generation large model, Gemini 2.0, released in December 2024, was trained on the sixth generation of these TPUs.

In 2017, Google introduced the Transformer neural network architecture, which laid the foundation for generative AI systems.

OpenAI built its GPT series on the Transformer architecture, from GPT-1 in 2018 and GPT-2 in February 2019 to GPT-3, GPT-3.5, and GPT-4. Ironically, although the Transformer came out of Google, it was OpenAI that first turned it into a breakout consumer product.

2. Too Fast, Too Urgent

To respond to the meteoric rise of ChatGPT, built on GPT-3.5, at the end of 2022, Google announced its chatbot Bard on February 6, 2023, and opened it to users in the U.S. and UK in March.

The initial version of Bard was based on Google's LaMDA (Language Model for Dialogue Applications), announced in 2021. With up to 137 billion parameters, LaMDA was built for natural, open-ended conversation rather than strong reasoning or data processing. Bard underperformed at its live-streamed launch event in Paris, and Google's stock price dropped by about 8%.

Both internally at Google and in the media, Bard's capabilities were criticized and doubted. Our own tests showed that compared to ChatGPT, Bard seemed like a product of the previous era, with dialogue performance not much better than Apple's Siri.

On April 10, 2023, Bard's underlying model was upgraded to the more powerful PaLM (Pathways Language Model). Compared to the LaMDA model, PaLM showed significantly improved language understanding and generation capabilities, making conversations smoother and more natural.

On May 10, Bard was upgraded to PaLM 2, which significantly enhanced its logical reasoning and reduced errors in conversations. At this stage Google also began integrating its large models into its products, providing generative AI features in Gmail and other Workspace apps.

It wasn't until December 2023 that Bard received its next major upgrade, when its underlying model switched from PaLM 2 to Gemini Pro, greatly improving text understanding, summarization, reasoning, coding, and planning. According to Google's official report, Gemini Pro outperformed GPT-3.5 on most of the benchmarks it tested.

Despite continuous iterations throughout 2023, Bard never entered the ranks of top-tier large models and failed to expand beyond Google's own ecosystem. Meanwhile, many wrapper products built on OpenAI's models were already turning a profit.

3. A Comeback from the Veteran Giant

In the face of fierce competition, Google made a comeback with its advancements in 2024. Here's what happened:

February 8, 2024: Bard was officially renamed Gemini, marking the start of Google's resurgence.

May 14, 2024: Gemini 1.5 Flash was introduced alongside an upgraded Gemini 1.5 Pro, which had first been unveiled in February.

December 11, 2024: Gemini 2.0 Flash was released.

In addition to its core model products, Google also expanded its peripheral offerings, such as the highly praised NotebookLM.

NotebookLM is an AI-powered note-taking app that can understand and summarize the sources users upload. In September 2024 it gained Audio Overviews, which turn those sources into podcast-style conversations between two AI hosts, making it a natural match for podcast production. In December, NotebookLM received a major upgrade, including a redesigned interface and interactive Audio Overviews that let users join the conversation.

We tested two podcasts made with this tool, and the AI hosts' conversational skills surpassed those of novice human podcasters. Their tone was natural and their intonation varied enough that it was hard to tell human from AI. However, the tool's grasp of current events was limited, and it could not keep up with trending topics.

This AI audio tool has massive efficiency advantages over human podcasters, and it can also help readers understand academic papers, lowering the barrier to complex content. Spotify even used NotebookLM to create personalized AI podcasts for its 2024 Wrapped.

In the multimodal space, Google brought its Imagen 2 text-to-image model into Gemini in February 2024, but the image generation feature was soon criticized for historically inaccurate depictions of people and paused. After improvements, Imagen 3 was released in August, with more accurate details, more artistic styles, and richer textures.

In May, Google unveiled Veo, a video generation model to compete with OpenAI's Sora. Early tests showed that Veo offered better image quality but struggled with realism, often feeling too "sci-fi."

DeepMind also developed GenCast, an AI weather model that can forecast weather up to 15 days ahead, which could give agricultural regions earlier warnings of disasters.

In October 2024, DeepMind's Demis Hassabis and John Jumper were awarded the Nobel Prize in Chemistry (shared with David Baker) for the AlphaFold protein structure prediction model, highlighting Google's leadership in AI for scientific research.

4. The Bumper Harvest Month 🍇

After a year of challenges and refinements in 2024, Google found its rhythm and reaped the rewards in the final month of the year. Gemini 2.0 stole the spotlight in the middle of OpenAI's "12 Days of OpenAI" release series, and with the quantum chip Willow, Google also reaffirmed its hard-to-replace position in the tech industry.

Before the December 11 release of Gemini 2.0, Google had already quietly launched the experimental gemini-exp-1206 model on December 6. It quickly became a top contender on several LLM leaderboards, even outranking the later-released Gemini 2.0 Flash, and is expected to serve as a testbed for more advanced models in the future.

However, the most buzz-worthy release was undoubtedly Gemini 2.0 Flash on December 11. In Google's naming, "Flash" marks the lighter, faster tier, so this is not the full version of Gemini 2.0, yet its features were powerful enough to help Google reclaim its position as a tech leader.

The model excels not only in reasoning but also in offering native multimodal support within a single model. Compared with OpenAI, this is a more substantive approach. To be honest, OpenAI's recent releases feel like squeezing out toothpaste a little at a time: each one improves, but only incrementally, especially in multimodal capabilities.

Gemini 2.0 Flash surpasses its predecessors with stronger reasoning and faster responses. According to Google, it even outperforms Gemini 1.5 Pro on key benchmarks while running at twice the speed. As a natively multimodal model, it accepts image, video, and audio inputs and can generate images and speech as well as text, with image and audio output initially limited to early-access partners. It also natively integrates with Google Search, code execution, and third-party user-defined functions. In areas like mathematics and programming, Gemini 2.0 Flash reportedly outperforms OpenAI models such as o1-preview and o1-mini on some benchmarks.
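"Third-party user-defined functions" refers to function calling: the developer declares functions, the model replies with a structured request naming one of them plus its arguments, and the application runs it and sends the result back. The following is a minimal, hypothetical sketch of the application side of that loop only. The model reply is mocked, and `get_weather`, `TOOLS`, `dispatch`, and the `{"name", "args"}` reply shape are illustrative assumptions, not the Gemini API itself.

```python
import json
from typing import Any, Callable


# Hypothetical user-defined function the application exposes to the model.
def get_weather(city: str) -> dict[str, Any]:
    # Stubbed data; a real app would query a weather service here.
    return {"city": city, "forecast": "sunny", "high_c": 21}


# Registry mapping declared function names to Python callables (assumption).
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(function_call: dict[str, Any]) -> dict[str, Any]:
    """Run a model-requested function and package the result for the model.

    `function_call` mimics a model reply asking for a tool:
    {"name": ..., "args": {...}}. This shape is assumed for illustration.
    """
    name = function_call["name"]
    if name not in TOOLS:
        # Report unknown functions back instead of crashing the loop.
        return {"name": name, "error": f"unknown function {name!r}"}
    result = TOOLS[name](**function_call.get("args", {}))
    return {"name": name, "response": result}


# Mocked model output; in a real exchange this would come from the model
# after it decides the user's question needs the weather tool.
mock_model_reply = json.loads('{"name": "get_weather", "args": {"city": "Paris"}}')

tool_result = dispatch(mock_model_reply)
print(tool_result)
```

In a real integration, `tool_result` would be sent back to the model so it can compose its final answer; keeping the registry as a plain dictionary makes it easy to see that the model only chooses among functions the developer has explicitly exposed.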

Apart from performance and multimodal enhancements, Gemini 2.0 Flash is also pushing the evolution of AI agent products and applications. Alongside the model release, Google launched several related features, including updates to the multimodal AI assistant Project Astra, the browser assistant Project Mariner, and the code assistant Jules.

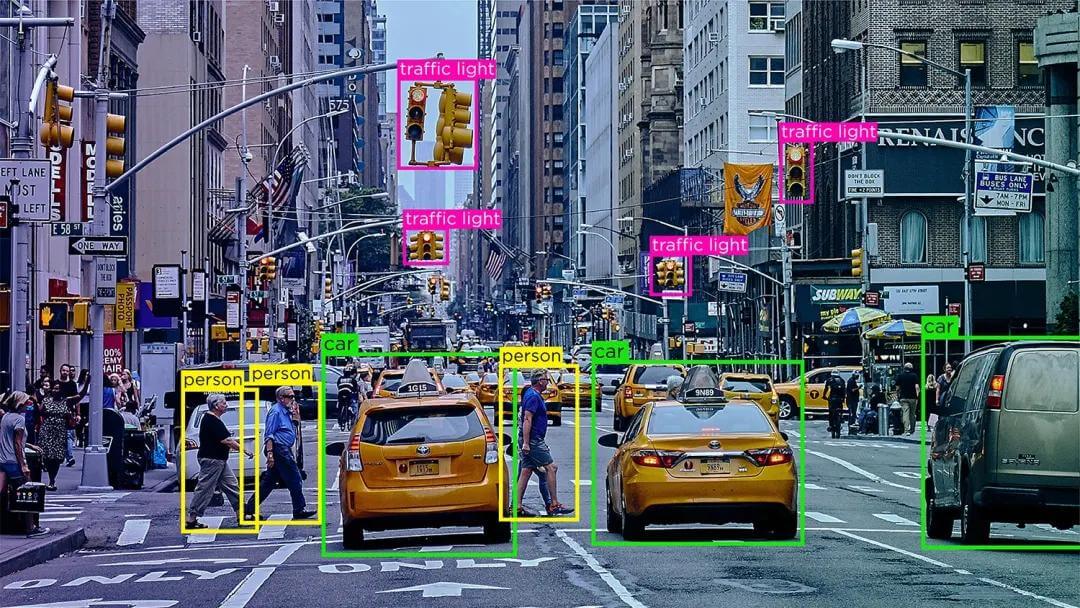

Project Astra, first shown in May 2024, lets users interact with AI through a camera and microphone for object recognition, voice conversations, and more. The update adds support for multiple and mixed languages, accents, and uncommon words; improves integration with Google Search, Lens, and Maps; adds a memory function that retains up to 10 minutes of in-session conversation; and reduces voice latency.

Currently experimental, Project Mariner is a Chrome extension that understands the elements of a webpage, such as pixels, text, code, images, and forms, and completes tasks like ordering items, filling out forms, and browsing based on user commands.

Jules, a code assistant, is integrated into GitHub workflows to help developers with code analysis and guidance.

Later in December, Google also introduced Veo 2, the second generation of its video model, and an updated version of Imagen 3. Veo 2 shows a better understanding of real-world physics, producing high-quality video with more intricate detail and realism, while the new Imagen 3 offers richer textures and a wider variety of styles.

For researchers, Google unveiled Deep Research, a Gemini Advanced feature that searches the web on the user's behalf, reasons over what it finds, and compiles the results into a report. Judging from forum feedback, many students, teachers, and professionals in complex technical fields have already made Deep Research their preferred large model product.

This launch has firmly placed Google back among the AI elite and made it a front-runner across the large model race. More importantly, with its comprehensive product ecosystem, Google is well-positioned to go further than its competitors in the next frontier of the AI race: AI agent development and application.

Google’s advantage in the large model space not only lies in the performance and multimodal capabilities of its products but also in its holistic coverage of model chips, training platforms, and downstream application scenarios.

With the release of Gemini 2.0 Flash, the core hardware underneath also came into view: the sixth-generation TPU, Trillium. According to Google, Trillium powered 100% of Gemini 2.0's training and inference.

Trillium is a key component of Google Cloud's AI Hypercomputer, a supercomputing architecture that integrates performance-optimized hardware, open software, leading ML frameworks, and flexible consumption models.

Compared to the previous-generation TPU v5e, Trillium trains dense LLMs (such as Llama-2-70b and GPT-3-175b) up to 4 times faster and MoE models up to 3.8 times faster. It also offers three times the host DRAM of v5e, helping maximize performance and throughput.

Trillium is now generally available to Google Cloud customers, so any company can rent it to build its own large model products.

However, facing strong competition from NVIDIA, Trillium has so far proven itself only on paper specifications and in one successful large model case. Its compatibility with upstream and downstream hardware and its broader industry acceptance remain to be tested.

Google’s Strengths and Concerns ⚖️

1. Strengths: Ecosystem and Money 💰

Google has always been a company that loves to experiment. One of the most famous examples is the former "20% Time Policy," which allowed employees to spend 20% of their work hours on projects they were passionate about. Under this innovative atmosphere, many projects were born within Google, and while many were quietly discontinued, some continue to generate massive revenue for the company. Gmail and AdSense, for instance, were products of this policy.

The policy, in place for many years, reflected Google's commitment to innovation and made the company fertile ground for new technologies and products.

In addition to fostering innovation, Google’s infrastructure in terms of computing power, cloud services, technology architecture, and talent reserve is unmatched by other companies, including Meta and Amazon, and it will take them a long time to catch up.

Beyond the hardware and software needed for large model development, Google excels in downstream applications. YouTube, its video platform, is a natural fit for multimodal applications; Google Search has launched AI Overviews to compete with Perplexity AI; and its autonomous driving unit, Waymo, could integrate voice model products in the future.

With such a rich product ecosystem, Google can explore large model applications in AI agents, AI hardware, and robotics. More importantly, Google has the financial backing to do so.

According to Alphabet's Q3 2024 earnings report, revenue reached $88.3 billion, up 15% year over year, and net income was $26.3 billion, up 34%. Google Cloud revenue reached $11.4 billion, up 35%. The company generated $17.6 billion in free cash flow and held $93 billion in cash and marketable securities at the end of the quarter.

After two years in the large model competition, Google still holds nearly $100 billion in cash. With such a massive cash reserve, issues related to computing power, chips, and talent are hardly a concern.

Google has almost all the software and hardware needed to take large models from 0 to 1 and eventually to industrial-scale applications. As long as management does not lose its rhythm as it did in early 2023, large models look set to contribute meaningfully to Google's revenue and stock price.

2. Concerns: Antitrust Risks ⚠️

A major reason behind Google's low valuation is the risk from antitrust lawsuits, which could lead to a breakup of its business. After a federal judge ruled in August 2024 that Google illegally maintained a monopoly in online search, its core business now faces uncertainty.

The U.S. Department of Justice (DOJ) is demanding that Google sell its Chrome browser, end its default search engine agreements with companies like Apple, and possibly even sell the Android operating system.

These requirements would have a huge impact on Google’s core search business, as these adjustments affect major traffic sources for search. Without these sources, Google’s search market share would inevitably decrease, affecting ad revenue. Selling the Android operating system could undermine the integrity of Google’s mobile ecosystem.

To counter the DOJ's demands, Google has proposed allowing non-exclusive default-search agreements with browser and device partners, who could revisit their default search settings at least once a year, to ease the perception of its "monopolistic" position.

Recently, the Japan Fair Trade Commission was also reported to be preparing to find that Google's search practices violate Japan's Antimonopoly Act, which would affect Google's operations in Japan and may trigger similar antitrust rulings in other countries.

Google’s once rock-solid advantages are now facing volatility, with strong competitors on the horizon and threats to its core business. It urgently needs a stable and determined management team. No wonder Sundar Pichai has openly stated that 2025 will be a crucial year, with high risks ahead.

Google is gradually regaining the industry’s attention and developers’ recognition in the large model race, but the antitrust hammer has not yet fully struck. Google now has a rare window to secure its foothold before the next wave of AI innovations fully arrives. 🌍