From architecture and process technology to energy efficiency: one survey to understand LLM hardware acceleration

The development of large language models (LLMs) has gone hand in hand with the evolution of hardware acceleration technology. The survey discussed here gives a comprehensive overview of the performance and energy efficiency of LLM accelerators built on chips such as FPGAs and ASICs. Large-scale modeling of human language is a complex undertaking that took researchers decades to develop. The field dates back to 1950, when Claude Shannon applied information theory to human language. Since then, tasks such as translation and speech recognition have made great progress.

In this process, artificial intelligence (AI) and machine learning (ML) are key to technological progress. ML, as a subset of AI, allows computers to learn from data. Generally speaking, ML models are either supervised or unsupervised.

In the paper "Hardware Acceleration of LLMs: A comprehensive survey and comparison", researchers from the University of West Attica focus on supervised models.

Paper address: https://arxiv.org/pdf/2409.03384

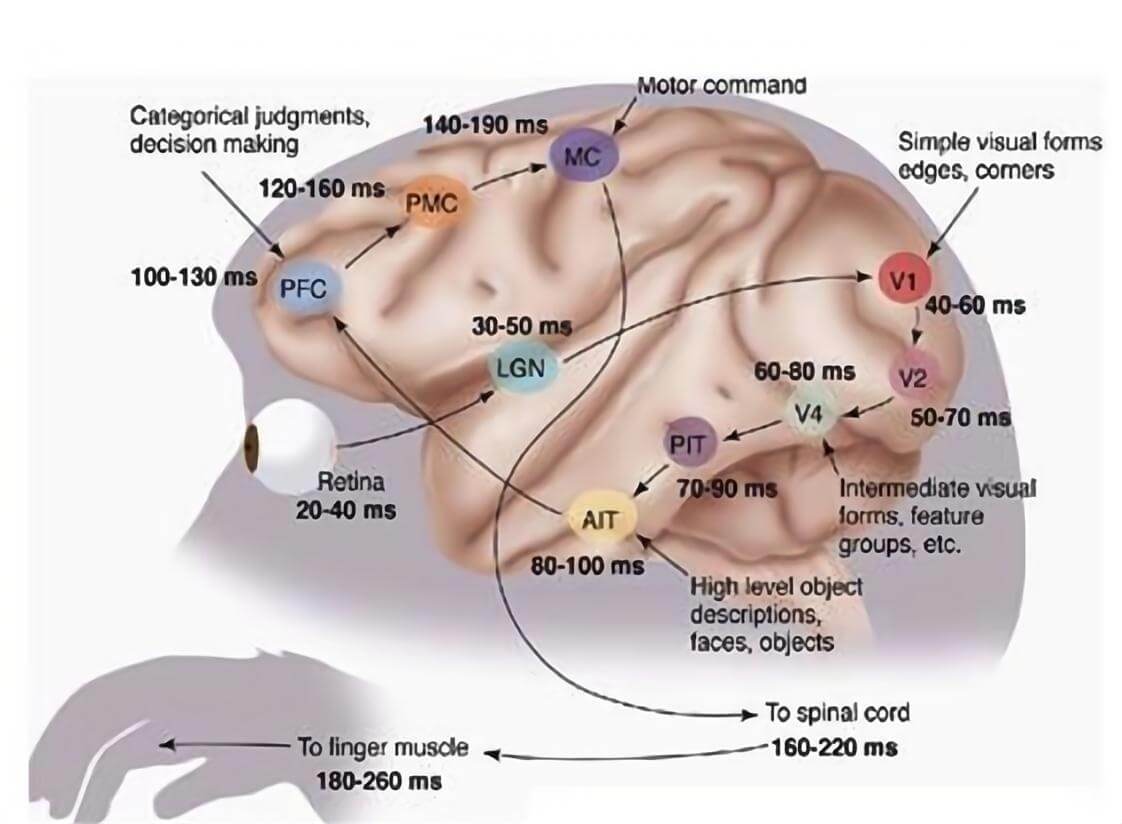

According to the paper, deep learning models are divided into generative and discriminative. Generative AI is a subset of deep learning that uses neural networks to process labeled and unlabeled data. Large language models (LLMs) help understand characters, words, and text.

In 2017, the Transformer revolutionized language modeling. The Transformer is a neural network that uses attention mechanisms to handle long-range dependencies in text. Google created the first Transformer model for text translation in 2017. The architecture has continued to evolve since then, with improvements to both the attention mechanism and the overall design. Today, ChatGPT, released by OpenAI, is a well-known LLM that can predict text, answer questions, summarize text, and more.

The paper surveys research on using hardware accelerators to speed up Transformer networks. It introduces the proposed frameworks and then compares them qualitatively and quantitatively in terms of technology, processing platform (FPGA, ASIC, in-memory, GPU), speedup, energy efficiency, performance (GOPs), and so on.

FPGA Accelerators

In this section, the authors list the FPGA studies under the labels A through T, which makes for a very detailed survey. Each study is summarized in a few short sentences, so it is quick and clear to read. For example:

FTRANS. In 2020, Li et al. proposed FTRANS, a hardware acceleration framework for large-scale Transformer-based language representations. FTRANS significantly improves speed and energy efficiency over CPU and GPU implementations. According to the reported comparisons, FTRANS, implemented on a VCU118 FPGA (16nm), is 81 times faster and 9 times more energy efficient than the alternatives, in particular the RTX5000 GPU. The accelerator delivers 170 GOPs of performance with an energy efficiency of 6.8 GOPs/W.
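The two FTRANS figures quoted above are linked by simple arithmetic: energy efficiency is throughput divided by power. The sketch below derives the power draw they imply. The function name is hypothetical, and the result is a derived estimate that assumes both numbers describe the same operating point; it is not a value quoted by the survey.

```python
def implied_power_watts(throughput_gops, efficiency_gops_per_watt):
    """Power implied by a throughput figure and an energy-efficiency figure.

    efficiency (GOPs/W) = throughput (GOPs) / power (W),
    so power (W) = throughput / efficiency.
    """
    if efficiency_gops_per_watt <= 0:
        raise ValueError("energy efficiency must be positive")
    return throughput_gops / efficiency_gops_per_watt


# Figures quoted for FTRANS: 170 GOPs and 6.8 GOPs/W.
ftrans_power = implied_power_watts(170.0, 6.8)
print(f"Implied power: {ftrans_power:.1f} W")  # Implied power: 25.0 W
```

The same relation can be used to sanity-check any row of the comparison tables that reports both performance and energy efficiency.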

Multi-head attention. In 2020, Lu et al. proposed an FPGA-based architecture for accelerating the most computationally intensive parts of the Transformer network. Their hardware accelerator targets two key components, the multi-head attention (MHA) ResBlock and the position-wise feed-forward network (FFN) ResBlock, which are the two most complex layers in the Transformer. The proposed framework was implemented on a Xilinx FPGA. According to the performance evaluation, the design achieved a 14.6x speedup over the V100 GPU.

FPGA NPE. In 2021, Khan et al. proposed an FPGA accelerator for language models, called NPE. The energy efficiency of NPE is about 4 times higher than that of CPU (i7-8700k) and about 6 times higher than that of GPU (RTX 5000).

In addition, the article also introduces research such as ViA, FPGA DFX, and FPGA OPU, which will not be introduced in detail here.

CPU and GPU-Based Accelerators

TurboTransformers. In 2021, Jiarui Fang and Yang Yu introduced TurboTransformers, an acceleration technique built for Transformer models on GPUs. TurboTransformers outperforms PyTorch and ONNXRuntime in latency and performance on variable-length inputs, with a speedup of 2.8 times.

Jaewan Choi. In 2022, researcher Jaewan Choi published a study titled "Accelerating Transformer Networks through Rewiring of Softmax Layers". The study introduces a rewiring technique to accelerate the Softmax layer in Transformer networks, a layer that is becoming increasingly important as Transformer models process longer sequences to improve accuracy. The proposed technique divides the Softmax layer into multiple sublayers, changes the data access pattern, and then merges the decomposed Softmax sublayers with the operations that precede and follow them. This method speeds up the inference of BERT, GPT-Neo, BigBird, and Longformer on current GPUs by 1.25 times, 1.12 times, 1.57 times, and 1.65 times, respectively, while significantly reducing off-chip memory traffic.

SoftMax. In 2022, Choi et al. proposed a new framework to accelerate Transformer networks by reorganizing the Softmax layer. The Softmax layer normalizes the elements of the attention matrix to values between 0 and 1, and the operation is performed along the row vectors of the attention matrix. According to their analysis, the Softmax layer in the scaled dot-product attention (SDA) block accounts for 36%, 18%, 40%, and 42% of the total execution time of BERT, GPT-Neo, BigBird, and Longformer, respectively. Softmax reorganization achieves up to 1.25x, 1.12x, 1.57x, and 1.65x speedups for BERT, GPT-Neo, BigBird, and Longformer on A100 GPUs by significantly reducing off-chip memory traffic.
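As a reference point for the row-wise operation described above, here is a minimal pure-Python sketch of the standard numerically stable softmax, written as four explicit sub-steps per row. It only illustrates what the layer computes and why it splits naturally into stages; it is not the rewiring technique from the paper, and the function name and sample scores are assumptions made for illustration.

```python
import math


def softmax_rows(scores):
    """Apply softmax independently to each row of a 2-D list of attention scores."""
    normalized = []
    for row in scores:
        row_max = max(row)                            # sub-step 1: row maximum (numerical stability)
        exps = [math.exp(x - row_max) for x in row]   # sub-step 2: element-wise exponent
        total = sum(exps)                             # sub-step 3: row-wise sum
        normalized.append([e / total for e in exps])  # sub-step 4: normalize
    return normalized


# Hypothetical 2x3 block of attention scores (one row per query token).
scores = [[1.0, 2.0, 3.0],
          [0.0, 0.0, 0.0]]
probs = softmax_rows(scores)
for row in probs:
    print([round(p, 3) for p in row])
```

Each output row sums to 1 and every entry lies between 0 and 1. On a GPU, each sub-step run as a separate pass reads and writes the whole attention matrix, which is the kind of memory traffic that fusing the stages with neighboring operations aims to avoid.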

In addition, the paper also introduces research on LightSeq2, LLMA, vLLMs, etc.

ASIC Accelerators

A3. In 2020, Ham et al. proposed A3, an early study on Transformer network acceleration. However, the proposed scheme has not yet been implemented on FPGAs. Based on the performance evaluation, the scheme can achieve up to a 7x speedup and an 11x energy efficiency improvement compared to an Intel Gold 6128 CPU implementation.

ELSA. In 2021, Ham et al. proposed ELSA, a hardware-software co-design method for accelerating Transformer networks. ELSA greatly reduces the computational waste in self-attention operations.

SpAtten. In 2021, Wang et al. proposed SpAtten, a framework for accelerating large language models. SpAtten adopts a novel NLP acceleration scheme to reduce computation and memory access. SpAtten achieves 162 times and 347 times speedups over a GPU (TITAN Xp) and a Xeon CPU, respectively. In terms of energy efficiency, SpAtten achieves 1193 times and 4059 times energy savings compared to the GPU and CPU.

In this section, the authors also list a number of other studies, including Sanger, a new method for accelerating Transformer networks, and AccelTran, which improves the efficiency of Transformer models in natural language processing.

In-Memory Hardware Accelerators

ATT. In 2020, Guo et al. proposed ATT, an attention accelerator based on resistive RAM. According to the performance evaluation, ATT can achieve a 202 times speedup compared with the NVIDIA GTX 1080 Ti GPU.

ReTransformer. In 2020, Yang et al. proposed ReTransformer, a ReRAM-based in-memory architecture for accelerating the Transformer. It not only accelerates the Transformer's scaled dot-product attention using ReRAM, but also eliminates some data dependencies by using the proposed matrix decomposition technique to avoid writing intermediate results. The performance evaluation shows that ReTransformer can achieve up to a 23.21 times speedup compared with a GPU, while the corresponding overall power is reduced by 1086 times.

iMCAT. In 2021, Laguna et al. proposed iMCAT, a new in-memory architecture for accelerating Transformer networks on long sequences. The framework combines XBar and CAM to accelerate the Transformer network. The performance evaluation shows that for a sequence of length 4098, this approach achieves a 200x speedup and a 41x performance improvement.

In addition, this chapter also introduces research such as TransPIM and iMTransformer.

Quantitative Comparison

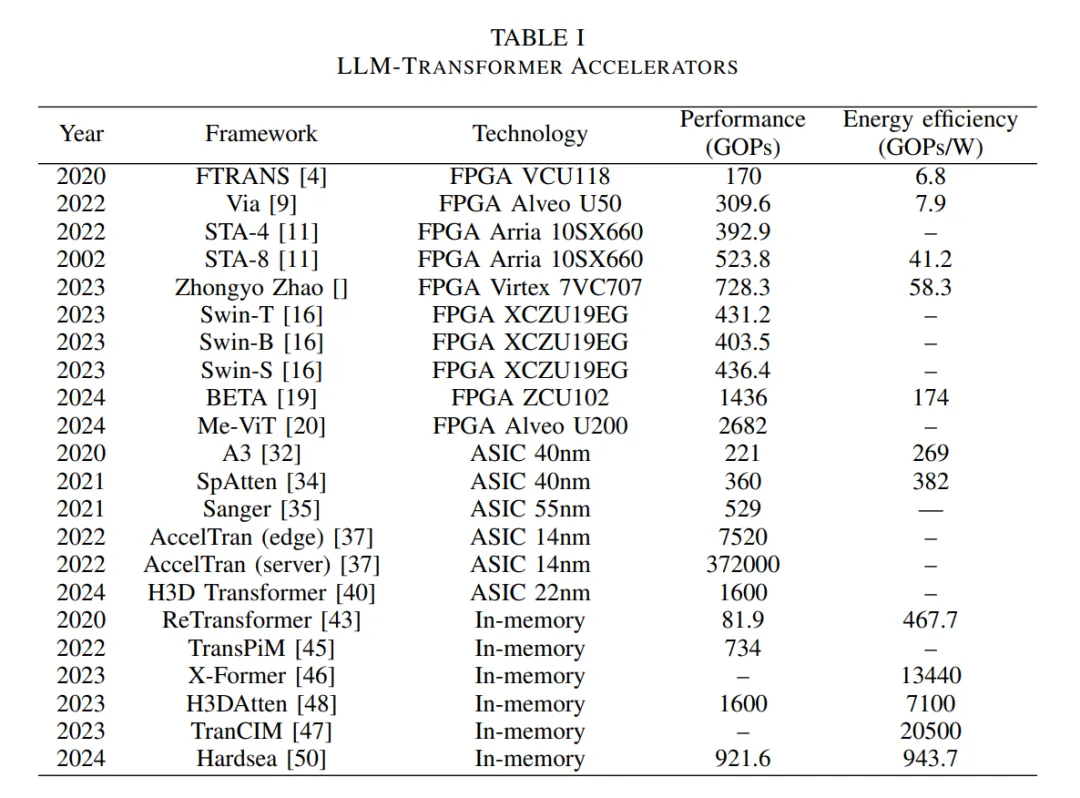

Table I lists the hardware accelerators covered by the survey and their main characteristics, including accelerator name, accelerator type (FPGA/ASIC/In-memory), performance, and energy efficiency.

In some cases, the original works also report the speedup of the proposed architecture over a CPU or GPU. However, since each architecture is compared against a different baseline, the paper only shows absolute performance and energy efficiency and leaves speedup out.
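To see why speedup figures are hard to compare across papers, consider a minimal sketch with made-up numbers. The accelerator throughput and both baselines below are hypothetical and are not taken from the survey; the point is only that the same absolute performance yields very different speedups depending on the baseline chosen.

```python
def speedup(accelerator_gops, baseline_gops):
    """Speedup of an accelerator relative to a baseline with known throughput."""
    return accelerator_gops / baseline_gops


# Hypothetical accelerator with a fixed absolute throughput.
ACCEL_GOPS = 500.0

# Two hypothetical baselines that different papers might pick.
baselines = {"older CPU": 5.0, "recent GPU": 250.0}

reported = {name: speedup(ACCEL_GOPS, gops) for name, gops in baselines.items()}
for name, value in reported.items():
    print(f"vs {name}: {value:.0f}x")
# vs older CPU: 100x
# vs recent GPU: 2x
```

Absolute figures such as GOPs and GOPs/W do not depend on the choice of baseline, which is why they are the more practical basis for a cross-paper table.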

Quantitative Performance Comparison

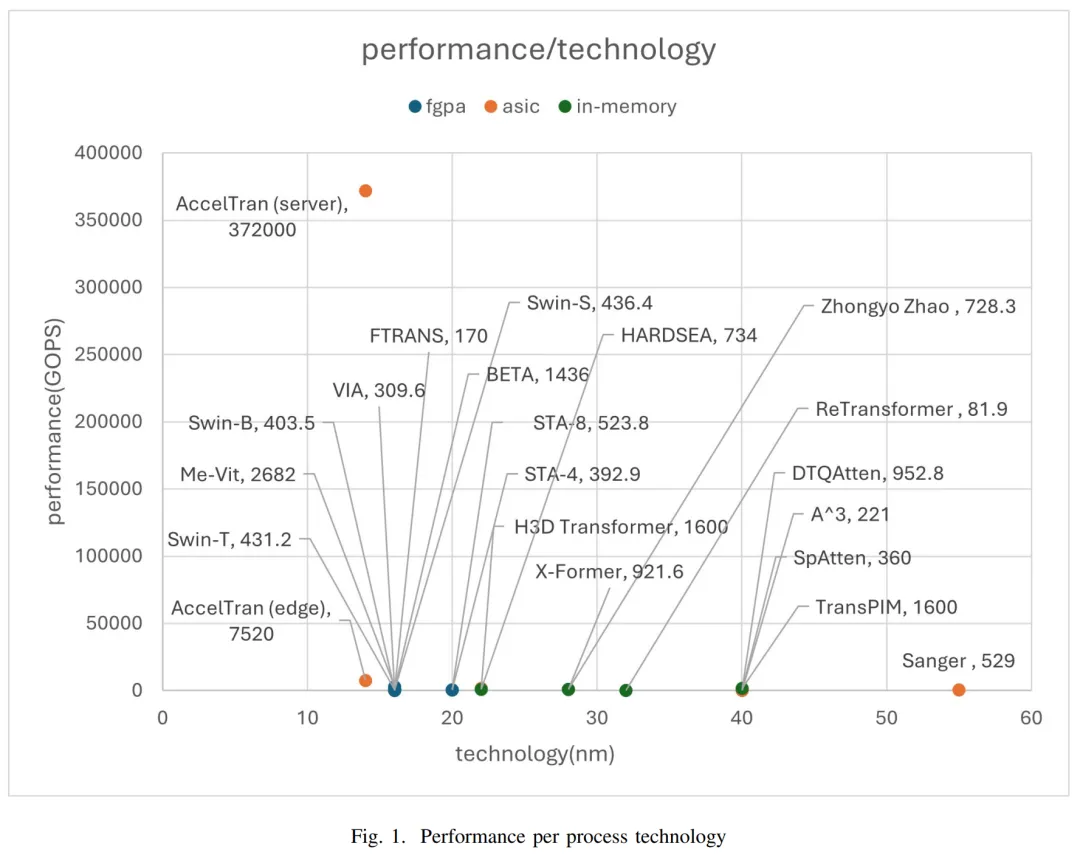

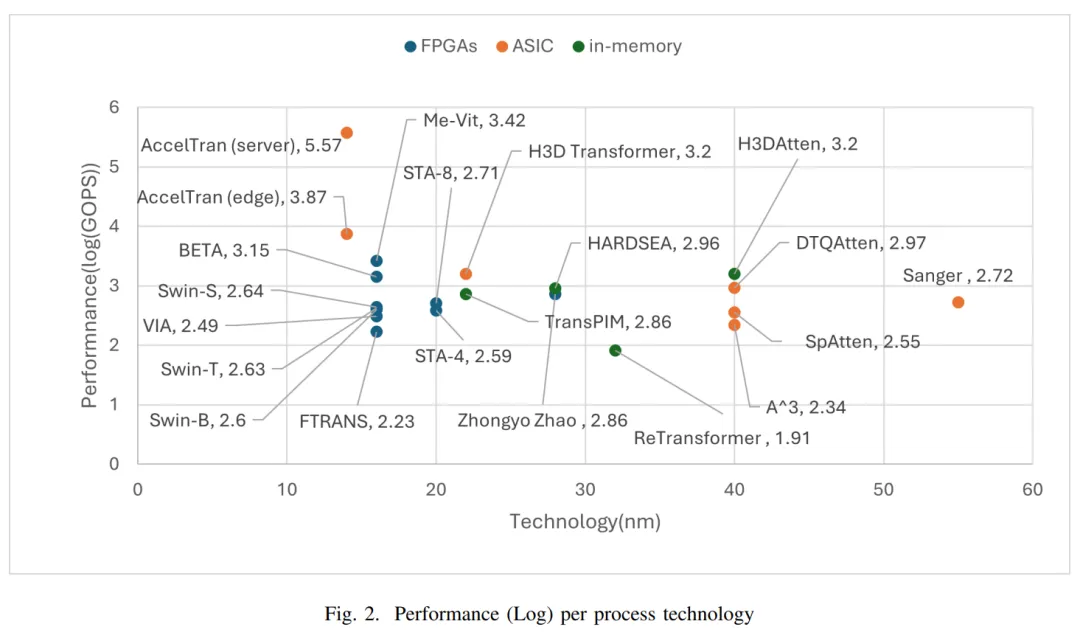

Figure 1 shows the performance of each accelerator under different process technologies; Figure 2 shows the same performance data on a logarithmic scale, which is easier to read.

The highest performance is achieved by AccelTran (Server) on a 14nm process, reaching 372,000 GOPs, while ReTransformer has the lowest performance. In addition, accelerators built on the same process technology, such as ViA, Me-ViT, and FTRANS, do not achieve similar performance.

However, it is difficult to make a fair comparison for accelerators that are not using the same process technology. After all, the process technology can have a significant impact on the performance of hardware accelerators.

Energy Efficiency vs. Process Technology

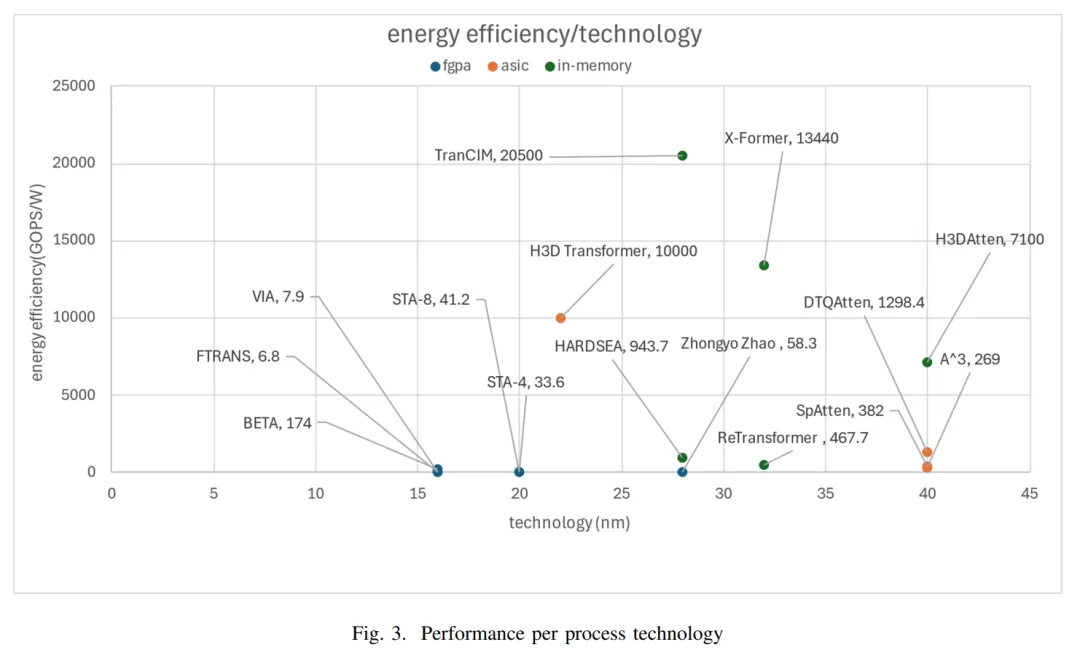

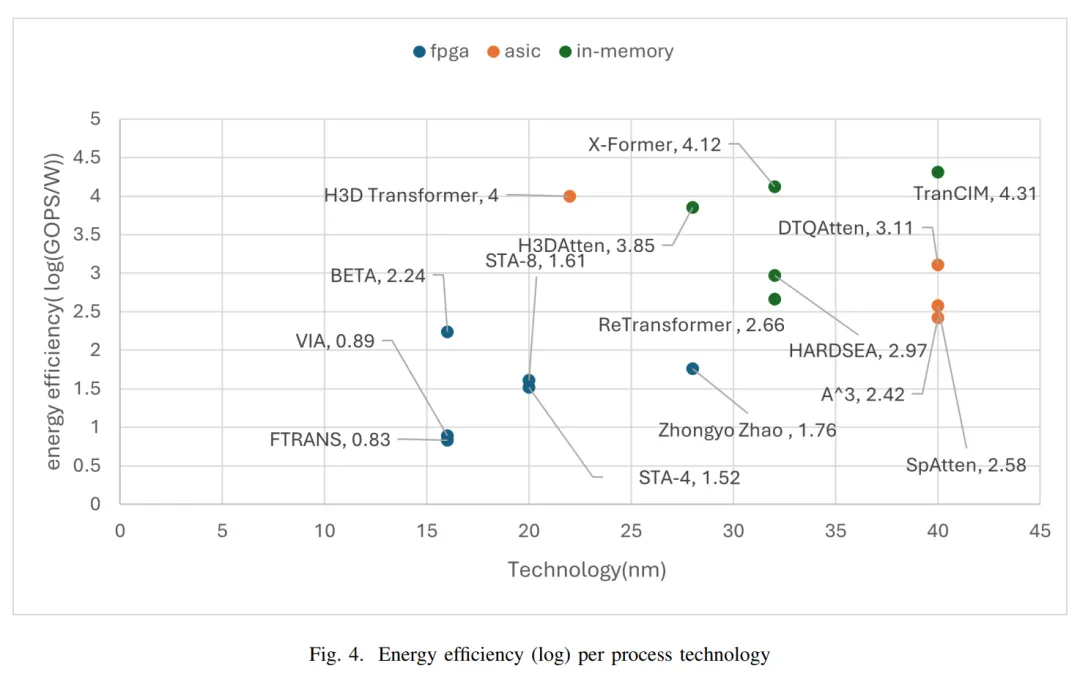

Figure 3 shows the energy efficiency (GOPs/W) of most of the hardware accelerators, and Figure 4 shows the same data on a logarithmic scale. Since many of the works do not report energy efficiency, the paper only lists the accelerators that do. Again, many accelerators use different process technologies, so a fair comparison is difficult.

The results show that in-memory accelerators have better energy efficiency. The reason is reduced data movement: this architecture allows data to be processed directly in memory instead of being transferred from memory to the CPU.

Speedup Comparison at 16nm Process

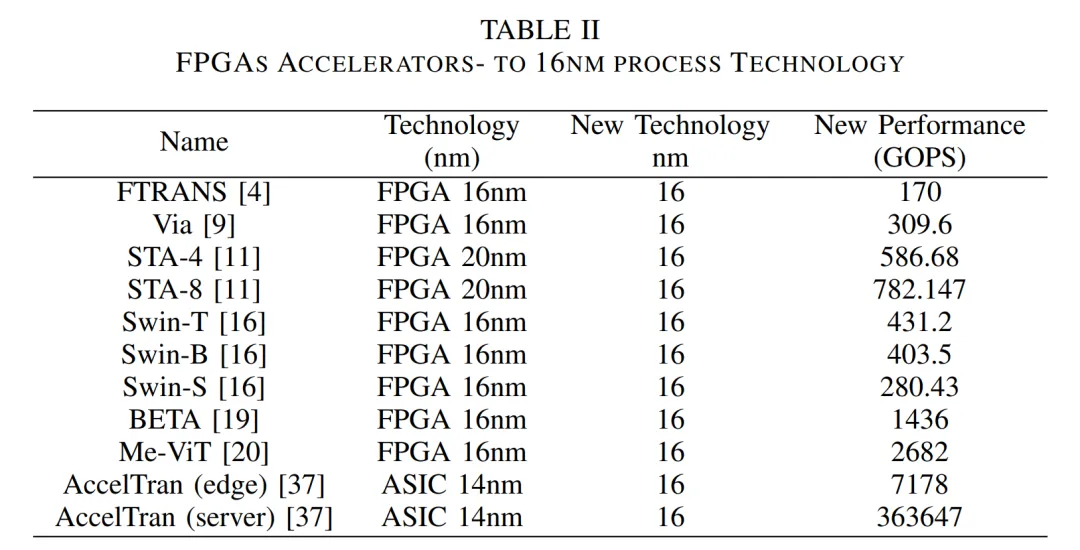

Table II shows the extrapolated performance of the different hardware accelerators at a 16nm process.

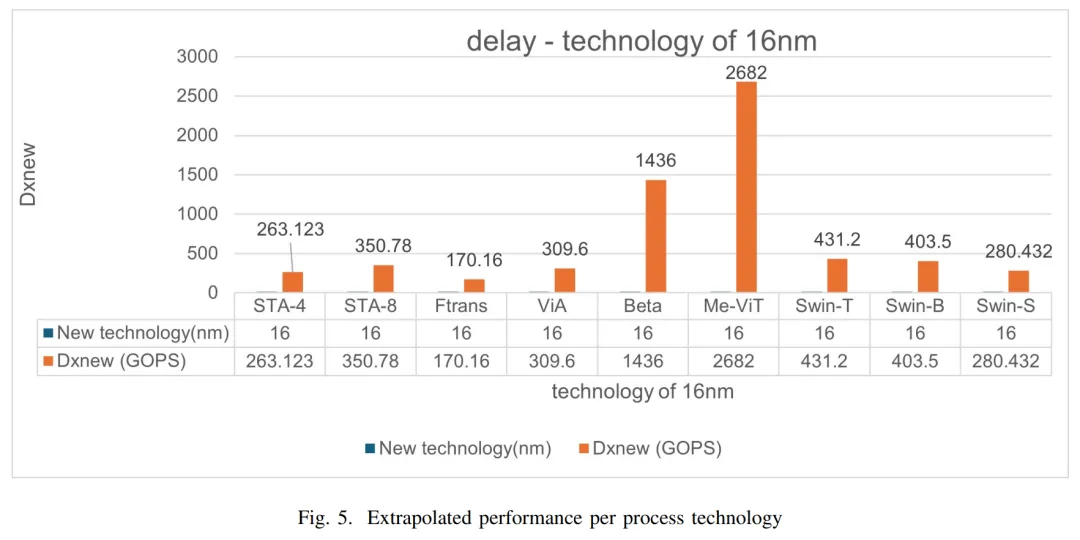

Figure 5 shows the absolute performance of the different hardware accelerators when their performance is extrapolated to the same 16nm process technology; AccelTran has the highest performance.

Experimental Extrapolation

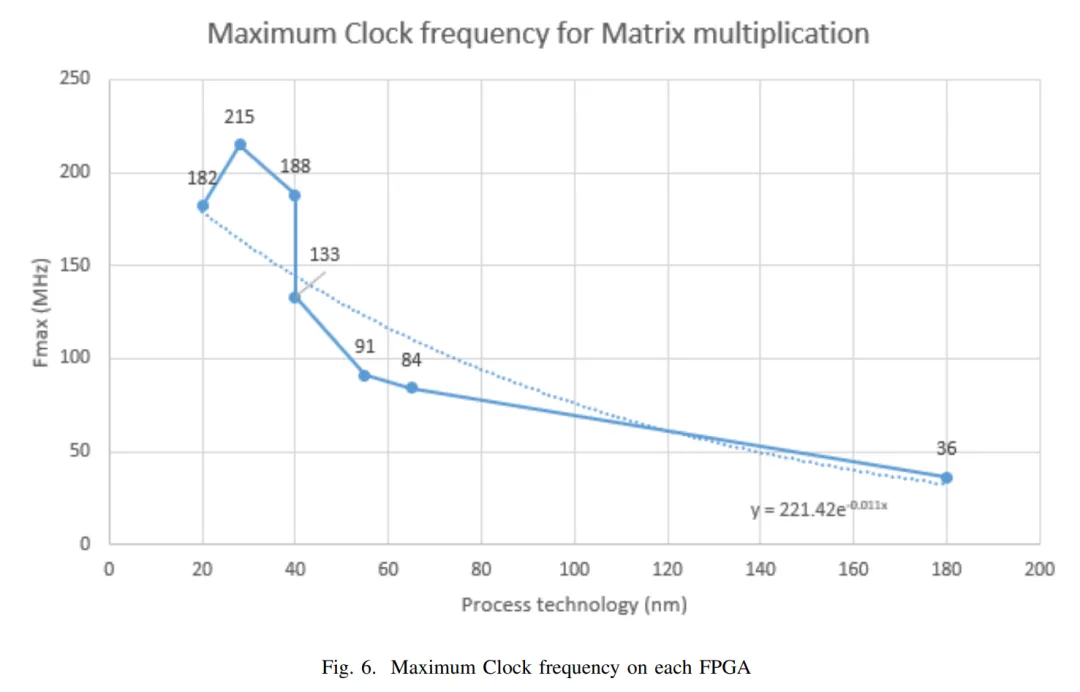

The paper also checks the extrapolation experimentally on FPGAs: it runs matrix multiplication code on devices built on 20nm, 28nm, 40nm, 55nm, 65nm, and 180nm processes to verify the theoretical conversion to the 16nm process. According to the researchers, the matrix multiplication results on FPGAs help extrapolate the results of different hardware accelerators to the same process technology.

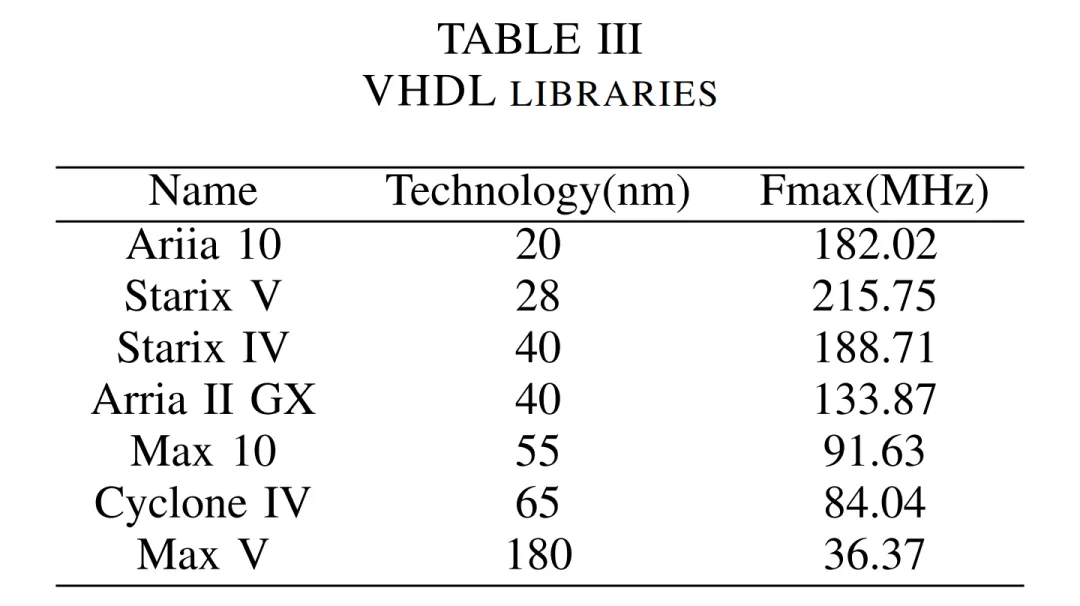

Table III shows the results for different FPGA devices, process technologies, and matrix multiplication IP cores.

Figure 6 shows the maximum clock frequency achieved by the matrix multiplication IP core on each FPGA device and process technology. Since FPGA performance depends on the maximum clock frequency, extrapolating performance in this way enables a fair comparison between architectures built on different process technologies.
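One simple way to picture this kind of extrapolation is sketched below. It assumes that throughput scales linearly with the ratio of maximum clock frequencies between two process nodes. That linear rule is an illustrative assumption, not necessarily the exact conversion used in the paper, and the function name and every number in the example are hypothetical.

```python
def extrapolate_gops(measured_gops, fmax_source_mhz, fmax_target_mhz):
    """Scale a measured throughput to a target process node.

    Assumption (illustrative only): throughput is proportional to the
    maximum clock frequency achievable on each process node.
    """
    if fmax_source_mhz <= 0 or fmax_target_mhz <= 0:
        raise ValueError("clock frequencies must be positive")
    return measured_gops * (fmax_target_mhz / fmax_source_mhz)


# Hypothetical example: an accelerator measured at 100 GOPs on an older node
# where the reference design reaches 200 MHz, projected to a 16nm node where
# the same reference design reaches 400 MHz.
projected = extrapolate_gops(100.0, fmax_source_mhz=200.0, fmax_target_mhz=400.0)
print(f"Projected performance at 16nm: {projected:.0f} GOPs")
# Projected performance at 16nm: 200 GOPs
```

In this picture, the per-node maximum frequencies would come from measurements such as those in Table III, with the 16nm value as the common target.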

For more experimental details, please refer to the original paper.