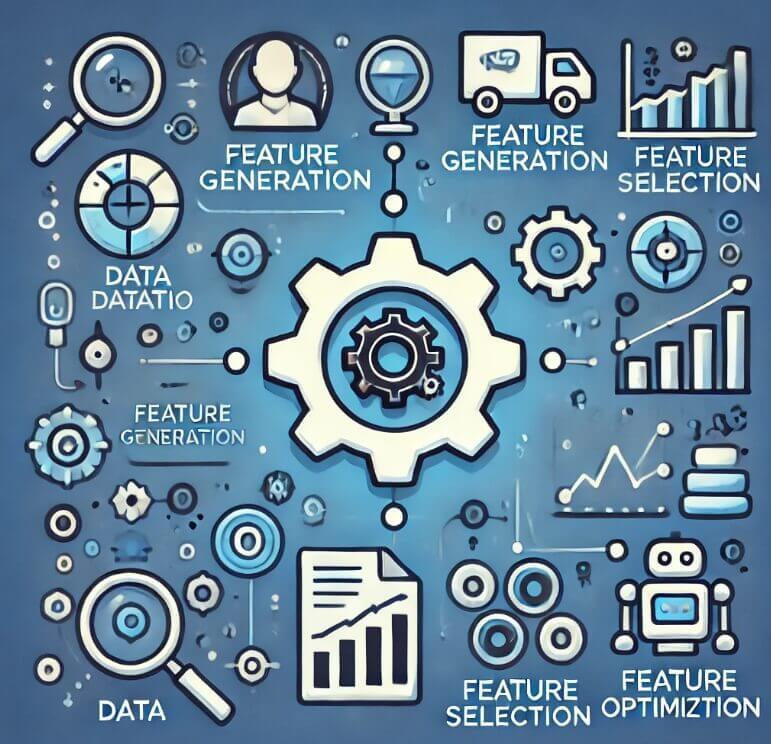

In machine learning projects, feature engineering is a critical step that directly impacts model performance. By extracting more useful features from raw data, it helps models better capture underlying patterns. However, traditional feature engineering often requires significant domain knowledge and iterative adjustments, making it a time-consuming and labor-intensive process.

In recent years, the rise of Automated Feature Engineering has provided a new solution to this problem. This technology uses automated methods to generate candidate features from data and select the most useful ones, making the feature engineering process more efficient. This article provides an in-depth introduction to the fundamental concepts, common techniques, and tools for automated feature engineering, along with practical code examples to demonstrate its application.

1. What Is Automated Feature Engineering?

Feature engineering is a crucial part of the machine learning workflow. It involves extracting, transforming, and selecting features from raw data to improve model performance. In traditional feature engineering, data scientists manually construct and select features, which demands substantial experience and domain knowledge.

Automated Feature Engineering, on the other hand, uses algorithms and automation tools to perform feature generation, selection, and optimization. Its goal is to reduce human intervention so that high-quality features and feature combinations can be found quickly across different datasets, ultimately improving model performance.

2. Core Methods of Automated Feature Engineering

Automated Feature Engineering typically involves two main tasks: generating new features and selecting features. Below are some common methods along with corresponding code examples:

2.1 Automated Feature Generation

Automated feature generation constructs new features from raw data, such as creating composite features through arithmetic operations or logical transformations. Common methods include:

Feature Combination: Performing arithmetic operations like addition, subtraction, multiplication, or division on existing features. For instance, generating an "age-to-income ratio" feature from "age" and "income."

Aggregation Operations: Grouping records by a categorical key (for example, a customer ID) and computing statistics such as the mean, maximum, or count of numeric values within each group.
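Both methods can be sketched with plain pandas. The `age` and `income` columns and all values below are hypothetical. `groupby(...).transform` attaches each group's statistic back onto the original rows, so the result can be used directly as model input.

```python
import pandas as pd

# Hypothetical customer transactions, made up for illustration
df = pd.DataFrame({
    "customer_id": [1, 2, 1, 2, 3],
    "age": [34, 51, 34, 51, 28],
    "income": [52000, 87000, 52000, 87000, 41000],
    "amount": [100, 150, 200, 300, 500],
})

# Feature combination: an arithmetic ratio of two existing columns
df["age_to_income"] = df["age"] / df["income"]

# Aggregation: group by the categorical key, summarise a numeric column,
# then broadcast the per-group statistic back onto every row of that group
grouped = df.groupby("customer_id")["amount"]
df["amount_mean_per_customer"] = grouped.transform("mean")
df["amount_max_per_customer"] = grouped.transform("max")
df["txn_count_per_customer"] = grouped.transform("count")

print(df)
```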

Code Example: Generating Features with Featuretools

Featuretools is a Python library for automated feature generation, capable of generating aggregation and transformation features from relational data.

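```python
import featuretools as ft
import pandas as pd

# Create a sample transactions table
data = pd.DataFrame({
    'customer_id': [1, 2, 1, 2, 3],
    'amount': [100, 150, 200, 300, 500],
    'timestamp': pd.date_range('2022-01-01', periods=5),
})

# Register the table; make_index=True creates the 'index' column,
# which does not exist in the raw data
es = ft.EntitySet(id='transactions')
es = es.add_dataframe(
    dataframe_name='transactions',
    dataframe=data,
    index='index',
    make_index=True,
    time_index='timestamp',
)

# Split out a parent 'customers' table, giving the aggregation
# primitives a one-to-many relationship to aggregate across
es = es.normalize_dataframe(
    base_dataframe_name='transactions',
    new_dataframe_name='customers',
    index='customer_id',
)

# Generate features automatically, one row per customer
feature_matrix, feature_defs = ft.dfs(
    entityset=es,
    target_dataframe_name='customers',
    agg_primitives=['mean', 'sum'],
    trans_primitives=['month', 'day'],
)

print(feature_matrix.head())
```

This example defines a transaction dataset, derives a customers table from it, and uses Featuretools' Deep Feature Synthesis to build per-customer features. These include the mean and total transaction amount (`MEAN(transactions.amount)`, `SUM(transactions.amount)`) and date parts such as the month of each customer's first transaction. Aggregation primitives need a parent-child relationship to aggregate over, which is why the `normalize_dataframe` step is required. With a single table, only the transform primitives would apply.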

2.2 Feature Selection

After generating new features, many redundant or irrelevant features may be included. Feature selection identifies the most useful features for the model. Common feature selection methods include:

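Statistical Methods: Tests such as ANOVA (for numeric features with a categorical target) or chi-square (for non-negative or categorical features) that keep features with a statistically significant relationship to the target variable.

Model-Based Methods: Fitting a model such as a Random Forest (impurity-based feature importances) or Lasso regression (whose L1 penalty shrinks the coefficients of unhelpful features to zero) and keeping the features the model relies on most.

Recursive Feature Elimination (RFE): Repeatedly training the model, removing the least important feature(s), and retraining on the remainder until the desired number of features is left.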

Code Example: Feature Selection with Scikit-Learn

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline

# Load the example dataset
data = load_iris()
X, y = data.data, data.target

# Split first so that feature selection only ever sees training data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Keep the 2 features with the highest ANOVA F-scores, then train a Random Forest
model = Pipeline([
    ("select", SelectKBest(score_func=f_classif, k=2)),
    ("clf", RandomForestClassifier(random_state=42)),
])
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

selected = model.named_steps["select"].get_support()
print("Selected features:", [name for name, keep in zip(data.feature_names, selected) if keep])
print(f"Model accuracy with selected features: {accuracy_score(y_test, y_pred):.2f}")
```

In this example, SelectKBest keeps the two features with the highest ANOVA F-scores against the target variable. Wrapping the selector and the classifier in a Pipeline means the selector is fit on the training split only, so no information from the test set leaks into the choice of features. Training on fewer features improves training efficiency and can enhance the model's generalization ability.
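The example above illustrates only the statistical approach. The sketch below covers the other two methods from the list, using scikit-learn's `SelectFromModel` and `RFE` on the same Iris data. We chose a Random Forest for the model-based method and logistic regression as the estimator inside RFE. Both selectors are fit on the full dataset for brevity; in a real evaluation they should be fit on the training split only.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE, SelectFromModel
from sklearn.linear_model import LogisticRegression

data = load_iris()
X, y = data.data, data.target
names = data.feature_names

# Model-based selection: keep features whose Random Forest importance is at
# least the mean importance (SelectFromModel's default threshold for
# estimators without an L1 penalty)
forest = RandomForestClassifier(n_estimators=200, random_state=42)
sfm = SelectFromModel(forest).fit(X, y)
model_based = [n for n, keep in zip(names, sfm.get_support()) if keep]

# Recursive Feature Elimination: repeatedly fit the estimator and drop the
# weakest feature (step=1) until 2 features remain
rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=2, step=1).fit(X, y)
rfe_selected = [n for n, keep in zip(names, rfe.support_) if keep]

print("SelectFromModel kept:", model_based)
print("RFE kept:", rfe_selected)
print("RFE ranking (1 = kept):", dict(zip(names, rfe.ranking_)))
```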

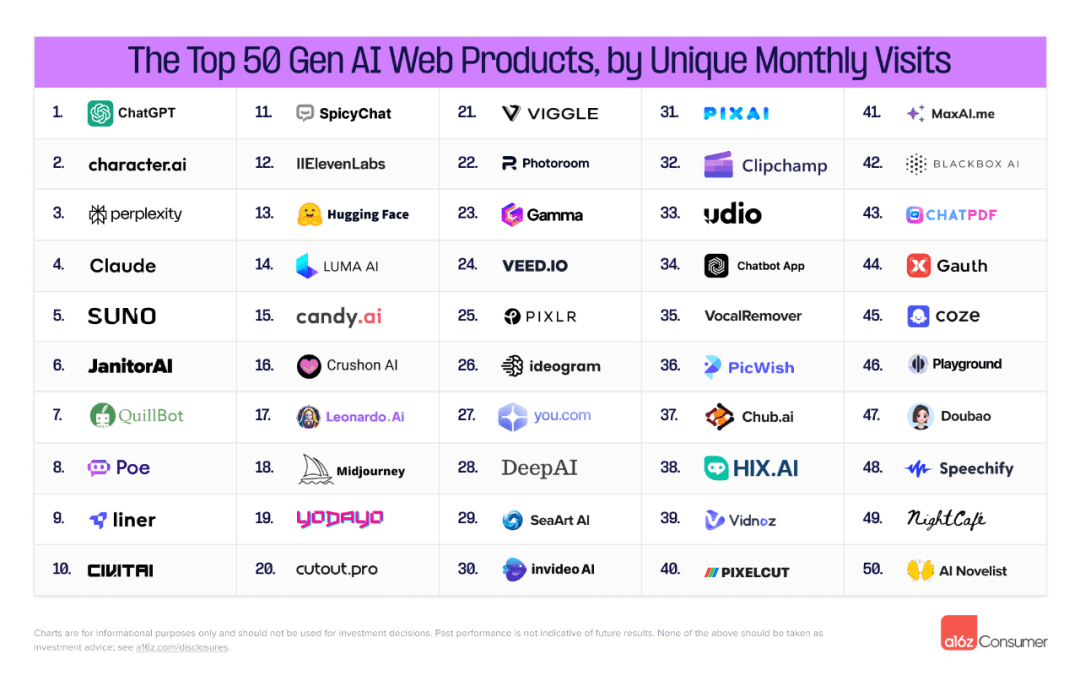

3. Popular Tools for Automated Feature Engineering

In practice, many tools facilitate automated feature engineering. Below are some commonly used open-source tools:

Featuretools: Specializes in generating aggregation and transformation features, ideal for structured data.

Auto-Sklearn: Searches jointly over data and feature preprocessing (including feature selection), model choice, and hyperparameters, reducing the need for manual feature engineering.

H2O AutoML: Automatically trains and tunes many models and builds stacked ensembles on H2O's distributed engine, making it suitable for large datasets; its built-in feature engineering is comparatively limited.

TPOT: An AutoML tool based on genetic programming that evolves whole pipelines, including feature preprocessing, construction, and selection steps, to find well-performing combinations.

4. Practical Application Scenarios

Automated feature engineering has broad applications in real-world scenarios. Here are a few examples:

4.1 Credit Scoring in Banking

In credit scoring models, a customer’s historical transaction data and account information are key predictive features. Automated feature generation can quickly create features like "average transaction amount in the past three months," improving model accuracy.
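A minimal pandas sketch of such a time-window feature follows. The transaction log, the customer IDs, and the scoring date (`cutoff`) are hypothetical. Only transactions strictly before the cutoff are used, so information from after the scoring date cannot leak into the feature.

```python
import pandas as pd

# Hypothetical transaction history; dates and amounts are made up
tx = pd.DataFrame({
    "customer_id": [1, 1, 1, 2, 2, 3],
    "date": pd.to_datetime(["2022-01-15", "2022-03-10", "2022-05-20",
                            "2022-02-01", "2022-05-30", "2021-12-01"]),
    "amount": [120.0, 80.0, 200.0, 50.0, 150.0, 75.0],
})

# Assumed scoring date; the window covers the three months before it
cutoff = pd.Timestamp("2022-06-01")
window_start = cutoff - pd.DateOffset(months=3)
recent = tx[(tx["date"] >= window_start) & (tx["date"] < cutoff)]

avg_3m = (
    recent.groupby("customer_id")["amount"]
    .mean()
    .rename("avg_amount_3m")
    # Keep customers with no recent activity (their value becomes NaN)
    .reindex(tx["customer_id"].unique())
)
print(avg_3m)
```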

4.2 Medical Diagnostics

In medical data, automated feature generation helps extract valuable features from patient history, such as the frequency and duration of specific symptoms, enhancing diagnostic model performance.
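As an illustration, symptom frequency and duration can be computed per patient with a grouped aggregation. The episode table is hypothetical. Duration is taken here as `end - start` in days, which is one of several possible conventions.

```python
import pandas as pd

# Hypothetical symptom episodes per patient (start/end dates are made up)
episodes = pd.DataFrame({
    "patient_id": ["A", "A", "A", "B", "B"],
    "symptom": ["cough", "cough", "fever", "fever", "fever"],
    "start": pd.to_datetime(["2023-01-01", "2023-02-10", "2023-02-11",
                             "2023-01-05", "2023-03-01"]),
    "end": pd.to_datetime(["2023-01-04", "2023-02-12", "2023-02-14",
                           "2023-01-06", "2023-03-05"]),
})
episodes["duration_days"] = (episodes["end"] - episodes["start"]).dt.days

# One row per patient: how often each symptom occurred ("count")
# and its total duration in days ("sum"); absent symptoms become 0
features = (
    episodes.groupby(["patient_id", "symptom"])["duration_days"]
    .agg(["count", "sum"])
    .unstack("symptom", fill_value=0)
)
features.columns = [f"{symptom}_{stat}" for stat, symptom in features.columns]
print(features)
```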

4.3 Recommendation Systems

In recommendation systems, data like user browsing history and purchase records can be used to generate personalized features, such as "user preference level for a specific category," to improve recommendation accuracy.
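One simple way to define such a preference level, assumed here for illustration, is the share of a user's purchases that fall in each category. With a hypothetical purchase log, `pd.crosstab(..., normalize="index")` computes it directly:

```python
import pandas as pd

# Hypothetical purchase log
purchases = pd.DataFrame({
    "user_id": ["u1", "u1", "u1", "u1", "u2", "u2"],
    "category": ["books", "books", "games", "music", "games", "games"],
})

# Preference level = fraction of each user's purchases per category,
# so every row sums to 1 (one simple definition among many)
preference = pd.crosstab(purchases["user_id"], purchases["category"], normalize="index")
print(preference.round(2))
```

Each column of `preference` can then be joined onto user-item candidate pairs as a feature. Richer variants might weight recent purchases more heavily or include browsing events.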

5. Advantages and Challenges of Automated Feature Engineering

Advantages:

Efficiency: Reduces the time spent on manual feature construction, allowing data scientists to focus on model design and evaluation.

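Adaptability: Can be applied to diverse data types, including relational and time-series data, without hand-writing new feature code for every dataset.

Performance Improvement: Can improve model accuracy and generalization, particularly when the useful signal lies in interactions or aggregations between features that are hard to spot manually.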

Challenges:

Computational Cost: Generating numerous features can consume significant computational resources, especially for large datasets.

Interpretability Issues: Automatically generated features may lack interpretability, posing challenges in fields like finance or healthcare where transparency is crucial.

Need for Fine-Tuning: Despite automation, fine-tuning and manual adjustments are often necessary to ensure optimal performance.
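The computational cost grows quickly because the number of candidate features is combinatorial. As one concrete illustration (our choice, not the only generation scheme), the sketch below counts the columns produced by scikit-learn's `PolynomialFeatures`. It generates all monomials of degree at most d in n inputs, of which there are C(n+d, d) including the constant term. For example, 10 inputs at degree 2 give 65 generated features, while 50 inputs at degree 3 already give 23,425.

```python
from math import comb

import numpy as np
from sklearn.preprocessing import PolynomialFeatures


def n_poly_features(n_features: int, degree: int) -> int:
    """Number of columns PolynomialFeatures creates, excluding the bias term."""
    pf = PolynomialFeatures(degree=degree, include_bias=False)
    pf.fit(np.zeros((1, n_features)))  # fitting only needs the column count
    return pf.n_output_features_


for n in (10, 50, 100):
    for d in (2, 3):
        generated = n_poly_features(n, d)
        # Closed form: monomials of degree <= d in n variables, minus the constant
        assert generated == comb(n + d, d) - 1
        print(f"{n:>3} input features, degree {d}: {generated:>7} generated features")
```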

6. Future Outlook

As AutoML technologies mature, automated feature engineering is likely to see broader adoption, with increasingly capable tools and algorithms. Future research directions may include:

Efficient Feature Generation Algorithms: Developing methods to generate useful features in less time.

Automated Interpretability Techniques: Making automated features more interpretable to meet transparency requirements in specific industries.

Deep Learning Integration: Leveraging unsupervised learning methods like autoencoders for feature extraction.

Summary

Automated Feature Engineering serves as a powerful "accelerator" for machine learning models, enabling rapid construction and optimization of features. Whether by generating new features or by selecting the most relevant ones, automation reduces manual effort and can improve model performance. By leveraging appropriate tools and methods, automated feature engineering can help us move faster and further in the journey of data discovery and model building.