Meta-learning is attracting growing attention in machine learning. Its main goal is to enable models to adapt quickly to new tasks, usually from only a few examples. This ability matters in real-world applications such as image recognition, natural language processing, and healthcare, where labeled data for a new task is often limited. This article covers the basic concepts of meta-learning, its main algorithms, and its applications.

1. Basic Concepts of Meta-Learning

1.1 What is Meta-Learning?

Meta-learning, or "learning to learn," refers to training a model across many tasks so that it learns how to learn: it extracts knowledge shared across tasks and uses it to adapt quickly to a new one. The fundamental components of meta-learning include:

Task Set: A set of tasks that share similar characteristics.

Learning Algorithm: The algorithm used to train the model on a specific task.

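Meta-Learning Algorithm: The outer algorithm that learns, across the whole task set, what should be shared between tasks (for example, a parameter initialization or an update rule).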

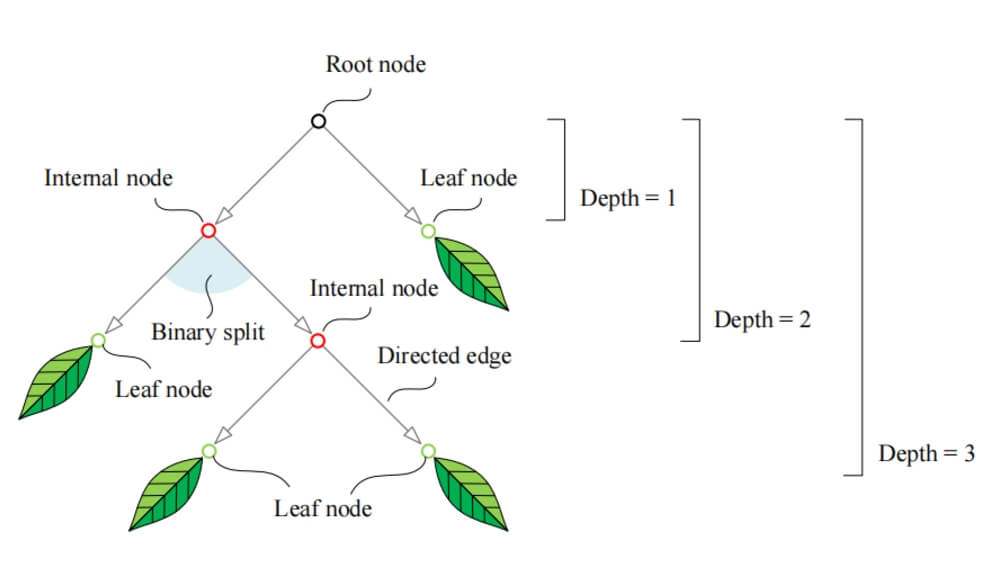
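To make the idea of a task concrete: in few-shot classification, a task is often represented as an "episode" with a small support set used for adaptation and a query set used for evaluation. The sketch below is only an illustration under that assumption. The function name `sample_episode` and its parameters `n_way`, `k_shot`, and `q_queries` are hypothetical and do not come from any library.

```python
import random
from collections import defaultdict


def sample_episode(examples, n_way, k_shot, q_queries, rng=random):
    """Build one N-way K-shot task from (item, label) pairs.

    Returns (support, query): lists of (item, episode_label) pairs,
    where episode_label is in range(n_way).
    """
    by_label = defaultdict(list)
    for item, label in examples:
        by_label[label].append(item)

    # Only classes with enough examples can take part in the episode
    eligible = [c for c, items in by_label.items() if len(items) >= k_shot + q_queries]
    classes = rng.sample(eligible, n_way)

    support, query = [], []
    for episode_label, c in enumerate(classes):
        items = rng.sample(by_label[c], k_shot + q_queries)
        support += [(x, episode_label) for x in items[:k_shot]]
        query += [(x, episode_label) for x in items[k_shot:]]
    return support, query


# Toy dataset: 5 classes with 10 items each
data = [(f"item_{c}_{i}", c) for c in range(5) for i in range(10)]
support, query = sample_episode(data, n_way=3, k_shot=2, q_queries=4)
print(len(support), len(query))  # 6 12
```

A task set in the sense above would then be a collection of such episodes, each drawn with a different choice of classes.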

1.2 Classification of Meta-Learning

Meta-learning can be classified based on its implementation approach and application scenarios, with the main categories being:

Model-Based Meta-Learning: This approach uses neural network architectures designed for fast adaptation, so that the network itself captures the relationships between tasks.

Optimization-Based Meta-Learning: This approach learns how to optimize, for example a parameter initialization from which a few gradient steps are enough to perform well on a new task. MAML belongs to this category.

Memory-Based Meta-Learning: This method adds external memory components that store information from earlier examples and retrieve it when adapting to a new task.

2. Main Algorithms of Meta-Learning

2.1 Model-Agnostic Meta-Learning (MAML)

MAML (Model-Agnostic Meta-Learning) is one of the most representative meta-learning algorithms. It aims to find a good model initialization that allows the model to quickly adapt to new tasks after a few gradient updates.

Steps of the MAML Algorithm:

Task Sampling: Randomly select multiple tasks from a task distribution.

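Task Training: For each task, start from the current shared parameters and compute the loss gradient on a small set of training examples from that task.

Parameter Update: Apply one or a few gradient steps to obtain task-specific parameters. The shared parameters themselves are not changed in this step.

Meta Update: Evaluate each task's adapted parameters on new examples from the same task, average these losses over all tasks, and update the shared initial parameters with the gradient of that average.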
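The same steps can be written in symbols for the case of a single inner gradient step. The notation is assumed here rather than taken from the text above: θ is the shared initialization, θ'_i the parameters adapted to task T_i, α the task learning rate, β the meta learning rate, N the number of sampled tasks, and "support" and "query" denote the examples used for adaptation and for evaluation within a task.

```latex
\begin{aligned}
\theta_i' &= \theta - \alpha \, \nabla_\theta \, \mathcal{L}_{\mathcal{T}_i}^{\text{support}}(\theta) \\
\theta &\leftarrow \theta - \beta \, \nabla_\theta \, \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}_{\mathcal{T}_i}^{\text{query}}(\theta_i')
\end{aligned}
```

Because θ'_i depends on θ, the gradient in the second line passes through the first update, which is where second-order derivatives enter.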

Advantages and Disadvantages of MAML:

Advantages:

Applicable to any model trained with gradient descent (e.g., neural networks, linear regression).

Performs well in few-shot learning tasks.

Disadvantages:

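High computational cost: the meta update differentiates through the task-level gradient steps, which requires second-order gradients and grows with the number of inner steps and tasks.

Assumes that tasks are similar: a single shared initialization adapts poorly when new tasks differ strongly from the training tasks.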

MAML Code Implementation:

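    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.func import functional_call  # requires PyTorch 2.0 or later


    class MAML(nn.Module):
        """Two-layer network whose initial weights are meta-learned."""

        def __init__(self, input_size, hidden_size, output_size):
            super().__init__()
            self.fc1 = nn.Linear(input_size, hidden_size)
            self.fc2 = nn.Linear(hidden_size, output_size)

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            return self.fc2(x)


    def adapt(model, task, n_shots, n_updates, task_lr):
        """Task training: return task-specific weights after a few gradient steps."""
        params = dict(model.named_parameters())
        loss_fn = nn.MSELoss()
        for _ in range(n_updates):
            data, labels = task.sample(n_shots)  # Get task data
            loss = loss_fn(functional_call(model, params, (data,)), labels)
            # create_graph=True keeps the graph so the meta update can
            # differentiate through this task update
            grads = torch.autograd.grad(loss, list(params.values()), create_graph=True)
            params = {name: p - task_lr * g
                      for (name, p), g in zip(params.items(), grads)}  # Task update
        return params


    def calculate_meta_loss(model, tasks, n_shots, n_updates, task_lr):
        """Average loss of the adapted weights on fresh data from each task."""
        loss = 0.0
        for task in tasks:
            adapted = adapt(model, task, n_shots, n_updates, task_lr)
            data, labels = task.sample(n_shots)  # New samples for evaluation
            output = functional_call(model, adapted, (data,))
            loss = loss + nn.MSELoss()(output, labels)
        return loss / len(tasks)


    def maml_train(model, tasks, n_shots, n_updates, meta_lr, task_lr):
        """Run one meta update over a batch of tasks and return the meta loss."""
        # When calling this repeatedly, create the optimizer once outside the
        # function so that Adam keeps its running statistics between updates.
        meta_optimizer = optim.Adam(model.parameters(), lr=meta_lr)
        meta_optimizer.zero_grad()
        meta_loss = calculate_meta_loss(model, tasks, n_shots, n_updates, task_lr)
        meta_loss.backward()  # Gradient with respect to the initial parameters
        meta_optimizer.step()
        return meta_loss.item()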
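The code above calls `task.sample(...)` but does not define the task objects. One possible shape for them, assuming a sine-wave regression setting (a common toy problem for MAML), is sketched below. The class `SineTask` and its amplitude and phase ranges are illustrative assumptions, not part of the implementation above.

```python
import math
import torch


class SineTask:
    """Hypothetical regression task: y = amplitude * sin(x + phase)."""

    def __init__(self, amplitude=None, phase=None):
        # Each task draws its own amplitude and phase (ranges are assumptions)
        self.amplitude = (amplitude if amplitude is not None
                          else float(torch.empty(1).uniform_(0.1, 5.0)))
        self.phase = (phase if phase is not None
                      else float(torch.empty(1).uniform_(0.0, math.pi)))

    def sample(self, n_shots=10):
        """Return (data, labels), each of shape (n_shots, 1)."""
        x = torch.empty(n_shots, 1).uniform_(-5.0, 5.0)
        y = self.amplitude * torch.sin(x + self.phase)
        return x, y


tasks = [SineTask() for _ in range(4)]
data, labels = tasks[0].sample(n_shots=5)
print(data.shape, labels.shape)  # torch.Size([5, 1]) torch.Size([5, 1])
```

With tasks like these, a model built with `input_size=1` and `output_size=1` matches the data shapes.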

2.2 Memory-Based Meta-Learning

Memory-Augmented Neural Networks (MANNs) use external memory components to store and retrieve information, making them particularly suitable for tasks involving sequential data and long-term memory. By enhancing the model's memory capacity, MANNs are better able to leverage existing knowledge when facing new tasks.

Key Components:

Memory Unit: Used to store information.

Read/Write Mechanism: An algorithm to control how memory is read and written.

MANNs Code Implementation:

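    import torch
    import torch.nn as nn


    class Memory(nn.Module):
        def __init__(self, memory_size, memory_dim):
            super().__init__()
            # A buffer follows .to(device) and is saved with the model, but is not trained
            self.register_buffer("memory", torch.zeros(memory_size, memory_dim))

        def read(self, key):
            # key: (batch, memory_dim). Soft attention over the slots keeps the
            # read differentiable, unlike picking a single slot with argmax.
            weights = torch.softmax(key @ self.memory.t(), dim=-1)
            return weights @ self.memory

        @torch.no_grad()
        def write(self, key, value):
            # key, value: (memory_dim,). Overwrite the slot closest to the key.
            slot = torch.argmin(torch.norm(self.memory - key, dim=1))
            self.memory[slot] = value


    class MANN(nn.Module):
        def __init__(self, input_size, hidden_size, memory_size, memory_dim):
            super().__init__()
            self.fc = nn.Linear(input_size, hidden_size)
            # Project the hidden state to the memory width so that
            # hidden_size and memory_dim may differ
            self.key = nn.Linear(hidden_size, memory_dim)
            self.memory = Memory(memory_size, memory_dim)

        def forward(self, x):
            hidden = torch.relu(self.fc(x))
            return self.memory.read(self.key(hidden))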
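To illustrate the read mechanism on concrete numbers, the sketch below performs a content-based read over a tiny hand-filled memory using cosine similarity followed by a softmax. The helper name `content_read` and the `sharpness` parameter are assumptions made for this example; published memory-augmented architectures differ in how they address memory.

```python
import torch
import torch.nn.functional as F


def content_read(memory, key, sharpness=10.0):
    """Soft read: weight each memory row by its cosine similarity to the key.

    memory: (slots, dim), key: (dim,). Returns (read_vector, weights).
    """
    similarity = F.cosine_similarity(memory, key.unsqueeze(0), dim=1)  # (slots,)
    weights = torch.softmax(sharpness * similarity, dim=0)             # sums to 1
    return weights @ memory, weights


memory = torch.tensor([[1.0, 0.0],
                       [0.0, 1.0],
                       [-1.0, 0.0]])
key = torch.tensor([0.9, 0.1])

read_vector, weights = content_read(memory, key)
print(weights.argmax().item())  # 0: the first slot is closest to the key
```

A larger `sharpness` pushes the weights toward a single slot, while a smaller value blends several slots into the result.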

2.3 Transfer Learning

Transfer learning is closely related to meta-learning, although it is usually treated as a separate paradigm. It improves learning efficiency by transferring knowledge from a previously learned task to a new one, and it typically involves two phases: pretraining and fine-tuning. In the pretraining phase, the model is trained on a large dataset; in the fine-tuning phase, it is adjusted for a new task, often by retraining only the last layers.

Transfer Learning Code Implementation:

    import torch.nn as nn
    import torch.optim as optim
    from torchvision import models

    num_classes = 10  # Number of classes in the new task (example value)

    # Pretrained model (the weights argument replaces pretrained=True in torchvision 0.13+)
    model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

    # Freeze the pretrained layers
    for param in model.parameters():
        param.requires_grad = False

    # Replace the last layer to adapt to the new task; a new layer is trainable by default
    num_ftrs = model.fc.in_features
    model.fc = nn.Linear(num_ftrs, num_classes)

    # Only train the last layer
    optimizer = optim.Adam(model.fc.parameters(), lr=0.001)
    # Training...
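The training step itself is left open above. One way it might look is sketched below, using a small stand-in network and random tensors in place of ResNet-50 and a real dataset so that the example runs without downloads. The layer sizes, batch size, and number of steps are all assumptions.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Stand-in for a pretrained backbone plus a new task-specific head
backbone = nn.Sequential(nn.Linear(16, 32), nn.ReLU())
head = nn.Linear(32, 3)  # 3 classes in the hypothetical new task
model = nn.Sequential(backbone, head)

# Freeze the backbone; only the head receives gradient updates
for param in backbone.parameters():
    param.requires_grad = False

optimizer = optim.Adam(head.parameters(), lr=0.001)
criterion = nn.CrossEntropyLoss()

# Random data standing in for the new task's training set
inputs = torch.randn(64, 16)
targets = torch.randint(0, 3, (64,))

backbone_before = backbone[0].weight.clone()
head_before = head.weight.clone()

model.train()
for step in range(20):
    optimizer.zero_grad()
    loss = criterion(model(inputs), targets)
    loss.backward()
    optimizer.step()

print(torch.equal(backbone_before, backbone[0].weight))  # True: frozen layers are unchanged
print(torch.equal(head_before, head.weight))             # False: the head was trained
```

With a real backbone that contains batch normalization layers, the frozen part is often also kept in evaluation mode, since those layers update their running statistics in training mode even when their weights are frozen.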

3. Applications of Meta-Learning

Meta-learning shows great potential in a variety of fields, including:

3.1 Natural Language Processing (NLP)

In NLP, meta-learning is applied to tasks such as text classification, named entity recognition, and machine translation. By training on multiple language tasks, models can adapt to a new text task with less labeled data than training from scratch would need.

Specific Application Examples:

Text Classification: Meta-learning helps models quickly adapt to new categories with few labeled samples.

Machine Translation: By training on multiple language pairs, models can learn translation rules faster for new pairs.

3.2 Computer Vision

In computer vision, meta-learning is primarily applied to image classification and object detection tasks. By training on multiple image datasets, models can rapidly adapt to new image classification tasks. For example, few-shot image classification, where a model must learn new categories from only a few labeled examples, is a standard setting for evaluating meta-learning methods.

Specific Application Examples:

Face Recognition: Meta-learning enables the recognition of new users with few samples.

Object Detection: Models can quickly adapt to object detection tasks in different scenes.

3.3 Reinforcement Learning

In reinforcement learning, meta-learning is used to improve the agent's learning speed in new environments. By training in multiple environments, the agent can reuse what it has learned about good policies when it encounters a new setting, so it needs fewer interactions to perform well.

Specific Application Examples:

Autonomous Driving: After training in simulated environments, the agent can quickly adapt to real-world driving scenarios.

Game AI: Training in multiple games allows AI to quickly learn new game rules and strategies.

3.4 Healthcare

In healthcare, meta-learning helps models quickly adapt to new patients and diseases. It can be applied to tasks like disease prediction and medical image analysis, enhancing the accuracy of medical decisions.

Specific Application Examples:

Disease Prediction: Models can quickly make predictions based on new patient data.

Image Analysis: Models adapt quickly to different types of medical images, such as X-rays or MRIs, for diagnosis.

4. Challenges and Future Directions in Meta-Learning

Despite its vast potential, meta-learning faces several challenges in practical applications:

4.1 Data Scarcity

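In many applications, data scarcity remains a problem. Meta-learning reduces the amount of data needed for each new task, but it still depends on having many related training tasks. When the available tasks are few or only loosely related, the meta-learner can overfit to them and adapt poorly to new ones.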

4.2 Computational Complexity

Many meta-learning algorithms, like MAML, are computationally expensive: each meta update adapts the model to every sampled task and, in MAML's case, backpropagates through those adaptation steps. Reducing this cost is a key research direction.
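One widely used way to cut this cost is a first-order approximation of MAML, which skips the second-order terms by treating the adapted weights as if they did not depend on the initialization. The sketch below shows a single first-order meta step on a toy linear model. The function name `fomaml_step`, the synthetic tasks, and all hyperparameters are assumptions chosen for illustration.

```python
import copy
import torch
import torch.nn as nn


def fomaml_step(model, tasks, task_lr=0.01, meta_lr=0.1, n_updates=1):
    """One first-order MAML meta step.

    tasks: list of ((x_support, y_support), (x_query, y_query)) tensors.
    """
    loss_fn = nn.MSELoss()
    meta_grads = [torch.zeros_like(p) for p in model.parameters()]

    for (x_s, y_s), (x_q, y_q) in tasks:
        learner = copy.deepcopy(model)  # task-specific copy of the initialization
        # Inner loop: plain gradient steps on the support set, no graph kept across steps
        for _ in range(n_updates):
            grads = torch.autograd.grad(loss_fn(learner(x_s), y_s),
                                        list(learner.parameters()))
            with torch.no_grad():
                for p, g in zip(learner.parameters(), grads):
                    p -= task_lr * g
        # Query gradient at the adapted weights, used directly as the meta-gradient
        grads = torch.autograd.grad(loss_fn(learner(x_q), y_q),
                                    list(learner.parameters()))
        for acc, g in zip(meta_grads, grads):
            acc += g / len(tasks)

    # Apply the averaged gradient to the shared initialization
    with torch.no_grad():
        for p, g in zip(model.parameters(), meta_grads):
            p -= meta_lr * g


torch.manual_seed(0)
model = nn.Linear(1, 1)
before = [p.clone() for p in model.parameters()]

# Two synthetic tasks: y = slope * x with a different slope per task
tasks = []
for slope in (1.0, 2.0):
    x_s, x_q = torch.randn(5, 1), torch.randn(5, 1)
    tasks.append(((x_s, slope * x_s), (x_q, slope * x_q)))

fomaml_step(model, tasks)
```

The trade-off is that the meta-gradient is only approximate, in exchange for avoiding backpropagation through the inner loop.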

4.3 Task Correlation

The correlation between tasks significantly impacts the performance of meta-learning. Future research could explore how to effectively select tasks and establish stronger relationships between them.

4.4 Explainability

The explainability of meta-learning models is an important area of research. Future efforts could focus on improving the transparency of these models, enabling users to understand their decision-making processes.

Summary

As a significant research domain, meta-learning is progressively demonstrating its potential in various application areas. By understanding and applying its fundamental algorithms, researchers can better tackle learning challenges in scenarios with insufficient samples or rapidly evolving tasks. As research advances, meta-learning is poised to play an even greater role in the future development of artificial intelligence.