In the field of deep learning, fully connected layers, loss functions, and gradient descent are three important cornerstones. If you are embarking on your deep learning journey, understanding these concepts is the first step toward success. This article will provide a detailed breakdown of these three topics, from concepts to code, from basics to advanced, helping you grow from a beginner to a developer capable of solving real-world problems.

Part 1: Fully Connected Layers - The Basic Unit of Neural Networks

1.1 What is a Fully Connected Layer?

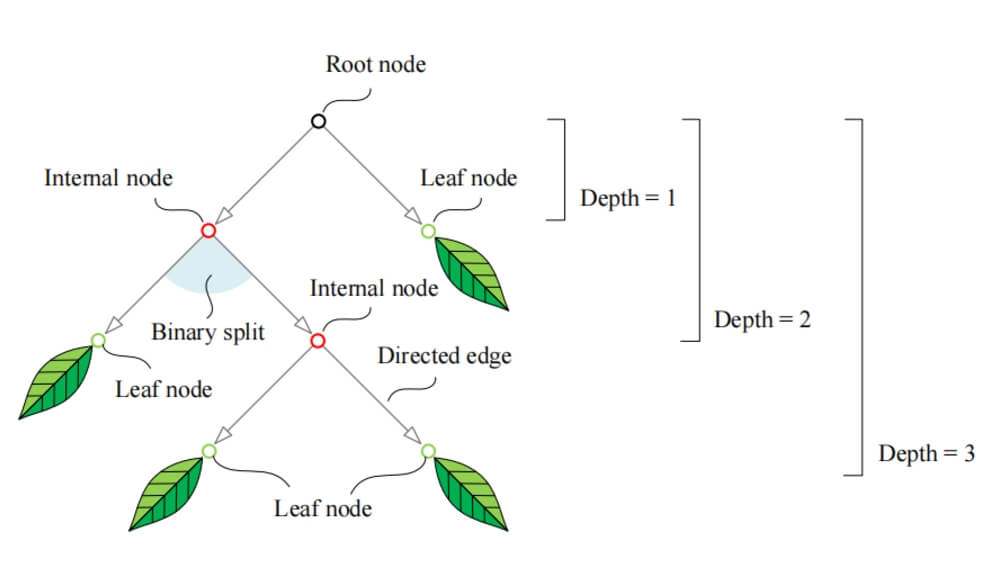

A fully connected layer (FC layer), also called a dense or linear layer, is one of the most fundamental components of a neural network: every output unit is connected to every input feature. Its main task is to map the input features to the output space and to learn the relationships between features in the process.

Mathematical Definition: A fully connected layer computes its output $y$ as follows:

$$y = f(Wx + b)$$

$x$: Input vector, representing the input features of the current layer.

$W$: Weight matrix, representing the influence of each input feature on the output features.

$b$: Bias vector, providing more expressive power to the network.

$f$: Activation function, introducing non-linearity to the model.

The core of a fully connected layer is learning the mapping between inputs and outputs through a linear transformation using the weight matrix and bias vector. Finally, a non-linear transformation is applied through the activation function, allowing the network to handle complex tasks.
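To make the formula concrete, here is a minimal pure-Python sketch of a single fully connected layer with a ReLU activation. It uses plain lists instead of a tensor library; `relu` and `dense_forward` are hypothetical helper names, and the weights, bias, and input are arbitrary values chosen for illustration.

```python
def relu(v):
    """Element-wise ReLU: max(0, v_i)."""
    return [max(0.0, vi) for vi in v]


def dense_forward(W, x, b, activation=relu):
    """Compute y = f(Wx + b) for a single input vector x.

    W is a list of rows (one row per output unit), so len(W) == len(b)
    and every row has len(x) entries.
    """
    # Linear part: z_i = sum_j W[i][j] * x[j] + b[i]
    z = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
         for row, b_i in zip(W, b)]
    # Non-linear part: apply the activation element-wise
    return activation(z)


# 3 input features -> 2 output features (illustrative values)
W = [[0.5, -1.0, 2.0],
     [1.0, 1.0, -1.0]]
b = [0.1, -0.5]
x = [1.0, 2.0, 3.0]
print(dense_forward(W, x, b))
```

The second output unit has a negative pre-activation (1 + 2 - 3 - 0.5 = -0.5), so ReLU sets it to zero; that clipping is the non-linearity the activation function contributes.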

1.2 Why Do We Need Fully Connected Layers?

The main purposes of fully connected layers are:

Feature Fusion: Combine different features to capture global information.

Non-linear Expression: Through activation functions, the network can learn complex non-linear mappings.

Classification and Regression Tasks: In the last few layers of a network, fully connected layers are commonly used to map features to target classes or regression values.

In an image classification task, fully connected layers are responsible for mapping the features extracted by convolutional layers to the final classification results. For example:

Input: Features output from convolutional layers (e.g., a 512-dimensional vector).

Output: Classification result (e.g., 10 classes).

1.3 Implementation of a Fully Connected Layer with Code Example

Here is a simple fully connected network used to classify MNIST handwritten digits:

```python
import torch
import torch.nn as nn

# Define a fully connected neural network
class FullyConnectedNet(nn.Module):
    def __init__(self):
        super(FullyConnectedNet, self).__init__()
        self.fc1 = nn.Linear(28 * 28, 128)  # Input layer to hidden layer
        self.fc2 = nn.Linear(128, 64)       # Hidden layer to another hidden layer
        self.fc3 = nn.Linear(64, 10)        # Hidden layer to output layer

    def forward(self, x):
        x = x.view(x.size(0), -1)    # Flatten each 28x28 image into a 784-element vector
        x = torch.relu(self.fc1(x))  # ReLU activation function
        x = torch.relu(self.fc2(x))
        x = self.fc3(x)              # Output classification scores (logits)
        return x

# Test the network
model = FullyConnectedNet()
sample_input = torch.randn(1, 28, 28)  # Simulate one MNIST sample (batch size 1)
output = model(sample_input)
print(output)  # Shape: (1, 10), one score per digit class
```

Code Explanation:

`nn.Linear` creates a fully connected layer from its input and output dimensions.

`torch.relu` applies the ReLU activation function to introduce non-linearity.

`x.view(x.size(0), -1)` flattens each input into a 1D vector (784 values for a 28×28 image) while keeping the batch dimension, which is the shape `nn.Linear` expects.

1.4 Limitations of Fully Connected Layers

Although fully connected layers are powerful, they also have certain limitations:

Large Parameter Count: A layer with n inputs and m outputs stores n × m weights plus m biases, so the parameter count grows quickly with layer width, and the extra capacity can lead to overfitting.

Lack of Spatial Awareness: They are not effective at utilizing spatial information in the input data (such as pixel structure in images), which is where convolutional layers come into play.

High Computational Complexity: The number of multiply-adds per sample also scales with inputs × outputs, so wide layers make both training and inference expensive.
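To put numbers on the parameter count, here is a quick sketch (the helper name `linear_params` is hypothetical) that counts weights and biases layer by layer for the MNIST network from Section 1.3:

```python
def linear_params(n_in, n_out):
    """Parameters of one fully connected layer: n_in * n_out weights + n_out biases."""
    return n_in * n_out + n_out


# Layer sizes of the MNIST network from Section 1.3: 784 -> 128 -> 64 -> 10
sizes = [28 * 28, 128, 64, 10]
per_layer = [linear_params(a, b) for a, b in zip(sizes, sizes[1:])]
print(per_layer, sum(per_layer))  # [100480, 8256, 650] 109386
```

The first layer alone holds over 90% of the roughly 109,000 parameters, because it connects every one of the 784 pixels to every hidden unit. For the PyTorch model, `sum(p.numel() for p in model.parameters())` should report the same total.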

Part 2: Loss Functions - The Learning Objective of the Model

2.1 What is a Loss Function?

A loss function measures the discrepancy between the model's predicted values and the true values. Training aims to minimize this loss by adjusting the model parameters with an optimization algorithm such as gradient descent.

There are two main types of loss functions:

Regression Problems: Predict continuous values, and common loss functions include Mean Squared Error (MSE) and Mean Absolute Error (MAE).

Classification Problems: Predict discrete values, with the most commonly used loss function being Cross-Entropy Loss.

2.2 Common Loss Functions

Mean Squared Error (MSE)

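For regression tasks, MSE averages the squared differences between predicted values and true values over $n$ samples:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

where $y_i$ is the true value and $\hat{y}_i$ the prediction for sample $i$.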
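As a minimal sketch in plain Python (the function name `mse` is illustrative), the formula translates directly into code:

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of (y_i - y_hat_i)^2 over all samples."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Errors are 0.5, -0.5, 0 and -1, so MSE = (0.25 + 0.25 + 0 + 1) / 4
print(mse([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0]))  # 0.375
```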

Cross-Entropy Loss

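For classification tasks, this loss measures the difference between the predicted distribution and the true distribution:

$$L = -\sum_{i=1}^{C} y_i \log \hat{y}_i$$

where $C$ is the number of classes, $y_i$ is the true probability of class $i$ (1 for the correct class and 0 otherwise in ordinary classification), and $\hat{y}_i$ is the predicted probability of class $i$.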
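With a one-hot target, only the term for the true class survives, so the loss reduces to the negative log of the probability assigned to that class. A plain-Python sketch (the helper `cross_entropy` is hypothetical, and the small `eps` is an added guard against log(0)):

```python
import math


def cross_entropy(y_true, y_pred, eps=1e-12):
    """L = -sum_i y_i * log(y_hat_i) for a single sample.

    y_true: target distribution (one-hot for ordinary classification)
    y_pred: predicted probabilities that sum to 1
    eps:    lower bound on probabilities so log() never sees 0
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


# True class is index 1
print(cross_entropy([0, 1, 0], [0.1, 0.8, 0.1]))  # -log(0.8) ≈ 0.223, confident and correct
print(cross_entropy([0, 1, 0], [0.6, 0.3, 0.1]))  # -log(0.3) ≈ 1.204, less confident
```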

Binary Cross-Entropy Loss

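For binary classification tasks, the formula is:

$$L = -\left[y \log \hat{y} + (1 - y)\log\left(1 - \hat{y}\right)\right]$$

where $y \in \{0, 1\}$ is the true label and $\hat{y}$ is the predicted probability that the label is 1.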
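A plain-Python sketch of this formula (the helper name `binary_cross_entropy` and the clamping constant `eps` are illustrative additions) shows how strongly it penalizes a confident wrong answer:

```python
import math


def binary_cross_entropy(y, y_hat, eps=1e-12):
    """L = -[y*log(y_hat) + (1 - y)*log(1 - y_hat)].

    y:     true label, 0 or 1
    y_hat: predicted probability that the label is 1
    """
    y_hat = min(max(y_hat, eps), 1 - eps)  # clamp so both logs stay finite
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))


print(binary_cross_entropy(1, 0.9))  # ≈ 0.105, confident and correct
print(binary_cross_entropy(0, 0.9))  # ≈ 2.303, confident and wrong
```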

2.3 Loss Function Code Implementation

The following code demonstrates how to compute cross-entropy loss using PyTorch:

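```python
import torch
import torch.nn as nn

# Simulate model output (raw scores for 3 classes) and true labels
output = torch.tensor([[0.1, 0.8, 0.1], [0.7, 0.2, 0.1]])  # Model predictions (logits), shape (2, 3)
target = torch.tensor([1, 0])  # True class index for each sample

# Define cross-entropy loss
criterion = nn.CrossEntropyLoss()
loss = criterion(output, target)
print(f"Loss: {loss.item()}")
```

Explanation:

The model's output is treated as raw scores (logits), not probabilities; the values in this example merely happen to sum to 1.

`nn.CrossEntropyLoss` applies log-softmax internally and then takes the negative log-likelihood of the target class, averaged over the batch by default, so you should not apply softmax to the outputs yourself.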
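To see what `nn.CrossEntropyLoss` computes with its default settings (no class weights, `reduction='mean'`), here is a pure-Python sketch that applies a numerically stable log-softmax to each row of logits and averages the negative log-probability of the target class. The function names are hypothetical; the result should agree with the PyTorch example up to floating-point rounding.

```python
import math


def log_softmax(logits):
    """log(softmax(z))_i = z_i - log(sum_j exp(z_j)), computed stably."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def cross_entropy_from_logits(batch_logits, targets):
    """Mean negative log-likelihood of the target class over the batch."""
    losses = [-log_softmax(logits)[t] for logits, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


# Same logits and labels as the PyTorch example above
output = [[0.1, 0.8, 0.1], [0.7, 0.2, 0.1]]
target = [1, 0]
print(cross_entropy_from_logits(output, target))  # ≈ 0.7288
```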

2.4 How to Choose the Right Loss Function?

Regression Problems: MSE is the default choice, but MAE is more robust when the data contains outliers, because it penalizes errors linearly rather than quadratically.

Classification Problems: Cross-entropy is preferred, especially for multi-class tasks.

Modeling Probability Distributions: Use Kullback-Leibler Divergence (KL Divergence) to measure differences between distributions.
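The outlier point can be checked numerically. The sketch below (plain Python, hypothetical helper names, made-up data) compares MSE and MAE when one prediction is badly wrong, and also computes the KL divergence between two small discrete distributions:

```python
import math


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def kl_divergence(p, q):
    """KL(P || Q) = sum_i p_i * log(p_i / q_i); terms with p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


y_true = [1.0, 2.0, 3.0, 4.0]
clean = [1.1, 2.1, 2.9, 4.1]     # every prediction off by 0.1
outlier = [1.1, 2.1, 2.9, 14.0]  # one prediction off by 10

print(mse(y_true, clean), mae(y_true, clean))
print(mse(y_true, outlier), mae(y_true, outlier))
print(kl_divergence([0.5, 0.5], [0.9, 0.1]))  # positive; it is 0 only when P == Q
```

With the outlier, MSE jumps from about 0.01 to about 25, while MAE rises only from about 0.1 to about 2.6: squaring lets the single large error dominate.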

Part 3: Gradient Descent - The Optimization Tool

3.1 The Principle of Gradient Descent

Gradient descent is an iterative optimization algorithm that searches for parameters that minimize the loss function. At each step it moves the parameters a small distance along the negative gradient, the direction in which the loss decreases fastest locally, and it repeats until the loss stops decreasing.

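Parameter Update Formula:

$$\theta \leftarrow \theta - \eta \nabla_\theta L(\theta)$$

$\theta$: Model parameters.

$\eta$: Learning rate, controlling the step size.

$\nabla_\theta L(\theta)$: Gradient of the loss function with respect to the parameters.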
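Here is the update rule applied to a one-parameter toy loss, $L(\theta) = (\theta - 3)^2$, whose gradient is $2(\theta - 3)$. It is a plain-Python sketch; the helper name `gradient_descent` and the toy loss are illustrative choices.

```python
def gradient_descent(grad, theta0, lr=0.1, steps=100):
    """Repeat theta <- theta - lr * grad(theta) for a fixed number of steps."""
    theta = theta0
    for _ in range(steps):
        theta -= lr * grad(theta)
    return theta


# L(theta) = (theta - 3)^2 has its minimum at theta = 3; dL/dtheta = 2 * (theta - 3)
theta = gradient_descent(lambda t: 2 * (t - 3), theta0=0.0)
print(theta)  # very close to 3.0
```

For this loss, each step multiplies the distance to the minimum by 1 - 2·lr, so lr=0.1 shrinks it by 20% per step, while any lr above 1.0 makes the distance grow and the loop diverges. That is why the learning rate must be chosen with care.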

3.2 Three Variants of Gradient Descent

Batch Gradient Descent:

Calculates the gradient for the entire dataset.

Advantages: Stable.

Disadvantages: Computationally expensive, especially for large datasets.

Stochastic Gradient Descent (SGD):

Calculates the gradient using one sample at a time.

Advantages: Faster updates.

Disadvantages: Unstable convergence.

Mini-Batch Gradient Descent:

Calculates the gradient using a small batch of samples.

Advantages: A compromise between speed and stability, commonly used in deep learning tasks.
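The three variants differ only in how many samples feed each gradient estimate. The sketch below (plain Python, hypothetical helper `train_linear`, noise-free toy data from y = 2x + 1) fits a line with the MSE loss and switches between batch, stochastic, and mini-batch gradient descent through the `batch_size` argument:

```python
import random


def train_linear(xs, ys, batch_size, lr=0.1, epochs=500, seed=0):
    """Fit y ≈ w*x + b by gradient descent on the MSE loss.

    batch_size == len(xs) -> batch gradient descent
    batch_size == 1       -> stochastic gradient descent (SGD)
    anything in between   -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of mean((w*x + b - y)^2) over the current batch only
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [i / 10 for i in range(20)]
ys = [2 * x + 1 for x in xs]  # noise-free data from y = 2x + 1
for name, bs in [("batch", len(xs)), ("SGD", 1), ("mini-batch", 4)]:
    w, b = train_linear(xs, ys, batch_size=bs)
    print(f"{name:>10}: w={w:.4f}, b={b:.4f}")
```

On this noise-free data all three settings should recover w ≈ 2 and b ≈ 1; the difference lies in how many updates each epoch performs (1, 20, and 5 here) and how noisy each individual update is.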

3.3 Gradient Descent Code Implementation

Here is a minimal training loop using a PyTorch optimizer. It repeatedly fits a single simulated sample, so it illustrates the mechanics of each step rather than real training:

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Define model, loss function, and optimizer
# (FullyConnectedNet is the class defined in Section 1.3)
model = FullyConnectedNet()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

sample_input = torch.randn(1, 28, 28)  # Simulated MNIST sample
target = torch.tensor([3])             # Assumed true label

# Simulate training process
for epoch in range(5):
    optimizer.zero_grad()             # Clear previous gradients
    output = model(sample_input)      # Forward pass
    loss = criterion(output, target)  # Compute loss
    loss.backward()                   # Backpropagate
    optimizer.step()                  # Update parameters
    print(f"Epoch {epoch+1}, Loss: {loss.item()}")
```

3.4 Optimization Strategies and Advanced Techniques

Dynamic Learning Rate:

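Adjusting the learning rate during training, typically lowering it as training progresses, lets the model make fast progress early and take smaller, more precise steps near a minimum. For example, `StepLR` multiplies the learning rate by `gamma` every `step_size` epochs: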

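```python
from torch.optim.lr_scheduler import StepLR

# Multiply the learning rate by gamma=0.1 every step_size=2 epochs
scheduler = StepLR(optimizer, step_size=2, gamma=0.1)

for epoch in range(5):
    train()           # Placeholder for one epoch of training logic (not defined here)
    scheduler.step()  # Advance the schedule once per epoch
```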
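The schedule above has a simple closed form: the learning rate used in epoch e (counting from 0) is the initial rate multiplied by gamma raised to the power e // step_size. A plain-Python sketch, assuming the initial rate of 0.01 from Section 3.3 (the helper name `step_lr` is hypothetical):

```python
def step_lr(base_lr, epoch, step_size=2, gamma=0.1):
    """Learning rate used in a given epoch under step decay:
    base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


# Approximately 0.01, 0.01, 0.001, 0.001, 0.0001 (up to float rounding)
print([step_lr(0.01, e) for e in range(5)])
```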

Momentum Optimization:

Momentum accumulates past gradients into a velocity term, which speeds up progress along directions where the gradient is consistent and damps oscillations:

```python
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
```
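The effect of momentum is easiest to see on a loss whose curvature differs between directions. The sketch below is plain Python; the quadratic f(x, y) = 0.5(x² + 10y²), the starting point, and the tolerance are illustrative choices. It uses the same velocity update as PyTorch's SGD with dampening=0 and nesterov=False (v <- momentum * v + g, then theta <- theta - lr * v) and counts how many updates each setting needs to bring the loss below the tolerance.

```python
def steps_to_converge(momentum, lr=0.01, tol=1e-6, max_steps=10_000):
    """Count updates until f(x, y) = 0.5 * (x**2 + 10 * y**2) drops below tol."""
    x, y = 1.0, 1.0
    vx, vy = 0.0, 0.0  # velocity buffers, one per parameter
    for step in range(1, max_steps + 1):
        gx, gy = x, 10 * y  # gradient of f
        # Velocity update; momentum=0.0 reduces this to plain gradient descent
        vx = momentum * vx + gx
        vy = momentum * vy + gy
        x -= lr * vx
        y -= lr * vy
        if 0.5 * (x ** 2 + 10 * y ** 2) < tol:
            return step
    return max_steps


print("plain GD:", steps_to_converge(momentum=0.0))
print("momentum:", steps_to_converge(momentum=0.9))
```

With momentum=0.9 the count should be several times smaller, because the velocity keeps building along the shallow x direction, where plain gradient descent only creeps forward.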

Adam Optimizer

Adam is an adaptive learning rate optimization algorithm that combines the advantages of momentum and RMSProp, making it suitable for most tasks:

```python
optimizer = optim.Adam(model.parameters(), lr=0.001)
```
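To show how Adam combines the two ideas, here is a plain-Python sketch of its update rule for a single parameter: a moving average of gradients (the momentum part), a moving average of squared gradients that rescales each step (the RMSProp part), and bias correction for both averages. The values beta1=0.9, beta2=0.999, and eps=1e-8 match PyTorch's defaults for `optim.Adam`; the learning rate of 0.1, the toy loss (theta - 3)^2, and the helper name `adam_minimize` are illustrative choices.

```python
import math


def adam_minimize(grad, theta, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function with the Adam update rule."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g      # momentum: moving average of gradients
        v = beta2 * v + (1 - beta2) * g * g  # RMSProp-style: moving average of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction (m and v start at 0)
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


print(adam_minimize(lambda t: 2 * (t - 3), theta=0.0))  # close to 3.0
```

Because each step is divided by the root of the squared-gradient average, the early steps have a size of roughly lr regardless of how large the raw gradient is, which is one reason Adam works with little tuning on many tasks.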

Summary

Fully connected layers, loss functions, and gradient descent are the cornerstones of deep learning. This article covered their theoretical foundations, their PyTorch implementations, and common optimization techniques. With these three pillars in place, you are ready to build and train models for real-world problems on your deep learning journey.