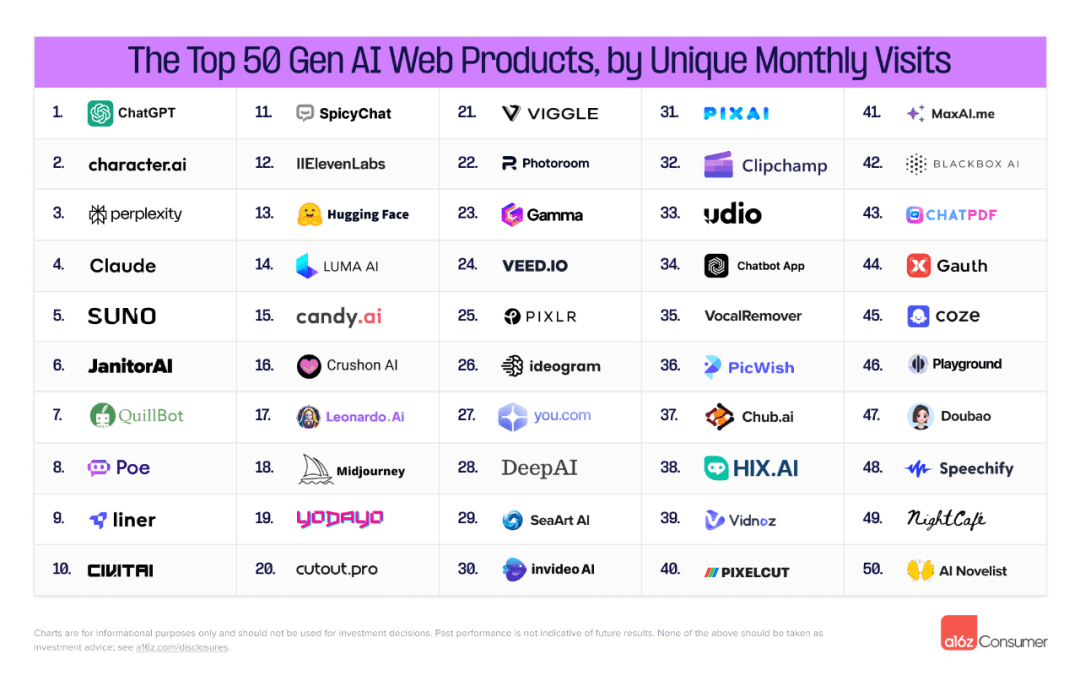

In machine learning, bias and variance are two key concepts that together determine how well a model performs on data it has not seen. Understanding them helps us choose a model of the right complexity, improve its ability to generalize, and avoid both underfitting and overfitting. This article explains bias and variance in machine learning and demonstrates them with code examples.

1. Definitions of Bias and Variance

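In machine learning, bias and variance are the two sources of prediction error that the choice of model controls; the rest of the error comes from irreducible noise in the data. Both are closely related to how well the model fits the training data and how well it generalizes.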
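For squared-error loss this split can be written out exactly. As a sketch, assume the data are generated as y = f(x) + ε, where ε is zero-mean noise with variance σ² that is independent of the training set, and let f̂_D denote the model trained on a randomly drawn training set D. The expected error at a fixed input x then splits into three terms:

$$
\mathbb{E}_{D,\varepsilon}\left[\left(y - \hat f_D(x)\right)^2\right]
= \underbrace{\left(\mathbb{E}_D\left[\hat f_D(x)\right] - f(x)\right)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\left[\left(\hat f_D(x) - \mathbb{E}_D\left[\hat f_D(x)\right]\right)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{noise}}
$$

The first two terms depend on the model; the noise term σ² is a floor that no model can get below.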

1.1 Bias

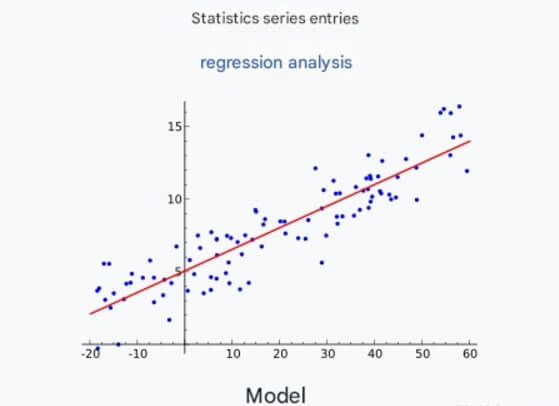

Bias measures how far the model's predictions are, on average, from the true values, where the average is taken over models trained on different samples of the data. High bias typically arises when the model is too simple to capture the complex relationships in the data. A model with high bias tends to underfit because it cannot represent even the training data accurately.

Characteristics of a high-bias model:

Simple model assumptions, such as linear regression fitting nonlinear data.

Poor performance on both training and test data.
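One way to make bias concrete is to simulate it: draw many training sets from a known process, fit the same model to each, and compare the average prediction with the true function. The sketch below assumes the same kind of data used later in this article (y = sin(x) plus Gaussian noise with standard deviation 0.5, x uniform on [0, 10]); the number of datasets, sample size, and evaluation grid are illustrative choices.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Assumed data-generating process: y = sin(x) + N(0, 0.5^2), x uniform on [0, 10]
rng = np.random.default_rng(0)
x_grid = np.linspace(0, 10, 50).reshape(-1, 1)  # fixed points where bias is measured
true_f = np.sin(x_grid).ravel()                  # noise-free target at those points

n_datasets, n_samples = 200, 70
preds = np.empty((n_datasets, x_grid.shape[0]))
for i in range(n_datasets):
    # Draw a fresh training set and refit the linear model on it
    X = rng.uniform(0, 10, size=(n_samples, 1))
    y = np.sin(X).ravel() + rng.normal(0, 0.5, size=n_samples)
    preds[i] = LinearRegression().fit(X, y).predict(x_grid)

# Bias^2: squared gap between the average prediction and the truth, averaged over the grid
avg_pred = preds.mean(axis=0)
bias_sq = np.mean((avg_pred - true_f) ** 2)
# Variance: spread of the predictions across training sets, averaged over the grid
variance = np.mean(preds.var(axis=0))
print(f"Linear model: bias^2 = {bias_sq:.3f}, variance = {variance:.3f}")
```

For a straight line fitted to a sine wave, the bias term should dominate: averaging over more datasets does not bring the mean prediction any closer to sin(x).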

1.2 Variance

Variance measures how much the model's predictions change when it is trained on different samples of the data, that is, how sensitive the model is to the particular random noise in its training set. Models with high variance tend to overfit: they learn the noise in the training data along with the signal and therefore perform poorly on test data.

Characteristics of a high-variance model:

Good performance on training data, but poor performance on test data.

Complex models that flexibly fit the details in the training data, leading to overfitting the noise.
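Variance can be simulated the same way: refit a model on many fresh training sets and measure how much its prediction at one input moves around. The sketch below compares a straight line with a degree-9 polynomial at x = 9.9, near the edge of the data, where flexible models tend to swing the most. The data-generating process (y = sin(x) plus noise with standard deviation 0.5), the query point, and the sample sizes are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Assumed data-generating process: y = sin(x) + N(0, 0.5^2), x uniform on [0, 10]
rng = np.random.default_rng(1)
x0 = np.array([[9.9]])  # one query point close to the edge of the input range


def predictions_at_x0(degree, n_datasets=200, n_samples=70):
    """Refit a polynomial model on fresh training sets and collect its prediction at x0."""
    preds = np.empty(n_datasets)
    for i in range(n_datasets):
        X = rng.uniform(0, 10, size=(n_samples, 1))
        y = np.sin(X).ravel() + rng.normal(0, 0.5, size=n_samples)
        # Standardizing x first keeps the high powers numerically well-conditioned
        model = make_pipeline(StandardScaler(), PolynomialFeatures(degree), LinearRegression())
        preds[i] = model.fit(X, y).predict(x0)[0]
    return preds


var_linear = predictions_at_x0(degree=1).var()
var_poly9 = predictions_at_x0(degree=9).var()
print(f"Variance of the prediction at x = 9.9: degree 1 = {var_linear:.3f}, degree 9 = {var_poly9:.3f}")
```

The degree-9 model's prediction should vary noticeably more from one training set to the next, which is exactly what high variance means.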

2. Bias-Variance Tradeoff

The relationship between bias and variance is like a seesaw: decreasing bias often increases variance, and vice versa. Finding the balance between the two is therefore one of the most important tasks in machine learning.

High bias, low variance: The model is too simple to capture the complexity of the data, resulting in underfitting.

Low bias, high variance: The model is too complex and overfits the training data, resulting in overfitting.

Balanced bias and variance: The model performs well on both training and test data and can generalize effectively.

In practice, this balance is struck by choosing the right model complexity. Next, we will use code examples to demonstrate bias and variance and show how to balance the two.

3. Code Example: Demonstrating Bias and Variance

In this example, we will use a simple dataset to demonstrate the bias and variance of different models.

We will use Python and the scikit-learn library to create data and train models. First, let's import the necessary libraries:

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import PolynomialFeatures
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
```

3.1 Creating the Dataset

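We will create a nonlinear dataset with noise to demonstrate the fitting behavior of different models: 100 points with x drawn uniformly from [0, 10) and y = sin(x) plus Gaussian noise with standard deviation 0.5.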

```python
# Create dataset
np.random.seed(0)
X = np.sort(np.random.rand(100, 1) * 10, axis=0)
y = np.sin(X).ravel() + np.random.randn(100) * 0.5

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)
```

3.2 Fitting with Different Models

We will use models with different complexities to fit the dataset and observe the bias and variance. Specifically, we will compare linear regression (high bias, low variance) with degree-9 polynomial regression (low bias, high variance).

3.2.1 Linear Regression (High Bias, Low Variance)

```python
# Linear regression model
linear_reg = LinearRegression()
linear_reg.fit(X_train, y_train)
y_pred_train = linear_reg.predict(X_train)
y_pred_test = linear_reg.predict(X_test)

# Plotting
plt.scatter(X, y, color='gray', label='Original data')
plt.plot(X, linear_reg.predict(X), color='red', label='Linear Regression')
plt.legend()
plt.title('Linear Regression Fit (High Bias, Low Variance)')
plt.xlabel('X')
plt.ylabel('y')
plt.show()

# Calculate error
print("Training error (MSE):", mean_squared_error(y_train, y_pred_train))
print("Test error (MSE):", mean_squared_error(y_test, y_pred_test))
```

From the above plot, we can see that linear regression does not fit the nonlinear data well, showing high bias (underfitting). The errors on both the training and test sets are large.

3.2.2 Polynomial Regression (Low Bias, High Variance)

Next, we will use polynomial regression to fit the dataset and observe its fitting behavior.

```python
# Polynomial regression using polynomial features
poly_features = PolynomialFeatures(degree=9)
X_poly_train = poly_features.fit_transform(X_train)
X_poly_test = poly_features.transform(X_test)

poly_reg = LinearRegression()
poly_reg.fit(X_poly_train, y_train)
y_poly_pred_train = poly_reg.predict(X_poly_train)
y_poly_pred_test = poly_reg.predict(X_poly_test)

# Plotting
plt.scatter(X, y, color='gray', label='Original data')
plt.plot(X, poly_reg.predict(poly_features.transform(X)), color='blue', label='Polynomial Regression')
plt.legend()
plt.title('Polynomial Regression Fit (Low Bias, High Variance)')
plt.xlabel('X')
plt.ylabel('y')
plt.show()

# Calculate error
print("Training error (MSE):", mean_squared_error(y_train, y_poly_pred_train))
print("Test error (MSE):", mean_squared_error(y_test, y_poly_pred_test))
```

In this case, polynomial regression fits the training data much more closely, and its training error is small. The degree-9 curve, however, also bends to follow the noise in the training points, so its test error is typically higher than its training error, and the curve can swing sharply near the edges of the input range where data are sparse. This sensitivity to the particular training sample is what high variance (overfitting) means, and it leads to poorer generalization on the test data.

3.3 Balancing Bias and Variance

To find a good balance between bias and variance, we can use cross-validation and regularization techniques to help choose an appropriate model complexity. Here are some common methods:

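Cross-validation: By splitting the training data into k folds, training on k-1 of them and evaluating on the remaining fold in turn, we get a more reliable estimate of how the model will perform on unseen data and can select the model (for example, the polynomial degree) with the lowest average validation error.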

Regularization: L1 and L2 regularization penalize large coefficients, which limits the effective complexity of the model. This reduces variance and helps prevent overfitting, at the cost of a small increase in bias.
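The cross-validation approach described above can be sketched with scikit-learn's `cross_val_score` and `KFold`, using them to choose the polynomial degree. This assumes the article's dataset and train/test split are recreated as shown; 5 folds and degrees 1 to 10 are illustrative choices, and x is standardized before the polynomial expansion purely for numerical stability.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Recreate the article's dataset and split; only the training part is used for CV
np.random.seed(0)
X = np.sort(np.random.rand(100, 1) * 10, axis=0)
y = np.sin(X).ravel() + np.random.randn(100) * 0.5
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Shuffled 5-fold split of the training data
cv = KFold(n_splits=5, shuffle=True, random_state=0)
cv_mse = {}
for degree in range(1, 11):
    model = make_pipeline(StandardScaler(), PolynomialFeatures(degree), LinearRegression())
    # scikit-learn scorers follow "higher is better", so MSE comes back negated
    scores = cross_val_score(model, X_train, y_train, cv=cv, scoring="neg_mean_squared_error")
    cv_mse[degree] = -scores.mean()

best_degree = min(cv_mse, key=cv_mse.get)
print("Selected degree:", best_degree, "with CV MSE", round(cv_mse[best_degree], 3))
```

Because the test set never takes part in the selection, it remains available for a final, unbiased check of the chosen model.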

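For example, Ridge Regression (L2 regularization) can control the complexity of the polynomial regression model. Because the raw polynomial features span many orders of magnitude (x^9 reaches about 10^9 here), they are standardized before the penalty is applied, so that it acts on all powers comparably:

```python
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Using Ridge Regression for regularization; scaling first so the L2 penalty
# does not depend on the very different magnitudes of the polynomial features
ridge_reg = make_pipeline(StandardScaler(), Ridge(alpha=1.0))
ridge_reg.fit(X_poly_train, y_train)
y_ridge_pred_test = ridge_reg.predict(X_poly_test)

# Calculate test error for Ridge Regression
print("Test error (MSE) - Ridge Regression:", mean_squared_error(y_test, y_ridge_pred_test))
```

By penalizing large coefficients, Ridge Regression can reduce the overfitting of the polynomial model and improve its generalization ability. The strength of the penalty is set by `alpha`, which is usually chosen by cross-validation.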
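The regularization strength also moves the model along the bias-variance axis. The sketch below, which recreates the article's dataset and assumes a degree-9 pipeline with standardized features, sweeps a few illustrative `alpha` values: as `alpha` grows, the training error can only rise (more bias), while the test error typically falls at first and then rises again once the model becomes too constrained.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Recreate the article's dataset and split
np.random.seed(0)
X = np.sort(np.random.rand(100, 1) * 10, axis=0)
y = np.sin(X).ravel() + np.random.randn(100) * 0.5
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

alphas = [1e-3, 1e-1, 1e1, 1e3]  # illustrative values, not tuned
train_mse, test_mse = [], []
for alpha in alphas:
    # Degree-9 features, standardized so the penalty treats each power comparably
    model = make_pipeline(PolynomialFeatures(9), StandardScaler(), Ridge(alpha=alpha))
    model.fit(X_train, y_train)
    train_mse.append(mean_squared_error(y_train, model.predict(X_train)))
    test_mse.append(mean_squared_error(y_test, model.predict(X_test)))

for a, tr, te in zip(alphas, train_mse, test_mse):
    print(f"alpha={a:g}: train MSE = {tr:.3f}, test MSE = {te:.3f}")
```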

4. Bias-Variance Balancing Strategies

In practical applications, we can achieve the balance between bias and variance using the following strategies:

4.1 Adjusting Model Complexity

Selecting the right model type and complexity is the first step in balancing bias and variance. When the relationship in the data is roughly linear, linear regression may be sufficient; for complex nonlinear relationships, polynomial regression or more flexible models are needed, with their complexity chosen so that they do not start fitting noise.
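Sweeping the polynomial degree on the article's dataset makes this tradeoff visible: training error keeps falling as the degree grows, while test error typically falls and then rises again. The sketch below recreates the dataset; the range of degrees is an illustrative choice, and picking the degree by test error here is only for illustration, since in practice the choice should be made with cross-validation so the test set stays untouched.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Recreate the article's dataset and split
np.random.seed(0)
X = np.sort(np.random.rand(100, 1) * 10, axis=0)
y = np.sin(X).ravel() + np.random.randn(100) * 0.5
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

degrees = list(range(1, 13))
train_mse, test_mse = [], []
for degree in degrees:
    # Standardizing x before expanding it keeps high-degree features well-conditioned
    model = make_pipeline(StandardScaler(), PolynomialFeatures(degree), LinearRegression())
    model.fit(X_train, y_train)
    train_mse.append(mean_squared_error(y_train, model.predict(X_train)))
    test_mse.append(mean_squared_error(y_test, model.predict(X_test)))

best_degree = degrees[int(np.argmin(test_mse))]
for d, tr, te in zip(degrees, train_mse, test_mse):
    print(f"degree {d:2d}: train MSE = {tr:.3f}, test MSE = {te:.3f}")
print("Lowest test error at degree", best_degree)
```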

4.2 Increasing Data Size

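Increasing the amount of training data typically reduces model variance and thereby the risk of overfitting: with more samples, the fitted model depends less on the noise in any particular sample. More data does not reduce bias, however; a model that is too simple for the data stays too simple no matter how much data it sees.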
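The effect of data size on variance can be simulated directly. The sketch below assumes the same sine-plus-noise process as the article's dataset and refits a degree-9 polynomial on many fresh training sets of 30, 100, and 1000 points, measuring how much its predictions spread at interior points. The sample sizes, number of repetitions, and evaluation grid are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Assumed data-generating process: y = sin(x) + N(0, 0.5^2), x uniform on [0, 10]
rng = np.random.default_rng(2)
x_grid = np.linspace(1, 9, 40).reshape(-1, 1)  # interior points, away from the edges


def avg_prediction_variance(n_samples, degree=9, n_datasets=100):
    """Average (over x_grid) variance of a polynomial fit across resampled training sets."""
    preds = np.empty((n_datasets, x_grid.shape[0]))
    for i in range(n_datasets):
        X = rng.uniform(0, 10, size=(n_samples, 1))
        y = np.sin(X).ravel() + rng.normal(0, 0.5, size=n_samples)
        model = make_pipeline(StandardScaler(), PolynomialFeatures(degree), LinearRegression())
        preds[i] = model.fit(X, y).predict(x_grid)
    return preds.var(axis=0).mean()


variances = {n: avg_prediction_variance(n) for n in (30, 100, 1000)}
for n, v in variances.items():
    print(f"n = {n:5d}: average prediction variance = {v:.4f}")
```

For a fixed model, the variance should shrink roughly in proportion to 1/n, which is why a flexible model that overfits a small dataset can behave well on a much larger one.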

4.3 Using Ensemble Learning

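Ensemble methods combine the predictions of multiple models, and different ensembles target different error sources. Bagging methods such as Random Forest average many deep trees, each with low bias but high variance, which mainly reduces variance. Boosting methods such as Gradient Boosting add weak, high-bias learners one after another, each correcting the errors of the ensemble so far, which mainly reduces bias.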
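Both effects can be seen on the article's data. The sketch below trains on the article's 70 training points and, as an assumption for a stable comparison, evaluates on a large fresh sample from the same sine-plus-noise process. A single fully grown tree is compared with a random forest (variance reduction), and a single depth-1 stump is compared with gradient-boosted stumps (bias reduction). The hyperparameters are illustrative, not tuned.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Training data: the article's synthetic dataset (70 training points after the split)
np.random.seed(0)
X = np.sort(np.random.rand(100, 1) * 10, axis=0)
y = np.sin(X).ravel() + np.random.randn(100) * 0.5
X_train, _, y_train, _ = train_test_split(X, y, test_size=0.3, random_state=42)

# Assumption: a large fresh sample from the same process gives a stable error estimate
rng = np.random.default_rng(3)
X_eval = rng.uniform(0, 10, size=(2000, 1))
y_eval = np.sin(X_eval).ravel() + rng.normal(0, 0.5, size=2000)

models = {
    # Variance side: one fully grown tree vs. an average of many bootstrapped trees
    "deep tree": DecisionTreeRegressor(random_state=0),
    "random forest": RandomForestRegressor(n_estimators=200, random_state=0),
    # Bias side: one depth-1 stump vs. many stumps added sequentially
    "stump": DecisionTreeRegressor(max_depth=1, random_state=0),
    "boosted stumps": GradientBoostingRegressor(
        max_depth=1, n_estimators=200, learning_rate=0.1, random_state=0
    ),
}

mse = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    mse[name] = mean_squared_error(y_eval, model.predict(X_eval))
    print(f"{name:>15}: MSE = {mse[name]:.3f}")
```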

4.4 Early Stopping

When training neural networks, early stopping can help prevent overfitting. Training stops once the error on a held-out validation set stops improving, typically keeping the weights from the best epoch, so the model does not continue fitting noise in the training data.
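A minimal sketch of early stopping uses scikit-learn's `MLPRegressor` as a stand-in for a neural network on the article's dataset. With `early_stopping=True`, scikit-learn holds out `validation_fraction` of the training data, tracks the validation R² score (rather than the error) after each epoch, stops once it has failed to improve by at least `tol` for more than `n_iter_no_change` epochs, and keeps the weights from the best epoch. The layer sizes, patience, and other settings are illustrative assumptions, not tuned values.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Recreate the article's dataset and split
np.random.seed(0)
X = np.sort(np.random.rand(100, 1) * 10, axis=0)
y = np.sin(X).ravel() + np.random.randn(100) * 0.5
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

model = make_pipeline(
    StandardScaler(),  # neural networks train more reliably on standardized inputs
    MLPRegressor(
        hidden_layer_sizes=(64, 64),
        early_stopping=True,      # hold out part of the training data for validation
        validation_fraction=0.2,  # 20% of X_train becomes the validation set
        n_iter_no_change=50,      # patience: epochs allowed without validation improvement
        max_iter=2000,
        random_state=0,
    ),
)
model.fit(X_train, y_train)
mlp = model[-1]  # the fitted MLPRegressor step

print("Epochs run:", mlp.n_iter_)
print("Best validation R^2:", round(mlp.best_validation_score_, 3))
print("Test error (MSE):", mean_squared_error(y_test, model.predict(X_test)))
```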

5. Conclusion

Bias and variance are two key factors that affect machine learning model performance. High bias typically leads to underfitting, while high variance can lead to overfitting. One of the most important tasks when building machine learning models is finding the right balance between bias and variance to improve generalization.

By comparing models of different complexity in code, we can see the impact of bias and variance on model performance. Cross-validation and regularization help us balance the two, resulting in better and more stable models.

Understanding the concepts of bias and variance is fundamental in machine learning. It not only helps evaluate model performance but also guides the selection of suitable algorithms and tuning strategies when building models. I hope this article helps you understand bias and variance!