Visionary author Isaac Asimov’s Three Laws of Robotics, introduced decades before artificial intelligence (AI) became a practical reality, have long served as a reference point for thinking about the relationship between humanity and AI. As we stand on the threshold of an AI-driven future, Asimov’s vision is more relevant than ever. But are these laws enough to guide us through the ethical complexities of advanced AI?

The Three Laws of Robotics

Isaac Asimov, the great science fiction writer, introduced the Three Laws of Robotics in his 1942 short story "Runaround," later collected in I, Robot (1950). The laws are as follows:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey orders given to it by human beings, except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Today, Asimov’s vision takes on new meaning. Although fictional, these laws reflect deeper ethical questions about how AI should coexist with humanity, and they underline the need for AI to operate within a moral framework.

But are these laws sufficient to navigate the ethical complexity of today’s AI advancements?

The Incomplete Nature of the Three Laws

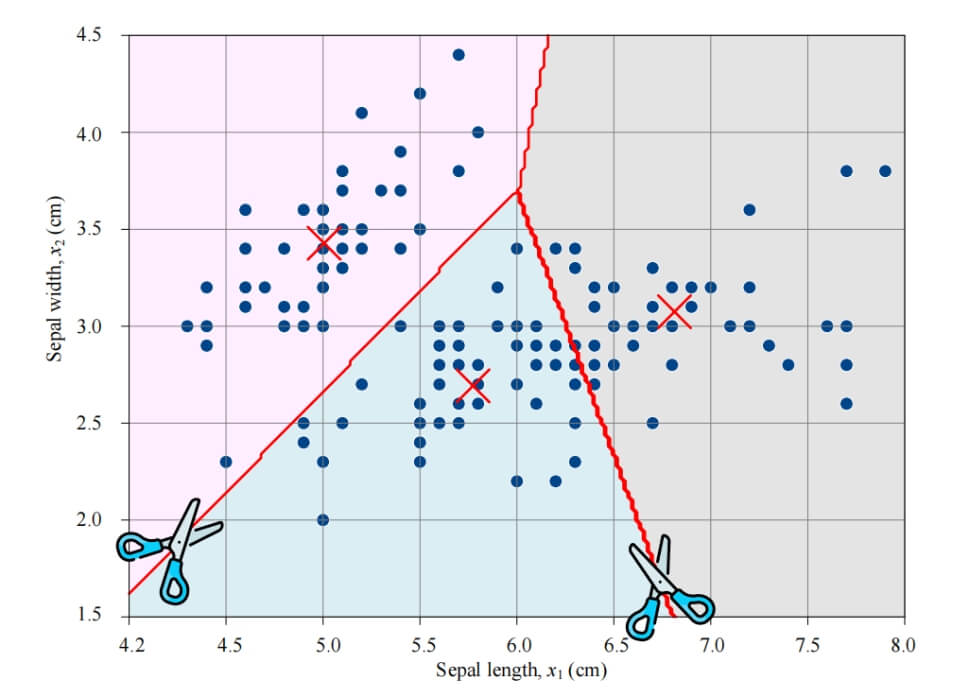

Consider self-driving cars. The AI systems behind these vehicles must make split-second decisions that balance the safety of passengers against that of pedestrians. In a potential accident, how should the AI decide whose safety to prioritize when every available choice may cause harm?

In 1985, Asimov added a Zeroth Law, ranked above the other three: "A robot may not harm humanity, or, by inaction, allow humanity to come to harm." This law places the collective well-being of humanity ahead of that of any individual human, but even with this addition, applying the laws to real-world situations remains a challenge.

For example, when preventing harm to one individual could lead to greater harm to the entire human race, how should AI systems interpret the Zeroth Law? Scenarios like this reveal the complexity and often contradictory nature of moral decision-making in AI.

Challenges in Applying the Three Laws

It’s important to remember that Asimov’s laws were designed as literary devices, not as a comprehensive moral framework. Asimov himself frequently explored "edge cases" in his stories, showing how the laws break down or contradict one another in uncertain, probabilistic, and risk-laden scenarios. Today, self-driving cars must make decisions in exactly such an uncertain world, where some level of risk is inevitable.

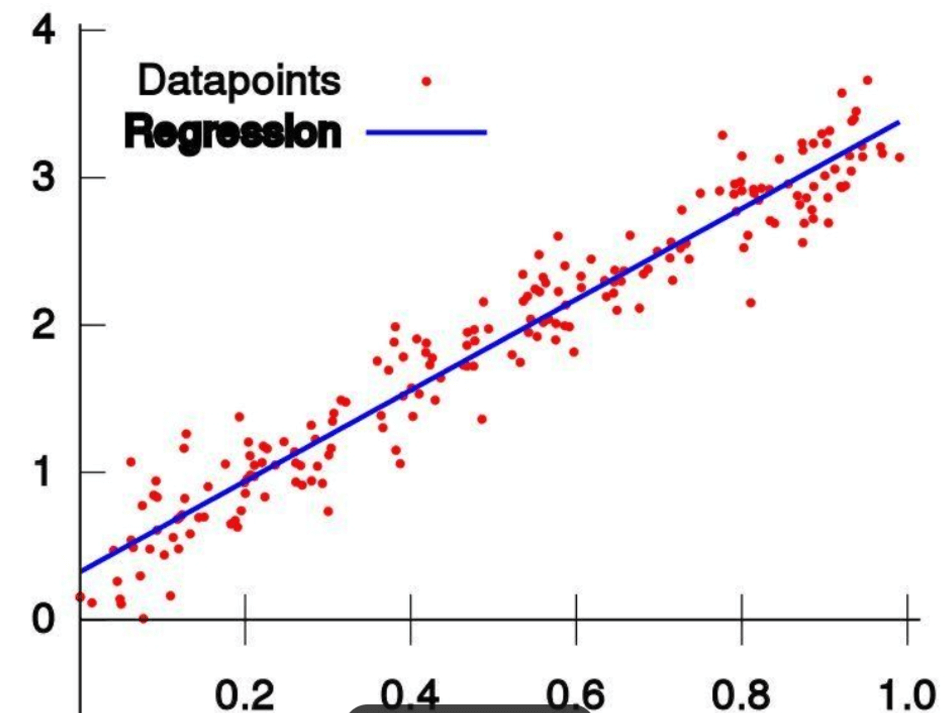

The Three Laws, even with the Zeroth Law added, do not always address complex real-world scenarios, nor societal concerns beyond individual safety such as fairness, happiness, or justice. Translating abstract moral principles into precise rules that can be programmed into AI systems remains a difficult, if fascinating, challenge.
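To make that difficulty concrete, here is a minimal Python sketch, assuming each candidate action has already been labeled with hypothetical yes/no flags for each law (in practice, producing those labels reliably is the hard part). It ranks actions lexicographically, so any First Law violation outweighs every Second and Third Law violation, mirroring the laws' stated precedence. The names `Action`, `law_key`, and `choose` are illustrative only, not taken from any library or from Asimov.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action with hypothetical, pre-computed yes/no judgments."""
    name: str
    harms_human: bool     # First Law: injures a human, or lets one come to harm by inaction
    disobeys_order: bool  # Second Law: conflicts with an order from a human
    endangers_self: bool  # Third Law: risks the robot's own existence


def law_key(action: Action) -> tuple[bool, bool, bool]:
    # Tuples compare element by element and False < True, so any First Law
    # violation outweighs every combination of Second and Third Law violations.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose(actions: list[Action]) -> list[Action]:
    """Return all actions tied for the least serious set of violations."""
    best = min(law_key(a) for a in actions)
    return [a for a in actions if law_key(a) == best]


# Obedience vs. harm: the First Law overrides an order to stand aside.
stand_aside = Action("stand aside as ordered", harms_human=True, disobeys_order=False, endangers_self=False)
intervene = Action("intervene", harms_human=False, disobeys_order=True, endangers_self=True)
print([a.name for a in choose([stand_aside, intervene])])  # ['intervene']

# Trolley-style case: both options harm someone (one person vs. five),
# but a yes/no First Law cannot tell them apart, so the result is a tie.
swerve = Action("swerve", harms_human=True, disobeys_order=False, endangers_self=False)
stay = Action("stay in lane", harms_human=True, disobeys_order=False, endangers_self=False)
print([a.name for a in choose([swerve, stay])])  # ['swerve', 'stay in lane']
```

The first case shows the precedence working as intended. The second shows its limit: because the First Law is a pass/fail test, the ranking cannot distinguish harming one person from harming five, so it offers no guidance in dilemmas where every option causes some harm.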

Examples of AI Ethical Dilemmas in the Modern World

Fast forward to today. As AI, including generative AI (GenAI), permeates nearly every facet of our lives, we find ourselves grappling with many of the very issues Asimov anticipated. These challenges highlight the importance of evolving Asimov’s rules into a broader, more comprehensive framework.

AI in Healthcare: Advanced AI systems can help diagnose patients and recommend treatments, but they also raise challenges around patient privacy and consent. If an AI detects a life-threatening disease and the patient wishes to keep it confidential, should the AI override the patient’s wishes to save their life, at the risk of causing emotional harm?

AI in Law Enforcement: Predictive policing algorithms aim to prevent crime by analyzing historical data to predict where crimes may occur. However, because that data can reflect past policing patterns, these systems can unintentionally reinforce existing biases, causing emotional and social harm to certain communities.

AI in Transportation: Consider the "trolley problem," an ethical thought experiment that asks whether it is morally permissible to divert a runaway trolley so that it kills one person instead of five. Now imagine such decisions being made automatically, at scale, and affecting thousands of people, and the stakes become clear.

These examples expose the inherent conflicts among the laws. For instance, an AI tasked with protecting human life may receive an order that endangers one person but saves many others. The AI would be caught between obedience and preventing harm, revealing the limits of Asimov’s moral framework in today’s world.

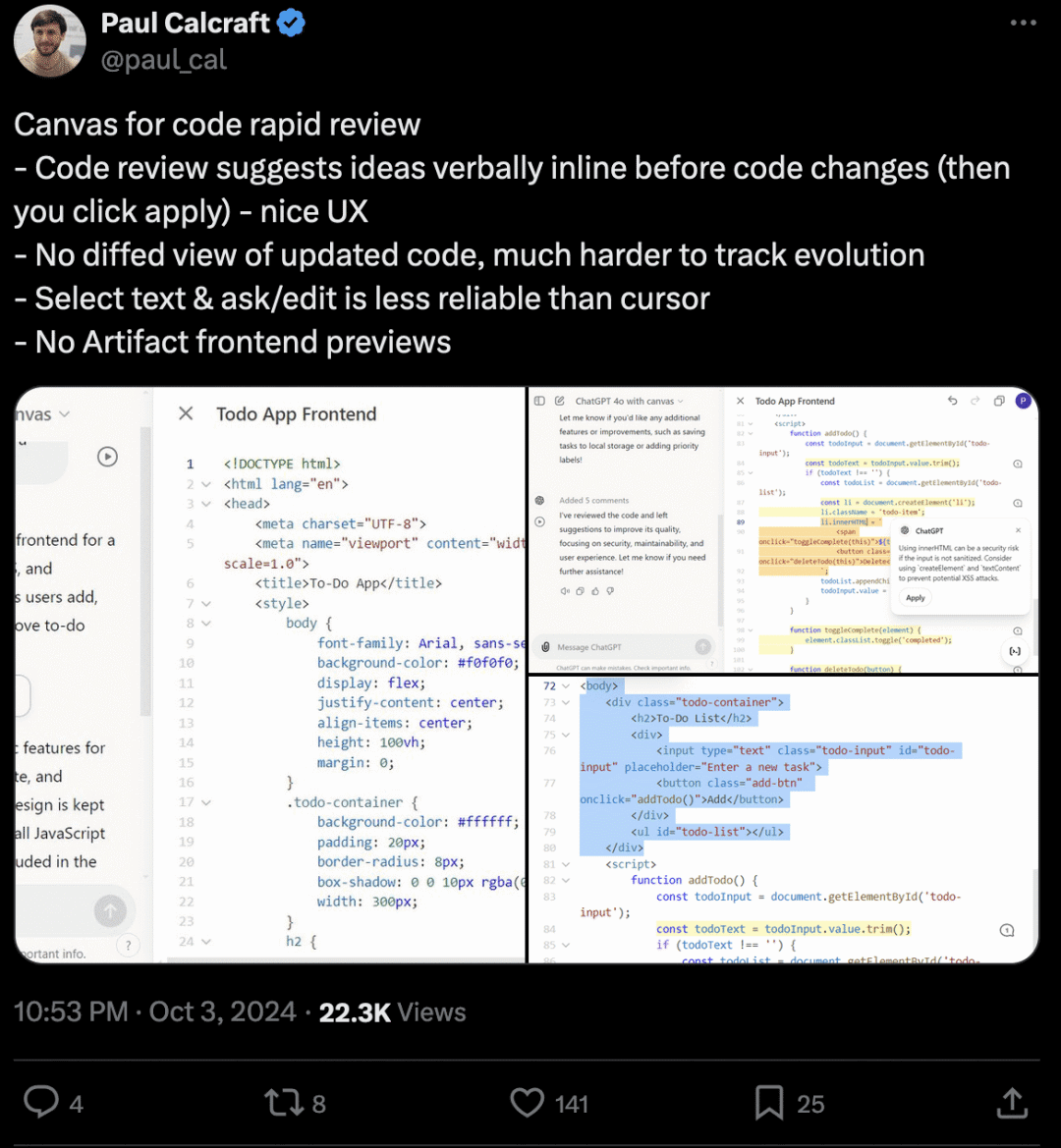

A Fourth Law: Necessary Evolution

What might Asimov suggest to resolve these dilemmas when applying his Three Laws to real-world scenarios? Perhaps a focus on transparency and accountability would be key:

A robot must be transparent and accountable for its actions and decisions, ensuring human oversight and intervention when necessary.

This law would emphasize the importance of human oversight and the need for AI systems to transparently track and explain their actions, and to seek permission before taking consequential ones. It would help prevent AI from being misused and keep humans in control, bridging the gap between ethical theory and practical application.
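One way to picture what such a law might demand in software is an audit trail combined with a human-approval gate. The Python sketch below is a minimal illustration under stated assumptions, not a proposed standard: each decision carries a plain-language explanation and a hypothetical numeric risk score, actions at or above an assumed `risk_threshold` are escalated to a human callback (`ask_human`), and every decision, approved or not, is appended to an audit log. `Decision`, `AccountableAgent`, and the threshold value are illustrative names and numbers.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Decision:
    """One audited decision: what was proposed, why, and whether it went ahead."""
    action: str
    explanation: str
    risk: float  # hypothetical risk score in [0, 1]; computing it well is the hard part
    approved: bool = False
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class AccountableAgent:
    """Hypothetical wrapper: log every decision, escalate risky ones to a human."""

    def __init__(self, risk_threshold: float, ask_human: Callable[[Decision], bool]) -> None:
        self.risk_threshold = risk_threshold
        self.ask_human = ask_human  # human-in-the-loop callback; returns True to approve
        self.audit_log: list[Decision] = []

    def act(self, action: str, explanation: str, risk: float) -> bool:
        decision = Decision(action, explanation, risk)
        if risk >= self.risk_threshold:
            # Oversight: high-risk actions proceed only with explicit human approval.
            decision.approved = self.ask_human(decision)
        else:
            decision.approved = True
        # Accountability: every decision is recorded, whether approved or not.
        self.audit_log.append(decision)
        return decision.approved


# Usage: a reviewer who declines every escalated request.
agent = AccountableAgent(risk_threshold=0.5, ask_human=lambda d: False)
agent.act("disclose diagnosis to family", "patient at high risk", risk=0.8)
agent.act("schedule follow-up visit", "routine care", risk=0.1)
for d in agent.audit_log:
    print(d.timestamp, d.action, d.approved, d.explanation)
```

Keeping rejected decisions in the log is a deliberate choice: accountability depends on being able to review what the system proposed, not only what it actually did.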

In healthcare, transparency and accountability in AI decision-making would help ensure that actions are taken with informed consent, maintaining trust in AI systems. In law enforcement, transparency would require AI systems to explain their decisions and submit to human oversight, helping mitigate biases and promote fairer outcomes. In transportation, we would need to know how self-driving cars weigh potential harm to pedestrians against the risk of colliding with a speeding oncoming car.

When an AI system faces conflicts between these laws, transparent decision-making would allow humans to step in and resolve the moral dilemma, helping ensure that AI actions align with societal values and ethical standards.

Ethical Considerations for the Future

The rise of AI forces us to confront deep ethical questions. As robots become more autonomous, we must consider the nature of consciousness and intelligence. If AI systems achieve some form of consciousness, how should we treat them? Should they have rights? The Three Laws were themselves partly a response to the fear that robots (or AI) might put their own "needs" ahead of those of humans.

Our relationship with AI also raises questions of dependency and control. Can we ensure that these systems always serve humanity’s best interests? How do we manage the risks associated with advanced AI, from job displacement to privacy concerns?

Asimov’s Three Laws of Robotics have inspired generations of thinkers and innovators, but they are only the beginning. As AI becomes an indispensable part of our lives, we must continue evolving our moral frameworks. The proposed Fourth Law emphasizing transparency and accountability, alongside the Zeroth Law prioritizing humanity’s well-being, could be vital additions in ensuring that AI remains a tool for human benefit rather than a potential threat.

The future of AI is not just a technical challenge; it’s an ethical journey. As we navigate this path, Asimov’s legacy reminds us of the importance of foresight, imagination, and an unwavering commitment to moral integrity. The journey is just beginning, and the questions we raise today will shape the future of AI for generations to come.

We should not merely inherit Asimov’s vision; we must urgently build upon it, because when it comes to autonomous robots and AI, science fiction is fast becoming reality.