As data privacy and security regulations continue to tighten, traditional centralized machine learning methods are increasingly restricted. To efficiently train models in distributed data scenarios while protecting user data privacy, Federated Learning (FL) has emerged. It allows multiple parties to train models on local data and collaborate on model building by sharing model parameters instead of raw data.

This article focuses on the Federated Averaging algorithm (FedAvg), the most classic algorithm in Federated Learning, exploring its principles, code implementation, and practical solutions for handling data imbalance. Through rich examples and detailed analysis, we comprehensively showcase the potential and challenges of Federated Learning.

1. Overview of Federated Learning

1.1 Definition and Background of Federated Learning

Federated Learning, proposed by Google, is a distributed machine learning method aimed at addressing issues of data privacy, decentralization, and heterogeneity. Unlike traditional centralized methods, Federated Learning involves training models on local devices (such as smartphones, hospitals, etc.) and only uploading model parameters to the server, avoiding the direct sharing of sensitive data.

Typical Federated Learning scenarios include:

-

Personalized Recommendations: For example, optimizing input methods on mobile devices or advertisement recommendations.

-

Healthcare: Hospitals share models to improve diagnostic accuracy without sharing patient data.

-

Finance: Cross-bank fraud detection models.

1.2 Features of Federated Learning

-

Privacy Protection: By training models locally, it protects the data privacy of participants.

-

Distributed Training: Models are independently trained on multiple devices, reducing reliance on a central server.

-

Data Heterogeneity: It adapts to non-independent and identically distributed (Non-IID) data between clients.

2. Federated Averaging Algorithm (FedAvg)

Federated Averaging (FedAvg) is one of the core algorithms of Federated Learning, proposed by McMahan et al. in 2017. It updates the global model by performing a weighted average of local model updates, greatly simplifying the implementation of Federated Learning.

2.1 Core Idea of FedAvg

The key steps of the FedAvg algorithm are as follows:

-

Global Model Initialization: The central server initializes the global model parameters (w^0).

-

Distribute Model: The server sends the global model to all clients.

-

Local Training: Each client trains the model locally on its data for several epochs and updates the model parameters.

-

Upload Updates: Clients send their local model updates back to the server.

-

Global Aggregation: The server aggregates the client model parameters using a weighted average and updates the global model.

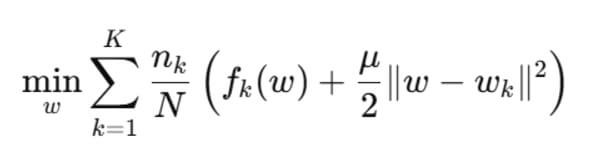

2.2 Formula Derivation of FedAvg

Assume there are clients, with the data size of client denoted by , and the total global data size is . In round , the local update for client is denoted as .

The global model update formula is:

This formula performs a weighted average of the client models, ensuring that clients with larger data sizes have a greater influence on the global model update.

2.3 Pseudocode for FedAvg

Below is the pseudocode for the FedAvg workflow:

-

Initialize the global model parameters .

-

For each training round :

-

a. The server sends the global model to the clients.

-

b. Each client performs several local optimization steps on its local data to obtain updated parameters .

-

c. Clients upload to the server.

-

d. The server aggregates the client parameters and updates the global model:

-

Return the final global model .

2.4 Code Implementation of FedAvg

Below is a simple implementation of the FedAvg algorithm based on PyTorch:

import torch

import torch.nn as nn

import torch.optim as optim

from torch.utils.data import DataLoader, Dataset

# Define a simple dataset

class SyntheticDataset(Dataset):

def __init__(self, size, num_features):

self.data = torch.randn(size, num_features)

self.labels = (self.data.sum(axis=1) > 0).long() # Simple binary classification task

def __len__(self):

return len(self.data)

def __getitem__(self, idx):

return self.data[idx], self.labels[idx]

# Define a simple model

class SimpleModel(nn.Module):

def __init__(self, input_dim):

super(SimpleModel, self).__init__()

self.fc = nn.Linear(input_dim, 2)

def forward(self, x):

return self.fc(x)

# Local training function

def local_training(model, dataloader, optimizer, criterion, epochs):

model.train()

for _ in range(epochs):

for x, y in dataloader:

optimizer.zero_grad()

outputs = model(x)

loss = criterion(outputs, y)

loss.backward()

optimizer.step()

return model.state_dict()

# Federated Averaging implementation

def fed_avg(global_model, client_loaders, rounds, local_epochs, lr):

for round_idx in range(rounds):

local_models = []

for loader in client_loaders:

# Clone the global model

local_model = SimpleModel(global_model.fc.in_features)

local_model.load_state_dict(global_model.state_dict())

optimizer = optim.SGD(local_model.parameters(), lr=lr)

criterion = nn.CrossEntropyLoss()

# Local training

local_state_dict = local_training(local_model, loader, optimizer, criterion, local_epochs)

local_models.append(local_state_dict)

# Aggregate local models

global_state_dict = global_model.state_dict()

for key in global_state_dict.keys():

global_state_dict[key] = torch.mean(torch.stack([local_model[key] for local_model in local_models]), dim=0)

global_model.load_state_dict(global_state_dict)

print(f"Round {round_idx + 1} completed.")

return global_model

# Simulate data and training

num_clients = 5

data_per_client = 100

input_dim = 10

client_loaders = [

DataLoader(SyntheticDataset(data_per_client, input_dim), batch_size=10, shuffle=True)

for _ in range(num_clients)

]

global_model = SimpleModel(input_dim)

global_model = fed_avg(global_model, client_loaders, rounds=10, local_epochs=5, lr=0.01)

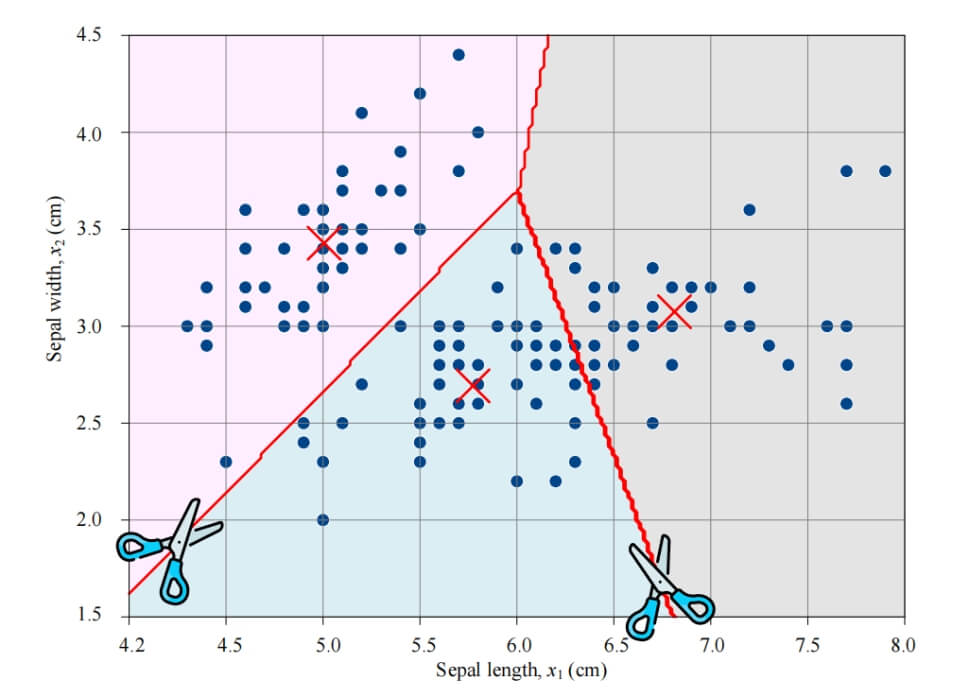

3. The Impact of Data Imbalance on FedAvg

3.1 Definition of Data Imbalance

In Federated Learning, data imbalance typically manifests in the following forms:

-

Quantity Imbalance: Significant differences in the data size across clients.

-

Class Imbalance: Uneven distribution of classes within a single client’s dataset, where some classes dominate.

The impact of data imbalance on Federated Learning includes:

-

Model Bias: The global model performs poorly on certain classes or clients' data.

-

Training Instability: Uneven contributions from clients may disrupt the model update process.

3.2 Strategies for Addressing Data Imbalance

-

Adjusting Client Weights: Adjust client weights based on data size to reduce the negative impact of small sample clients.

-

Resampling: Perform over-sampling or under-sampling in local datasets to balance the data distribution.

-

Data Augmentation: Generate more samples using data augmentation techniques to alleviate class imbalance.

-

Algorithmic Improvements: Methods like FedProx introduce regularization terms to prevent excessive updates and improve robustness.

3.3 Experimental Example: Simulating and Comparing Imbalanced Data

The following code shows how to simulate data imbalance:

def create_imbalanced_loaders(num_clients, input_dim):

loaders = []

for i in range(num_clients):

if i % 2 == 0:

data_size = 200 # Larger data size

else:

data_size = 50 # Smaller data size

dataset = SyntheticDataset(data_size, input_dim)

loaders.append(DataLoader(dataset, batch_size=10, shuffle=True))

return loaders

imbalanced_loaders = create_imbalanced_loaders(num_clients, input_dim)

# Run FedAvg on imbalanced data

global_model = fed_avg(global_model, imbalanced_loaders, rounds=10, local_epochs=5, lr=0.01)

By comparing training results on balanced and imbalanced data, the impact of data imbalance on model performance can be observed.

4. Improvement Methods: FedProx and Personalized Federated Learning

FedProx introduces regularization terms to prevent overfitting of local models, thereby improving the global model's robustness on non-IID data.

5. Conclusion and Outlook

Federated Learning, as a cutting-edge technology in distributed machine learning, enables collaborative modeling while protecting data privacy. FedAvg, as a classic algorithm, is simple and efficient but has limitations when addressing data imbalance and non-IID data. Future research will focus on algorithmic improvements and communication optimization to meet more practical needs.

Through this article, we hope readers gain a deeper understanding of Federated Learning, FedAvg, and the challenges and solutions related to data imbalance, providing theoretical and practical support for real-world applications.