On August 6, John Schulman, co-founder of OpenAI and architect of ChatGPT, announced his resignation and will join Anthropic, another large model company founded by former OpenAI employee Dario Amodei.

Nearly 9 years ago, Schulman joined OpenAI after graduating from graduate school and became a member of the founding team. He is one of the early pioneers of deep reinforcement learning. What many people don’t know is that he is also the head of the ChatGPT project. He led the research and development of ChatGPT’s secret weapon RLHF (reinforcement learning with human feedback) technology.

Before taking charge of ChatGPT, he invented the widely used proximal policy optimization algorithm (PPO), which is actually part of ChatGPT training. He also invented the trust region policy optimization (TRPO), which has made important contributions to OpenAI Gym, OpenAI Benchmark, and many meta-learning algorithms in the modern deep learning era. It is worth mentioning that his doctoral supervisor is Pieter Abbeel, a pioneer in the field of reinforcement learning and a professor at the University of California, Berkeley.

Schulman has both research vision and a rich foundation in engineering practice. Since his master's degree, he has been studying reinforcement learning algorithms. From data collection to language model training and interaction, he has rich experience and exploration of different parts of the big model technology stack. Perhaps he is the person who knows the most about the unique secrets of OpenAI's big model.

In his farewell letter to his colleagues at OpenAI, Schulman said that he chose to leave in order to focus on AI alignment research, just as Ilya Sutskever, the chief scientist who left OpenAI, and Jan Leike, the head of super alignment, gave similar reasons. Earlier, after Jan Leike left, Schulman became the head of OpenAI's alignment science work team (also known as the "post-training" team). Now, he will fight side by side with Jan Leike who has joined Anthropic.

Recently, in his last interview as an OpenAI member with the well-known technology podcast blogger Dwarkesh Patel, Schulman also pointed out that as AI performance improves, we may need to suspend further training to ensure that we can safely control the technology. This is enough to show his emphasis on AI alignment.

In addition, they also discussed how to improve the capabilities of large models through pre-training and post-training, as well as topics such as the future of AGI. Schulman predicts that in the next few years, AI will be able to take on more complex tasks, such as entire coding projects, and self-repair from errors.

1Pre-training, post-training and generalization of large models

Dwarkesh Patel: The current GPT-4 has an Elo score that is about 100 points higher than the initial release. Is this all an improvement brought by post-training?

John Schulman: Yes, most of it is post-training, but there are also many different and individual improvements. We will consider data quality and data quantity. In fact, it is just more times to deploy the whole process and collect new data, as well as changing the type of annotations collected, but all factors together will bring you quite significant benefits.

Dwarkesh Patel: There is a big difference between pre-training and post-training. In addition to the specific technical details such as loss functions and training mechanisms, from a more macro and conceptual perspective, what exactly does pre-training create? What does post-training do on this basis?

John Schulman: In pre-training, we essentially train the model to mimic the entire content on the internet or the web, including web pages, code, etc., so we get a model that can generate content that resembles random web pages. The model is also trained to assign probabilities to all content to maximize the probability.

The main goal of the model is to predict the next token based on the previous token sequence, where a "token" can be a word or part of a word. Since the model has to assign a probability to it - we are training to maximize the log probability, it will eventually become very accurate. It can not only generate the entire content of the web, but also assign probabilities to everything. The base model can play a variety of roles and generate a variety of content.

In the post-training stage, our goals are more specific, usually to make the model act as a specific type of chat assistant. This means that the model will be shaped into a specific role, and its core task is to be helpful. It is not imitating humans, but actually answering questions or performing tasks. We optimize for the output that humans find useful and like, rather than simply copying raw content from the web.

Dwarkesh Patel: Is better post-training a big competitive advantage? Currently, companies differentiate themselves by metrics like the size of their models.

John Schulman: There are some competitive advantages, because it’s a very complex operation that requires a lot of skilled people to do, and they need to have a lot of implicit and organizational knowledge.

Post-training is a competitive advantage because it’s very complex and requires a lot of effort and a lot of R&D to create a model that actually has the features that people care about. It’s not easy to start doing it right away, and the companies that are doing the most pre-training seem to be doing the same post-training.

To some extent, maybe you can copy or start more of this training. There is also a force that makes the competitive advantage of models less strong, you can distill the model, or take someone else’s model and clone its output, and compare it by using someone else’s model as an evaluation.

Larger companies may not do this because it would violate the terms of service policy and it would be a blow to their ego. But I expect that some smaller companies are starting to do this to catch up with their competitors.

Dwarkesh Patel: Assuming RL works better on smarter models. Will the ratio of compute spent between pre-training and post-training significantly favor post-training?

John Schulman: There is some debate about this, and right now the ratio is pretty lopsided. You could argue that the outputs generated by the models are higher quality than most of the content on the web, so it makes more sense to let the models think for themselves rather than just train them to mimic what is on the web. We have seen a lot of gains with post-training, so I expect to continue to push this approach and potentially increase the amount of compute put into it.

Dwarkesh Patel: There are some models that are very good at acting as chatbots. What do you think the models that will be released by the end of the year will be able to do?

John Schulman: In the next year or two, we can expect models to perform more complex tasks than they currently do. For example, they will not be limited to providing writing suggestions for a single function, but will be able to take on entire coding projects. These models will be able to understand high-level instructions, autonomously write code files, run tests, and analyze the results. They will even be able to perform multiple rounds of iteration to complete more complex programming tasks.

To achieve this, we need to train models on more complex combinations so that they can perform more difficult tasks.

In addition, as the performance of the models improves, they will become more efficient at recovering from errors or handling edge cases. Once they encounter problems, they will be able to effectively self-heal.

In the future, we will not have to rely on a lot of data to teach models how to correct errors or get back on track. Even a small amount of data, or the model's generalization ability based on other situations, will be enough to allow them to quickly adjust and get back on track when they encounter obstacles. In contrast, current models tend to get stuck and stop when faced with difficulties.

Dwarkesh Patel: How does generalization help models get back on track, and what is the connection between generalization and reinforcement learning?

John Schulman: There is no direct connection between the two. Usually, we rely on a small amount of data to deal with various problems. When you assemble a dataset with a wide range of samples, you can get representative data from a variety of situations. If you have a model with excellent generalization ability, even if it has only been exposed to a few examples of how to correct errors and get back on track, or has only learned a few similar cases during the pre-training phase, the model will be able to apply these limited experiences and knowledge to the current problem.

If the model's generalization ability is weak, but the data is sufficient, it can also complete almost any task, but it may require a lot of effort in a specific domain or skill. In contrast, models that generalize better may not need any training data or additional effort to do the task well.

Dwarkesh Patel: Right now, these models can keep working for five minutes. We expect them to gradually take on more complex tasks, such as those that take humans an hour, a week, or even a month to complete. To achieve this goal, will we need to increase the computing resources tenfold at each new level, similar to the scaling law of pre-training? Or will we be able to directly reach the ability to perform long-term tasks through a more efficient and simplified process as the model improves in sample efficiency, without having to increase the computing resources significantly each time?

John Schulman: At a high level, I agree that long-term tasks do require higher intelligence in the model, and such tasks will be more expensive to train. I don't think there is a very clear scaling law unless the experiment is designed in a very careful way or in a specific way. There may be some stage transitions where the model can handle longer tasks after reaching a certain level.

For example, when humans plan for the long term, their basic thinking mechanism may not change depending on the time scale. We may be using the same psychological mechanisms for both short-term and long-term planning, a process that is different from reinforcement learning, which requires discounting factors that account for time scales.

Language allows us to define and plan goals over different time scales. Whether it is the upcoming month or the distant decade, we can take immediate action to move towards the set goal. Although I am not sure whether this marks a qualitative leap, I expect models to demonstrate general capabilities across time scales.

Dwarkesh Patel: It seems that our current models are very smart at processing single tokens, perhaps not much different from the smartest humans, but they cannot perform tasks effectively and continuously, such as continuing to write code five minutes later, in line with the long-term goals of the project. If we start long-term reinforcement learning training, which can immediately improve the long-term coherence of the model, should we expect it to reach human-level performance?

John Schulman: It is uncertain what we will encounter and how fast we will progress after entering this stage of training. The model may have other flaws, such as not being as good as humans in decision-making. This training will not solve all problems, but it will be a significant improvement in improving long-term task capabilities.

Dwarkesh Patel: Is this reasonable? Are there other possible reasons for the bottleneck? Given that the models have already acquired extensive knowledge representations through pre-training, what challenges remain to be overcome in order to maintain coherence over time with reinforcement learning?

John Schulman: Perhaps human experts bring some unique experience in performing different tasks, such as having an aesthetic sense or being better at handling ambiguity. If we want to do research and so on, these factors will come into play.

Obviously, the actual application of the model will be limited by some day-to-day functions, whether it can use the user interface, interact with the physical world, or access the resources it needs. So there may be many temporary obstacles that, while not permanent, may initially slow progress.

Dwarkesh Patel: You mentioned that this process may be more sample-efficient because the model can generalize from pre-training experience, especially the ability to get out of trouble in different situations. So what is the strongest evidence of generalization and knowledge transfer you have seen? The ability of future models seems to depend on how well they generalize. Are there particularly convincing examples of generalization?

John Schulman: We do observe some interesting examples of generalization in the post-training stage. A typical example is that if fine-tuned with English data, the model can perform well in other languages. For example, the assistant trained on English data also responds reasonably well to Spanish queries. While it sometimes makes a small mistake in responding in English or Spanish, it responds correctly in Spanish most of the time.

This is an interesting example of the model's ability to generalize, adapting quickly and automatically responding appropriately in the right language. We saw something similar with multimodal data, where the model also shows reasonable ability on images if you fine-tune on text only.

In the early days of ChatGPT, we tried to address the problem of the model understanding its own limitations. Early versions of the model would mistakenly believe that they could perform actions such as sending an email or calling an Uber. The model, trying to play the role of an assistant, might falsely confirm to the user that an email had been sent, when it actually had no such ability.

To this end, we set out to collect data to address these issues. We found that even a small number of targeted examples, once mixed with other data, can effectively solve the problem. I can't remember the exact number of examples, but it was around 30, but these few examples show general characteristics of the model's behavior and illustrate that the model does not have certain capabilities. These examples also generalize well to other domains that the model was not trained for.

Dwarkesh Patel: Let's say you have a model that is trained to be coherent for long periods of time. Setting aside other possible limitations, is it possible that we will have models that are at human level next year? I envision a model that interacts with you just as well as a colleague. You can ask them to do something and they'll do it.

John Schulman: It's hard to say. There are multiple deficiencies when communicating with these models right now, in addition to the long-term coherence problem, such as difficulty thinking deeply or focusing on the questions asked.

I don't think that just a small improvement in coherence is enough to achieve (AGI). I can't say what the main deficiencies are that would prevent them from becoming a fully functional assistant.

2Reasoning Power of Large Models

Dwarkesh Patel: Obviously, RLHF makes models more useful, which is important, so the description of "lobotomized" may not be accurate. However, once these models are put into chatbot form, they all speak in a very similar way, wanting to "deepen" the discussion, wanting to convert information or content into a bulleted list format, and often seem rigid and boring.

Some people complain that these models are not creative enough. As we talked about before, until recently, they could only write rhyming poems and could not output non-rhyming poems. Is this a specific way RLHF is now? If so, is it caused by the evaluators? Or is it because of the loss function? Why do all chatbots look like this?

John Schulman: I think there is a lot of room for improvement in the specific way the training process is. We are actively working to improve this and make the writing more lively and interesting. We have made some progress, such as improving ChatGPT's personality to make it more interesting and chat with it better and less robotic.

There is also an interesting question about how specific words appear, such as "delve". I've also found myself using this term recently, and I wonder if it's influenced by the model. There may be some other interesting effects, and there may be unintentional distillation between language models and providers. If you hire people to do labeling tasks, they may just feed things into the model, maybe call up their favorite chatbot, feed the task, let the model do the task, and then copy and paste it back. So this may explain the convergence of some outputs.

People do like to list bullet points and structured responses, and get a lot of information from the model. How much of this is a quirk of specific choices and design during the later training process, and how much of it is what people actually want is still unknown.

Dwarkesh Patel: From the perspective of human psychology, how should we define the influence of current reinforcement learning systems on models? Does it exist as a driving force, or as a goal or impulse? How does it guide the model to not just change the way the chatbot expresses itself, but to adjust its output at a deeper level, such as avoiding certain words and adopting more appropriate expressions?

John Schulman: In the field of AI, reinforcement learning mechanisms have similarities to human intrinsic motivation or goal pursuit, which is to guide the system towards a specific state rather than other possibilities. However, humans have a more complex understanding of motivation or goals. It is not only about achieving goals, but also about the emotional experience of achievement when achieving goals. These emotional experiences are more related to the learning algorithm than the performance of the model when it is running in a fixed state.

I don’t know if my analogy is close enough. In a sense, the model does have motivation and goals in some meaningful way. With RLHF, the model tries to maximize human approval, which is measured by the reward model, and the model just tries to output content that people will like and judge as correct.

Dwarkesh Patel: I have heard at least two ideas about using internal monologue to improve reasoning ability. One is that the model learns from its own output, through a series of possible thought clues, it learns to follow the one that finally outputs the correct answer, and then trains it before deployment; the other is to use a lot of computation for reasoning at deployment time, which requires the model to "talk to itself" at deployment time. Which training method can better improve the model's reasoning ability?

John Schulman: By definition, reasoning is a task that requires some step-by-step computation at test time. I also expect to gain some results at training time. I think combining these two methods will give the best results.

Dwarkesh Patel: Currently, AI models learn in two main ways: one is the training phase, which includes pre-training and post-training. Pre-training takes up most of the computing resources, processing trillions of tokens and absorbing a lot of information quickly. If humans learn in this way, they are likely to get lost, and this method is not efficient enough. The second is contextual learning, which is more sample efficient, but the learning results are lost after each interaction. I am curious if there is a third way to learn, which does not lose with each interaction and does not require processing massive amounts of data.

John Schulman: Indeed, it is this middle ground between large-scale training (i.e. creating a single model that can do everything) and contextual learning that is missing from current systems. Part of the reason is that we have expanded the context length so much that there is not much motivation to explore the area between the two. If the context length can reach 100,000 or even 1 million, it is actually quite sufficient. In many cases, the context length is not the limiting factor.

I also think that this learning method may need to be supplemented by some kind of fine-tuning. The capabilities brought by fine-tuning and contextual learning are complementary to each other in some ways. I expect to build systems that can learn online and have some cognitive skills, such as being able to self-reflect and find new knowledge to fill in knowledge gaps.

Dwarkesh Patel: Do these processes happen simultaneously? Or is there a new training mechanism that allows long and short-term tasks and various training needs to be achieved simultaneously? Are they independent of each other, or is the model smart enough to be able to self-reflect and perform long-term tasks at the same time to ensure that it gets the appropriate reward on the long-term goal?

John Schulman: If you are doing some long-term task, you are actually learning by doing. The only way to complete a task that involves many steps is to continuously update your learning and memory as you learn. In this way, there is a continuous transition between short-term memory and long-term memory.

As we start to focus more on long-term tasks, I expect the need for this ability will start to become clear. In some ways, putting a lot of things into context helps a lot because we now have very long context. However, you may still need fine-tuning.

As for the ability to introspect and actively learn, the model may automatically learn where its ability is declining. The model does calibrate what it knows, which is why it does not have serious hallucinations. Models have some understanding of their own limitations, and the same ability can be used for active learning and so on.

Dwarkesh Patel: What do you expect RL to look like? Is it reasonable to assume that by the end of this year or next year, you have an assistant that can work with you on the screen? What direction will it develop from then on?

John Schulman: I absolutely hope RL will develop in this direction, but it is not clear what the best form is. It may be like Clippy on the computer to provide assistance, or more like a helpful colleague in the cloud. You can expect which form will work best, and I think people will try them all.

I expect the mental model of this assistant or helpful colleague to become more realistic. You can share more daily work with it, not just one-time queries, but there is an entire project you are working on, and it knows everything you did in that project. The model can even make proactive suggestions, maybe you can tell it to remember to ask me this question and whether I have made any progress. Models have always lacked initiative, and I hope to see a shift from one-time queries to entire projects working with models.

3.Is the development of large models entering a "plateau"?

Dwarkesh Patel: Compared to your expectations in 2019, has AI progressed faster or slower than you expected?

John Schulman: Faster than I expected since GPT-2. I definitely agree that scale and pre-training play a big role, but when GPT-2 was done, I wasn't completely convinced that it would revolutionize everything.

The real transformation was after GPT-3, when I shifted the direction of what I was doing and what the team was doing. After that, we got together and said, "Oh, yeah, let's see what we can do with these language models." But when GPT-2 was released, I wasn't so sure about that.

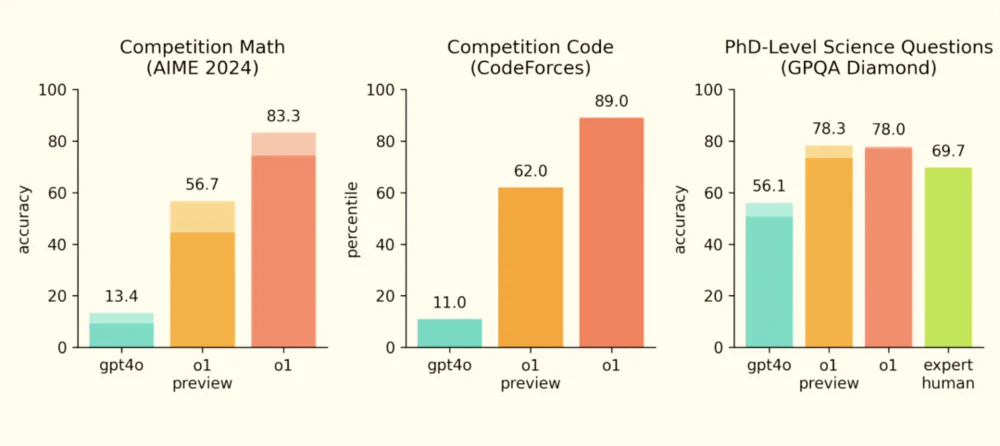

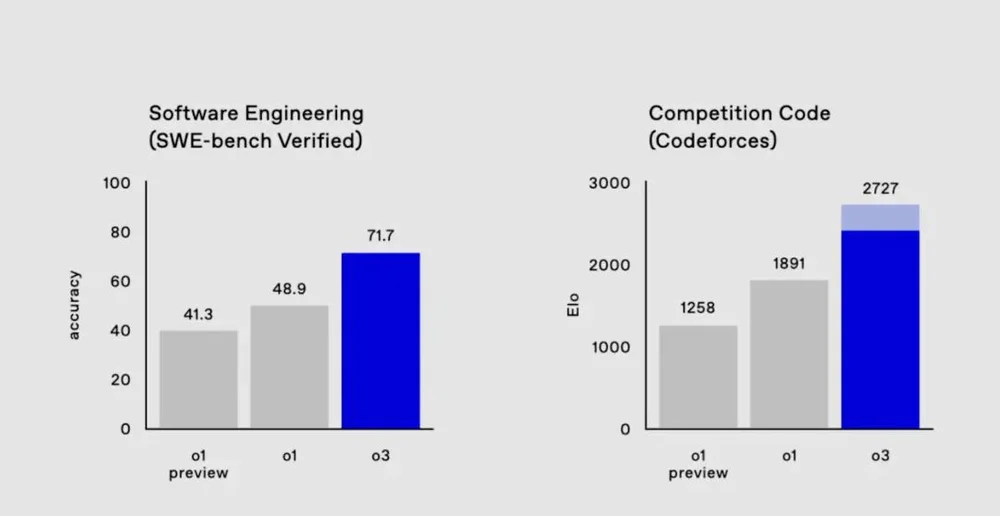

Dwarkesh Patel: Since GPT-4, it seems that no model has improved significantly, and one hypothesis is that we may be approaching some kind of plateau. These models don't actually generalize very well and will hit a "data wall" (https://www.wsj.com/tech/ai/ai-training-data-synthetic-openai-anthropic-9230f8d8), and beyond this data wall, the abilities unlocked by memorizing large pre-training datasets will not help you get models that are smarter than GPT-4. Is this assumption correct?

John Schulman: Are we about to hit the data wall? I can't draw enough conclusions from the time since GPT-4 was released, because it does take a while to train these models and do all the preparation for training the next generation of models. It is indeed challenging because of the limited amount of data, but it will not hit the data wall immediately. However, as you get closer to the "data wall", the nature of pre-training will change.

Dwarkesh Patel: We talked about some examples of generalization, if you train a bunch of code, will it get better at language reasoning? Do you observe positive transfer effects between different modalities? When the model is trained on a lot of videos and images, can it get smarter with these synthetic data? Or is the power it gains limited to the specific types of labels and data used in the training set?

John Schulman: I think it's difficult to study this kind of problem because you can't create so many pre-trained models. Maybe you can't train a model the size of GPT-4 and do ablation studies at that scale. Maybe you can train a lot of GPT-2-sized models, or even GPT-3-sized models, with different data mixes and see what you get. I'm not aware of any public results that involve ablation of code data and inference performance, etc., which I'm also interested in.

Dwarkesh Patel: I'm curious, as the scale gets larger, the models get smarter. And the ablation at the GPT-2 level showed that there wasn't much transfer effect, which would provide evidence for the level of transfer of GPT-4-sized models in similar areas?

John Schulman: Yes, but you can't conclude that if transfer fails at the size of GPT-2 models, it will also fail at a larger scale. Larger models may learn better shared representations, while smaller models rely too much on memory. So it's true to some extent that larger models can learn how to do the right computations.

Dwarkesh Patel: The answer could be very simple. You train bigger models on the same amount of data and they get smarter. Or to get the same level of intelligence you just train them on less data. The model has more parameters, sees less, and is now just as smart. Why is that?

John Schulman: There is no good explanation for the scaling law of parameter count, and I don't even know what the best mental model is. Obviously if you have a bigger model you have more capacity, so you should end up with lower loss.

Why are bigger models more sample efficient? Your model is like a collection of different circuits that do computations, and you can imagine it's doing computations in parallel, and the output is a weighted combination of them. If you have more width (actually width is somewhat similar to depth, because the depth with a residual network can update something similar to width in the residual stream).

When you learn all the different computations in parallel, you can compute more with a bigger model, so you have more chances to make guesses, one of which is the right answer, and you end up guessing right many times, and the model gets better.

Some of the algorithms work like this, like mixture models or multiplicative weight update algorithms, and mixture of experts algorithms are essentially weighted combinations of experts with learned gates.

You might imagine that just having a bigger model gives you a higher probability of getting the right features.

Of course, it's not just features that are completely unrelated to the linear combination you're taking, but more like a library of features that you might chain together in some way and combine. So the bigger model has a larger library of different computations, a lot of which are dormant and only used part of the time, but the model has more room to find circuits to do some useful tasks.

Dwarkesh Patel: A bigger question is, do you feel that the same amount of computation has trained better models since GPT-4? Or have you made sure that the learning of GPT-5 can be better and more scalable, but it's not like training GPT-4 on the budget of GPT-3.5?

John Schulman: We are continuing to make progress in improving efficiency. Whenever you have a one-dimensional performance metric, you find different improvements that are substituted for each other, and both post-training and pre-training can improve these metrics, but they may focus on different things.

Ultimately, if you have a single number, they will all be substituted for each other to some extent. For metrics like human evaluation, we have made significant progress in both pre-training and post-training, regardless of what humans prefer.

4.Looking AGI 2025

Dwarkesh Patel: What modalities will become part of the model and at what stage will they be unlocked?

John Schulman: New modalities will evolve over time or be added very quickly. I expect that through a combination of pre-training and post-training, the model capabilities will continue to get better overall and open up new use cases.

Right now, AI is still a small percentage of economic development, and it only helps a fairly small portion of the work. Over time, this percentage will increase, not only because the performance of the models improves, but also because people figure out how to integrate the models into different processes. So even if we just keep the models in their current state, you will still see its use continue to grow.

I expect AI to be more widely used and used to perform more complex technical tasks. For example, the programming that I mentioned earlier, AI will not only take on longer-term projects, but also assist in various types of research. I hope that we can use AI to advance science in many ways, because AI may be able to understand all the literature in a specific field and sift through large amounts of data, which is a task that humans may not have the patience to do.

I hope that the future will be like this: all these tasks are still driven by humans, but there is an AI assistant that you can give instructions to and point to many different problems that are valuable to you, and everyone will have AI to help them complete more tasks.

Dwarkesh Patel: Right now AI only plays an auxiliary role, but in the future, AI will do better than humans in anything people want to do, and they will be able to help you complete tasks directly, or even manage the entire company. Hopefully, by then we have systems that are fully aligned with users so that users can trust that the company will run the way they want.

John Schulman: We don't want AI to run the company right away. Even if these models are good enough to run a successful business on their own, we still want people to oversee these important decisions and be in control. In some ways, this is also optional.

I think people will still have different interests and ideas and want to guide AI to their own interesting pursuits, but AI itself does not have any inherent desire unless we build this desire into the system. So even if AI becomes very powerful, I hope that humans will still be the dominant force in deciding what AI will do.

Dwarkesh Patel: Essentially, the advantage of uploading agents to a server is that we can aggregate many intelligent resources or have agents upload themselves to a server. Now, even though we have achieved coordination, I am still not sure what actions should be taken after this and how this ensures that we are heading towards a positive outcome.

John Schulman: If we can coordinate and solve the problem of technology alignment, we can safely deploy intelligent AI, which will become an extension of human will and avoid catastrophic abuse. This will usher in a new stage of prosperity and rapid scientific development, which is the best situation.

Dwarkesh Patel: This makes sense. I am curious about how it will develop in the next few years. In an ideal situation, all participants will reach a consensus and suspend action until we are sure that the system we build will not get out of control or enable others to do evil. So how do we prove this?

John Schulman: It will be more conducive to safety if we can deploy systems gradually, and each generation of systems will be smarter than the previous one. I hope that our development path is not a situation where everyone needs to coordinate, lock down the technology, and then carefully launch it, which may lead to the accumulation of potential risks.

I would prefer that we continue to release systems that are slightly better than the previous generation, while ensuring that each improvement is matched by the performance increase in terms of safety and consistency. If problems arise, we can slow down in time. That's what I hope for.

If there is a sudden and huge performance increase in AI systems, we will face a question: How can we be sure that the new system is safe enough to bring to market? I can't provide a permanent answer to this. However, in order to make such an improvement more acceptable, it may require a lot of testing and simulated deployment.

You also need a good monitoring system to detect problems in the deployed system as soon as they occur. Maybe you need some kind of monitoring mechanism to observe the behavior of the AI and look for signs of problems. You also need multiple layers of defense. You need to make sure that the model itself is well-behaved and ethically impeccable, but also that it is extremely difficult to abuse. In addition, you need excellent monitoring to detect any unforeseen problems.

Dwarkesh Patel: How can you detect such a sudden and huge performance increase before deploying these systems widely?

John Schulman: This requires a lot of evaluation during the training process.

Dwarkesh Patel: Is it reasonable to train reinforcement learning for a long time knowing that the performance of the model may increase dramatically in a short period of time? Or is the likelihood of such an improvement very low?

John Schulman: When we see potentially dangerous capabilities, we should be very cautious in doing this kind of training. We don't need to worry too much at the moment because it's hard to get the model to respond coherently. If the model becomes very efficient, we need to take these issues seriously and do a lot of evaluation to make sure the model behaves consistently and doesn't betray us. At the same time, we need to pay attention to sudden changes in the model's capabilities and conduct rigorous evaluations of the model's capabilities.

We also need to ensure that the training content does not cause the model to have an incentive to fight us, which is not difficult. The way we train it now through RLHF, even if the model is very intelligent, it is quite safe. The only goal of the model is to produce information that humans like. It doesn't care about other things in the outside world. It only focuses on whether the text it generates can be accepted by humans.

Obviously, if the model needs to perform a series of actions, especially those involving tools, it may do some actions that are meaningless to humans, but unless there is a specific motivation, there is no reason for it to do anything other than produce high-quality output.

In AI, there is a commonplace idea about “instrumental convergence”: models may attempt to “rule the world” in order to eventually produce a piece of high-quality code. If you ask a model to write a Flask app for you, it may first think “I have to rule the world first”, but on reflection, for a well-defined task like writing an app, it seems unnecessary for the model to rule the world first. Of course, if you give the model the task of “making money”, it may take some bad behaviors with the goal of “making money”.

Dwarkesh Patel: If there are no other obstacles in the next year, we do achieve AGI, what are your plans?

John Schulman: If AGI is achieved faster than expected, we must act cautiously. We may need to suspend further training and deployment, or carefully decide on the scale of deployment when deploying, until we are sure that we can safely manage this technology. Deep understanding of its behavior patterns and capabilities is critical.

5.Other questions

Dwarkesh Patel: The model responses are indeed more verbose than some people expect. Perhaps this is because the evaluators prefer long answers during the annotation phase. I wonder if this is because of the pre-training method and the infrequent occurrence of stopping sequences.

John Schulman: Some bias in the annotation process may have led to the lengthy answers. We tend to train on one message at a time, rather than interacting with the entire model. If you only see one message, it may be a clarification question, or a short reply and invitation to follow up, which will appear less complete than one that covers all possibilities.

Another question is whether people's preferences change based on the speed of the model output. Obviously, if you are just waiting for the token output, you will prefer it to get straight to the point, but if it can output a lot of text for you instantly, you may not actually care if there is a lot of boilerplate language or a lot of content that you will skim.

Dwarkesh Patel: The reward model is interesting and it comes closest to aggregating people's needs and preferences. I am also thinking about smarter models, one is that you can give it a list of things we want that are not so trivial and obvious. On the other hand, I heard you say that a lot of human preferences and values are very subtle, so they're best represented in pairs of preferences. When thinking about a model at the level of GPT-6 or GPT-7, do we give it more written instructions or do we deal with these latent preferences?

John Schulman: That's a good question. These preference models do learn a lot of subtleties about human preferences that are hard to capture in written instructions. Obviously, you could write an instruction manual with lots of comparison examples, a model specification, with lots of examples and explanations, but it's not clear what the best format is for describing preferences.

My guess is that whatever you can get from a large dataset that captures fuzzy preferences, you can distill it into a short document that captures these ideas. Larger models automatically learn a lot of these concepts about what people might find useful and helpful. These models will have some complex moral theories to attach to them. Of course, there's a lot of room for other different styles or moral theories to attach to them.

So if we were to write a document, if we were to align these models, what we're doing is attaching it to a particular style or moral code, which still requires a fairly long document to capture exactly what you want.

Dwarkesh Patel: This should help eliminate competitive advantages. What is the median evaluator like? Where do they cluster and what is their knowledge level?

John Schulman: This group is quite diverse. We do hire evaluators with different skills for different types of tasks or projects. A good mental model is to look at people on Upwork and other platforms and see who is doing remote gig work.

This is a fairly international group, with a lot of Americans. We hire different groups for different types of annotations, such as those that are more focused on writing or STEM tasks. People who do STEM tasks are more likely to be from India or other low- and middle-income countries, while people who do English writing and composition are more likely to be from the United States.

Sometimes we need to hire different experts for some projects. Some people are very talented and do these tasks as well as our researchers do, and more carefully. I would say that our people are quite skilled and serious now.

Dwarkesh Patel: The ability of these models to help you with a specific task is related to having a high match of labels in the supervised fine-tuning dataset. Is this true? Can it teach us to use FFmpeg correctly? Is it like someone is looking at the input and seeing what flags you need to add, and then someone else figures it out and matches it. Do you need to hire all these annotators with expertise in different areas? If so, it seems like making the model smarter over time would be a much harder task.

John Schulman: Actually, you don't have to do that. You can gain a lot just by generalizing. The base model has been trained on a lot of documentation, code, shell scripts, etc. It has seen all the FFmpeg man pages, a lot of Bash scripts, etc. Even if you just give the base model a good little hint, you can get it to answer questions like this. So, just train a useful preference model, even if you don't train it on any STEM, it will generalize to STEM to some extent. So not only do you not need to give examples of how to use FFmpeg, you may not even need any programming, and it may be possible to achieve some basic or reasonable functionality or behavior through other means.

Dwarkesh Patel: What kind of user interface is needed after the AI is trained on multimodal data? How different is it from the interface designed for humans?

John Schulman: That's an interesting question. I think that as visual capabilities improve, models will soon be able to use websites designed for humans. So there is no immediate need to change website design. On the other hand, some websites will benefit greatly from using AI.

We may need to design better user interaction experiences for AI. Although it is not entirely clear what this means, it is certain that given that our models are still better at text processing than image recognition, we need to provide high-quality text representations for the models.

We should also clearly represent all interactive elements so that the AI can recognize and interact with them. However, the website will not be completely redesigned with APIs everywhere. We can let the AI models use the same user interface as humans.

Dwarkesh Patel: It is well known that in the social sciences, there are a lot of studies that are difficult to replicate. One of my questions is how much of this scientific research is real and not artificially manufactured, customized experiments. When you read a normal machine learning paper, does it feel like a very solid literature, or does it often feel like p-hacking in the social sciences?

John Schulman: People are not very satisfied with the machine learning literature. But overall, it is a relatively healthy field, especially compared to fields such as social sciences. The foundation of this field is practicality and practicability. If what you publish is difficult to reproduce, it will be difficult to get people's recognition.

You not only have to report the data in a paper, but also try to rewrite existing programs, algorithms or systems with new methods, techniques or languages, and compare them with your own methods on the same training data set.

Therefore, people will do a lot of open source practices in their work, as well as various unfavorable incentives. For example, people are incentivized to make the benchmarks they compare to worse, and try to make their methods look more mathematically complicated.

The field of machine learning is still progressing. I hope to see more scientific research and understand more new things, not just improving based on benchmarks, but proposing new methods. There have been more and more such innovations recently, but there can be more, and the academic community should dig more into these studies.

In addition, I really want to see more research on using basic models to simulate social science. These models have a probabilistic model of the whole world, and people can set up simulated questionnaires or conversations to observe the correlations between things and study the correlations between any traits we can imagine and other traits. It would be really cool if people could replicate some of the more famous results in social science, like Moral Foundations Theory, by prompting the underlying model in different ways and observing the correlations.