1. Introduction

Deep learning models have achieved outstanding performance in fields such as image classification, natural language processing, and time series analysis. In practice, however, a standard out-of-the-box training pipeline often falls short of the best achievable results. This article collects practical deep learning tips, with detailed steps and code examples covering data preprocessing, model design, training strategies, evaluation, and hyperparameter tuning, to serve as a guide for real-world projects.

2. Data Processing Techniques

2.1 Advanced Data Augmentation Methods

Data augmentation is an effective way to improve a deep learning model's generalization and reduce overfitting. Well-chosen augmentations create plausible variations of the training samples. This enlarges the effective dataset and makes the model robust to changes that should not affect its prediction.

2.1.1 Random Cropping and Rotation

In image classification tasks, random cropping, flipping, rotation, and color perturbation (brightness and contrast changes) produce varied versions of each image. The model therefore learns features that are invariant to these changes. Below is an example implemented in TensorFlow.

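```python
import tensorflow as tf

def advanced_data_augmentation(image):
    # Assumes a float image with values in [0, 1] that is at least 28x28 pixels
    image = tf.image.random_crop(image, size=[28, 28, 3])  # Random 28x28 crop
    image = tf.image.random_flip_left_right(image)  # Random horizontal flip
    # Random rotation by a multiple of 90 degrees (k in {0, 1, 2, 3})
    k = tf.random.uniform(shape=[], minval=0, maxval=4, dtype=tf.int32)
    image = tf.image.rot90(image, k)
    image = tf.image.random_brightness(image, max_delta=0.5)  # Random brightness shift
    image = tf.image.random_contrast(image, lower=0.2, upper=1.8)  # Random contrast scaling
    # Keep pixel values in the valid range after the color changes
    return tf.clip_by_value(image, 0.0, 1.0)
```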
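The crop-and-rotate steps can also be written in plain NumPy, which makes the mechanics explicit. The crop's top-left corner is drawn uniformly so that the window stays inside the image, and the rotation count k is drawn from {0, 1, 2, 3}. The function name `random_crop_rotate` and its parameters are illustrative assumptions, not part of TensorFlow. The sketch also assumes a square crop, because a 90-degree rotation of a non-square crop would swap its height and width.

```python
import numpy as np

def random_crop_rotate(image, crop_h, crop_w, rng):
    """Hypothetical helper: random crop followed by a random k*90-degree rotation."""
    h, w = image.shape[:2]
    # Draw the top-left corner so the crop window fits inside the image
    top = rng.integers(0, h - crop_h + 1)
    left = rng.integers(0, w - crop_w + 1)
    crop = image[top:top + crop_h, left:left + crop_w]
    # Rotate by k * 90 degrees in the height/width plane, k in {0, 1, 2, 3}
    k = int(rng.integers(0, 4))
    return np.rot90(crop, k=k, axes=(0, 1))

rng = np.random.default_rng(0)
image = np.random.default_rng(1).random((32, 32, 3))
augmented = random_crop_rotate(image, 28, 28, rng)
print(augmented.shape)
```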

2.1.2 Cutout and Mixup

Cutout masks out a randomly located square patch of an image, which forces the model to rely on more than one region. Mixup builds a new training sample by linearly interpolating two samples and their labels, using a mixing weight λ drawn from a Beta(α, α) distribution. The following code implements both methods.

```python
import numpy as np

def cutout(image, mask_size=16):
    image = image.copy()  # Avoid modifying the caller's array in place
    h, w = image.shape[:2]
    # Random patch center; the patch is clipped at the image borders
    y = np.random.randint(h)
    x = np.random.randint(w)
    y1 = np.clip(y - mask_size // 2, 0, h)
    y2 = np.clip(y + mask_size // 2, 0, h)
    x1 = np.clip(x - mask_size // 2, 0, w)
    x2 = np.clip(x + mask_size // 2, 0, w)
    image[y1:y2, x1:x2] = 0
    return image

def mixup(x1, y1, x2, y2, alpha=0.2):
    # y1 and y2 must be one-hot (or soft) label vectors
    lam = np.random.beta(alpha, alpha)  # Mixing weight in [0, 1]
    x = lam * x1 + (1 - lam) * x2
    y = lam * y1 + (1 - lam) * y2
    return x, y
```
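In practice Mixup is often applied to a whole batch at once by pairing the batch with a shuffled copy of itself. The following is a minimal sketch of that batch variant. It assumes one-hot labels, and the helper name `mixup_batch` is hypothetical.

```python
import numpy as np

def mixup_batch(x, y, alpha=0.2, rng=None):
    """Hypothetical helper: mix each sample with a randomly chosen partner in the batch."""
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)        # One mixing weight for the whole batch
    perm = rng.permutation(len(x))      # Partner index for every sample
    x_mixed = lam * x + (1 - lam) * x[perm]
    y_mixed = lam * y + (1 - lam) * y[perm]
    return x_mixed, y_mixed, lam

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))             # 4 samples with 8 features each
y = np.eye(3)[[0, 1, 2, 1]]             # One-hot labels for 3 classes
x_mixed, y_mixed, lam = mixup_batch(x, y, rng=rng)
print(lam, y_mixed.sum(axis=1))
```

Every row of `y_mixed` is a convex combination of two one-hot vectors, so it still sums to 1. It can therefore be used directly with categorical cross-entropy.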

2.2 Automated Data Cleaning

Erroneous values and outliers should be detected and handled before training, so that the model learns from valid samples rather than noise. The following code uses scikit-learn's IsolationForest to detect and remove outliers in one column of a DataFrame.

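```python
import pandas as pd
from sklearn.ensemble import IsolationForest

def clean_data(df: pd.DataFrame, column_name: str) -> pd.DataFrame:
    # Assume roughly 5% of the rows are outliers
    clf = IsolationForest(contamination=0.05)
    # fit_predict returns -1 for outliers and 1 for inliers
    labels = clf.fit_predict(df[[column_name]])
    # Filter with a mask instead of adding a helper column to the caller's DataFrame
    return df[labels != -1]
```

3. Model Architecture Optimization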

3.1 Using Different Layer Types to Improve Model Performance

Optimizing the model architecture is another effective way to improve performance. For example, depthwise separable convolutions reduce computation, and attention mechanisms let the model focus on the most relevant parts of the input.

3.1.1 Depthwise Separable Convolutions

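Depthwise separable convolutions reduce computation by factorizing a standard convolution into two steps. First, a depthwise convolution filters each input channel separately. Then a 1x1 pointwise convolution mixes the channels. They are commonly used in lightweight networks such as MobileNet.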

```python
import tensorflow as tf
from tensorflow.keras.layers import SeparableConv2D

model = tf.keras.models.Sequential([
    SeparableConv2D(64, (3, 3), activation='relu', input_shape=(128, 128, 3)),
    tf.keras.layers.MaxPooling2D((2, 2)),
    SeparableConv2D(128, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(256, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax'),  # 10-class output
])
```
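The savings become clear when the parameter counts are worked out by hand. This sketch assumes Keras's conventions: a depthwise kernel of shape k x k x C_in x depth_multiplier, a 1x1 pointwise kernel, and one bias per output channel. The helper names are hypothetical.

```python
def conv2d_params(k, c_in, c_out):
    # Standard convolution: one k x k x c_in filter per output channel, plus biases
    return k * k * c_in * c_out + c_out

def separable_conv2d_params(k, c_in, c_out, depth_multiplier=1):
    # Depthwise step: one k x k filter per input channel (times depth_multiplier)
    depthwise = k * k * c_in * depth_multiplier
    # Pointwise step: a 1x1 convolution that mixes the channels
    pointwise = c_in * depth_multiplier * c_out
    return depthwise + pointwise + c_out  # plus one bias per output channel

for c_in, c_out in [(3, 64), (64, 128)]:
    print(c_in, c_out, conv2d_params(3, c_in, c_out), separable_conv2d_params(3, c_in, c_out))
```

For the two convolutional layers in the model above, this gives the following counts:

- 3 to 64 channels: 283 parameters for the separable layer vs. 1,792 for a standard convolution.
- 64 to 128 channels: 8,896 vs. 73,856, roughly an 8x reduction for the wider layer.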

3.1.2 Attention Mechanism: Self-Attention

Self-attention helps a model focus on the most important parts of its input. It does this by weighting every position according to its similarity to the other positions. It is widely used in natural language processing and image processing.

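```python
import tensorflow as tf
from tensorflow.keras.layers import Attention

input_layer = tf.keras.Input(shape=(128, 128, 3))
# Attention needs a sequence (batch, tokens, dim), not a flat vector:
# embed each 16x16 patch as a 64-dim token -> (batch, 8, 8, 64)
patches = tf.keras.layers.Conv2D(64, (16, 16), strides=16)(input_layer)
tokens = tf.keras.layers.Reshape((64, 64))(patches)  # 64 tokens of size 64
# Self-attention: the same sequence is used as query and value
# (keras Attention is dot-product attention without learned projections)
attention_output = Attention()([tokens, tokens])
pooled = tf.keras.layers.GlobalAveragePooling1D()(attention_output)
output_layer = tf.keras.layers.Dense(10, activation='softmax')(pooled)
model = tf.keras.models.Model(inputs=input_layer, outputs=output_layer)
```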
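The computation inside a dot-product self-attention layer fits in a few lines of NumPy. This sketch uses the input directly as query, key, and value, with no learned projections. It can optionally divide the scores by sqrt(d) as Transformers do; Keras's `Attention` layer does not apply this 1/sqrt(d) scaling by default. The function name is an illustrative assumption.

```python
import numpy as np

def dot_product_self_attention(x, scale=True):
    """Illustrative sketch. x has shape (seq_len, dim) and serves as query, key, and value."""
    scores = x @ x.T                                  # (seq_len, seq_len) similarities
    if scale:
        scores = scores / np.sqrt(x.shape[-1])        # Transformer-style scaling
    scores = scores - scores.max(axis=-1, keepdims=True)  # Numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # Row-wise softmax
    return weights @ x, weights                       # Weighted average of the inputs

rng = np.random.default_rng(0)
tokens = rng.normal(size=(5, 4))                      # 5 tokens, 4 features each
output, weights = dot_product_self_attention(tokens)
print(output.shape, weights.sum(axis=-1))
```

Each row of `weights` is a probability distribution over the sequence positions. Each output row is the corresponding weighted average of the input rows.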

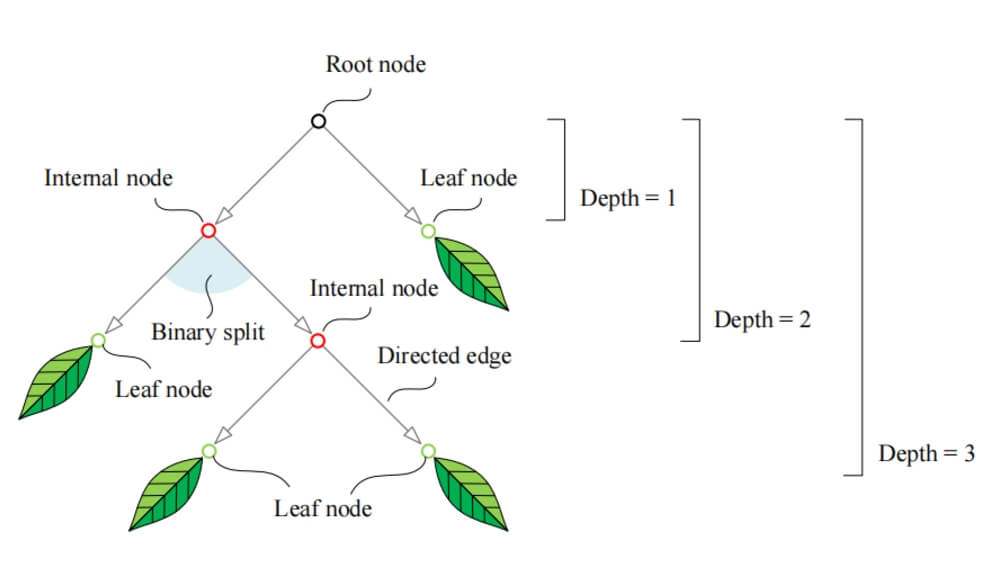

3.2 Network Depth and Residual Connections

3.2.1 Vanishing Gradient Problem in Deep Networks

Vanishing gradients are a common problem in deep neural networks. As the gradient is propagated backward through many layers, it is repeatedly multiplied by small factors and can shrink toward zero, which makes the early layers hard to train. Residual connections, introduced with ResNet, address this by adding a block's input directly to its output (y = x + F(x)). This gives the gradient a shortcut path around each block.

```python
import tensorflow as tf
from tensorflow.keras.layers import Add

input_layer = tf.keras.Input(shape=(32,))
x = tf.keras.layers.Dense(64, activation='relu')(input_layer)
residual = x  # Keep the block input for the shortcut
x = tf.keras.layers.Dense(64, activation='relu')(x)
x = Add()([x, residual])  # y = F(x) + x; both tensors must have the same shape
output_layer = tf.keras.layers.Dense(10, activation='softmax')(x)
model = tf.keras.models.Model(inputs=input_layer, outputs=output_layer)
```
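A toy scalar calculation shows why the shortcut helps. Purely for illustration, assume every layer has the same local derivative f'(x) = 0.1. The chain rule then multiplies 20 such factors in a plain 20-layer network, while each residual block contributes 1 + f'(x) instead. Real networks involve Jacobian matrices rather than scalars, so this is only an intuition aid. The helper name is hypothetical.

```python
def chain_gradient(num_layers, local_grad, residual):
    """Toy model: gradient reaching the first layer of a chain of identical layers."""
    grad = 1.0
    for _ in range(num_layers):
        # Plain layer y = f(x):          dy/dx = f'(x)
        # Residual layer y = x + f(x):   dy/dx = 1 + f'(x)
        grad *= (1.0 + local_grad) if residual else local_grad
    return grad

print("plain:   ", chain_gradient(20, 0.1, residual=False))  # about 1e-20
print("residual:", chain_gradient(20, 0.1, residual=True))   # about 6.7
```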

4. Training Strategy Optimization

4.1 Dynamic Learning Rate Scheduling

Choosing the right learning rate is crucial for convergence. A learning rate schedule adjusts the rate as training progresses. For example, it can start with larger steps for fast progress and then lower the rate so the optimizer can settle into a good minimum. Below is an example of a custom step-decay schedule that lowers the learning rate at fixed epochs.

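```python
import tensorflow as tf
from tensorflow.keras.callbacks import LearningRateScheduler

INITIAL_LR = 1e-3  # Base learning rate (the Adam default)

def step_lr_schedule(epoch, lr):
    # Derive the rate from INITIAL_LR rather than the incoming `lr`;
    # scaling `lr` itself would compound the decay every epoch.
    if epoch < 5:
        return INITIAL_LR
    elif epoch < 10:
        return INITIAL_LR * 0.1
    else:
        return INITIAL_LR * 0.01

model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=INITIAL_LR),
              loss='categorical_crossentropy', metrics=['accuracy'])
model.fit(x_train, y_train, epochs=20,
          callbacks=[LearningRateScheduler(step_lr_schedule)])
```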
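A step decay only ever lowers the rate. A cyclical schedule instead oscillates the rate between a lower and an upper bound; one example is the triangular policy from Leslie Smith's work on cyclical learning rates. The sketch below assumes epoch-level updates, whereas the original method updates per iteration. The bounds, `step_size`, and the factory name `make_triangular_clr` are illustrative assumptions, not tuned recommendations.

```python
import math

def make_triangular_clr(base_lr=1e-4, max_lr=1e-3, step_size=4):
    """Hypothetical factory for a triangular cyclical schedule (one cycle = 2 * step_size epochs)."""
    def schedule(epoch, lr):
        # `lr` (the current rate) is ignored; the rate depends only on the epoch
        cycle = math.floor(1 + epoch / (2 * step_size))
        x = abs(epoch / step_size - 2 * cycle + 1)  # 1 at cycle edges, 0 at the peak
        return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)
    return schedule

schedule = make_triangular_clr()
print([round(schedule(epoch, None), 6) for epoch in range(10)])
```

The returned function has the `(epoch, lr)` signature that `LearningRateScheduler` expects, so it could be passed as `LearningRateScheduler(make_triangular_clr())`.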

4.2 Adding Dropout to Prevent Overfitting

Overfitting is a common problem in deep learning, especially when training data is limited. A Dropout layer randomly sets a fraction of its inputs to zero at each training step and does nothing at inference time. This prevents neurons from relying on specific co-adapted partners and reduces the risk of overfitting.

```python
import tensorflow as tf
from tensorflow.keras.layers import Dropout

model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(256, activation='relu', input_shape=(784,)),
    Dropout(0.5),  # Drop 50% of the activations during training
    tf.keras.layers.Dense(128, activation='relu'),
    Dropout(0.5),
    tf.keras.layers.Dense(10, activation='softmax'),
])
```
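During training, a Dropout layer behaves like "inverted dropout", the formulation Keras uses. Each unit is kept with probability 1 - rate, and the survivors are scaled by 1 / (1 - rate). The expected activation is therefore unchanged, and no rescaling is needed at inference. The function below is an illustrative NumPy sketch, not Keras's implementation, and its name is hypothetical.

```python
import numpy as np

def inverted_dropout(x, rate, training, rng):
    """Illustrative sketch of inverted dropout."""
    if not training or rate == 0.0:
        return x  # Dropout is a no-op at inference time
    keep = rng.random(x.shape) >= rate   # Keep each unit with probability 1 - rate
    return x * keep / (1.0 - rate)       # Rescale so the expected value is unchanged

rng = np.random.default_rng(0)
activations = np.ones(10)
print(inverted_dropout(activations, 0.5, training=True, rng=rng))   # zeros and 2.0s
print(inverted_dropout(activations, 0.5, training=False, rng=rng))  # unchanged
```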

4.3 Early Stopping

Early stopping is another effective way to prevent overfitting. The callback monitors the validation loss and stops training once it has not improved for a set number of epochs (the patience). With `restore_best_weights=True`, the model is rolled back to the weights from the epoch with the lowest validation loss.

```python
from tensorflow.keras.callbacks import EarlyStopping

# Stop after 5 epochs without improvement in val_loss and keep the best weights
early_stopping = EarlyStopping(monitor='val_loss', patience=5, restore_best_weights=True)
model.fit(x_train, y_train, validation_data=(x_val, y_val),
          epochs=50, callbacks=[early_stopping])
```
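The patience rule can be traced on a made-up list of validation losses. This pure-Python sketch assumes that any decrease counts as an improvement, as with Keras's `min_delta=0`. It is not the callback's actual source, and the function name is hypothetical.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (best_epoch, stop_epoch) under a simple patience rule."""
    best = float('inf')
    best_epoch = 0
    wait = 0  # Epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch  # Stop training here
    return best_epoch, len(val_losses) - 1  # Ran out of epochs first

losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.9]
print(early_stopping_epoch(losses, patience=3))
```

Here the best loss occurs at epoch 2, three non-improving epochs follow, and training stops at epoch 5. With `restore_best_weights=True`, the epoch-2 weights would be restored.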

5. Model Evaluation and Hyperparameter Tuning

5.1 Hyperparameter Optimization

Hyperparameter optimization is an important step in improving model performance. Common methods include grid search, random search, and automated frameworks such as Optuna, whose default sampler (TPE) is a form of Bayesian optimization. Below is an example of how to use Optuna to tune the learning rate.

```python
import optuna
import tensorflow as tf

def objective(trial):
    # Sample the learning rate on a log scale between 1e-5 and 1e-2
    lr = trial.suggest_float('lr', 1e-5, 1e-2, log=True)
    model = tf.keras.models.Sequential([
        tf.keras.layers.Dense(64, activation='relu', input_shape=(784,)),
        tf.keras.layers.Dense(10, activation='softmax'),
    ])
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=lr),
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])
    history = model.fit(x_train, y_train, epochs=5,
                        validation_data=(x_val, y_val), verbose=0)
    # Optuna maximizes the final-epoch validation accuracy
    return history.history['val_accuracy'][-1]

study = optuna.create_study(direction='maximize')
study.optimize(objective, n_trials=10)
print("Best trial:", study.best_trial.params)
```

5.2 Confusion Matrix and F1-Score

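Accuracy alone cannot fully reflect model performance. On imbalanced data, a model can score high accuracy simply by favoring the majority class. The confusion matrix shows which classes are confused with which. The F1-score, the harmonic mean of precision and recall, summarizes per-class performance. Both give a more informative evaluation than accuracy when classes are imbalanced.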
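Both metrics can be computed by hand from the confusion matrix. Doing so shows that a "weighted" F1 averages the per-class F1 scores by each class's support. This NumPy sketch uses made-up labels and follows the scikit-learn convention of rows as true classes and columns as predicted classes. The helper names are hypothetical.

```python
import numpy as np

def confusion_matrix_np(y_true, y_pred, num_classes):
    cm = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1  # Rows: true class, columns: predicted class
    return cm

def weighted_f1_from_cm(cm):
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # Column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # Row sums: support of each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    # Weighted average: each class's F1 counts in proportion to its support
    return f1, float((f1 * actual).sum() / actual.sum())

cm = confusion_matrix_np([0, 0, 0, 0, 1, 1, 2, 2], [0, 0, 0, 1, 1, 0, 2, 2], 3)
per_class_f1, weighted_f1 = weighted_f1_from_cm(cm)
print(cm)
print(per_class_f1, weighted_f1)
```

Classes 0, 1, and 2 get F1 scores of 0.75, 0.5, and 1.0. Weighting these by the class supports (4, 2, 2) gives a weighted F1 of 0.75.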

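```python
import numpy as np
from sklearn.metrics import confusion_matrix, f1_score

# Assumes y_test is one-hot encoded, matching the categorical_crossentropy setup
y_pred = model.predict(x_test)
y_pred_classes = np.argmax(y_pred, axis=1)
y_true = np.argmax(y_test, axis=1)

conf_matrix = confusion_matrix(y_true, y_pred_classes)
# 'weighted' averages the per-class F1 scores, weighted by each class's support
f1 = f1_score(y_true, y_pred_classes, average='weighted')

print("Confusion Matrix:\n", conf_matrix)
print("F1 Score:", f1)
```

6. Conclusion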

This article has discussed data augmentation, network architecture optimization, training strategies, and evaluation methods, with practical techniques and code examples for improving the performance of deep learning models. Applying these techniques flexibly in real-world projects can improve a model's generalization and training stability. We hope this content helps you achieve better results in your own deep learning work.

7. Future Outlook

The field of deep learning is developing rapidly. Future research priorities will include automated machine learning (AutoML), more efficient neural architecture search (NAS), and effective fine-tuning of large-scale pretrained models. With continued exploration and innovation, deep learning models can be expected to achieve further breakthroughs across many fields and to solve increasingly complex real-world problems.