On Friday, OpenAI introduced its new o1 model series, which includes o1-preview and o1-mini. These models are designed to spend more time thinking before they respond, which lets them reason through complex tasks and solve harder problems in science, coding, and math than previous models. Since the release, the AI community on social media has been buzzing about them. The good news is that there are five simple ways to start chatting with o1 models today. In this guide, we'll walk you through how to access and use them on five different platforms.

1. What’s New with the o1 Model?

For those unfamiliar, the o1-preview and o1-mini models are designed to spend more time thinking before they respond. They can reason through complex tasks and solve harder problems in science, coding, and math than earlier models.

Key Features:

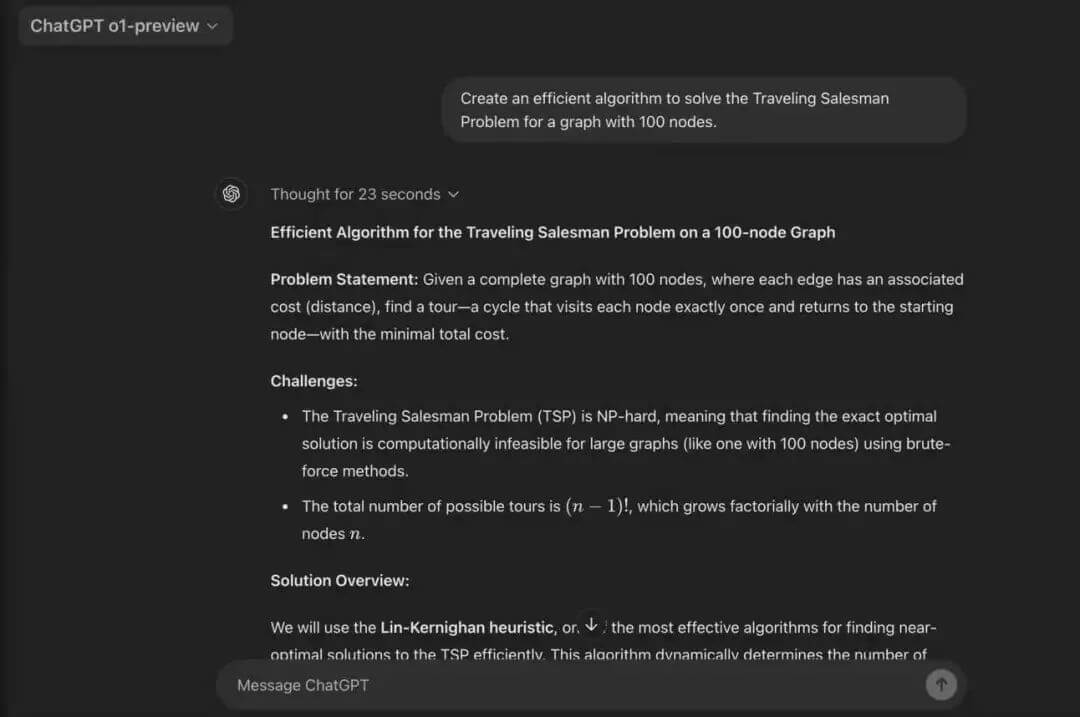

Advanced Reasoning: The o1 models use chain-of-thought reasoning to work through problems step by step, offering more refined answers.

128K Context & Knowledge Cutoff: Both models have a 128K-token context window and a knowledge cutoff of October 2023. Unlike GPT-4o, they do not support web browsing or file and image uploads.

Enhanced Multistep Workflows: They handle complex multistep processes such as refactoring code, writing test suites, and solving math problems.

Coding Expertise: Both o1-preview and o1-mini excel at coding tasks, from debugging to optimizing complex algorithms.

Faster Performance: o1-mini is faster and 80% cheaper than o1-preview, making it a cost-effective choice for tasks that need quick reasoning.
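To see what the 80% figure means for a single API request, here is a small cost calculation. It is a sketch that assumes the per-million-token list prices OpenAI published at launch ($15 input / $60 output for o1-preview, $3 / $12 for o1-mini), so check OpenAI's pricing page for current rates. The token counts are hypothetical, and reasoning tokens are counted as output because OpenAI bills them that way.

```python
# Assumed launch list prices in USD per 1M tokens; verify against current pricing.
PRICES = {
    "o1-preview": {"input": 15.00, "output": 60.00},
    "o1-mini": {"input": 3.00, "output": 12.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request.

    output_tokens should include the hidden reasoning tokens, since they are
    billed at the output rate.
    """
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical workload: 2,000 prompt tokens and 5,000 output tokens (incl. reasoning).
preview = request_cost("o1-preview", 2_000, 5_000)
mini = request_cost("o1-mini", 2_000, 5_000)
savings = 1 - mini / preview

print(f"o1-preview: ${preview:.3f}")
print(f"o1-mini:    ${mini:.3f}")
print(f"savings:    {savings:.0%}")
```

Because both input and output prices drop by the same factor, the savings stay at 80% regardless of the token mix.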

2. Five Simple Ways to Use OpenAI o1

Now for the exciting part: here are five platforms where you can start interacting with the o1 models today.

ChatGPT

OpenAI API (Tier 5 users only)

Cursor AI

Abacus AI's ChatLLM

VS Code with the CodeGPT extension

Below is a step-by-step guide and the limitations for each platform.

1. ChatGPT

The o1 models are currently available to ChatGPT Plus and Team users. Enterprise and Edu users will gain access gradually.

Log in to ChatGPT: Go to chat.openai.com or download the ChatGPT desktop app.

Select the Model: In the model selector, choose either o1-preview or o1-mini, depending on your needs.

o1-preview: Ideal for more complex reasoning tasks and multistep workflows.

o1-mini: Faster, more cost-effective, great for coding and quick responses.

Check Rate Limits:

o1-preview: Maximum of 30 messages per week.

o1-mini: Maximum of 50 messages per week.
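If you want to keep an eye on these caps yourself, a small tracker can count how many messages you have left. This is a hypothetical helper, not part of ChatGPT, and it assumes the limit works as a rolling seven-day window; ChatGPT's own counter is the source of truth.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Deque

# Weekly caps for ChatGPT listed above.
WEEKLY_LIMITS = {"o1-preview": 30, "o1-mini": 50}
# Assumption: the cap is a rolling 7-day window.
WINDOW = timedelta(days=7)


class WeeklyQuota:
    """Hypothetical personal tracker for a rolling weekly message cap."""

    def __init__(self, model: str) -> None:
        self.limit = WEEKLY_LIMITS[model]
        self.sent: Deque[datetime] = deque()  # oldest timestamp on the left

    def _expire(self, now: datetime) -> None:
        # Drop messages that have aged out of the window.
        while self.sent and now - self.sent[0] >= WINDOW:
            self.sent.popleft()

    def remaining(self, now: datetime) -> int:
        self._expire(now)
        return self.limit - len(self.sent)

    def record(self, now: datetime) -> bool:
        """Log a message if budget remains; return False once the cap is hit."""
        if self.remaining(now) <= 0:
            return False
        self.sent.append(now)
        return True


quota = WeeklyQuota("o1-preview")
start = datetime(2024, 9, 16, 9, 0)
for minute in range(30):
    quota.record(start + timedelta(minutes=minute))

later = start + timedelta(hours=1)
print(quota.remaining(later))  # 0: all 30 messages used
print(quota.record(later))  # False: the 31st message is refused
next_week = start + timedelta(days=7, minutes=30)
print(quota.remaining(next_week))  # 30: every message has aged out
```

A deque keeps timestamps in send order, so expiring old messages only ever touches the left end.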

Start Chatting: Enter your query, whether it's a coding challenge, a math problem, or an everyday task (given the rate limits, use your messages thoughtfully). The o1 model will respond with a well-reasoned, structured answer.

Chain-of-Thought Summaries: While the model works, ChatGPT shows a summary of its chain of thought so you can see how it arrived at the answer.

2. API Access

If you want to interact directly with OpenAI’s o1 models via API, follow these simple steps.

Sign Up for API Access: Ensure you have Tier 5 usage access, which is required to use the o1 models.

Install the OpenAI Python Library: Open your terminal and install the OpenAI Python package by running the following command:

```bash
pip install openai
```

Set Your API Key: Store your OpenAI API key as an environment variable for secure access. You can do this by running:

```bash
export OPENAI_API_KEY="your-api-key-here"
```
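With the key exported, the Python SDK's `OpenAI()` client picks it up from `OPENAI_API_KEY` automatically. The o1 API also behaved differently from GPT-4o at launch: according to OpenAI's beta notes, it did not accept system messages, streaming, or a custom `temperature`, and it caps output with `max_completion_tokens` (which includes hidden reasoning tokens) instead of `max_tokens`. The wrapper below is a minimal sketch under those assumptions; `build_o1_messages` and `ask_o1` are hypothetical helper names, and the client is passed in so the function can be exercised without a network call.

```python
from typing import Any, Dict, List, Optional, Tuple


def build_o1_messages(prompt: str, instructions: Optional[str] = None) -> List[Dict[str, str]]:
    """Fold any instructions into a single user message.

    Assumption: the o1 beta rejected the "system" role, so there is
    no separate system message.
    """
    content = f"{instructions}\n\n{prompt}" if instructions else prompt
    return [{"role": "user", "content": content}]


def ask_o1(
    client: Any,
    prompt: str,
    *,
    instructions: Optional[str] = None,
    model: str = "o1-preview",
    max_completion_tokens: int = 4000,
) -> Tuple[str, Optional[int]]:
    """Send one prompt to an o1 model and return (answer, reasoning_tokens).

    `client` is expected to be an `openai.OpenAI()` instance. `temperature`
    and streaming are deliberately left out because o1 did not support them.
    """
    response = client.chat.completions.create(
        model=model,
        messages=build_o1_messages(prompt, instructions),
        max_completion_tokens=max_completion_tokens,
    )
    # Reasoning-token counts are reported under usage.completion_tokens_details;
    # getattr returns None instead of failing if a field is missing.
    details = getattr(response.usage, "completion_tokens_details", None)
    reasoning_tokens = getattr(details, "reasoning_tokens", None)
    return response.choices[0].message.content, reasoning_tokens
```

With a real key, you would call it as `ask_o1(OpenAI(), "Explain quantum computing in simple terms.")` after `from openai import OpenAI`. The second return value shows how many reasoning tokens the request consumed, which is useful because those tokens are billed even though you never see them.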

Make API Calls: You can now start making API calls using the o1 models. Here’s a simple Python example to get started:

```python
from openai import OpenAI

# OpenAI() reads the key from the OPENAI_API_KEY environment variable.
client = OpenAI()

response = client.chat.completions.create(
    model="o1-preview",
    # o1 models did not accept "system" messages at launch, so send only a user message.
    messages=[
        {"role": "user", "content": "Explain quantum computing in simple terms."},
    ],
)

print(response.choices[0].message.content)
```

3. Using o1 for Coding in Cursor AI

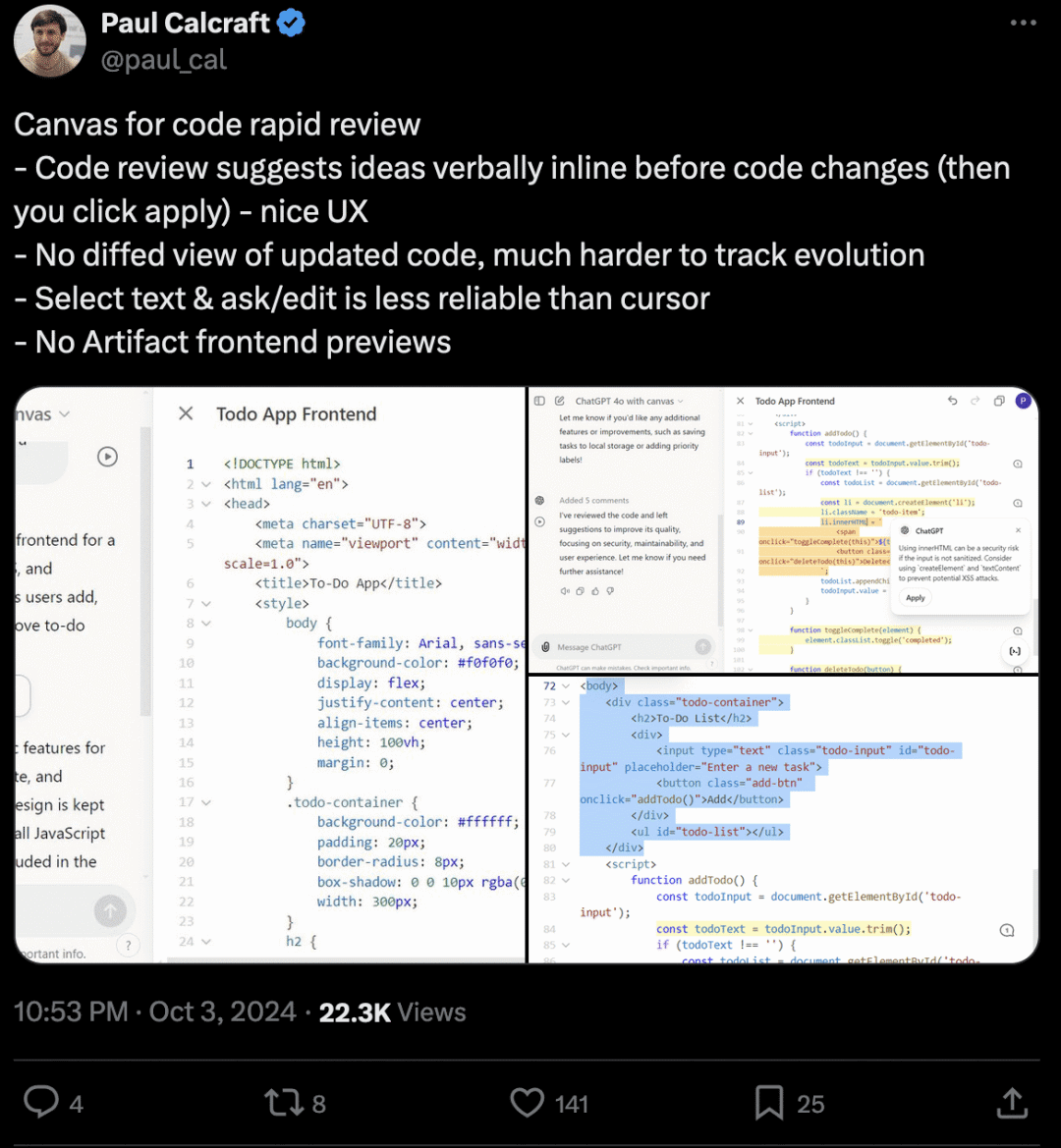

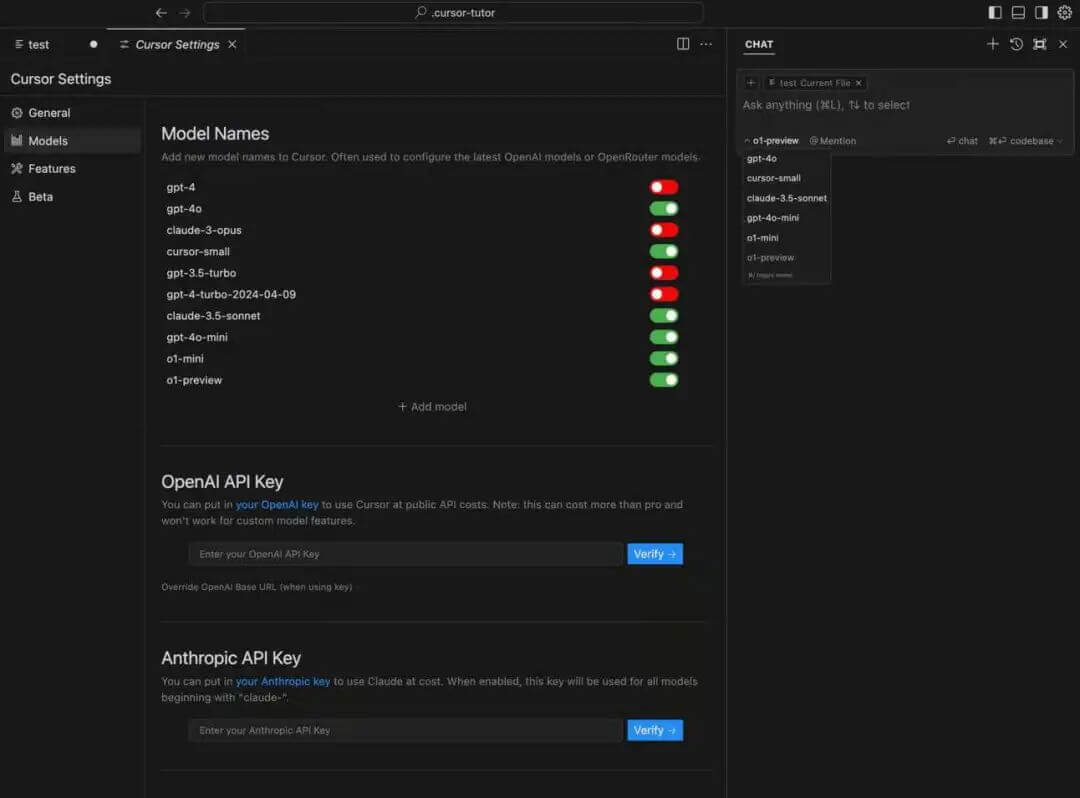

Cursor AI is an AI-powered code editor for developers that integrates OpenAI's o1 models to help with tasks such as code generation and debugging.

Download and Install Cursor AI: Visit the Cursor website to download the IDE. After installation, launch the program.

Cursor Chat: An AI assistant that provides real-time coding help and suggestions, ideal for targeted improvements to a specific file that you want to review manually. Press CMD + L (on Mac) to open Cursor Chat.

Cursor Composer: This feature lets you apply changes across multiple files at once with a single click. Open it by pressing CMD + I.

Select the Model: Choose o1-preview from the model selector to start interacting with the model.

Add o1 Model in Settings: If you don’t see the model listed, access the settings editor by clicking the gear icon in the top-right corner.

Add the o1 Model: Click “Add Model”, enter “o1-preview”, and toggle the model on to enable it.

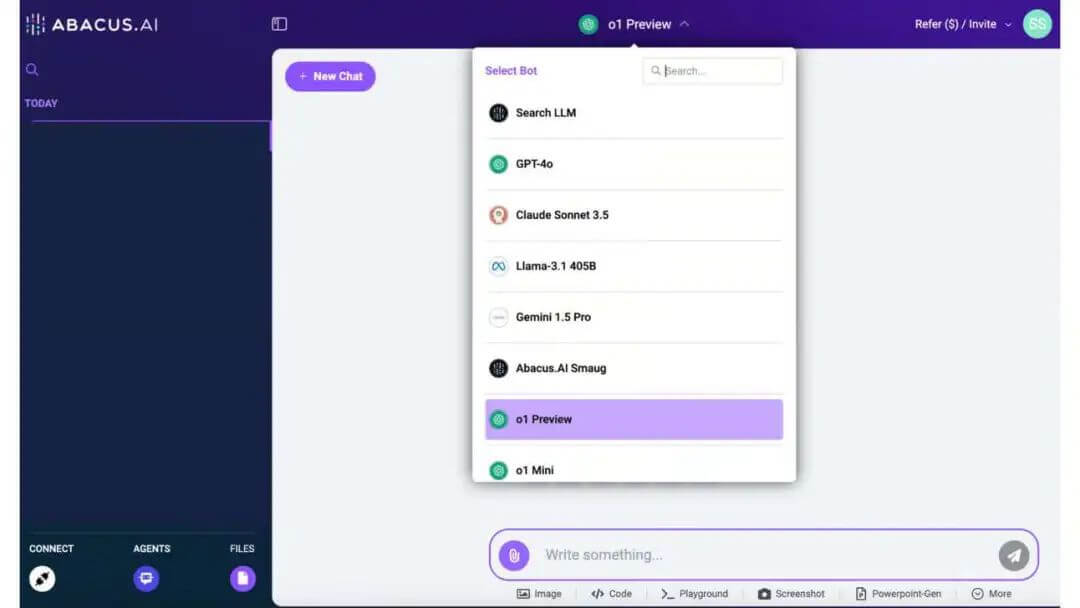

4. ChatLLM on Abacus AI

Abacus AI’s ChatLLM Teams is a collaborative AI platform that gives you access to leading language models, including Claude 3.5 Sonnet, GPT models, and Abacus Smaug, through a unified interface. It also offers features such as custom chatbots, chatting with documents via retrieval-augmented generation (RAG), code generation, and image generation, all for $10/month.

Log in to Abacus AI: Navigate to ChatLLM and log in with your credentials. If you don’t have an account, create one.

Select the Model: From the LLM selector in the dashboard, choose the OpenAI o1 model. It has rate limits, so use it wisely!

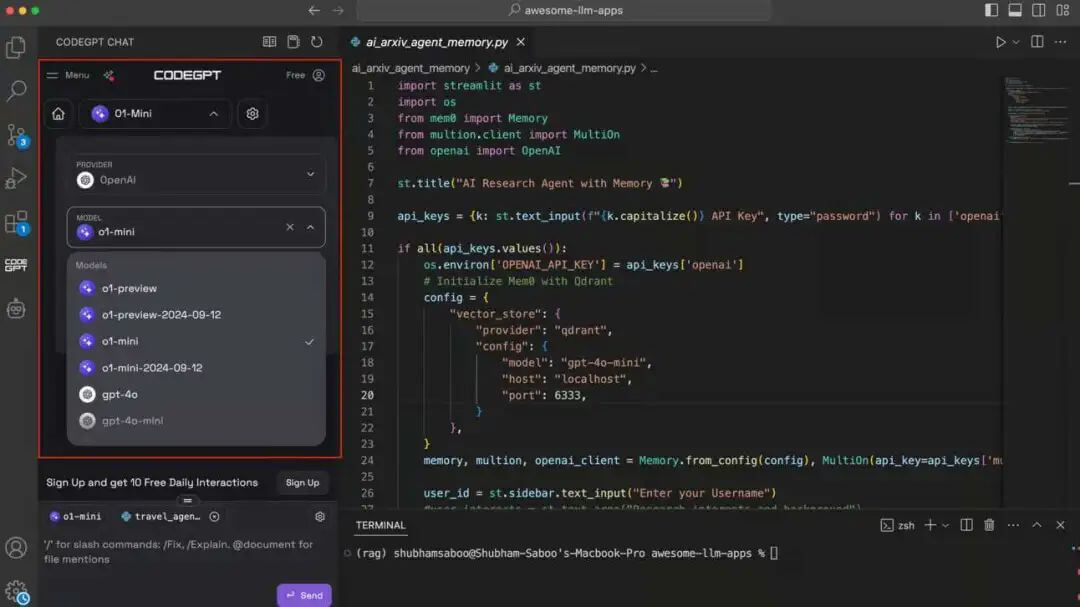

5. Using o1 in VS Code via CodeGPT Extension

The CodeGPT extension brings AI-driven coding assistance directly into the VS Code editor, with features such as code generation, explanations, refactoring, and error checking. You can use it to interact with OpenAI’s o1 models inside VS Code.

Download CodeGPT: From the VS Code extension library, download and install CodeGPT.

Select Model Provider: Set OpenAI as the model provider and choose either o1-preview or o1-mini.

Input Your OpenAI Key: Once you input your OpenAI key, you’re ready to start coding with the help of o1 models.

By following the steps above, you can start using OpenAI's o1 models across different platforms and enhance your workflows, whether for advanced reasoning, coding, or problem-solving.