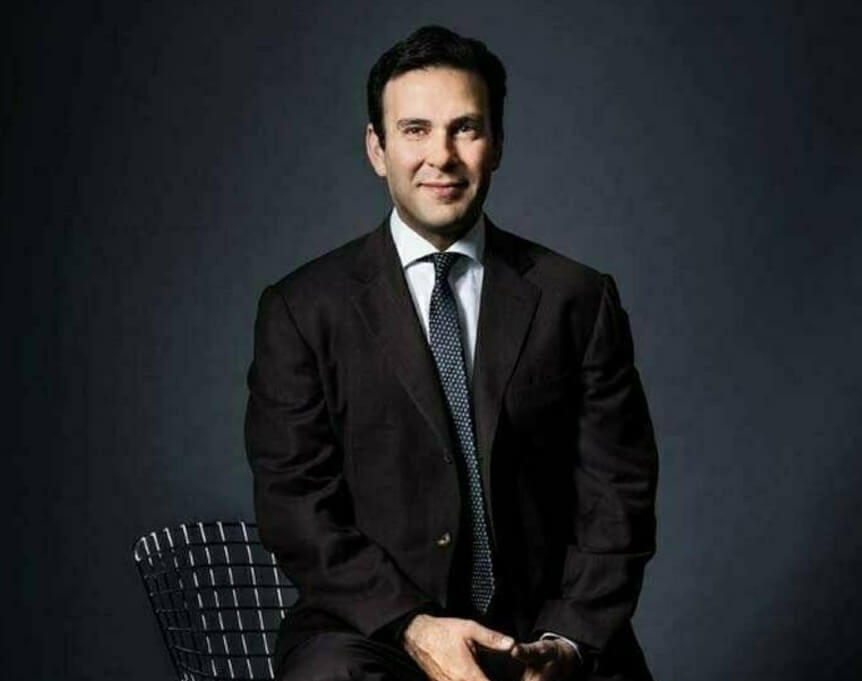

Jensen Huang (Huang Renxun), the founder and CEO of Nvidia, was awarded an honorary doctorate in engineering at the Ph.D. degree ceremony held on November 23 at the Hong Kong University of Science and Technology (HKUST). After the ceremony, Huang spoke with Shen Xiangyang, Chairman of the Board of Trustees of HKUST, about technology, leadership, and entrepreneurship.

Full Transcript of the Dialogue:

Shen Xiangyang: Last night, I couldn't sleep, and one of the key reasons was that I was eager to introduce to everyone this most outstanding CEO in the universe. But I also secretly worried about your company, especially since Apple’s stock price rose while Nvidia’s performance was slightly weaker. I couldn’t wait to know the results of the stock market closing! This morning, the first thing I asked my wife was whether Nvidia had held up. You've been leading in the field of artificial intelligence for a long time. Can you talk again about your views on artificial intelligence and the potential impacts of this technology, or even AGI (Artificial General Intelligence)?

Huang Renxun: As you know, when an AI network can learn from and understand many types of data (from bytes and language to images and protein sequences), a transformative capability is born. We suddenly have computers that can understand the meaning of words. Thanks to generative AI, information can be freely transformed between modalities: from text to images, from proteins to text, from text to proteins, and even from text to chemicals. AI began as a function approximator and language translator; now the real challenge we face is how to fully utilize it. You’ve witnessed the explosion of startups around the world, combining these different models and capabilities to showcase limitless possibilities.

So, I think the truly amazing breakthrough is that we can now understand the true meaning of information. This means that as a digital biologist, you can understand the data’s meaning and accurately capture key information from vast amounts of data; as an Nvidia chip designer, system designer, or as an agricultural technologist, climate scientist, or energy researcher, in the search for new materials, this is undoubtedly groundbreaking.

Shen Xiangyang: Today, the concept of a universal translator has taken shape, giving us the ability to understand everything in the world. Many people have heard you talk about the incredible impact AI will have on society. Your viewpoints have deeply moved me and, in some ways, even shocked me. Looking back at history, the Agricultural Revolution allowed us to produce more food, and the Industrial Revolution significantly increased our steel production. Entering the information age, the amount of information has exploded. Now, in this intelligent era, Nvidia and AI are working together to "manufacture" intelligence. Could you elaborate on why this work is so important?

Huang Renxun: From a computer science perspective, we have reinvented the entire stack. This means that the way we developed software in the past has fundamentally changed. When talking about computer science, software development is naturally an essential part, and how it is achieved is crucial.

In the past, we wrote software by hand, using imagination and creativity to conceptualize functions, design algorithms, and then turn them into code for the computer. From Fortran to Pascal, to C and C++, these programming languages allowed us to express ideas in code, and that code ran very well on CPUs. We input data into the computer and asked it to find functions within that data. By observing the data we provided, the computer could identify patterns and relationships.

However, things are different now. We no longer rely on traditional coding methods but have shifted to machine learning and machine generation. This is no longer just a software issue: machine learning generates neural networks, which are processed on GPUs. This shift, from coding to machine learning and from CPU to GPU, marks the arrival of an entirely new era.

Moreover, because of the immense power of GPUs, the types of software we can now develop are extraordinary, and on top of this, artificial intelligence is flourishing. This is the transformative change that has occurred, and computer science has undergone a huge transformation. Now, we need to think about how this change will impact our industries. We are all competing to use machine learning to explore new areas of artificial intelligence. So, what exactly is AI? It's a concept that we are all familiar with—cognitive automation and problem-solving automation. Automation of problem-solving can be summarized into three core concepts: observing and perceiving the environment, understanding and reasoning about the environment, and then proposing and executing plans.

For example, in autonomous vehicles, the car can perceive its surroundings, reason about the position of itself and other vehicles, and then plan the driving route. This is essentially a digital driver. Similarly, in healthcare, we can observe CT scan images and understand and reason about the information in them; if abnormalities are detected, they may indicate the presence of a tumor. We can then mark it and inform the radiologist. At this point, we are acting as a digital radiologist. In almost everything we do, there are applications where AI can excel at specific tasks.

If we have enough digital agents, and these agents can interact with the computers that generate this digital information, then this constitutes digital artificial intelligence. Right now, however, despite the large amount of energy our data centers consume, what we mostly produce there is something called “tokens” rather than true digital intelligence.

Let me explain the difference between the two. More than a century ago, General Electric and Westinghouse introduced a new kind of machine, the generator, which eventually developed into the alternating current generator. They wisely created “consumers” for the electricity they generated, such as light bulbs, toasters, and other electrical devices. Of course, they also created a variety of digital devices and electrical appliances, all of which consumed electricity.

Now, let's look at what we are doing. We are creating intelligent tools like Copilots and ChatGPT, which are different types of smart “consumers” we’ve created. These are essentially energy-consuming devices, like light bulbs and toasters. But imagine those amazing, smart devices we will all use, which will connect to a new factory. This factory was once an alternating current power plant, but now, the new factory will be a digital intelligence factory.

From an industrial perspective, we are actually creating a new industry, one that absorbs energy and generates digital intelligence, which can then be applied to various scenarios. We believe that the energy consumption of this digital intelligence industry will be enormous, and this industry did not exist before, just like the alternating current power industry did not exist before.

Shen Xiangyang: You’ve outlined a bright and hopeful future, much of which is due to your and Nvidia's outstanding contributions over the past decade. Moore's Law has long been a major focus in the industry, and in recent years, "Huang's Law" has gradually become well known. In the early days of the computer industry, Intel’s Moore’s Law predicted that computing power would double every 18 months. However, in the past 10 to 12 years, particularly under your leadership, the growth rate of computing power has exceeded this prediction, doubling every year or even faster.

Looking from the consumer side, the computing demand for large language models has increased by more than four times annually over the past 12 years. If this rate continues for another decade, the growth in computing demand will be an astonishing number—up to one million times. This is a key argument I use when explaining why Nvidia’s stock price has risen 300 times over the past 10 years. Considering this massive increase in computing demand, Nvidia's stock price might not seem high. So, when you use your “crystal ball” to predict the future, do you think we will witness another 1 million times increase in computing demand over the next decade?
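Editor's note: the "one million times" figure follows from compounding. The sketch below is illustrative only; it assumes a constant fourfold increase each year for ten years, and the function name is hypothetical rather than anything from the dialogue.

```python
def compound_growth(factor_per_year: int, years: int) -> int:
    """Total growth after compounding a fixed annual factor for `years` years."""
    return factor_per_year ** years


# Assumption: demand grows exactly 4x per year for a full decade.
total = compound_growth(4, 10)
print(f"{total:,}")  # 1,048,576, i.e. roughly one million times
```

The quoted rate is "more than four times" annually, so four is the low end of the estimate.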

Huang Renxun: Moore’s Law relies on two core ideas. The first is the design principles of very-large-scale integration (VLSI), set out in the work of Caltech's Carver Mead and Lynn Conway, which inspired an entire generation, myself included. The second is that as transistors keep shrinking, semiconductor performance can double roughly every one and a half years, which leads to about a 10x improvement every five years and 100x every ten years.
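Editor's note: the 10x and 100x figures can be checked directly from the doubling period. This is a minimal sketch that assumes performance doubles exactly every 1.5 years; the function and parameter names are illustrative.

```python
def growth_from_doubling(years: float, doubling_period_years: float = 1.5) -> float:
    """Growth factor after `years` if performance doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


five_year = growth_from_doubling(5)   # 2 ** (5 / 1.5)
ten_year = growth_from_doubling(10)   # 2 ** (10 / 1.5)
print(round(five_year, 1), round(ten_year, 1))  # 10.1 101.6
```

Both results round to the figures quoted in the dialogue.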

We are now in a trend: the larger the neural network and the more data used for training, the more intelligent AI appears to be. This empirical rule is quite similar to Moore's Law, and we can call it the "Scaling Law." This law still seems to be working. However, we are also keenly aware that pre-training alone, which automatically mines knowledge from massive amounts of data from around the world, is far from enough. It is like graduating from college: a crucial milestone, but not the end. After this comes post-training, which involves focusing deeply on a specific skill. This requires reinforcement learning, human feedback, AI feedback, synthetic data generation, and multi-path learning techniques. In short, post-training means selecting a specific domain and committing to deep study of it. This is similar to entering our careers and continuing to learn and practice professionally.

After that, we eventually enter the so-called "thinking" phase, which is known as test-time computation. For some problems you can see the answer immediately, while others must be broken down into multiple steps and solved from first principles. This might require multiple iterations to simulate different possible outcomes, because not all answers are predictable. That is why we call it thinking, and the longer the thinking time, the higher the quality of the answer. A large amount of computing resources will help us produce higher-quality answers.

Although today's answers are the best we can provide, we are still seeking a critical point where the answers we get are no longer limited to the best level we can currently offer. At this point, you must judge whether the answer is truthful, meaningful, and wise. We must reach a level where the answers are largely trustworthy. I think this will take years to achieve.

At the same time, we still need to continue improving computing power. As you mentioned earlier, in the past decade, we have increased computing performance by a million times. Nvidia's contribution is that we have reduced the marginal cost of computing by the same magnitude. Imagine if something you rely on in daily life, such as electricity, had its cost reduced by a million times: your habits and behavior would fundamentally change.

For computing, our perspective has also undergone a complete transformation, and this is one of Nvidia's greatest achievements ever. We use machines to learn vast amounts of data, a task that researchers could not accomplish alone, and this is key to the success of machine learning.

Shen Xiangyang: I am eager to hear your thoughts on how Hong Kong should position itself amidst the current opportunities. One particularly exciting topic is "AI for Science," a field you have been extremely passionate about. The Hong Kong University of Science and Technology (HKUST) has invested heavily in computational infrastructure and GPU resources, and we are especially focused on promoting interdisciplinary collaboration, such as in fields like physics and computer science, materials science and computer science, biology and computer science, etc. You have also delved deeply into the future of biology. Additionally, it’s worth mentioning that the Hong Kong government has decided to establish its third medical school, and HKUST is the first university to submit this proposal. So, what advice do you have for the university’s president, myself, and the entire university?

Huang Renxun: First of all, I introduced artificial intelligence (AI) at the Supercomputing Conference in 2018, but at that time, it was met with much skepticism. The reason is that AI back then was more like a "black box." Admittedly, it still retains some characteristics of a "black box" today, but it has become more transparent compared to before.

For example, both you and I are "black boxes," but now we can ask AI questions like, "Why did you make this suggestion?" or "Please elaborate on how you arrived at this conclusion." By asking such questions, AI is becoming more transparent and interpretable. This is because we can probe its thinking process through questions, just as professors ask questions to understand students' thought processes. What matters is not just getting answers, but understanding the reasoning behind those answers and whether they are based on first principles. This was not possible in 2018.

Secondly, AI currently cannot derive answers directly from first principles; it learns and draws conclusions by observing data. Therefore, it is not a first-principles solver but rather an imitation of intelligence, an imitation of physics. So, is this imitation valuable for science? I believe its value is immeasurable. In many areas of science we understand the first principles, such as the Schrödinger equation or Maxwell’s equations, yet we struggle to simulate and understand large systems. Solving everything purely from first principles is computationally limited and, in some cases, impossible. However, we can train AI to understand these physical principles and use it to simulate large systems, helping us understand them better.

So, in what areas can this application make a difference? First, human biology spans spatial scales that start at the nanoscale and time scales that run from nanoseconds to years. Traditional solvers simply cannot cover such vast ranges. The question now is: can we use AI to simulate human biology in order to better understand these extremely complex multiscale systems?

In this way, we may be creating a digital twin of human biology. This is where our high hopes lie. Today, we may have the computational technology that enables digital biologists, climate scientists, and researchers tackling enormous and complex problems to truly understand physical systems for the first time. This is my hope, and I look forward to seeing this vision come true in this interdisciplinary field.

Regarding your medical school project, for HKUST, a unique medical school is about to be established here, despite the university's traditional focus on technology, computer science, and artificial intelligence. This is quite different from most medical schools around the world, which often try to introduce AI and technology after they become medical schools, and typically face skepticism and distrust about these technologies. However, you have the opportunity to start from scratch, building an institution that is closely linked with technology from the very beginning and driving the continuous development of technology. The people here understand the limitations and potential of technology. I believe this is a once-in-a-lifetime opportunity, and I hope you will seize it.

Shen Xiangyang: We will certainly take your advice. HKUST has always been outstanding in technology and innovation, constantly pushing the frontiers of fields like computer science, engineering, and biology. Therefore, as Hong Kong’s third medical school, we are confident that we can carve out a unique path, combining traditional medical training with our strengths in technological research. I am sure we will seek more of your advice in the future. But I’d like to shift the topic slightly to leadership. You are one of the longest-serving CEOs in Silicon Valley, probably surpassing most others. You've been the CEO of Nvidia for nearly 30 or 31 years, right?

Huang Renxun: Almost 32 years!

Shen Xiangyang: But you seem to never get tired.

Huang Renxun: No, I actually feel very tired. When I arrived here this morning, I said I was super tired.

Shen Xiangyang: Yet you keep moving forward. So, of course, we want to learn from your experience in leading a large organization. How do you lead a massive organization like Nvidia? It has tens of thousands of employees, incredible revenue, and a vast range of customers. How do you manage to lead such a large organization with such efficiency?

Huang Renxun: Today, I want to say that I am quite surprised. Normally, you would see computational biologists or business students, but today we see computational biologists who are also business students. This is fantastic. I’ve never taken any business courses, nor have I ever written a business plan. I had no idea where to start. I rely on all of you to help me.

What I want to tell you is that, first, you should learn as much as you can, and I have been constantly learning. Second, when it comes to anything you want to devote yourself to and make your life’s work, the most important thing is passion. Treat whatever you do as your life's work, not just a job. I think this mindset makes a huge difference in your heart. Nvidia is my life’s work.

If you want to become a CEO of a company, there is a lot you need to learn, and you must constantly reinvent yourself. The world is always changing, and your company and technology are always changing. Everything you know today will be useful in the future, but it’s still not enough, so I basically learn every day. When I was flying here, I was watching YouTube and chatting with my AI. I’ve found an AI mentor, asking it lots of questions. AI gives me an answer, and I ask it why it gave that answer, having it walk me through the reasoning. I apply that reasoning to other things and ask for analogies. There are many different learning methods, and I use AI for them. So, there are many ways to learn, but I want to emphasize that you need to keep learning.

As for my thoughts on being a CEO and a leader, I’ve summarized a few points:

First, as a CEO and leader, you don’t need to play the role of someone who knows everything. You must firmly believe in the goal you are pursuing, but that doesn’t mean you have to understand every detail. Confidence and certainty are two very different concepts. On the way to your goal, you can move forward with confidence, while being open-minded and embracing the uncertainty that comes with it. This uncertainty actually gives you the space to continue learning and growing. Therefore, learn to draw strength from uncertainty and see it as a friend pushing you forward, not as an enemy.

Second, leaders do need to show resilience, because many people are relying on your strength and drawing courage from your determination. However, resilience doesn’t mean you must always hide your vulnerability. When you need help, it’s okay to seek support from others. I’ve always adhered to this philosophy and have asked for help countless times. Vulnerability is not a sign of weakness, and uncertainty is not a lack of confidence. In this complex and ever-changing world, you can face challenges with strength and confidence, while also honestly accepting your own vulnerability and uncertainty.

Furthermore, as a leader, your decisions should always revolve around the mission and consider the well-being and success of others. Only when your decisions truly benefit others can you earn their trust and respect. Whether it’s your employees, partners, or the entire ecosystem we serve, I’m always thinking about how to promote their success and safeguard their interests. In decision-making, I always start with what’s in the best interest of others, and that guides our actions. I believe these points may be helpful.

Shen Xiangyang: I have an interesting question regarding teamwork. You have 60 direct reports. How do your team meetings work? How do you effectively manage so many senior executives? This seems to reflect your unique leadership style.

Jensen Huang: The key is maintaining transparency. I clearly explain our reasoning, goals, and the actions we need to take, and we collaborate to develop strategies together. Whatever the strategy is, everyone hears it at the same time. Since they all participate in making the plan, when the company needs to decide on something, it is something we have all discussed together. It’s not just me deciding or telling them what to do.

We discuss together and reach conclusions together. My job is to ensure that everyone receives the same information. I am usually the last one to speak, setting the direction and priorities based on our discussions. If there’s any ambiguity, I work to clear up the doubts. Once we reach a consensus and everyone understands the strategy, I move forward based on the fact that everyone is an adult. As I mentioned before about my guiding principle—continuously learning, confident but embracing uncertainty—if I am unclear about something, or if they are unclear, I expect them to speak up. If they need help, I want them to seek support from us. Here, no one faces failure alone.

Then, when others see my behavior—how as CEO and leader, I can show vulnerability, seek help, admit uncertainty, and make mistakes—they realize they can do the same. What I expect is that if they need help, they should be brave enough to ask for it. But beyond that, my team of 60 people are all top experts in their respective fields. In most cases, they do not need my help.

Shen Xiangyang: I must say, your management approach is indeed effective. I remember your speech at the graduation ceremony, where you mentioned many statistics about HKUST, especially the number of startups founded by our alumni, and the number of unicorns and listed companies nurtured by our university. This university is indeed famous for nurturing new entrepreneurs and companies. However, even in such an environment, many of our master's students are still studying here. You and your team founded your company at a very young age and have achieved such remarkable success. So, what advice do you have for our students and faculty? When and why should they start their own business? Besides the promise you made to your wife about founding a company before turning 30, do you have other advice?

Jensen Huang: That was indeed just a little trick I used to start a conversation, not something I meant seriously. I started college when I was 16, and I met my wife when I was 17 and she was 19. I was the youngest student in a class of 250 with only three girls, and I looked like a child, so I had to learn some attention-getting skills. I went up to her and told her that although I looked young, she would definitely think I was smart. So, I mustered up the courage to say, "Do you want to see my homework?"

Then, I made a promise to her: "If you do homework with me every Sunday, I guarantee you will get perfect grades." So, every Sunday, we would go on dates and study together all day. To get her to eventually marry me, I also told her, when I was only 20, that I would be a CEO by the time I turned 30. I had no idea what I was saying at the time. Later, we really did get married. So, that’s all my advice, offered with a bit of humor and sincerity.

Shen Xiangyang: I received a question from a student who wanted to know: he performs excellently in school, but needs to focus fully on his studies. After reading your love story, he worries that if he also spends time on romance, it might affect his academic performance.

Jensen Huang: My advice is, absolutely not. But the premise is that you must maintain excellent grades. She (my wife) never found out this little secret, but I always wanted her to think I was smart. So, before she came over, I would finish my homework. When she came, I already knew all the answers. She probably always thought I was a genius, and she thought so for all four years.

Shen Xiangyang: A professor from the University of Washington made a point a few years ago, suggesting that top American universities like MIT have not made groundbreaking contributions in the deep learning revolution. Of course, he was not just referring to MIT but pointed out that the contributions of top American universities in the last decade have been relatively limited. In contrast, we’ve seen top companies like Microsoft, OpenAI, and Google DeepMind achieve remarkable results, and one important reason is that they have strong computational power. Given this situation, how should we respond? Should we consider joining NVIDIA or collaborating with NVIDIA? As our new ally, can you offer us some advice or help?

Jensen Huang: The issue you raised indeed touches on a serious structural challenge that universities are facing today. We all know that without machine learning, we wouldn't be able to drive scientific research at the pace we see today. And machine learning relies on powerful computational support. It's like how researching the universe depends on radio telescopes or how studying fundamental particles requires particle accelerators. Without these tools, we cannot delve into the unknown. Today’s "scientific instruments" are AI supercomputers.

A structural issue that universities face is that researchers usually have to raise funds themselves, and once they have the funding, they are less willing to share resources. But machine learning has a characteristic: these high-performance computers need to be fully utilized during certain time periods, not left idle. No one will monopolize all the resources, but everyone at some point needs massive computational power. So, how should universities address this challenge? I think universities should lead the way in building infrastructure, consolidating resources to advance research across the whole campus. But this is very difficult to implement at top universities like Stanford or Harvard, because computer science researchers there often can raise substantial funds, while researchers in other fields struggle more.

So, what’s the solution now? I believe that if universities can build infrastructure for the entire campus, they will be able to effectively lead the transformation in this field and have a profound impact. However, this is indeed a structural challenge universities are facing today. That’s why many researchers choose to intern or do research at companies like ours, Google, Microsoft, etc., because we can provide access to advanced infrastructure. Then, when they return to their universities, they hope we will help maintain the vitality of their research so they can continue to advance their work. Moreover, many professors, including adjunct professors, balance teaching and research work. We have hired several such professors. So, while there are many ways to solve the problem, the most fundamental one is that universities need to rethink and optimize their research funding systems.

Shen Xiangyang: I have a challenging question I’d like to ask you. On one hand, we are excited to see the significant improvement in computing power and the decrease in prices, which is undoubtedly good news. On the other hand, your GPUs consume a large amount of energy, and some predictions suggest that global energy consumption will significantly increase by 2030. Are you concerned that, because of your GPUs, the world is actually consuming more energy?

Jensen Huang: I’ll answer this with a reverse way of thinking. First, I want to emphasize that if the world is consuming more energy to power the global AI factories, then when this happens, our world will be a better place. Now, let me elaborate on a few points.

First, the goal of AI is not just to train models, but to apply these models. Of course, going to school and studying for the sake of learning is itself a noble and wise action. However, most students come here, invest a lot of money and time, and their goal is to be successful in the future and apply the knowledge they have learned. Therefore, the true goal of AI is not just training, but inference. Inference is highly efficient, and it can discover new ways to store carbon dioxide, for example, in reservoirs; it might be able to design new types of wind turbines; it might discover new materials for energy storage or more efficient solar panel materials, and so on. So, our goal is to eventually create AI that can be applied, not just AI for training purposes.

Second, we must remember that AI doesn’t care where it "learns." We don’t need to place supercomputers near the power grid on campuses. We should start considering placing AI supercomputers in locations farther from the power grid, where they can use sustainable energy, rather than putting them in densely populated areas. We must remember that all power plants were originally built to meet the electricity needs of our home appliances, like light bulbs and dishwashers, and now, due to the rise of electric vehicles, they also need to be close to us. But supercomputers don’t need to be near our homes; they can learn and compute elsewhere.

Third, what I hope to see is AI discovering new science efficiently and intelligently enough that we can solve our existing energy waste problems. Take the power grid, which is overbuilt most of the time and underbuilt in rare instances. We can use AI to save energy across many different fields, reduce our waste, and ultimately save 20% to 30% of energy. This is my hope and dream: that using energy for intelligent activities will prove to be the best use of energy we can imagine.

Shen Xiangyang: I completely agree that using energy efficiently for intelligent activities is the best way to utilize it. If devices are manufactured outside places like the Greater Bay Area (including Shenzhen, Hong Kong, Guangdong, etc.), their efficiency often decreases because it’s hard to find all the necessary components. Take DJI as an example; this local commercial drone company has impressive technology. My question is, as the physical aspect of intelligence becomes increasingly important—such as with robots, especially self-driving cars, a specific type of robot—what is your view on the trend of these physical intelligent entities emerging rapidly in our lives? How should we harness and utilize the enormous potential of the Greater Bay Area’s hardware ecosystem in our professional lives?

Jensen Huang: This is a great opportunity for China and the entire Greater Bay Area. The reason is that this region already has a high level of mechatronics integration, which is the fusion of mechanical and electronic technologies. However, for robotics, one key missing element is AI that understands the physical world. Current large language models, such as ChatGPT, are good at understanding cognitive knowledge and intelligence, but they know little about physical intelligence. For example, it may not understand why a cup doesn't pass through the table when you put it down. Therefore, we need to teach AI to understand physical intelligence.

In fact, I want to tell you that we are making significant progress in this area. You may have seen some demonstrations where generative AI can convert text into videos. I can generate a video starting with a photo of me, and then give the command, "Jensen, pick up the coffee cup and take a sip." Since I can give commands to make AI perform actions in a video, why can’t I generate the correct command to control a robotic arm to do the same? Therefore, the leap from current generative AI to general-purpose robots is not far. I am very excited about the prospects in this field.

There are three types of robots that are likely to be mass-produced, and almost limited to these three. Other types of robots that have appeared historically have had difficulty achieving large-scale mass production. Mass production is crucial because it drives the technology flywheel effect. High investment in R&D can lead to technological breakthroughs, which in turn produce better products, further expanding production scale. This R&D flywheel is key to any industry.

In fact, while only three types of robots can truly achieve mass production, two of them will become the most produced. The reason is that these three types of robots can all be deployed in the current world. We call this the "brownfield" (areas to be redeveloped). These three types of robots are: automobiles, because we’ve built a world for cars over the past 150 to 200 years; drones, because the sky has virtually no limits; and, of course, the largest production volume will be humanoid robots, because we have built a world for ourselves. With these three types of robots, we can expand the application of robotics technology to an extremely high volume, which is exactly the unique advantage that the Bay Area’s manufacturing ecosystem has.

If you think deeply about it, the Greater Bay Area is the only place in the world that has both mechatronics technology and AI technology at the same time. Nowhere else has this combination. The other two mechatronics powerhouses are Japan and Germany, but unfortunately, they are far behind in AI technology and really need to catch up. Here, we have a unique opportunity, and I would seize it firmly.

Shen Xiangyang: I’m very pleased to hear your views on physical intelligence and robots. Hong Kong University of Science and Technology is indeed very good at these areas you mentioned.

Jensen Huang: Artificial intelligence, robotics, and healthcare are the three areas where we really need innovation.

Shen Xiangyang: Indeed, with the establishment of our new medical school, we will further promote development in these areas. However, to realize all these wonderful things, we still need your support; we need your GPUs and other resources.