Amazon Web Services (AWS) has introduced the Trainium2 AI chip, which Apple plans to use to train and deploy Apple Intelligence. The move signals Amazon's intensifying competition with Nvidia, as the cloud giant aims to offer custom silicon for AI workloads. Apple, for its part, is focused on personalized AI services, using its own chips for on-device computation while exploring new cloud partnerships for privacy-focused AI development.

Amazon has launched an AI chip that could become an alternative to Nvidia GPUs. AWS's Trainium2 will power a 400,000-chip cluster for training the next-generation Claude model, and Apple has announced that it will use the chip to train and deploy Apple Intelligence.

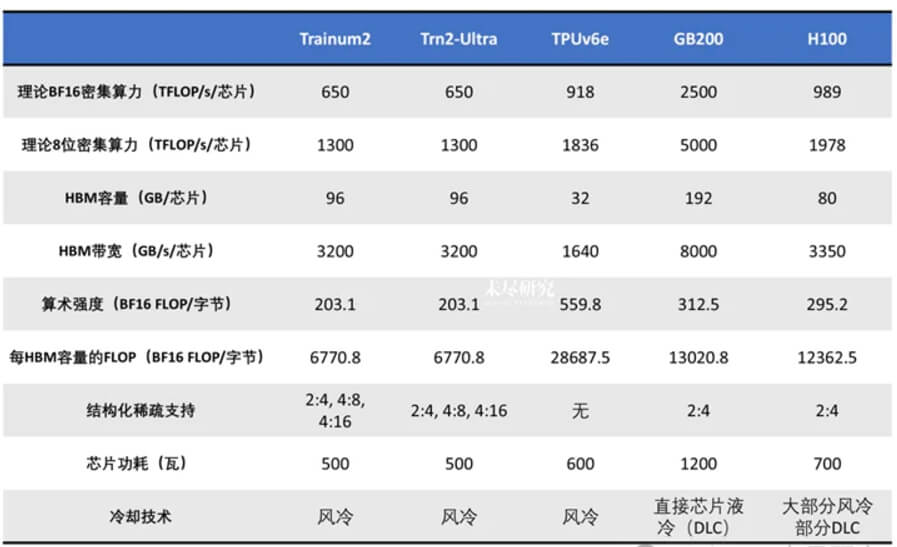

At its re:Invent conference in Las Vegas, AWS launched Trn2 servers (16 Trainium2 chips each), which deliver 20.8 Pflops of peak performance for training models with billions of parameters and are aimed squarely at Nvidia and AMD GPUs. Trn2 UltraServers, which combine 64 Trainium2 chips, peak at 83.2 Pflops and are designed to train and deploy the largest models, including language, multimodal, and vision models.
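As a quick sanity check of the figures above, the following Python sketch divides each system's quoted peak by its chip count. It assumes the quoted totals are simply chip count times a per-chip peak; the constant and function names are illustrative, and the article does not say which numeric precision the Pflops figures refer to.

```python
# Peak figures quoted in the article, in Pflops. The precision (e.g. FP8 vs BF16)
# is not stated there, so treat these purely as headline numbers.
TRN2_SERVER_CHIPS = 16
TRN2_SERVER_PFLOPS = 20.8
ULTRASERVER_CHIPS = 64
ULTRASERVER_PFLOPS = 83.2


def per_chip_pflops(total_pflops: float, chips: int) -> float:
    """Peak throughput per chip, assuming total = chips x per-chip peak."""
    return total_pflops / chips


server_chip = per_chip_pflops(TRN2_SERVER_PFLOPS, TRN2_SERVER_CHIPS)
ultra_chip = per_chip_pflops(ULTRASERVER_PFLOPS, ULTRASERVER_CHIPS)
scale_up = ULTRASERVER_PFLOPS / TRN2_SERVER_PFLOPS

print(f"Trn2 server:      {server_chip:.2f} Pflops per chip")
print(f"Trn2 UltraServer: {ultra_chip:.2f} Pflops per chip")
print(f"UltraServer / server: {scale_up:.1f}x")
```

Both configurations work out to the same per-chip peak, so the UltraServer's fourfold headline number simply reflects its fourfold chip count. Peak figures say nothing about the utilization achieved on real training jobs.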

AWS also announced its next-generation AI chip, Trainium3, which will be built on a 3nm process, is expected to deliver twice the performance of Trainium2 with 40% better energy efficiency, and is slated to launch by the end of 2025.

Until now, Amazon's instances based on Trainium1 and Inferentia2 have been less competitive for generative AI training and inference because of weaker hardware specs and software integration. With Trainium2, however, Amazon has made significant adjustments, bringing its chip, system, and compiler/framework stack in line with Nvidia's and offering a competitive custom-silicon alternative.

Apple also took the unusual step of publicly discussing its collaboration with a cloud provider and signaled its willingness to cooperate on building AI. Apple has used AWS for more than ten years for Siri, Apple Maps, and Apple Music, and it has used Amazon's Inferentia and Graviton chips to power its search services, reporting a 40% efficiency improvement.

Apple now plans to use Trainium2 to pre-train its own models, and initial evaluations show a 50% improvement in pre-training efficiency. After deciding to develop Apple Intelligence, Apple quickly turned to AWS for AI infrastructure; it has also used Google Cloud's TPU servers.

Apple is taking the lead in personal AI applications, deploying AI models at the edge and favoring on-device computation to deliver customized, personalized AI services while prioritizing user privacy.

For Apple, the ultimate goal is not to train large models on hundreds of thousands of chips but to use AI to better serve the more than two billion users of its devices: custom chips handle computation locally on iPhones, iPads, and Macs, and only complex tasks are sent to the cloud. Apple also requires its cloud providers to support privacy-preserving computation.

Apple Intelligence is rolling out at its own pace, starting with basic features such as content summarization, email drafting, and meme creation. It will soon integrate OpenAI's large-model services, and next year Siri will be upgraded with intelligent agent technology, letting it act more like an assistant that uses apps to carry out user tasks.

AWS is also working with Anthropic on a cluster of 400,000 Trainium2 chips to train the next-generation Claude model. The effort, called Project Rainier, will provide five times the compute, measured in exaflops, that was used to train Anthropic's existing models. Amazon's $4 billion investment in Anthropic is effectively tied to this 400,000-chip cluster, which has no other major customers yet.

Elon Musk's xAI has already built a cluster of 100,000 H100 GPUs and has boldly said it will buy another 300,000 B200s. Mark Zuckerberg's Meta is racing to train Llama 4 on a cluster of more than 100,000 H100 GPUs, not to mention Microsoft and OpenAI. A 100,000-H100 cluster has become the price of entry to this arms race.

However, Trainium clusters still have ground to make up before they can truly challenge Nvidia GPUs. According to the semiconductor consulting firm SemiAnalysis, the raw floating-point performance of 400,000 Trainium2 chips is still lower than that of a 100,000-chip GB200 cluster. Because of Amdahl's Law, spreading the work across more, individually slower chips also makes the parts that cannot be parallelized, such as synchronization and collective communication, a larger bottleneck, so Anthropic will still struggle to compete with a 100,000-GPU GB200 cluster. Running collective communication across 400,000 Trainium2 chips over EFA will be very challenging, so Anthropic will need to make significant innovations in asynchronous training.

Note: EFA stands for Elastic Fabric Adapter, a high-performance network interface technology provided by AWS to support high-performance computing (HPC) and machine learning workloads.
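The Amdahl's Law argument above can be illustrated with a toy model. In this hedged Python sketch, a fixed fraction of each training step cannot be parallelized (standing in, as a simplifying assumption, for synchronization and communication) and runs at single-chip speed, while the rest is split evenly across the cluster. The function name, the workload, the serial fraction, and the 4x per-chip speed ratio are all hypothetical, chosen so that both clusters have identical aggregate throughput; they are not SemiAnalysis figures.

```python
def step_time(total_work: float, serial_fraction: float,
              n_chips: int, chip_speed: float) -> float:
    """Amdahl-style time for one training step (hypothetical toy model).

    The serial fraction runs on a single chip; the remainder is split evenly
    across n_chips. Communication cost is folded into the serial fraction,
    which is a simplifying assumption.
    """
    serial = serial_fraction * total_work / chip_speed
    parallel = (1.0 - serial_fraction) * total_work / (n_chips * chip_speed)
    return serial + parallel


# Hypothetical numbers: equal aggregate throughput (400,000 x 1.0 == 100,000 x 4.0),
# so the only difference between the clusters is per-chip speed.
WORK = 1e9
SERIAL = 1e-6

many_slow = step_time(WORK, SERIAL, n_chips=400_000, chip_speed=1.0)
few_fast = step_time(WORK, SERIAL, n_chips=100_000, chip_speed=4.0)

print(f"400,000 slower chips: {many_slow:,.1f} time units")
print(f"100,000 faster chips: {few_fast:,.1f} time units")
print(f"slowdown: {many_slow / few_fast:.2f}x")
```

Even with equal aggregate throughput, the cluster of slower chips takes longer per step (about 3,500 versus 2,750 time units here) because its serial portion runs four times slower. Real collective-communication cost also grows with cluster size rather than staying fixed, which this model ignores.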

The three largest cloud providers, AWS, Microsoft Azure, and Google Cloud, currently source most of their data center chips from Nvidia, AMD, and Intel. At the same time, they are actively developing their own chips for cost and customization advantages, for both general-purpose computing and accelerated workloads such as large-model training and inference. AWS claims that on Trainium, Anthropic's Claude 3.5 Haiku runs 60% faster than on other chips.

As generative AI moves into large-scale applications, businesses will seek chips and computational solutions that are more suitable for specific applications, more customizable, cost-effective, and energy-efficient.

In 2025, we expect more computing power to be deployed for inference-side reinforcement learning and large-scale AI applications. This will place new demands on chips, servers, tools, architectures, and services, and open new opportunities for cloud providers' in-house silicon and for chip startups.