Introduction

In the wave of digitalization, Natural Language Processing (NLP) has become one of the core technologies of artificial intelligence. From smart assistants and translation apps to sentiment analysis on social media, NLP is rapidly changing how we interact with technology. Market research forecasts strong growth for the NLP market in the coming years as it becomes an integral part of many industries. This article covers the basics, key technologies, practical applications, and future trends of NLP to give you a comprehensive overview of this fascinating field.

What is Natural Language Processing (NLP)?

2.1 Definition and Background

Natural Language Processing is an interdisciplinary field spanning computer science, artificial intelligence, and linguistics that aims to enable computers to understand, analyze, generate, and respond to human language. Its goal is for machines to grasp the meaning and emotional tone of language rather than merely perform surface-level text analysis. With growing computational power and the availability of large datasets, NLP has made significant progress over the past decade; in particular, the introduction of deep learning brought a step change in performance on many NLP tasks.

2.2 Complexity of NLP

The diversity and complexity of human language present numerous challenges to NLP. Some of the key difficulties include:

Ambiguity: The same word can have different meanings in different contexts. For example, "bank" can refer to a financial institution or the side of a river.

Idioms, Metaphors, and Slang: Language is full of idioms, metaphors, and slang whose meaning cannot be derived word by word, so literal translations often fail. For example, "breaking the ice" makes no sense literally, but in context it means easing the initial tension or awkwardness between people.

Grammar and Structure: Different languages have different grammatical rules and structures, which increases processing complexity. For example, English and Chinese typically follow subject-verb-object order, while Japanese and Korean typically follow subject-object-verb order.
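Ambiguity is usually resolved by letting the surrounding words select the intended sense. The following is a minimal sketch of the simplified Lesk idea: pick the sense whose dictionary gloss shares the most content words with the sentence. The sense inventory, glosses, and stopword list are hypothetical stand-ins for a real lexical resource such as WordNet.

```python
import re

# Hypothetical sense inventory for "bank"; the glosses are invented for illustration.
SENSES = {
    "bank (finance)": "institution that accepts deposits lends money and manages accounts",
    "bank (river)": "sloping land beside a river or stream where water flows",
}

# Small hypothetical stopword list so function words do not count as overlap.
STOPWORDS = {"a", "an", "and", "at", "i", "of", "on", "or", "the", "to", "we", "where"}


def content_words(text):
    """Lowercase, split into alphabetic tokens, and drop stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(sentence, senses):
    """Return the sense whose gloss overlaps most with the sentence (first sense wins ties)."""
    context = content_words(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in senses.items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("I deposited money at the bank to open new accounts", SENSES))  # bank (finance)
print(simplified_lesk("We had a picnic on the bank of the river and watched the water", SENSES))  # bank (river)
```

Overlap counting is crude (without stemming, "deposited" does not match "deposits"), which is one reason modern systems rely on contextual embeddings instead.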

Core Technologies of NLP

3.1 Text Preprocessing

Text preprocessing is crucial before performing any NLP task. The main steps include:

Tokenization: Splitting text into words or other meaningful units (tokens). For languages such as Chinese, which do not separate words with spaces, tokenization (word segmentation) is a nontrivial step.

Stopword Removal: Removing very frequent words that carry little meaning on their own, such as "the" or "is". This shrinks the vocabulary and the number of features a model has to handle.

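Stemming and Lemmatization: Reducing different forms of a word to a common base form. Stemming strips suffixes using heuristic rules (for example, "running" becomes "run"), while lemmatization uses vocabulary and morphological analysis to find the dictionary form, so it can also map irregular forms such as "ran" to "run".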
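The three preprocessing steps can be sketched in plain Python as follows. This is a toy pipeline under stated assumptions: the stopword list, the suffix-stripping rules, and the irregular-form lookup table are all hypothetical simplifications (libraries such as NLTK or spaCy provide full stopword lists, the Porter stemmer, and real lemmatizers), and the regex tokenizer only works for space-separated languages like English.

```python
import re

# Hypothetical mini stopword list; real libraries ship much longer ones.
STOPWORDS = {"the", "is", "a", "an", "and", "was", "were", "he", "she", "it", "to"}

# Hypothetical lookup table standing in for a lemmatizer's dictionary of irregular forms.
LEMMAS = {"ran": "run", "better": "good", "mice": "mouse"}


def tokenize(text):
    """Split lowercase text into word tokens (English only; Chinese needs a word segmenter)."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stopwords(tokens):
    """Drop tokens that appear in the stopword list."""
    return [t for t in tokens if t not in STOPWORDS]


def naive_stem(token):
    """Crude suffix stripping in the spirit of a stemmer (not the Porter algorithm)."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            stem = token[: -len(suffix)]
            # Undo consonant doubling, e.g. "runn" -> "run" (but keep "pass", "fall")
            if stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return token


def lemmatize(token):
    """Look up irregular forms first, then fall back to the stem."""
    return LEMMAS.get(token, naive_stem(token))


tokens = remove_stopwords(tokenize("The dog was running and he ran to the parks"))
print(tokens)                            # ['dog', 'running', 'ran', 'parks']
print([naive_stem(t) for t in tokens])   # ['dog', 'run', 'ran', 'park']
print([lemmatize(t) for t in tokens])    # ['dog', 'run', 'run', 'park']
```

The output illustrates the distinction: suffix stripping turns "running" into "run" but leaves "ran" untouched, whereas the dictionary-based step maps both to "run".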

3.2 Vectorization Representation

Word Embeddings: Techniques like Word2Vec and GloVe convert words into vectors, bringing semantically similar words closer in vector space. This allows computers to capture relationships between words.
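"Closer in vector space" is usually measured with cosine similarity. The sketch below uses hand-picked 3-dimensional vectors that are purely hypothetical; real Word2Vec or GloVe vectors are learned from large corpora and typically have a few hundred dimensions.

```python
import math

# Hypothetical toy vectors chosen by hand, not learned embeddings.
EMBEDDINGS = {
    "king": [0.8, 0.65, 0.1],
    "queen": [0.78, 0.7, 0.15],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, embeddings):
    """Return the other word whose vector has the highest cosine similarity to `word`."""
    target = embeddings[word]
    others = (w for w in embeddings if w != word)
    return max(others, key=lambda w: cosine_similarity(target, embeddings[w]))


print(most_similar("king", EMBEDDINGS))  # queen
```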

Contextual Embeddings: Models like BERT and GPT generate word vectors dynamically based on the surrounding context, so the same word (such as "bank") receives different vectors in different sentences. This significantly improves accuracy in both understanding and generation and allows better handling of complex language phenomena.

3.3 Advanced Language Models

Transformer Architecture: This architecture uses self-attention to model dependencies across long stretches of text and has become the mainstream architecture in NLP. Unlike recurrent neural networks, which process tokens one at a time, Transformers process all positions of an input sequence in parallel, which greatly improves training efficiency.

Self-attention Mechanism: This allows models to focus on relationships between different parts of the input sequence, capturing long-distance dependencies. This mechanism enables models to flexibly choose which parts of the input to focus on, making it applicable to various NLP tasks.
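The core computation behind self-attention is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, where queries Q, keys K, and values V are linear projections of the token vectors. Below is a minimal single-head NumPy sketch under simplifying assumptions: the token vectors and projection matrices are random and untrained, and multi-head splitting, masking, and positional encodings are omitted. The last part shows why the resulting representations are contextual: changing only the surrounding tokens changes the output for the first token, even though its own input vector stays the same.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (seq_len, d_model) input vectors, one row per token.
    W_q, W_k, W_v: (d_model, d_k) projection matrices.
    Returns the (seq_len, d_k) outputs and the (seq_len, seq_len) attention weights.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)    # similarity of every token to every other token
    weights = softmax(scores, axis=-1)  # each row is a distribution that sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))  # random stand-ins for token embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))  # untrained weights

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)  # (4, 8) (4, 4)

# Keep token 0 identical but change its context: its output vector changes too
X_other = X.copy()
X_other[1:] = rng.normal(size=(seq_len - 1, d_model))
out_other, _ = self_attention(X_other, W_q, W_k, W_v)
print(np.allclose(output[0], out_other[0]))  # False
```

Because every row of `weights` is computed from the whole sequence at once, all positions can be processed in parallel, which is the efficiency advantage over recurrent models mentioned above.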

Practical Applications of NLP

4.1 Intelligent Assistants and Dialogue Systems

Intelligent assistants like Apple’s Siri and Amazon’s Alexa use speech recognition and natural language understanding to enable human-machine interaction, assisting users with tasks. For example, users can ask the assistant to play music, set reminders, or provide weather updates. These systems leverage NLP technologies to make interactions with machines more natural.
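The natural language understanding step typically maps an utterance to an intent (what the user wants) and extracts parameters, often called slots, such as a time. The sketch below is a deliberately simple keyword-and-regex stand-in for that step; the intent names, keyword lists, and time pattern are all hypothetical, and production assistants rely on trained models rather than rules like these.

```python
import re

# Hypothetical intents and trigger keywords, for illustration only.
INTENT_KEYWORDS = {
    "play_music": {"play", "song", "music"},
    "set_reminder": {"remind", "reminder"},
    "get_weather": {"weather", "rain", "forecast", "temperature"},
}


def detect_intent(utterance):
    """Return the intent whose keywords overlap most with the utterance, or 'unknown'."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    scores = {intent: len(words & keywords) for intent, keywords in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def extract_time(utterance):
    """Extract a clock time such as '7 pm' or '7:30 am' as a slot value (very simplified)."""
    match = re.search(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", utterance.lower())
    return match.group(1) if match else None


request = "Remind me to call mom at 7 pm"
print(detect_intent(request), extract_time(request))  # set_reminder 7 pm
print(detect_intent("Will it rain tomorrow?"))        # get_weather
print(detect_intent("Tell me a joke"))                # unknown
```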

4.2 Advances in Machine Translation

Modern machine translation systems such as Google Translate use neural network models to handle complex sentence structures and produce high-quality translations. Because neural models take the context of the whole sentence into account, they have substantially improved translation accuracy and fluency. Trained on large collections of parallel text, neural machine translation has approached human-level quality on some benchmarks for certain language pairs.

4.3 Sentiment Analysis and Public Opinion Monitoring

Businesses use sentiment analysis tools to monitor social media and analyze user feedback, thereby improving products and services. For example, by analyzing Twitter data, brands can understand public sentiment toward their products. Sentiment analysis helps businesses understand user needs and predict market trends, providing data-driven insights for decision-making.
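A simple way to turn individual posts into a picture of public sentiment is to score each post and report the share of positive, negative, and neutral ones. The sketch below uses a lexicon-based scorer with basic negation handling; the tiny word list and negator set are hypothetical, whereas real monitoring tools use curated lexicons (such as VADER) or trained classifiers like the one in the Python example later in this article.

```python
import re

# Hypothetical mini sentiment lexicon: +1 positive, -1 negative.
LEXICON = {"love": 1, "great": 1, "fantastic": 1, "happy": 1,
           "worst": -1, "bad": -1, "broken": -1, "disappointed": -1}
NEGATORS = {"not", "never", "no"}


def score_post(text):
    """Sum word polarities, flipping the sign of a word directly preceded by a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def summarize(posts):
    """Return the share of positive, negative, and neutral posts."""
    scores = [score_post(p) for p in posts]
    n = len(scores)
    return {
        "positive": sum(s > 0 for s in scores) / n,
        "negative": sum(s < 0 for s in scores) / n,
        "neutral": sum(s == 0 for s in scores) / n,
    }


posts = [
    "I love the new phone, the camera is fantastic",
    "Battery life is the worst, really disappointed",
    "Not happy with the update",
    "Just got my order today",
]
print(summarize(posts))  # {'positive': 0.25, 'negative': 0.5, 'neutral': 0.25}
```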

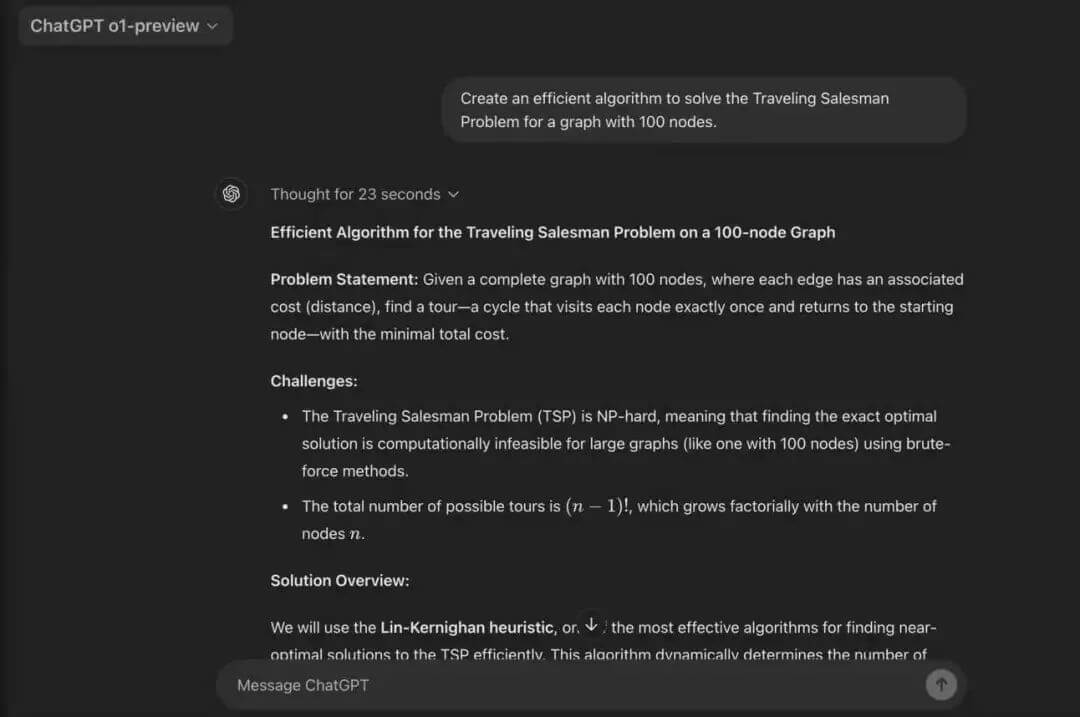

4.4 Content Generation and Creation

NLP is used not only for understanding language but also for generating it. OpenAI’s GPT-3 can write articles, generate code, and even compose poetry, showcasing AI’s potential in creative fields. Such models can produce fluent text on specific topics and in specific styles, and they are being applied to news writing, social media content creation, and more.
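Generative language models produce text one token at a time, each time sampling the next token from a distribution learned from training data. The sketch below illustrates only that shared idea with a bigram model, a far simpler technique than GPT-3: it conditions on just the previous word, whereas GPT-3 is a large Transformer that conditions on the whole preceding context. The three-sentence corpus is hypothetical.

```python
import random
from collections import defaultdict


def train_bigram_model(corpus):
    """Map each word to the list of words observed immediately after it."""
    model = defaultdict(list)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]  # sentence start/end markers
        for prev, nxt in zip(tokens, tokens[1:]):
            model[prev].append(nxt)
    return model


def generate(model, max_words=20, seed=None):
    """Sample one word at a time, conditioning only on the previous word."""
    rng = random.Random(seed)
    word, output = "<s>", []
    for _ in range(max_words):
        # Repeated entries make frequently observed successors more likely
        word = rng.choice(model[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


corpus = [
    "the model writes short poems",
    "the model writes code",
    "the poet writes short stories",
]
model = train_bigram_model(corpus)
print(generate(model, seed=1))
```

Even this toy can produce sentences that never appeared in the corpus (for example, combining "the poet writes" with "code"), which hints at how statistical generation recombines learned patterns.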

Future Developments and Challenges

5.1 Multimodal Learning

Multimodal learning integrates information from text, images, and videos to achieve richer understanding and generation. For instance, by analyzing both images and text on social media, we can gain a more comprehensive understanding of user emotions. The development of this technology will push AI applications into more complex tasks like emotion recognition and content generation.
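Two common ways to combine modalities are early fusion (concatenating features so one model sees both) and late fusion (combining the predictions of separate per-modality models). The sketch below shows both in their simplest form; the probabilities stand for the outputs of hypothetical text and image classifiers that are not shown, and the 0.6 text weight is an arbitrary assumption rather than a recommended value.

```python
def early_fusion(text_features, image_features):
    """Concatenate per-modality feature vectors so a single model can learn from both."""
    return list(text_features) + list(image_features)


def late_fusion(text_prob, image_prob, text_weight=0.6):
    """Weighted average of per-modality probabilities of positive emotion."""
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0 and 1")
    return text_weight * text_prob + (1.0 - text_weight) * image_prob


# A sarcastic caption ("Great, another Monday") paired with a gloomy photo:
# the text model leans positive, the image model leans negative.
fused = late_fusion(text_prob=0.7, image_prob=0.2)
print(round(fused, 2))  # 0.5
```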

5.2 Ethics and Bias

NLP models can inherit biases from their training data, leading to unfair results. For example, if the training data contains gender or racial biases, a model may reproduce or even amplify them in real-world applications. Researchers therefore need to pay attention to the diversity and representativeness of training data in order to build fairer models.
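One common first check for this kind of bias is to compare how often a model produces a positive prediction for each demographic group, a metric known as demographic parity. The sketch below computes per-group positive rates and the largest gap between groups; the predictions and group labels are hypothetical, and demographic parity is only one of several fairness metrics, so a gap signals something worth investigating rather than proving unfairness on its own.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups (0 means parity)."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical outputs of a text classifier (1 = favorable outcome), tagged by group
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rate_by_group(preds, groups))  # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```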

5.3 Continual Learning and Adaptive Systems

An important research direction is how NLP systems can keep learning and adapt to changing user needs in dynamic environments. Most current NLP systems are trained once on static data and cannot incorporate new data as it arrives. Future research will focus on enabling models to update promptly when new data becomes available, making them more practical.
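For simple models, incremental updating is already available today. The sketch below uses scikit-learn's `partial_fit` to update a Naive Bayes text classifier batch by batch instead of retraining from scratch; `HashingVectorizer` is used because it needs no fitted vocabulary, so words first seen in a later batch can still be used. The review texts and labels are hypothetical, and this online-learning setting is much simpler than continually updating large neural language models.

```python
from sklearn.feature_extraction.text import HashingVectorizer
from sklearn.naive_bayes import MultinomialNB

# Stateless vectorizer: no vocabulary to refit when new words appear.
# alternate_sign=False keeps feature values non-negative, as MultinomialNB requires.
vectorizer = HashingVectorizer(n_features=2**16, alternate_sign=False)
model = MultinomialNB()


def update(model, texts, labels):
    """Incrementally update the classifier with a new batch of labeled texts."""
    X = vectorizer.transform(texts)
    # `classes` is required on the first call; repeating the same list later is allowed
    model.partial_fit(X, labels, classes=[0, 1])
    return model


# Initial batch (1 = positive, 0 = negative)
update(model, ["I love this product", "Worst purchase ever"], [1, 0])
# A later batch arrives containing words the model has never seen
update(model, ["The update is brilliant", "The app keeps crashing"], [1, 0])

print(model.predict(vectorizer.transform(["brilliant product", "keeps crashing"])))
```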

Practical Example: Sentiment Analysis in Python

The following example shows how to train a simple sentiment classifier in Python on a handful of labeled user reviews. Trained on real data, the same approach could be applied to incoming user feedback.

```python
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Example data: toy labeled reviews (1 = positive, 0 = negative)
data = {
    'text': [
        'I love this product!',
        'This is the worst experience ever.',
        'Absolutely fantastic service.',
        'I am not happy with my purchase.',
    ],
    'label': [1, 0, 1, 0],
}
df = pd.DataFrame(data)

# Split the dataset (default test_size=0.25, so 1 of the 4 reviews is held out)
X_train, X_test, y_train, y_test = train_test_split(
    df['text'], df['label'], random_state=42
)

# Build the model: bag-of-words counts feed a multinomial Naive Bayes classifier
model = make_pipeline(CountVectorizer(), MultinomialNB())
model.fit(X_train, y_train)

# Predict labels for the held-out reviews
predictions = model.predict(X_test)

# Output results
print(f"Predictions: {predictions}")
print(f"Accuracy: {accuracy_score(y_test, predictions)}")
```

In this example, we use a multinomial Naive Bayes classifier over bag-of-words counts to classify short user reviews. From the training set, the model learns which words are associated with positive and negative reviews, and it is then evaluated on the held-out test set. With only four reviews, the test set contains a single example, so the reported accuracy is illustrative only; a practical classifier needs a much larger labeled dataset.

Case Studies of NLP Applications

7.1 Business Applications

Many large enterprises use NLP technologies to improve operational efficiency. For instance, a well-known e-commerce platform uses sentiment analysis to monitor customer feedback in real time, so that negative comments and urgent queries can be answered quickly, improving customer satisfaction. Additionally, by analyzing user comments and behavior data, businesses can optimize product recommendation systems and implement personalized marketing.

7.2 Healthcare Industry

In healthcare, NLP can be used to analyze patients' electronic health records (EHRs), extracting key information to assist doctors in diagnosis. For example, NLP techniques can automatically extract symptoms and medical history from doctors' free-text notes to support subsequent treatment.
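A classic rule-based approach to this extraction matches terms from a symptom vocabulary and then checks for negation cues, since "denies fever" means the patient does not have a fever. The sketch below is a heavily simplified version of that idea, loosely in the spirit of the NegEx algorithm. The symptom list, negation cues, "history of" pattern, and example note are hypothetical; clinical systems map terms to standard vocabularies such as SNOMED CT or UMLS and handle far more linguistic variation.

```python
import re

# Hypothetical symptom vocabulary and negation cues, for illustration only.
SYMPTOMS = ["chest pain", "shortness of breath", "fever", "cough", "nausea"]
NEGATION_CUES = ["no", "denies", "without", "negative for"]


def extract_symptoms(note):
    """Return (present, absent) symptom lists found in a free-text clinical note."""
    present, absent = [], []
    # Work sentence by sentence so a negation cue only affects its own sentence
    for sentence in re.split(r"[.;\n]", note.lower()):
        for symptom in SYMPTOMS:
            match = re.search(r"\b" + re.escape(symptom) + r"\b", sentence)
            if not match:
                continue
            # Negated if a cue appears earlier in the same sentence
            preceding = sentence[: match.start()]
            negated = any(re.search(r"\b" + re.escape(cue) + r"\b", preceding)
                          for cue in NEGATION_CUES)
            (absent if negated else present).append(symptom)
    return present, absent


def extract_history(note):
    """Capture the phrase following 'history of' up to the next punctuation mark."""
    return re.findall(r"history of ([a-z ]+?)(?:[.;,\n]|$)", note.lower())


note = "Patient reports chest pain and mild cough. Denies fever or nausea. History of hypertension."
print(extract_symptoms(note))  # (['chest pain', 'cough'], ['fever', 'nausea'])
print(extract_history(note))   # ['hypertension']
```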

7.3 Education Sector

The application of NLP in education is also growing, with uses such as automatic grading systems and intelligent tutoring tools. By analyzing students' writing, NLP technologies can provide real-time feedback, helping students improve their writing skills.

Conclusion

Natural Language Processing is the bridge between humans and machines. With the development of technology, NLP will play an increasingly important role in more fields. Understanding the basic principles and applications of NLP can help us better understand its potential and challenges, preparing us for a smarter future.