Definition of AI

AI, or artificial intelligence, is the branch of computer science dedicated to studying and developing theories, methods, technologies, and application systems that imitate, extend, and enhance human intelligence. The term also refers to the computer programs this field produces, which simulate human thinking processes to carry out tasks that usually require human intelligence. Such tasks include learning, reasoning, problem solving, knowledge representation, planning, natural language processing, pattern and visual recognition, perception, creativity, and autonomous action.

Working principle:

1. Data collection:

AI systems first need a large amount of data as the basis for learning. These data can be in the form of text, images, audio, video, etc., depending on the application scenario of AI, and come from channels such as the Internet, sensors, user input, and databases.

2. Data preprocessing:

The collected raw data needs to be cleaned and organized: irrelevant information (noise) is removed, missing values are filled in, and data formats are normalized or standardized, so that useful features can be extracted for the algorithm to process.
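The two preprocessing steps named above, filling missing values and standardizing, can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function names and the sample readings are assumptions made for the example, and mean imputation is only one of several possible ways to fill gaps.

```python
from statistics import mean, pstdev

def fill_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values identical: nothing to scale.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

# Hypothetical sensor readings with one missing value.
raw_heights_cm = [170.0, None, 180.0, 160.0]
filled = fill_missing(raw_heights_cm)
scaled = standardize(filled)
print(filled)
print(scaled)
```

After standardization, features measured in different units end up on a comparable scale, which many learning algorithms handle better.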

3. Feature extraction:

Feature extraction is a key step: it selects the important variables in the data and derives useful features from the raw data. Features are representative properties of the data that help algorithms understand and analyze it, and they are essential for prediction and classification tasks. For example, in image recognition, edges, colors, and textures can be used as features.
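To make the image example concrete, the sketch below reduces a tiny grayscale image to two hand-crafted features: average brightness and average edge strength. The image, the function names, and the choice of these two features are assumptions for illustration; real systems use far richer features or learn them automatically.

```python
def horizontal_edges(image):
    """Absolute brightness difference between horizontally adjacent pixels."""
    return [
        [abs(row[x + 1] - row[x]) for x in range(len(row) - 1)]
        for row in image
    ]

def extract_features(image):
    """Summarize an image as mean brightness and mean edge strength."""
    pixels = [p for row in image for p in row]
    edges = [e for row in horizontal_edges(image) for e in row]
    return {
        "mean_brightness": sum(pixels) / len(pixels),
        "mean_edge_strength": sum(edges) / len(edges),
    }

# Hypothetical 3x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
features = extract_features(image)
print(features)
```

The sharp vertical boundary in the middle of the image is what drives the edge feature above zero; a uniformly gray image would score zero on it.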

4. Algorithm and model construction and training:

Depending on the nature of the task (such as classification, regression, or clustering), a suitable machine learning algorithm or neural network architecture is selected. The AI system then learns from the data through the chosen algorithm and model.

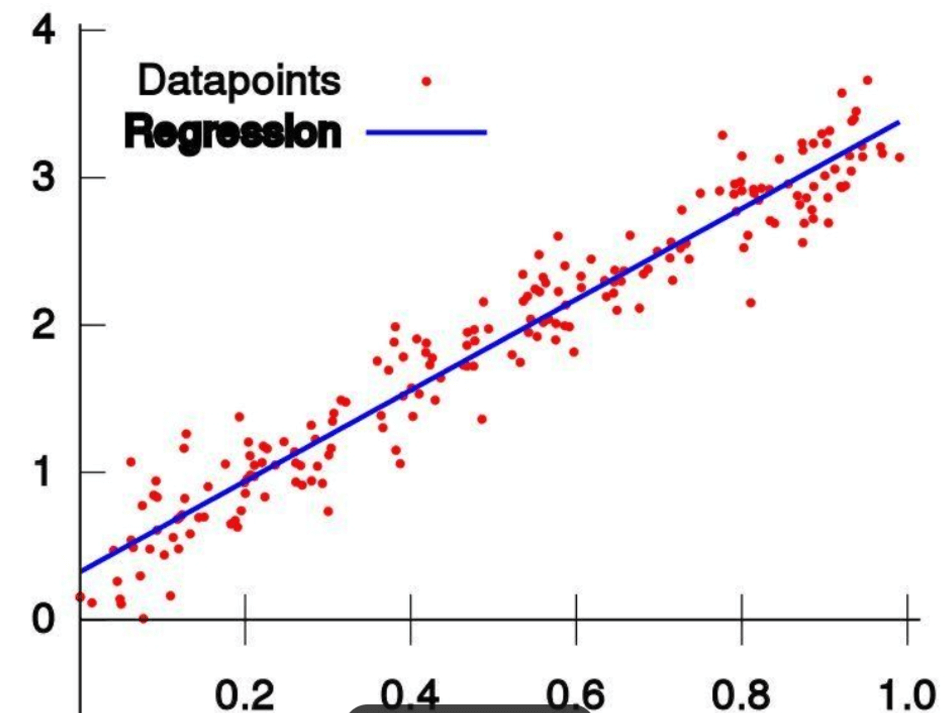

The core of AI is to build models through algorithms and train them with data. Common AI techniques such as machine learning and deep learning adjust the parameters within the model to minimize the difference between the model's predictions and the actual results, as measured by a loss function; this process is called optimization. In supervised learning, the model learns the mapping between input data and expected output, while unsupervised learning looks for structures or patterns in the data. Training usually requires a lot of computing resources and time.

1) Machine learning:

Machine learning uses statistical methods to let computers learn from data and make predictions or decisions without being explicitly programmed for each case. Supervised learning is a particularly important form of it. In supervised learning, the model learns from labeled training data, in which each training sample has an associated output label. By comparing the model's predicted output with the actual label, a loss can be calculated, and the model parameters can be adjusted through optimization algorithms (such as gradient descent) to reduce this loss.
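The loop of predicting, measuring the loss, and adjusting parameters can be sketched with the simplest possible model, a straight line fitted by gradient descent on mean squared error. The data, learning rate, and epoch count below are assumptions chosen so the example converges; they are not recommendations.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every labeled sample.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Labeled training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))
```

The learned `w` and `b` should end up close to 2 and 1, the values used to generate the labels.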

2) Deep learning:

Deep learning is a specialized machine learning method that uses multi-layer neural networks to learn complex patterns in data. These networks are trained with large amounts of data and computing resources, and are particularly good at processing high-dimensional, complex data in tasks such as image and speech recognition.

5. Model evaluation:

After training is completed, an independent test set or validation set that was not used in training is used to evaluate the model's performance. Common evaluation metrics include accuracy, recall, and F1 score. The aim is to confirm that the model generalizes well, that is, performs well on new data.
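The metrics named above can be computed directly from the counts of correct and incorrect predictions. The sketch below handles the binary case only; the labels and predictions are made up for the example, and precision is included because the F1 score is defined from precision and recall.

```python
def evaluate_binary(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical held-out labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = evaluate_binary(y_true, y_pred)
print(metrics)
```

Accuracy alone can be misleading when one class is rare, which is why recall and F1 are usually reported alongside it.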

6. Model optimization and adjustment:

If the evaluation shows that the model performs poorly, adjust the model architecture, algorithm, or training parameters, try different hyperparameters, or obtain more data. Then repeat the training and evaluation process until satisfactory performance is achieved.
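One common form of this adjust-and-repeat cycle is a small search over a hyperparameter, keeping the value that scores best on validation data. The sketch below tries three learning rates for a straight-line model; the candidate values, the data, and the fixed budget of 100 epochs are all assumptions for illustration.

```python
def train(xs, ys, learning_rate, epochs=100):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        w -= learning_rate * 2 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * 2 * sum(errors) / n
    return w, b

def mse(model, xs, ys):
    """Mean squared error of a (w, b) model on the given data."""
    w, b = model
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Training and validation data, both generated from y = 2x + 1.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
val_x, val_y = [4.0, 5.0], [9.0, 11.0]

# Train once per candidate and record the validation loss.
results = {}
for lr in (0.001, 0.01, 0.05):
    model = train(train_x, train_y, learning_rate=lr)
    results[lr] = mse(model, val_x, val_y)

best_lr = min(results, key=results.get)
print(best_lr, results[best_lr])
```

Selecting on validation data rather than training data matters here: the goal is the setting that generalizes best, not the one that fits the training set most closely.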

7. Deployment and Application:

Finally, the optimized model is deployed to real application scenarios, such as autonomous driving, medical diagnosis, customer service chatbots, and personalized recommendation systems, where it processes new, unseen data in real time and makes decisions or predictions.

For example, an image recognition model can predict the category of objects in new pictures; a natural language processing model can understand and generate human language.

In some application scenarios, AI also uses reinforcement learning: the system interacts with its environment, tries different actions, and learns the best strategy from the feedback it receives (rewards or penalties), gradually improving its performance.

8. Summary:

The working principle of AI is driven by data, supported by algorithms, and dependent on the efficient use of computing resources. It revolves around simulating and extending human intelligence, so that computer systems can automatically perform cognitive tasks such as learning, reasoning, perception, understanding, communication, and decision-making. Its goal is to match or even surpass human performance in specific tasks.

Core Mechanism:

The core mechanisms of AI are the techniques through which computer systems simulate and extend human intelligence. The main ones are described in turn in this section.

1. Machine Learning:

This is a branch of AI that enables computer systems to automatically learn from data and improve their performance without explicit programming. Machine learning includes supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning. Through these methods, AI can generalize patterns from examples and apply them to new situations. For example, by showing a computer a large number of pictures of cats and dogs and telling it which are cats and which are dogs, the computer can automatically learn how to distinguish between cats and dogs by analyzing the patterns and features in these data.

Classification:

1) Supervised learning:

Using labeled training data sets, the computer learns the mapping between one or more inputs and the outputs, so that it can make predictions for previously unseen data.

2) Unsupervised learning:

Without being given labeled data, the computer automatically discovers latent patterns and structures in the data, for example through clustering and dimensionality reduction.

3) Semi-supervised learning:

This combines supervised and unsupervised learning: the computer learns from a small amount of labeled data while also predicting labels for, and learning from, the unlabeled data.

4) Reinforcement learning:

Through interaction with the environment, the computer autonomously learns how to complete a task so as to obtain the maximum reward.
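As a contrast to learning from labels, the sketch below shows clustering, a typical unsupervised task, using a one-dimensional version of k-means. The data points, the starting centroids, and the fixed iteration count are assumptions for the example; practical implementations work in many dimensions and choose starting points more carefully.

```python
def k_means_1d(points, centroids, iterations=10):
    """Group numbers into clusters around the nearest centroid."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Unlabeled data with two visible groups.
points = [1.0, 1.5, 2.0, 8.0, 8.5, 9.0]
centroids, clusters = k_means_1d(points, centroids=[0.0, 10.0])
print(centroids)
print(clusters)
```

No labels are supplied at any point; the two groups emerge purely from the distances between the values.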

2. Deep Learning:

As a special form of machine learning, deep learning uses deep (multi-layer) artificial neural networks, loosely modeled on the neurons of the human brain, to learn high-level abstract representations of data. Each layer can learn different features of the data, enabling the model to handle more complex pattern recognition tasks, such as image recognition, speech recognition, and natural language processing. The core training algorithm of deep learning is "back propagation", which adjusts the connection weights of the network by propagating error signals backward from the output, improving the accuracy and performance of the model. By combining mathematical models, statistics, and optimization algorithms, computers can learn from data to make intelligent decisions and predictions.

As the basis of deep learning, neural networks are network structures that simulate the connections between neurons in the human brain. They are composed of many artificial neurons that pass information to one another through weighted connections. When input data passes through the network, each neuron computes a weighted sum of its inputs and applies a nonlinear activation function to produce its output. Through layer-by-layer transmission and processing across many neurons, neural networks can automatically extract and learn features in data, thereby solving complex problems.
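The layer-by-layer computation described above can be sketched as a forward pass through a tiny two-layer network. Note the assumptions: the weights below are picked by hand so that the network computes XOR, whereas in practice they would be learned by back propagation, and the sigmoid activation is just one common choice.

```python
import math

def sigmoid(z):
    """Nonlinear activation that squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """Each neuron: weighted sum of inputs plus bias, then activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hand-picked (not learned) weights for illustration.
layers = [
    # Hidden layer: an OR-like neuron and a NAND-like neuron.
    ([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]),
    # Output layer: an AND-like neuron over the two hidden outputs.
    ([[20.0, 20.0]], [-30.0]),
]

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    output = forward([a, b], layers)[0]
    print(a, b, round(output))
```

A single layer cannot represent XOR; the hidden layer is what makes it possible, which is a small-scale view of why depth helps with complex patterns.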

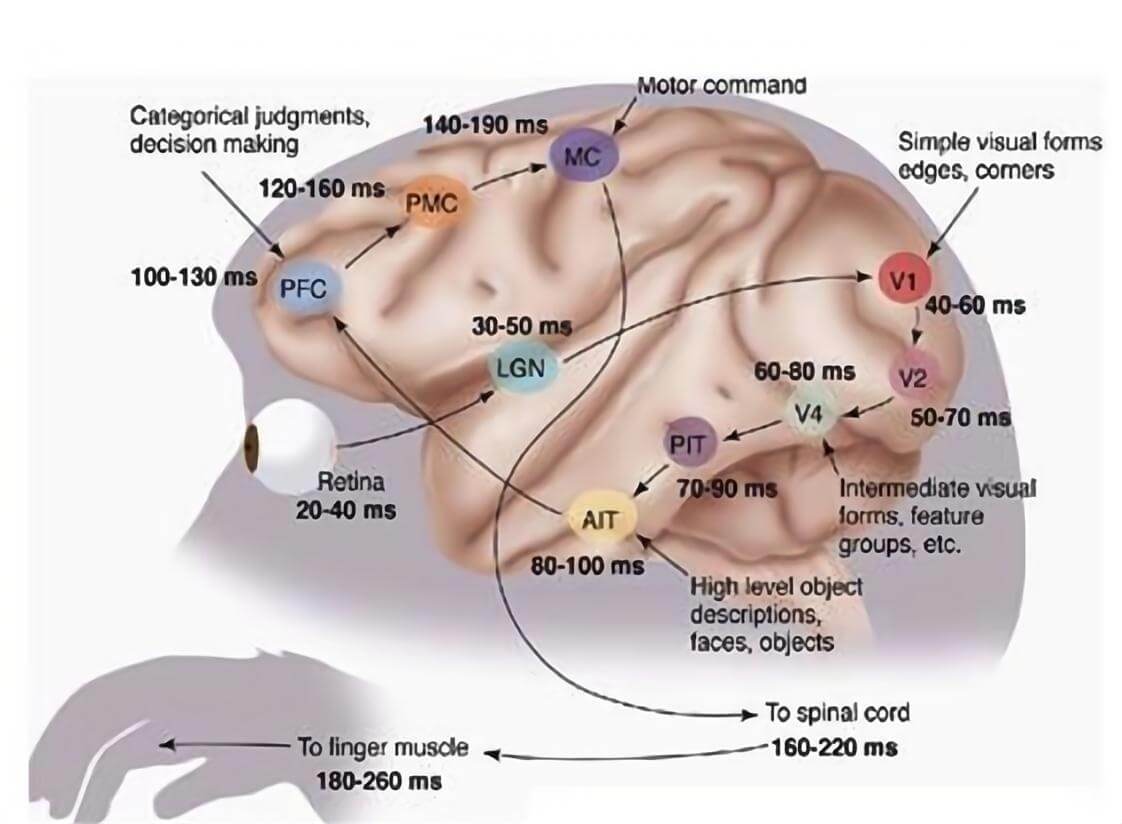

3. Computer Vision (CV):

Computer vision enables computers to understand and parse visual information, including images and videos. Through image processing, pattern recognition, and machine learning techniques, AI systems can identify objects, scenes, and activities, and even understand the semantics of visual content. Typical tasks include image recognition, object detection, and face recognition.

For example: When you upload a photo to social media, the computer can automatically identify the people and objects in the photo. This is achieved through computer vision technology, by analyzing the pixels and features in the image.

4. Natural Language Processing (NLP):

NLP involves the ability of computers to understand, interpret, and generate human language (written or spoken). This includes sentiment analysis, semantic understanding, machine translation, dialogue systems, speech recognition, and text generation, enabling AI to communicate effectively with humans in natural language.
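For the language tasks listed under Natural Language Processing, a first step is usually to turn text into numbers that a model can process. The sketch below shows one of the simplest such representations, a bag of words that counts how often each vocabulary word appears. The sentences, the regular expression used for tokenizing, and the function names are assumptions for illustration; modern systems use far more sophisticated representations.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def bag_of_words(text, vocabulary):
    """Count how many times each vocabulary word occurs in the text."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]

# Hypothetical example sentences.
sentences = [
    "The movie was great, really great!",
    "The movie was boring.",
]

# The vocabulary is every distinct word seen, in alphabetical order.
vocabulary = sorted({word for s in sentences for word in tokenize(s)})
vectors = [bag_of_words(s, vocabulary) for s in sentences]

print(vocabulary)
for vector in vectors:
    print(vector)
```

Vectors like these could then be fed to a classifier, for example to separate positive from negative reviews in a sentiment analysis task.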

5. Reinforcement Learning:

Through trial-and-error learning, AI agents take actions in a specific environment with the goal of maximizing cumulative rewards. This learning method mirrors how organisms learn optimal behaviors in an environment and is suitable for complex decision-making and automatic control scenarios.
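Trial-and-error learning can be sketched with tabular Q-learning, one well-known reinforcement learning algorithm, on a toy environment. Everything about the environment is an assumption for the example: a corridor of five cells where the agent starts at the left end and receives a reward of 1 for reaching the right end. The learning rate, discount factor, and exploration rate are likewise illustrative.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # action 0 moves left, action 1 moves right

def step(state, action_index):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def choose_action(q_row, epsilon, rng):
    """Mostly exploit the best-known action, sometimes explore at random."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    best = max(q_row)
    return rng.choice([i for i, q in enumerate(q_row) if q == best])

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values by interacting with the corridor."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _step in range(100):  # cap the episode length
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = q_learning()
# Greedy policy for each non-goal cell (1 means "move right").
policy = [row.index(max(row)) for row in q_table[:-1]]
print(policy)
```

The agent is never told that moving right is correct; it discovers this from the reward signal alone, and the learned values decay with distance from the goal because of the discount factor.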

6. Decision trees, rule engines, and expert systems:

Although more traditional, these methods still play a role in some AI applications by building a logical framework to simulate the decision-making process of human experts.
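A rule engine in its simplest form is an ordered list of conditions and conclusions, evaluated until one matches. The sketch below routes support tickets this way; the rules, field names, and thresholds are entirely hypothetical and stand in for the knowledge a human expert would supply.

```python
# Each rule pairs a condition on the facts with a conclusion.
# Rules are checked in order, so earlier rules take priority.
RULES = [
    (lambda f: f["outage_reported"],
     "escalate to on-call engineer"),
    (lambda f: f["customer_tier"] == "premium" and f["waiting_minutes"] > 30,
     "route to senior support"),
    (lambda f: f["waiting_minutes"] > 120,
     "send apology and prioritize"),
]

def decide(facts, rules=RULES, default="handle in normal queue"):
    """Return the conclusion of the first rule whose condition holds."""
    for condition, conclusion in rules:
        if condition(facts):
            return conclusion
    return default

# Hypothetical ticket described as a dictionary of facts.
ticket = {
    "outage_reported": False,
    "customer_tier": "premium",
    "waiting_minutes": 45,
}
decision = decide(ticket)
print(decision)
```

Unlike a learned model, every decision here can be traced to a specific rule, which is one reason such systems remain in use where explanations are required.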

7. Meta-learning, transfer learning, and adaptive learning:

These advanced learning mechanisms enable AI to apply learned knowledge across tasks and domains, reduce dependence on large amounts of labeled data, and improve learning efficiency and generalization capabilities.