1 What is a UI Framework?

Earlier, we discussed that Flutter is divided into three layers: the framework layer, the engine layer, and the embedding layer, and noted that developers primarily interact with the framework layer. This chapter delves into the principles of the Flutter framework layer. Before that, let's explore what a UI framework is in a broader sense and what problems it solves.

The term "UI framework" refers to a framework built on top of a platform that enables rapid development of graphical user interfaces (GUIs). Here, the platform mainly means an operating system or a browser. Generally, a platform provides only basic graphics APIs, such as drawing lines and geometric shapes, and on most platforms these APIs are encapsulated in a Canvas object for centralized management. Building a user interface directly on Canvas, without the encapsulation of a UI framework, would be tedious and inefficient. In simple terms, the main problem a UI framework solves is how to build a UI efficiently on top of the basic graphics APIs (Canvas).

We have noted that the implementation principles of UI frameworks across platforms are fundamentally similar. This means that whether on Android or iOS, the process of rendering a user interface on the screen is analogous. Thus, before introducing the Flutter UI framework, let's first look at the basic principles of platform graphics processing. This will help readers gain a clear understanding of the underlying UI logic of operating systems.

2 Basic Principles of Hardware Rendering

When discussing principles, we must start with the basic principle of displaying images on a screen. We know that a monitor is made up of physical display units, which we can refer to as physical pixels. Each pixel can emit various colors, and the principle of display is to show different colors on different physical pixels to ultimately form a complete image.

The total number of colors a pixel can emit is a key indicator of a monitor's quality. For example, a screen described as displaying 16 million colors is one whose pixels can each emit roughly 16 million different colors. Colors on the monitor are produced by mixing the three RGB primaries, and 16 million corresponds to 2 to the power of 24 (16,777,216): each primary (R, G, B) is given 8 bits, that is, 256 levels. The greater the color depth, the richer and more vibrant the displayed colors.
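The arithmetic behind the "16 million colors" figure can be checked with a short sketch. It assumes 8 bits for each of the three RGB channels, as described above; the helper name `pack_rgb` is made up for illustration and is not part of any platform API.

```python
# Illustrative arithmetic only: 8 bits per RGB channel, as assumed in the text.
BITS_PER_CHANNEL = 8
CHANNELS = 3  # R, G, B

levels_per_channel = 2 ** BITS_PER_CHANNEL      # 256 intensity levels per channel
total_colors = levels_per_channel ** CHANNELS   # 256^3 == 2^24


def pack_rgb(r: int, g: int, b: int) -> int:
    """Pack three 8-bit channel values into one 24-bit integer (0xRRGGBB)."""
    for value in (r, g, b):
        if not 0 <= value < levels_per_channel:
            raise ValueError("channel value out of range")
    return (r << 16) | (g << 8) | b


print(total_colors)                # 16777216
print(hex(pack_rgb(255, 128, 0)))  # 0xff8000
```

Every distinct 24-bit value produced by `pack_rgb` corresponds to one color a pixel can show, which is where the count of 16,777,216 comes from.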

To update the display, a monitor refreshes at a fixed frequency, fetching data from the GPU each time. For instance, a mobile phone screen might have a refresh rate of 60Hz. When the monitor has finished drawing one frame and is ready for the next, it issues a vertical synchronization signal (VSync), so a 60Hz screen emits 60 such signals per second. This signal is primarily used to synchronize the CPU, GPU, and monitor. Generally, in a computer system, the three cooperate as follows: the CPU computes the display content and submits it to the GPU, the GPU renders it and places the result in a frame buffer, and the video controller reads frame data from the buffer on each synchronization signal and sends it to the monitor.
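To make the timing concrete, the following sketch computes how much time the CPU and GPU have between two VSync signals and counts how many signals pass without a new frame when rendering takes too long. It is a deliberately simplified model (each frame starts on a VSync and nothing is pipelined), and the function names are hypothetical.

```python
import math


def frame_budget_ms(refresh_rate_hz: float) -> float:
    """Time between two consecutive VSync signals, in milliseconds."""
    return 1000.0 / refresh_rate_hz


def count_missed_vsyncs(render_times_ms, refresh_rate_hz: float = 60.0) -> int:
    """Count VSync signals that arrive while a frame is still being rendered.

    Simplified model: every frame starts exactly on a VSync signal, so a frame
    that needs more than one interval makes the screen repeat the old frame.
    """
    budget = frame_budget_ms(refresh_rate_hz)
    missed = 0
    for render_time in render_times_ms:
        intervals_used = max(1, math.ceil(render_time / budget))
        missed += intervals_used - 1
    return missed


print(round(frame_budget_ms(60), 2))              # 16.67
print(count_missed_vsyncs([10, 16, 20, 40], 60))  # 3
```

On a 60Hz screen the budget is about 16.67 ms per frame. In the sample above, the 20 ms frame misses one signal and the 40 ms frame misses two, which the user would perceive as dropped frames.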

The tasks of the CPU and GPU are distinct; the CPU primarily handles basic mathematical and logical computations, while the GPU executes complex mathematical operations related to graphics, such as matrix transformations and geometric calculations. The main function of the GPU is to determine the color values of each pixel that will be sent to the monitor.
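As an illustration of the kind of matrix transformation mentioned above, the sketch below rotates and then translates a 2D point using 3x3 homogeneous matrices. A GPU performs this sort of calculation in parallel for very many vertices; the plain-Python helpers here are only meant to show the math and are not how any real graphics API is invoked.

```python
import math


def mat_mul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation(theta: float):
    """Counter-clockwise rotation by theta radians around the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def translation(tx: float, ty: float):
    """Translation by (tx, ty) in homogeneous coordinates."""
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def apply(m, point):
    """Apply a 3x3 homogeneous transform to a 2D point (x, y)."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Rotate by 90 degrees first, then move by (2, 3): T * R.
transform = mat_mul(translation(2.0, 3.0), rotation(math.pi / 2))
x, y = apply(transform, (1.0, 0.0))
print(round(x, 6), round(y, 6))  # 2.0 4.0
```

Composing the two matrices once and then applying the result to each point is the same trick that makes it cheap to transform an entire shape.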

3 Encapsulation of Operating System Drawing APIs

The final graphical calculations and rendering are performed by hardware, and the instructions that operate the hardware directly are typically shielded by the operating system, so application developers usually do not deal with hardware directly. Instead, the operating system provides encapsulated APIs on top of the hardware layer for application developers to use. However, invoking these APIs directly is still complex and inefficient, because they tend to be low-level and require an understanding of many details.

For this reason, nearly all programming languages used to develop GUI applications wrap the operating system's native APIs in a programming framework and model that defines a simplified set of rules for building GUI applications. This layer of abstraction is what we refer to as the "UI framework." For instance, the Android SDK encapsulates the Android operating system APIs and provides a UI framework built on "an XML UI description file + Java/Kotlin code that manipulates the view tree" (much like DOM manipulation on the web). Similarly, iOS's UIKit abstracts views in a comparable manner. Both abstract the operating system APIs into fundamental objects (such as a Canvas for 2D graphics) and then define a set of UI rules, such as the UI tree structure and the principle that UI operations happen on a single thread.
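The idea of a UI tree layered over a Canvas can be sketched in a few lines. Everything here is hypothetical: `RecordingCanvas` stands in for a platform Canvas and merely records the primitive calls, while `Box` and `Label` play the role of framework-level nodes. Real frameworks such as the Android SDK or UIKit are far more elaborate.

```python
class RecordingCanvas:
    """Stand-in for a platform Canvas: it only records primitive draw calls."""

    def __init__(self):
        self.commands = []

    def draw_rect(self, x, y, width, height):
        self.commands.append(("rect", x, y, width, height))

    def draw_text(self, x, y, text):
        self.commands.append(("text", x, y, text))


class Node:
    """A node in the UI tree, positioned relative to its parent."""

    def __init__(self, x=0, y=0, children=()):
        self.x, self.y = x, y
        self.children = list(children)

    def paint(self, canvas, origin_x=0, origin_y=0):
        # Convert the parent-relative position into absolute coordinates,
        # paint this node, then paint the children on top of it.
        abs_x, abs_y = origin_x + self.x, origin_y + self.y
        self.paint_self(canvas, abs_x, abs_y)
        for child in self.children:
            child.paint(canvas, abs_x, abs_y)

    def paint_self(self, canvas, abs_x, abs_y):
        pass  # Plain nodes draw nothing themselves.


class Box(Node):
    def __init__(self, x, y, width, height, children=()):
        super().__init__(x, y, children)
        self.width, self.height = width, height

    def paint_self(self, canvas, abs_x, abs_y):
        canvas.draw_rect(abs_x, abs_y, self.width, self.height)


class Label(Node):
    def __init__(self, x, y, text):
        super().__init__(x, y)
        self.text = text

    def paint_self(self, canvas, abs_x, abs_y):
        canvas.draw_text(abs_x, abs_y, self.text)


tree = Box(10, 10, 200, 80, children=[
    Label(8, 8, "Title"),
    Box(8, 40, 60, 24, children=[Label(4, 4, "OK")]),
])
canvas = RecordingCanvas()
tree.paint(canvas)
for command in canvas.commands:
    print(command)
```

The application code only describes a tree of boxes and labels. The traversal in `paint` is what turns that description into low-level Canvas calls, which is the work a UI framework takes off the developer's hands.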

4 Flutter UI Framework

We can see that the Android SDK and iOS's UIKit have similar responsibilities; they differ only in programming language and underlying system. Is it possible, then, to build a UI framework that lets us develop in a single programming language while presenting one consistent interface on top of the different operating system APIs? If so, we could write cross-platform applications from the same codebase. This is precisely the principle behind Flutter. It provides a Dart API and, at the lower level, relies on a cross-platform rendering library such as OpenGL (which internally calls the operating system APIs), so that a single codebase can span multiple platforms. Because the Dart API ultimately calls the operating system APIs as well, its performance is close to native.

There are two key points to note:

First, although Dart calls OpenGL, which in turn calls the operating system APIs, this is still native rendering. OpenGL is merely a wrapper around the operating system APIs; unlike WebView rendering, it requires no JavaScript runtime or CSS renderer, so it avoids the performance loss those layers introduce.

Second, early versions of Flutter called cross-platform libraries such as OpenGL at the lower level. Apple, however, provides its own graphics library, Metal, which offers better rendering performance on iOS, so Flutter later came to prefer Metal on iOS and falls back to OpenGL only when Metal is unavailable. Application developers need not concern themselves with which library is used; it is enough to know that Flutter calls native drawing interfaces, which is what keeps performance high.

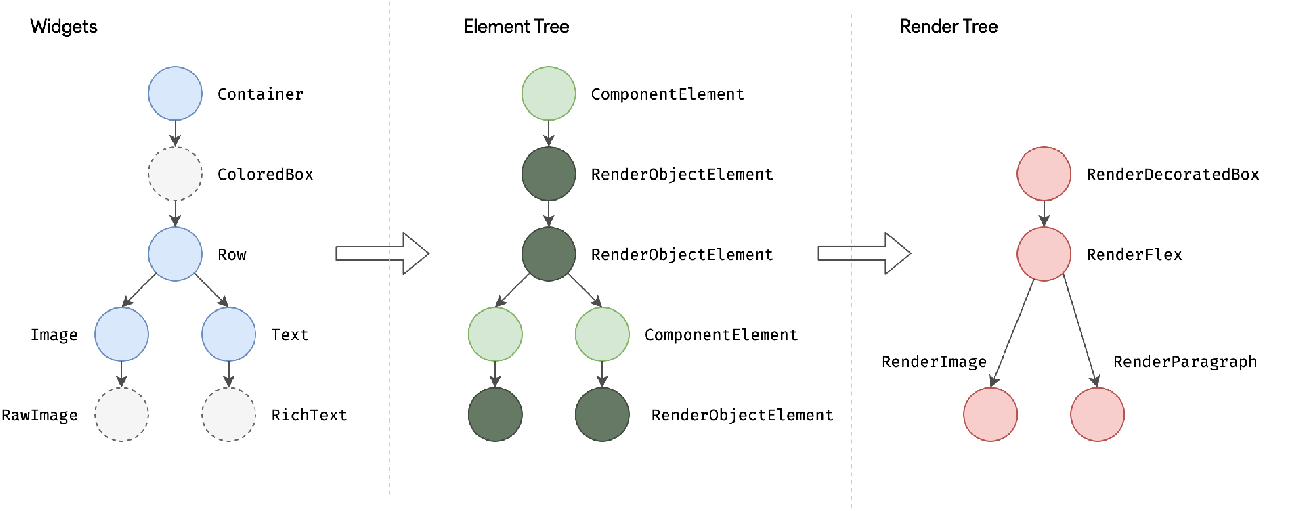

Having covered how the Flutter UI framework interacts with the operating system, we now turn to the development model it defines for application developers. Previous chapters have made this model familiar; it can be summarized as composition and reactivity. To build a user interface, we compose it from other widgets; in Flutter, everything is a widget. When the UI needs to change, we do not modify UI elements directly (as one would manipulate the DOM); instead, we update the state and let the Flutter UI framework rebuild the UI from the new state.
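The composition-and-reactivity model can be illustrated with a toy example. This is not Flutter's API (Flutter widgets are written in Dart); the `Text`, `Column`, and `CounterApp` classes and the `set_state` method are hypothetical names, chosen only to show a UI description being rebuilt from state rather than mutated in place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Text:
    """Immutable description of a piece of text."""
    value: str


@dataclass(frozen=True)
class Column:
    """Immutable description of a vertical group, composed of other widgets."""
    children: tuple


class CounterApp:
    """Toy framework object: owns the state and rebuilds the UI description."""

    def __init__(self):
        self.count = 0
        self.build_calls = 0
        self.current_ui = self._rebuild()

    def build(self):
        # The UI is a pure function of the current state.
        return Column(children=(
            Text("Counter"),
            Text(f"Clicked {self.count} times"),
        ))

    def set_state(self, update):
        # Apply the state change, then let the "framework" rebuild the UI.
        update()
        self.current_ui = self._rebuild()

    def _rebuild(self):
        self.build_calls += 1
        return self.build()


app = CounterApp()


def increment():
    app.count += 1


app.set_state(increment)
print(app.current_ui.children[1].value)  # Clicked 1 times
```

The caller never edits the existing `Text` object (it is frozen, so it cannot). It changes the state, and a fresh description is produced from it. How a framework turns successive descriptions into efficient screen updates is a separate question.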

At this point, readers may notice that "Flutter UI framework" and "Flutter Framework" mean much the same thing, and indeed they do. We use the term "UI framework" to keep the concept consistent with other platforms. Readers need not be concerned about the terminology itself.

In the following subsections, we will first detail the concepts of Element and RenderObject, which are the cornerstones of the Flutter UI framework, and then analyze the Flutter application startup and update processes.