80.1 Principles of HTTP Chunked Download

This section will demonstrate the specific usage of Dio through an example of "HTTP chunked downloading."

HTTP defines range requests for transferring only part of a resource (this section calls this "chunked transfer"; it is unrelated to the "Transfer-Encoding: chunked" header), but whether they are supported depends on the server implementation. We can send a request with a "Range" header to verify whether the server supports chunked transfer. For example, we can check with the curl command:

curl -H "Range: bytes=0-10" http://download.dcloud.net.cn/HBuilder.9.0.2.macosx_64.dmg -v

Request Headers:

    GET /HBuilder.9.0.2.macosx_64.dmg HTTP/1.1
    Host: download.dcloud.net.cn
    User-Agent: curl/7.54.0
    Accept: */*
    Range: bytes=0-10

Response Headers:

    HTTP/1.1 206 Partial Content
    Content-Type: application/octet-stream
    Content-Length: 11
    Connection: keep-alive
    Date: Thu, 21 Feb 2019 06:25:15 GMT
    Content-Range: bytes 0-10/233295878

Adding "Range: bytes=0-10" to the request tells the server that we only want bytes 0 through 10 of the file (10 inclusive, 11 bytes in total). If the server supports chunked transfer, the response status code is 206 (Partial Content) and the response includes a "Content-Range" header. If it does not, the server typically ignores the Range header and returns 200 with the entire file, and "Content-Range" is absent. In the response above, "Content-Range" reads:

Content-Range: bytes 0-10/233295878

Here, "0-10" indicates the returned block, and "233295878" represents the total length of the file in bytes, which is approximately 233MB.
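Reading these values in code comes down to parsing one header string. The following is a minimal sketch, assuming the header has the `bytes <start>-<end>/<total>` form shown above; `ContentRange` and `parseContentRange` are hypothetical helper names and are not part of Dio.

```dart
/// Parsed form of a `Content-Range: bytes <start>-<end>/<total>` header.
/// (Hypothetical helper type for illustration, not part of Dio.)
class ContentRange {
  final int start;
  final int end; // inclusive
  final int? total; // null when the server sends `*` for an unknown length

  const ContentRange(this.start, this.end, this.total);

  /// Number of bytes covered by the range (both ends are inclusive).
  int get length => end - start + 1;
}

/// Returns null when the header is missing or not in the expected form,
/// which a caller can treat as "chunked transfer not supported".
ContentRange? parseContentRange(String? header) {
  if (header == null) return null;
  final match =
      RegExp(r'^bytes (\d+)-(\d+)/(\d+|\*)$').firstMatch(header.trim());
  if (match == null) return null;
  final total = match.group(3) == '*' ? null : int.parse(match.group(3)!);
  return ContentRange(
    int.parse(match.group(1)!),
    int.parse(match.group(2)!),
    total,
  );
}
```

For the response above, `parseContentRange("bytes 0-10/233295878")` gives a start of 0, an end of 10, a length of 11, and a total of 233295878.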

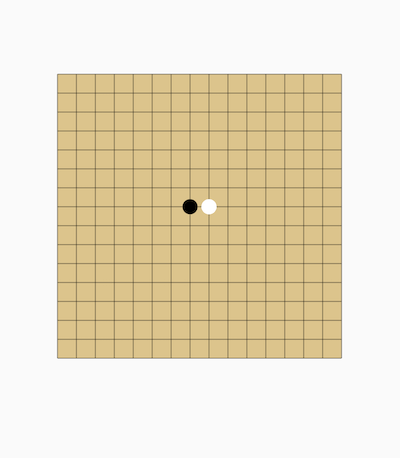

Based on this, we can design a simple file downloader that fetches several chunks concurrently, with the following approach:

1. Check whether chunked transfer is supported. If not, download the file directly; if it is, download the remaining content in chunks.

2. Save each chunk to its own temporary file, then merge the temporary files once all chunks are downloaded.

3. Delete the temporary files.
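The splitting itself is plain arithmetic and can be worked out without any network access. The following is a minimal sketch, assuming the total length is already known (for example from "Content-Range") and is greater than zero; `planChunks` and its parameter names are hypothetical and are not part of Dio.

```dart
/// Splits a file of [total] bytes into inclusive [start, end] byte ranges.
/// The first range has [firstChunkSize] bytes (the probe request); the rest
/// of the file is divided into at most [maxChunk] further ranges.
/// Assumes total > 0. Hypothetical helper for illustration only.
List<List<int>> planChunks(int total, int firstChunkSize, int maxChunk) {
  final ranges = <List<int>>[];
  final firstEnd = (firstChunkSize < total ? firstChunkSize : total) - 1;
  ranges.add([0, firstEnd]);

  final remaining = total - (firstEnd + 1);
  if (remaining <= 0) return ranges; // the first request covered everything

  // Start with chunks as large as the first one, then grow them if that
  // would need more than maxChunk requests.
  var count = (remaining / firstChunkSize).ceil();
  var chunkSize = firstChunkSize;
  if (count > maxChunk) {
    count = maxChunk;
    chunkSize = (remaining / maxChunk).ceil();
  }

  for (var i = 0; i < count; i++) {
    final start = firstChunkSize + i * chunkSize;
    if (start > total - 1) break; // nothing left to request
    var end = start + chunkSize - 1;
    if (end > total - 1) end = total - 1; // clamp the last range
    ranges.add([start, end]);
  }
  return ranges;
}
```

For a 1000-byte file, `planChunks(1000, 102, 3)` yields the ranges 0-101, 102-401, 402-701, and 702-999. Each pair maps directly to a header of the form `Range: bytes=<start>-<end>`.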

80.2 Implementation

Here’s the overall flow:

    // Check server support for chunked transfer with the first chunk request
    Response response = await downloadChunk(url, 0, firstChunkSize, 0);
    if (response.statusCode == 206) { // Supported
      // Parse the total file length to calculate the remaining length
      total = int.parse(
          response.headers.value(HttpHeaders.contentRangeHeader)!.split("/").last);
      int reserved = total -
          int.parse(response.headers.value(HttpHeaders.contentLengthHeader)!);
      // Total number of chunks (including the first chunk)
      int chunk = (reserved / firstChunkSize).ceil() + 1;
      if (chunk > 1) {
        int chunkSize = firstChunkSize;
        if (chunk > maxChunk + 1) {
          chunk = maxChunk + 1;
          chunkSize = (reserved / maxChunk).ceil();
        }
        var futures = <Future>[];
        // chunk - 1 chunks remain; looping up to maxChunk would request
        // ranges past the end of a small file
        for (int i = 0; i < chunk - 1; ++i) {
          int start = firstChunkSize + i * chunkSize;
          // Download the remaining file in chunks
          futures.add(downloadChunk(url, start, start + chunkSize, i + 1));
        }
        // Wait for all chunks to be downloaded
        await Future.wait(futures);
      }
      // Merge temporary files
      await mergeTempFiles(chunk);
    } else {
      // Not supported: the first request already downloaded the whole file
      await File(savePath + "temp0").rename(savePath);
    }

Next, we implement downloadChunk using Dio's download API:

    // start is the starting position of the current chunk and end is the
    // ending position (exclusive); no is the current chunk number
    Future<Response> downloadChunk(url, start, end, no) async {
      progress.add(0); // progress records the bytes received for each chunk
      --end; // The Range header takes an inclusive end position
      return dio.download(
        url,
        savePath + "temp$no", // Temporary files are named by chunk number for easy merging
        onReceiveProgress: createCallback(no), // Progress callback, implemented later
        options: Options(
          headers: {"range": "bytes=$start-$end"}, // Specify the requested range
        ),
      );
    }

Next, we implement mergeTempFiles:

    Future mergeTempFiles(chunk) async {
      File f = File(savePath + "temp0");
      IOSink ioSink = f.openWrite(mode: FileMode.writeOnlyAppend);
      // Merge temporary files
      for (int i = 1; i < chunk; ++i) {
        File _f = File(savePath + "temp$i");
        await ioSink.addStream(_f.openRead());
        await _f.delete(); // Delete temporary file
      }
      await ioSink.close();
      await f.rename(savePath); // Rename the merged file to its final name
    }

Now, let's look at the complete implementation:

    import 'dart:io';
    import 'package:dio/dio.dart';

    Future downloadWithChunks(
      url,
      savePath, {
      ProgressCallback? onReceiveProgress,
    }) async {
      const firstChunkSize = 102;
      const maxChunk = 3;

      int total = 0;
      var dio = Dio();
      var progress = <int>[];

      // Builds a per-chunk callback that reports the combined progress
      createCallback(no) {
        return (int received, _) {
          progress[no] = received;
          if (onReceiveProgress != null && total != 0) {
            onReceiveProgress(progress.reduce((a, b) => a + b), total);
          }
        };
      }

      Future<Response> downloadChunk(url, start, end, no) async {
        progress.add(0);
        --end; // The Range header takes an inclusive end position
        return dio.download(
          url,
          savePath + "temp$no",
          onReceiveProgress: createCallback(no),
          options: Options(
            headers: {"range": "bytes=$start-$end"},
          ),
        );
      }

      Future mergeTempFiles(chunk) async {
        File f = File(savePath + "temp0");
        IOSink ioSink = f.openWrite(mode: FileMode.writeOnlyAppend);
        for (int i = 1; i < chunk; ++i) {
          File _f = File(savePath + "temp$i");
          await ioSink.addStream(_f.openRead());
          await _f.delete();
        }
        await ioSink.close();
        await f.rename(savePath);
      }

      Response response = await downloadChunk(url, 0, firstChunkSize, 0);
      if (response.statusCode == 206) {
        total = int.parse(
            response.headers.value(HttpHeaders.contentRangeHeader)!.split("/").last);
        int reserved = total -
            int.parse(response.headers.value(HttpHeaders.contentLengthHeader)!);
        int chunk = (reserved / firstChunkSize).ceil() + 1;
        if (chunk > 1) {
          int chunkSize = firstChunkSize;
          if (chunk > maxChunk + 1) {
            chunk = maxChunk + 1;
            chunkSize = (reserved / maxChunk).ceil();
          }
          var futures = <Future>[];
          // chunk - 1 chunks remain; looping up to maxChunk would request
          // ranges past the end of a small file
          for (int i = 0; i < chunk - 1; ++i) {
            int start = firstChunkSize + i * chunkSize;
            futures.add(downloadChunk(url, start, start + chunkSize, i + 1));
          }
          await Future.wait(futures);
        }
        await mergeTempFiles(chunk);
      } else {
        // Not supported: the first request already downloaded the whole file
        await File(savePath + "temp0").rename(savePath);
      }
    }

Now you can perform chunked downloads:

    main() async {
      var url = "http://download.dcloud.net.cn/HBuilder.9.0.2.macosx_64.dmg";
      var savePath = "./example/HBuilder.9.0.2.macosx_64.dmg";
      await downloadWithChunks(url, savePath, onReceiveProgress: (received, total) {
        if (total != -1) {
          print("${(received / total * 100).floor()}%");
        }
      });
    }

80.3 Considerations

Does chunked downloading really improve download speed?

In practice, download speed is bounded mainly by the client's network bandwidth and the server's upload bandwidth. When every chunk comes from the same source, chunked downloading may bring little benefit: the server is the same, its upload speed is fixed, and the result still depends mainly on the network. Readers can compare chunked and non-chunked download speeds using the previous example. With multiple sources, each with limited bandwidth, chunked downloading may be faster, but this is not guaranteed. For instance, if three sources each offer 1 Gb/s but the client's network peaks at 800 Mb/s, the network is the bottleneck. Even when the client's bandwidth exceeds that of every source, a multi-source download is not necessarily faster than a single-source one. For example, suppose source A is three times as fast as source B and each downloads half of the file; readers can calculate the time for each scenario (A and B together versus A alone) to see which is faster.
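The comparison between sources can be made concrete with a small calculation. The following is a rough sketch, assuming the sources download their shares in parallel and that the client's own bandwidth is not the bottleneck; `parallelTime` is a hypothetical helper, and the size and speeds in the note below are made-up numbers chosen only to match the "three times as fast" ratio.

```dart
/// Time to finish when each source downloads its own share in parallel:
/// the slowest share decides the total. [shares] are fractions of the file,
/// and [speeds] use the same size unit as [size], per second.
/// Hypothetical helper for illustration only.
double parallelTime(double size, List<double> shares, List<double> speeds) {
  var slowest = 0.0;
  for (var i = 0; i < shares.length; i++) {
    final t = size * shares[i] / speeds[i];
    if (t > slowest) slowest = t;
  }
  return slowest;
}
```

With a 600 MB file, source A at 3 MB/s, and source B at 1 MB/s, `parallelTime(600, [0.5, 0.5], [3, 1])` is 300 seconds, while A alone, `parallelTime(600, [1.0], [3])`, takes 200 seconds. An equal split is slower than the fast source alone because B's half dominates. Splitting in proportion to speed, `parallelTime(600, [0.75, 0.25], [3, 1])`, brings the time down to 150 seconds.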

The ultimate speed of chunked downloading is influenced by network bandwidth, source upload speed, chunk size, and the number of chunks, making it hard to guarantee optimal speed. In actual development, readers can test and compare before deciding whether to use chunked downloading.

What practical uses does chunked downloading have?

One notable use case for chunked downloading is resuming interrupted downloads. Files can be split into several chunks, and a download status file can be maintained to record the state of each chunk. This way, even if the network is interrupted, the download can resume from the last known state. Readers can try implementing this, keeping in mind some specific details, such as what chunk size is appropriate, how to handle partially downloaded chunks, and whether to maintain a task queue.
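As a starting point for such an exercise, the per-chunk state and its status file could look like the following. This is only a sketch under stated assumptions: the `ChunkState` class, its JSON layout, and the `encodeState`/`decodeState` helpers are hypothetical, and writing the string to disk and issuing the requests are left out.

```dart
import 'dart:convert';

/// State of one chunk in a resumable download (hypothetical format).
class ChunkState {
  final int start; // first byte of the chunk
  final int end; // last byte of the chunk, inclusive
  int received; // bytes already written to the chunk's temporary file

  ChunkState(this.start, this.end, [this.received = 0]);

  /// True once every byte of the chunk has been received.
  bool get done => received >= end - start + 1;

  /// Range header value that fetches only the missing part of this chunk.
  String get resumeRange => 'bytes=${start + received}-$end';

  Map<String, dynamic> toJson() =>
      {'start': start, 'end': end, 'received': received};

  factory ChunkState.fromJson(Map<String, dynamic> json) => ChunkState(
      json['start'] as int, json['end'] as int, json['received'] as int);
}

/// Serializes all chunk states so they can be written to a status file.
String encodeState(List<ChunkState> chunks) =>
    jsonEncode(chunks.map((c) => c.toJson()).toList());

/// Restores chunk states from the contents of a status file.
List<ChunkState> decodeState(String source) => (jsonDecode(source) as List)
    .map((e) => ChunkState.fromJson(e as Map<String, dynamic>))
    .toList();
```

After a restart, chunks whose `done` is true can be skipped, and each unfinished chunk can be requested again with its `resumeRange`, appending the new bytes to the existing temporary file. This handles partially downloaded chunks only if `received` is kept in step with what was actually written to disk.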